I expect most answers will relate to constructs of Endpoint Groups (EPGs), contracts and filters. These concepts are the foundations of ACI. But as with any infrastructure capability, designs and customers’ requirements are constantly evolving, often leading to new segmentation challenges. That is why I would like to introduce a relatively recent, powerful option called Endpoint Security Groups (ESGs). Although ESGs were introduced in Cisco ACI a while back (version 5.0(1), released in May 2020), there is still ample opportunity to bring this functionality to a broader audience.

For those who have not explored the topic yet, ESGs offer an alternative way of handling segmentation, with the added flexibility of decoupling it from the forwarding constructs that EPGs bundle together with security. In other words, ESGs handle segmentation separately from forwarding, which allows more flexibility in each.

EPG and ESG – Highlights and Differences

The easiest way to manage endpoints with common security requirements is to put them into groups and control communication between them. In ACI, these groups have traditionally been represented by EPGs. Contracts attached to EPGs control communication and other policies between groups with different postures. Although an EPG primarily provides network security, it must be tied to a single bridge domain (BD), because an EPG defines both forwarding policy and security segmentation at the same time. This direct relationship between a BD and an EPG prevents an EPG from spanning more than one BD. ESGs lift this design constraint. With ESGs, networking (i.e., forwarding policy) happens at the EPG/BD level, and security enforcement moves to the ESG level.

Operationally, the ESG concept is similar to the original EPG approach, and more straightforward. Just as with EPGs, communication is allowed among all endpoints within the same group, but in the case of ESGs, this is independent of the subnet or BD they are associated with. For communication between different ESGs, we need contracts. That sounds familiar, doesn’t it? ESGs use the same contract constructs we have been using in ACI since its inception.

So, what are the benefits of ESGs then? In a nutshell, where EPGs are bound to a single BD, ESGs allow you to define a security policy that spans multiple BDs. That is, you can group and apply policy to any number of endpoints across any number of BDs under a given VRF. At the same time, ESGs decouple security from the forwarding policy, which allows you to do things like VRF route leaking in a much simpler and more intuitive manner.
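To make the difference concrete, here is a minimal Python sketch of the relationships described above. It is a toy model rather than ACI code: the class names (`BridgeDomain`, `Epg`, `Esg`) and the sample object names are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class BridgeDomain:
    name: str
    vrf: str  # every BD belongs to exactly one VRF


@dataclass(frozen=True)
class Epg:
    name: str
    bd: BridgeDomain  # an EPG is tied to exactly one BD


@dataclass
class Esg:
    name: str
    vrf: str  # an ESG groups endpoints under a given VRF
    epgs: list = field(default_factory=list)

    def add_epg(self, epg: Epg) -> None:
        """Group an EPG's endpoints into this ESG, whatever BD they sit in."""
        if epg.bd.vrf != self.vrf:
            raise ValueError(f"{epg.name} is in VRF {epg.bd.vrf}, not {self.vrf}")
        self.epgs.append(epg)

    def bridge_domains(self) -> set:
        """Return the names of all BDs this ESG currently spans."""
        return {epg.bd.name for epg in self.epgs}


bd1 = BridgeDomain("BD1", vrf="VRF-A")
bd2 = BridgeDomain("BD2", vrf="VRF-A")
web = Esg("ESG-Web", vrf="VRF-A")
web.add_epg(Epg("EPG1", bd1))
web.add_epg(Epg("EPG2", bd2))
print(sorted(web.bridge_domains()))  # ['BD1', 'BD2']
```

Each `Epg` carries exactly one BD, while a single `Esg` ends up spanning both BDs, which is the decoupling the text describes.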

ESG – A Simple Use Case Example

To give an example of where ESGs could be useful, consider a brownfield ACI deployment that has been in operation for years. Over time, things tend to grow organically. You might find you have created more and more EPG/BD combinations, only to realize later that many of these EPGs actually share the same security profile. With EPGs, you would be deploying and consuming more contract resources to achieve what you want, and potentially adding to your management burden with more objects to keep an eye on. With ESGs, you can simply group all these brownfield EPGs and their endpoints and apply the common security policies only once. Importantly, you can do this without changing the IP addressing or the BD settings the endpoints use to communicate.

EPG to ESG Migration Simplified

If your infrastructure is already diligently segmented with EPGs and contracts that reflect the dependencies between application tiers, ESGs are designed to let you migrate your policy with little effort.

The first question that probably comes to mind is how to achieve that. With the EPG Selector, one of the new methods of classifying endpoints into ESGs, we enable a seamless migration to the new grouping concept by inheriting contracts from the EPG level. This is an easy way to quickly move all the endpoints within one or more EPGs into your new ESGs.

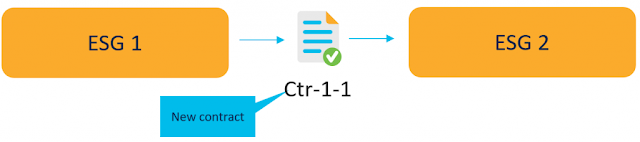

For a better understanding, let’s walk through the example in Figure 1: a simple setup with two EPGs that we will migrate to ESGs. Currently, communication between them is allowed by contract Ctr-1.

High-level migration steps are as follows:

1. Migrate EPG 1 to ESG 1

2. Migrate EPG 2 to ESG 2

3. Replace the existing contract with a new one applied between the newly created ESGs

Figure 1 – Two EPGs with the contract in place

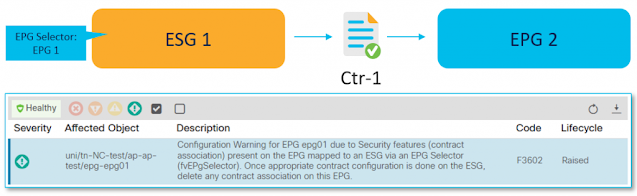

The first step is to create a new ESG 1 in which EPG 1 is matched using the EPG Selector. This means that all endpoints that belong to this EPG become part of the newly created ESG at once. These endpoints can still communicate with the other EPG(s) because of automatic contract inheritance (Note: You cannot configure an explicit contract between an ESG and an EPG).
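As an illustration of what this first step might look like when driven through the APIC REST API, the Python sketch below builds a JSON body for an ESG with one EPG selector. Treat it as an assumption-laden sketch: the class and attribute names (`fvESg`, `fvRsScope`/`tnFvCtxName`, `fvEPgSelector`/`matchEpgDn`) reflect the ACI object model as I understand it and should be verified against the API reference for your release, and the tenant, application profile, VRF and group names are hypothetical.

```python
import json


def esg_with_epg_selector(tenant, app_profile, esg, vrf, epg):
    """Build a JSON body for an ESG that matches one EPG via an EPG selector.

    Assumption: class and attribute names (fvESg, fvRsScope, fvEPgSelector)
    follow the APIC object model; verify them for your release before use.
    """
    epg_dn = f"uni/tn-{tenant}/ap-{app_profile}/epg-{epg}"
    return {
        "fvESg": {
            "attributes": {"name": esg},
            "children": [
                # The VRF this ESG belongs to.
                {"fvRsScope": {"attributes": {"tnFvCtxName": vrf}}},
                # Match every endpoint of the referenced EPG.
                {"fvEPgSelector": {"attributes": {"matchEpgDn": epg_dn}}},
            ],
        }
    }


# Hypothetical names, used only for illustration.
payload = esg_with_epg_selector("Tenant1", "AP1", "ESG1", "VRF1", "EPG1")
print(json.dumps(payload, indent=2))
```

The function only constructs the body; sending it, authentication and error handling are deliberately left out.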

This state, depicted in Figure 2, is considered an intermediate migration step, which the APIC reports with fault F3602 until you migrate the outstanding EPG(s) and contracts. This fault is our way of encouraging you to continue the migration so that all security configuration is maintained by ESGs, which keeps the configuration and design simple and maintainable. However, you do not have to do it all at once. You can progress according to your project schedule.

Figure 2 – Interim migration step

As a next step, you migrate EPG 2 to ESG 2, again with the EPG Selector. Keep in mind that nothing prevents you from placing other EPGs into the same ESG (even if these EPGs refer to different BDs). Communication between the ESGs is still allowed through contract inheritance.

To complete the migration, as a final step, configure a new contract, Ctr-1-1, with the same filters as the original one. Assign one ESG as the provider and the other as the consumer; this contract takes precedence over contract inheritance. Finally, remove the original Ctr-1 contract between EPG 1 and EPG 2. This step is shown in Figure 3.

Figure 3 – Final setup with ESGs and new contract
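The three migration steps can be walked through with a small Python model. This is a deliberately simplified sketch: the function names are hypothetical, the consumer and provider roles of EPG 1 and EPG 2 are assumed (the example does not state them), inheritance is modeled as a plain union of contracts, and `inheriting_epgs` is only a rough stand-in for the interim state, not the APIC’s actual fault logic.

```python
def effective_contracts(epg, epg_to_esg, epg_contracts, esg_contracts):
    """Return the (contract, role) pairs in effect for an EPG's endpoints.

    Contracts on the EPG stay in effect (inheritance); if the EPG is matched
    into an ESG, that ESG's own contracts apply as well.
    """
    result = set(epg_contracts.get(epg, ()))
    esg = epg_to_esg.get(epg)
    if esg is not None:
        result |= set(esg_contracts.get(esg, ()))
    return result


def can_talk(consumer_epg, provider_epg, epg_to_esg, epg_contracts, esg_contracts):
    """True if some contract is consumed on one side and provided on the other."""
    args = (epg_to_esg, epg_contracts, esg_contracts)
    consumed = {c for c, role in effective_contracts(consumer_epg, *args)
                if role == "consumer"}
    provided = {c for c, role in effective_contracts(provider_epg, *args)
                if role == "provider"}
    return bool(consumed & provided)


def inheriting_epgs(epg_to_esg, epg_contracts):
    """EPGs already matched into an ESG that still carry their own contracts."""
    return sorted(e for e in epg_to_esg if epg_contracts.get(e))


# Starting point (Figure 1). Roles are an assumption of this sketch.
epg_contracts = {"EPG1": {("Ctr-1", "consumer")}, "EPG2": {("Ctr-1", "provider")}}
epg_to_esg, esg_contracts = {}, {}
history = []

epg_to_esg["EPG1"] = "ESG1"  # Step 1: EPG 1 matched into ESG 1
history.append(can_talk("EPG1", "EPG2", epg_to_esg, epg_contracts, esg_contracts))

epg_to_esg["EPG2"] = "ESG2"  # Step 2: EPG 2 matched into ESG 2
history.append(can_talk("EPG1", "EPG2", epg_to_esg, epg_contracts, esg_contracts))

# Step 3: new contract between the ESGs, then remove the original one.
esg_contracts = {"ESG1": {("Ctr-1-1", "consumer")}, "ESG2": {("Ctr-1-1", "provider")}}
epg_contracts = {}
history.append(can_talk("EPG1", "EPG2", epg_to_esg, epg_contracts, esg_contracts))

print(history)  # [True, True, True]
```

Connectivity holds after every step, which is the point of the staged approach; removing Ctr-1 before Ctr-1-1 is in place would break it.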

Easy Migration to ACI

The previous example is mainly applicable when segmentation at the EPG level is already applied according to the application dependencies. However, not everyone may realize that ESG also simplifies brownfield migrations from existing environments to Cisco ACI.

A starting point for many new ACI customers is deciding how to implement their EPG design. The most common choice is to map one subnet to one BD and one EPG, mirroring old VLAN-based segmentation designs (Figure 4). Until now, moving from such a state to a more application-oriented approach, where an application is broken up into tiers based on function, has not been trivial. It has often required moving workloads between EPGs or re-addressing servers and services, which typically leads to disruptions.

Figure 4 – EPG = BD segmentation design

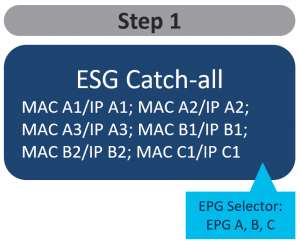

Introducing application-level segmentation in such a deployment model is challenging unless you use ESGs. So how do you migrate from a pure EPG design to ESGs? With the new selectors available, you can start very broadly and then, when ready, begin to define additional detail and policy. It is a multi-stage process that lets endpoints keep communicating without disruption while you make the transition gracefully. In general, the steps of this process are as follows:

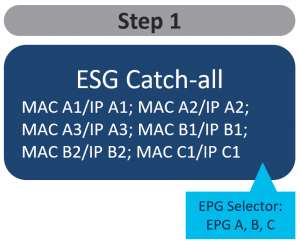

1. Classify all endpoints into one “catch-all” ESG

2. Define new segmentation groups and seamlessly move endpoints out of the “catch-all” ESG into the newly created ESGs.

3. Continue until all endpoints are assigned to new security groups.

In the first step (Figure 5), you enable free communication between EPGs by classifying all of them with EPG selectors and putting them (temporarily) into one “catch-all” ESG. This is conceptually similar to the “permit-all” solutions you may have used prior to ESGs (e.g., vzAny, Preferred Groups).

Figure 5 – All EPGs are temporarily put into one ESG

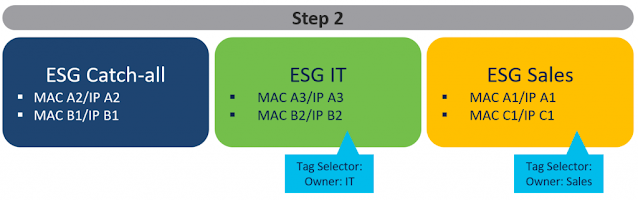

In the second step (Figure 6), you begin to shape and refine your security policy by seamlessly moving endpoints out of the catch-all ESG and into newly created ESGs that match your security policy and desired outcome. For that, you can use the other endpoint selector methods available; this example uses tag selectors. Keep in mind that there is no need to change any networking constructs related to these endpoints. The VLAN bindings to interfaces under the EPGs remain the same, and there is no need for re-addressing or for moving endpoints between BDs or EPGs.

Figure 6 – Gradual migration from an existing network to Cisco ACI

As you continue to refine your security policies, you will end up in a state where all of your endpoints use the ESG model. As your data center fabric grows, you do not have to spend time worrying about which EPG or which BD subnet is needed, because ESGs free you from that tight coupling. In addition, you gain detailed visibility into the endpoints that are part of an ESG representing a department (like IT or Sales in the above example) or an application suite. This makes management, auditing, and other operational aspects easier.
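The staged classification described above can be sketched in a few lines of Python. This is a toy model under stated assumptions: the `classify` function, the endpoint records and every ESG, EPG and tag name are hypothetical, and the sketch assumes that a matching tag selector wins over an EPG selector, which is what lets endpoints leave the catch-all ESG.

```python
CATCH_ALL = "ESG-CatchAll"


def classify(endpoint, tag_selectors, epg_selectors):
    """Return the ESG for an endpoint, or None if no selector matches.

    Assumption for this sketch: a matching tag selector takes priority over
    the EPG selector of the endpoint's EPG.
    """
    for (key, value), esg in tag_selectors.items():
        if endpoint["tags"].get(key) == value:
            return esg
    return epg_selectors.get(endpoint["epg"])


endpoints = [
    {"name": "srv-1", "epg": "EPG-VLAN10", "tags": {"dept": "IT"}},
    {"name": "srv-2", "epg": "EPG-VLAN10", "tags": {"dept": "Sales"}},
    {"name": "srv-3", "epg": "EPG-VLAN20", "tags": {}},
]

# Step 1: every EPG is matched into the catch-all ESG.
epg_selectors = {"EPG-VLAN10": CATCH_ALL, "EPG-VLAN20": CATCH_ALL}
step1 = {ep["name"]: classify(ep, {}, epg_selectors) for ep in endpoints}

# Step 2: tag selectors carve out new groups; EPG and BD membership is untouched.
tag_selectors = {("dept", "IT"): "ESG-IT", ("dept", "Sales"): "ESG-Sales"}
step2 = {ep["name"]: classify(ep, tag_selectors, epg_selectors) for ep in endpoints}

print(step1)
print(step2)
```

Note that `srv-1` and `srv-2` share an EPG yet land in different ESGs in the second step, while the untagged `srv-3` stays in the catch-all group until a selector is defined for it.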

Intuitive route-leaking

It is well understood that Cisco ACI can interconnect two VRFs, in the same or different tenants, without any external router. However, two conditions must be met for this type of communication to happen: the first is regular routing reachability, and the second is security permission.

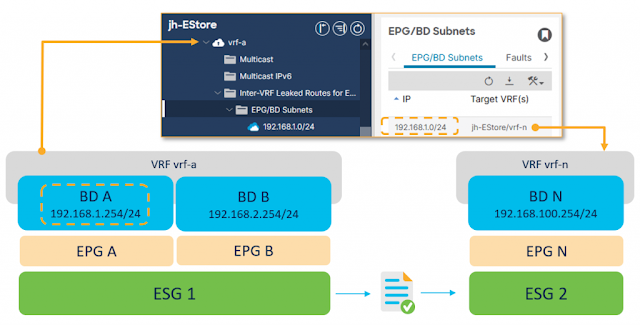

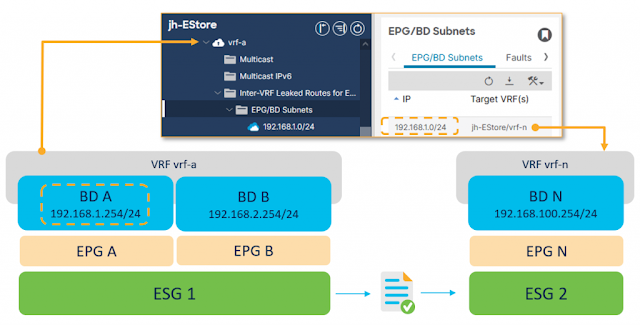

Earlier in this post, I stated that ESGs decouple forwarding from security policy. This is also clearly visible when you need to configure inter-VRF connectivity. Refer to Figure 7 for the high-level, intuitive configuration steps.

Figure 7 – Simplified route-leaking configuration (only one direction is shown for readability)

At the VRF level, configure the subnet to be leaked and its destination VRF to establish routing reachability. A leaked subnet must be equal to, or a subset of, a BD subnet. Next, attach a contract between the ESGs in the different VRFs to allow the desired communication. Finally, you no longer need to configure subnets under the provider EPG (instead of under the BD only) or adjust the BD scope; neither step is required anymore. The end result is a much easier way to set up route leaking, with none of the sometimes confusing and cumbersome steps that the traditional EPG approach required.
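The rule that a leaked subnet must equal, or be contained in, a BD subnet is easy to pre-check before touching the fabric. The following Python sketch uses only the standard `ipaddress` module; the helper name `leak_is_valid`, the sample addresses and the gateway/prefix input format for BD subnets are assumptions made for illustration.

```python
import ipaddress


def leak_is_valid(leaked_subnet, bd_subnets):
    """Check that a leaked subnet equals, or is contained in, some BD subnet.

    BD subnets are given as gateway/prefix strings (for example 10.0.1.1/24);
    this input format is an assumption of the sketch.
    """
    leaked = ipaddress.ip_network(leaked_subnet)
    for entry in bd_subnets:
        bd_net = ipaddress.ip_interface(entry).network
        # subnet_of() raises TypeError across IP versions, so compare versions first.
        if leaked.version == bd_net.version and leaked.subnet_of(bd_net):
            return True
    return False


bd_subnets = ["10.0.1.1/24", "2001:db8:1::1/64"]
print(leak_is_valid("10.0.1.0/24", bd_subnets))    # True: equal to a BD subnet
print(leak_is_valid("10.0.1.128/25", bd_subnets))  # True: subset of a BD subnet
print(leak_is_valid("10.0.0.0/16", bd_subnets))    # False: wider than any BD subnet
```

A check like this only covers the reachability half of the configuration; the contract between the ESGs is still needed for traffic to be permitted.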

Source: cisco.com