Excellence in service matters. Whether you are a mom-and-pop operation or a multibillion-dollar business spanning multiple industries, keeping customers happy and satisfied is essential. No one knows this better than telecommunications (telecom) providers, who experience close to 40% customer churn due to network quality issues, according to McKinsey.

For telecom providers, delivering an outstanding digital experience means smarter troubleshooting, better problem-solving, and a faster route to market for innovative and differentiated services. As if delighting customers and generating new revenue weren’t enough, there is also the added pressure of keeping operational costs low.

For operators of mass-scale networks, that can be a tall order. The good news is that innovative solutions like transport slicing and automated assurance are creating opportunities for service providers to build new revenue streams and differentiate services on quality of experience (QoE) with competitive service-level agreements (SLAs). In this blog post, we will explore how transport slicing and automated assurance can revolutionize the network landscape and transform service delivery, paving the way for financial growth.

Simplify and transform service delivery

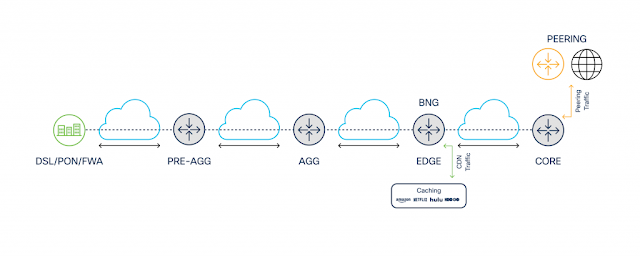

Despite ongoing transformation efforts, telecom and high-performance enterprise networks are becoming increasingly complex and challenging to manage. Multivendor networks can be even more complicated to operate, with their various domains, multiple layers of the OSI stack, numerous cloud services, and the need to deliver services end to end.

Complexity also reduces visibility into service performance and slows how quickly you can find, troubleshoot, and fix issues before customer QoE suffers. To understand the customer experience and differentiate services with competitive enterprise SLAs, you need real-time, KPI-level insights into network connections across domains and end-to-end service visibility. To confront these challenges, many service providers are working to simplify network operations while reducing the cost of service delivery and assurance.

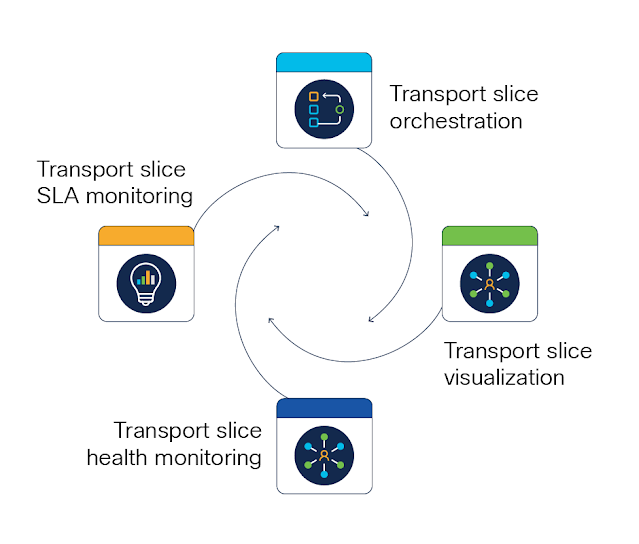

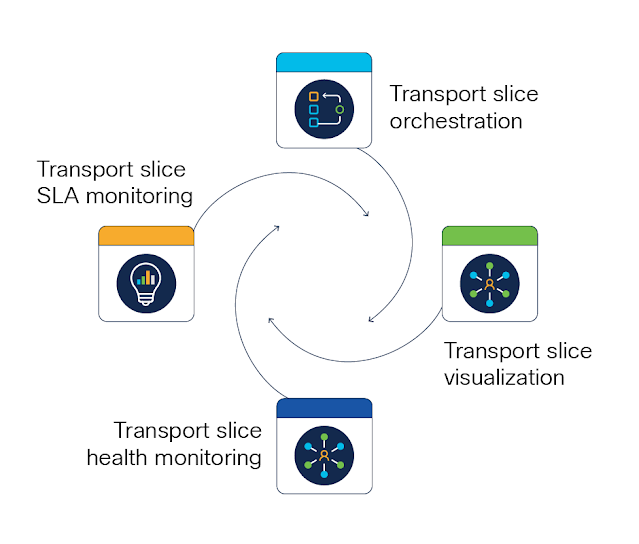

So, how do you streamline increasingly busy transport network operations? For one, advanced automation and orchestration tools can automate intent-based service provisioning, continuously monitor SLAs, and take corrective action to maintain service intent. Automated assurance, for example, can proactively monitor service performance, as well as predict and remediate issues before customers are impacted. This reduces manual work and allows for quicker reaction times to events that impact service.
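To make the idea of "monitor, predict, and take corrective action" more concrete, here is a minimal sketch of a closed assurance loop in Python. It is illustrative only and is not how Cisco Crosswork or any other product implements assurance: `ServiceIntent`, `assurance_loop`, and the `remediate` callback are hypothetical names, the intent is assumed to be a single latency ceiling, and the "prediction" is a deliberately naive one-step trend forecast.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ServiceIntent:
    """Hypothetical service intent: the latency ceiling a slice must respect."""
    service_id: str
    max_latency_ms: float


def assurance_loop(
    intent: ServiceIntent,
    samples_ms: List[float],
    remediate: Callable[[str], None],
) -> List[int]:
    """Replay latency samples through a simple closed loop.

    Remediation fires once when the service first becomes 'at risk', i.e. the
    measured latency or a naive one-step forecast exceeds the intent. Returns
    the sample indices at which remediation was triggered.
    """
    actions: List[int] = []
    at_risk_before = False
    for i, latency in enumerate(samples_ms):
        # Naive forecast (assumption): the change since the last sample repeats.
        previous = samples_ms[i - 1] if i > 0 else latency
        forecast = latency + (latency - previous)
        at_risk = latency > intent.max_latency_ms or forecast > intent.max_latency_ms
        # Edge-triggered: act on the transition into risk, not on every sample.
        if at_risk and not at_risk_before:
            remediate(intent.service_id)  # e.g. reroute or resize the slice
            actions.append(i)
        at_risk_before = at_risk
    return actions


if __name__ == "__main__":
    intent = ServiceIntent("slice-a", max_latency_ms=20.0)
    samples = [10, 11, 12, 18, 25, 24, 12, 11]
    fired = assurance_loop(intent, samples, lambda sid: print(f"remediate {sid}"))
    print(fired)  # [3]
```

With these sample values, remediation fires at index 3 (18 ms and climbing), one sample before latency actually crosses the 20 ms intent at index 4. Acting only on the transition into risk keeps the loop from issuing repeated corrective actions for a single event.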

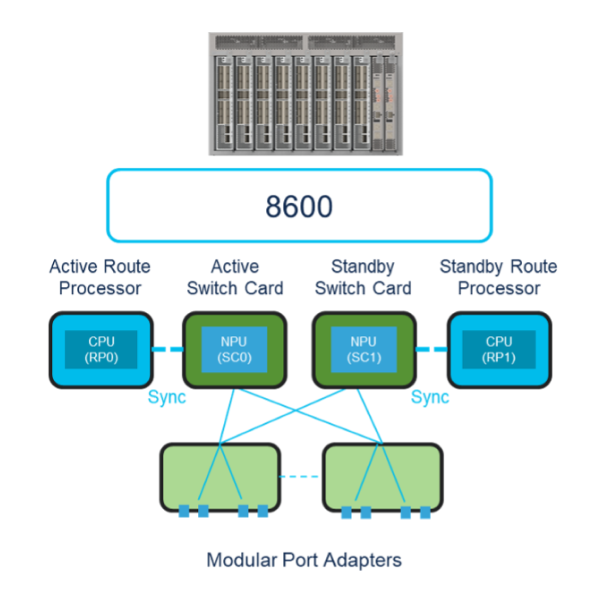

With leading-edge platform and automation capabilities, you can deliver a wide range of use cases and manage network services effectively through a unified interface for visualization and control. This makes complex multidomain transport networks more accessible and easier to operate, which can help improve capital efficiency, make better use of operating expenses (OpEx), and accelerate new service launches.

The operational agility created by simplifying network operations allows you to adapt quickly to market changes and customer needs. You can assure services while securing new revenue streams and capitalizing on emerging service delivery opportunities.

Leverage transport slicing for the best outcomes

Simplifying network operations is only part of the picture. As your customers demand more personalized, flexible, and efficient services, a one-size-fits-all approach to network infrastructure is no longer sufficient. This is where transport slicing can help, by offering a new level of customization and efficiency that directly affects the service quality and service guarantees you can offer.

Network slicing is typically associated with delivering ultra-reliable 5G network services, but the advantages of transport slicing reach well beyond 5G. Network as a service (NaaS), for example, addresses enterprise customers’ need for more dynamic, personalized networks that are provisioned on demand, like cloud services. Using transport slicing, you can create customized virtual networks that provide customers with tailored services and control, while simplifying network management.

For telecom providers, transport slicing and automation will be critical to managing the service-level objectives (SLOs) of diverse, slice-based networks at scale. The transport layer plays a central role in service delivery, and as slicing and slice-based enterprise services grow more complex, automation is essential to simplify operations and reduce manual workflows.

Together, transport slicing and automation fundamentally change how network services are delivered and consumed. And while the slicing market is still in the early stages of adoption, end-to-end slicing across domains offers a level of efficiency that translates into direct benefits: faster service deployment, enhanced performance, cost savings, and the ability to scale services quickly.

A complete, end-to-end automated slicing and assurance solution

Traditionally, the service monitoring and telemetry required for service-level assurance were an afterthought, built separately and not easily integrated into the service itself. Now, you can leverage the power of network slicing while ensuring each slice meets the stringent performance criteria your customers expect, and enable closed-loop automation based on end-user experience at microsecond speeds. For example, tight integration between Cisco Crosswork Network Automation and Accedian’s performance monitoring solution provides a more complete, end-to-end automated slicing and assurance approach.

You can create and modify network slices based on real-time demand, and then leverage performance monitoring tools to not only assure the health and efficiency of these slices, but also provide empirical data to validate SLAs. You can use predictive analytics and real-time insights to identify and mitigate issues before they impact service quality, enabling increased network uptime and enhancing customer experience.
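As a rough illustration of what "empirical data to validate SLAs" can mean, the Python sketch below checks a set of per-slice probe results against two assumed SLA terms: a 95th-percentile latency target and an availability target. The function names, the choice of nearest-rank percentile, and the convention that a failed probe is recorded as `None` are all assumptions made for this example; real performance monitoring tools, including Accedian’s, define their own metrics and reporting.

```python
import math
from typing import Dict, List, Optional


def percentile_nearest_rank(values: List[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples <= it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def validate_sla(
    latency_ms: List[Optional[float]],
    p95_target_ms: float,
    availability_target: float,
) -> Dict[str, object]:
    """Check probe results for one slice against hypothetical SLA terms.

    None entries are failed probes and count against availability.
    """
    if not latency_ms:
        raise ValueError("no probe results to evaluate")
    answered = [x for x in latency_ms if x is not None]
    availability = len(answered) / len(latency_ms)
    p95 = percentile_nearest_rank(answered, 95) if answered else math.inf
    return {
        "availability": availability,
        "p95_latency_ms": p95,
        "compliant": availability >= availability_target and p95 <= p95_target_ms,
    }


if __name__ == "__main__":
    probes = [12.0, 14.0, 11.0, 13.0, 35.0, None, 12.5, 13.5, 12.0, 11.5]
    print(validate_sla(probes, p95_target_ms=20.0, availability_target=0.99))
```

Here one lost probe and one 35 ms outlier are enough to put the slice out of compliance, even though most samples sit around 12 ms. Reporting percentiles and availability rather than an average is what makes this kind of check usable as evidence in an SLA conversation.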

A proactive approach to network management helps you use resources more efficiently, decrease complexity, and keep customer satisfaction a priority, all while creating new opportunities for innovative revenue streams through highly differentiated and competitive service offerings. Ultimately, automated slicing and assurance drives greater operational excellence.

Fix problems before customers notice

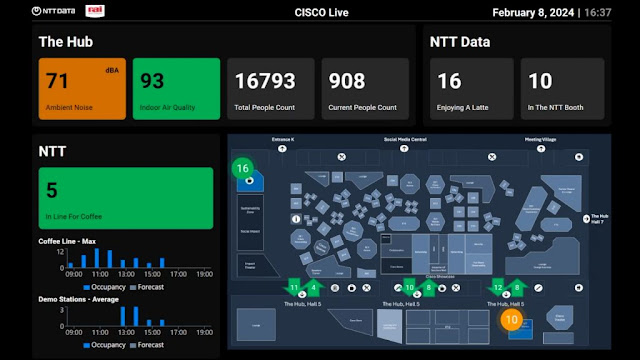

Competition remains fierce in the telecom market, as service providers strive to meet B2B customers’ demand for high-quality services, fast service provisioning, and performance transparency. Speed is a differentiator, and customers notice immediately when service is disrupted. Customers are willing to pay a premium for critical network performance, service insights, and enhanced SLAs that promise immediate resolution. To meet this demand, you need the ability to find and fix problems before customers notice.

Transport slicing and automated assurance are at the forefront of this challenge, enabling service providers to not only deliver services faster and more reliably, but to also have confidence those services will have the performance and QoE that customers expect.

The right network automation capabilities can drive simplified, end-to-end lifecycle operations, including service assurance that’s dynamic, intelligent, and automated. This paves the way for revenue-generating premium services and for delivering the outstanding experiences your customers expect.

Source: cisco.com