The MDS 9500 family has supported customers for more than a decade, helping them through FC speed transitions from 1G to 2G, 4G, 8G, and advanced 8G without forklift upgrades. But as we look to the future, the MDS 9700 makes more sense for a lot of data center designs. The top four reasons for customers to upgrade are:

1. End of Support Milestones

2. Storage Consolidation

3. Improved Capabilities

4. Foundation for Future Growth

So let's look at each in some detail.

1. End of Support Milestones

MDS 4G parts reach End of Support on Feb 28, 2015. The impacted part numbers are DS-X9112, DS-X9124, and DS-X9148. You can replace them with the MDS 9500 Advanced 8G cards or move to an MDS 9700 based design. A few advantages the MDS 9700 offers over the other existing options are:

a. Investment Protection: Any new data center design based on the MDS 9700 will have a much longer life than one based on the MDS 9500 product family. This avoids EOL concerns and upgrades in the near future. An MDS 9700 based design therefore provides strong investment protection and keeps the architecture relevant to evolving data center needs for more than a decade.

b. EOL Planning: With an MDS 9700 based design you control when to add additional blades. With the MDS 9500, you will have to either fill up the chassis within 6 months (the window from End of Life announcement to End of Sale) or leave the slots empty forever after the End of Sale date.

c. Simplify Design: The MDS 9700 allows a single SKU, a single software version, and a consistent design across the whole fabric, which simplifies management. Its massive performance also allows for consolidation, reducing both footprint and management burden.

d. Rich Feature Set: Finally, as we will see later, the MDS 9700 provides a host of features and capabilities above and beyond the MDS 9500, and that list of enhancements will continue to grow.

2. Storage Consolidation

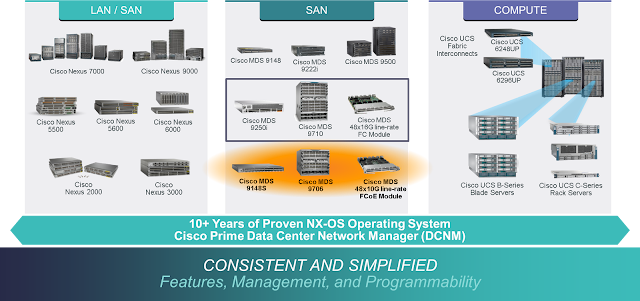

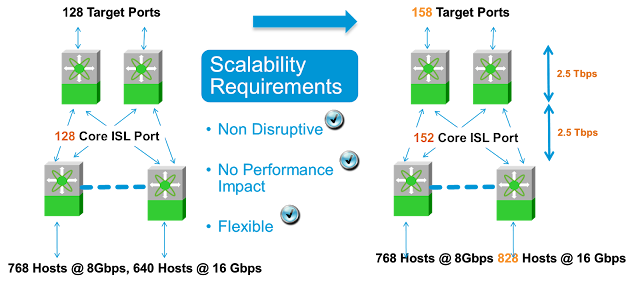

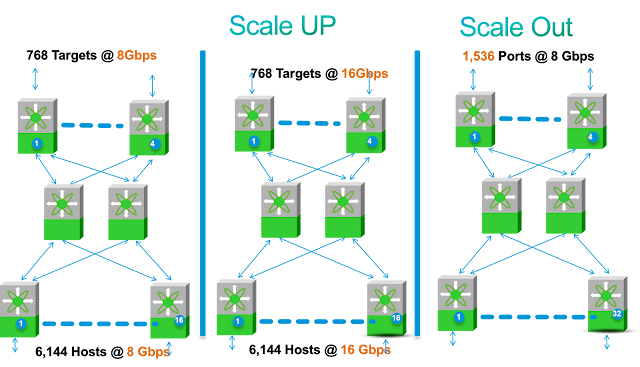

The MDS 9700 provides unprecedented consolidation compared to the existing solutions in the industry. As an example, with the MDS 9710 customers can use the 16G line rate ports to support massively virtualized workloads and consolidate the server install base. Secondly, with the 9148S as the Top of Rack switch and the MDS 9700 at the core, you can design massively scalable networks that deliver consistent latency and 16G throughput independent of the number of links and the traffic profile. This allows customers to scale up or scale out much more easily than with legacy designs or any other architecture in the industry.

Moreover, as shown in the figures above, for customers with MDS 9500 based designs the MDS 9710 offers a higher number of line rate ports in a smaller footprint and a much more economical way to design SANs. It also enables consolidation with higher performance as well as much higher availability.

3. Improved Capabilities

The MDS 9700 design provides enhanced capabilities above and beyond the MDS 9500, and many more capabilities will be added in the future. Some examples that are top of mind are detailed below.

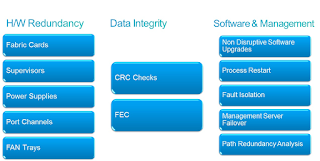

Availability: An MDS 9700 based design improves reliability through enhancements on many fronts and by simplifying the overall architecture and management.

◉ The MDS 9710 introduced a host of features to improve reliability, such as the industry’s first N+1 fabric redundancy, smaller failure domains, and hardware-based slow drain detection and recovery.

◉ It's well understood that the reliability of any network comes from proper design, regular maintenance, and support. It is imperative that the data center runs on the recommended releases and supported hardware. As an example, a data center outage caused by the failure of unsupported hardware or an unsupported software version is far more damaging, because fixing it means new procurement and live insertion with no change management window. The cost of an outage in a data center is extremely high, so it is important to keep the fabric upgraded and on the latest release with all supported components. Thus it makes sense to base new designs on the latest MDS 9700 directors rather than, for example, MDS 9513 Gen-2 line cards, which will fall out of support on Feb 28, 2015. In addition, mixing different hardware versions and different software versions often adds complexity to maintenance and upkeep, which has a direct impact on the availability of the network as well as on operational complexity.

Throughput:

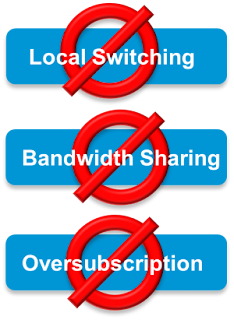

With massive amounts of virtualization, the user impact of any downtime or even performance degradation is much higher. Similarly, with data center consolidation and the higher speeds available in edge-to-core connectivity, more and more host edge ports are connected through the same core switches, so a higher number of apps depend on consistent end-to-end performance to provide a reliable user experience. The MDS 9700 provides the industry's highest performance with 24 Tbps of switching capability. The director-class switch is based on a crossbar architecture with central arbitration and Virtual Output Queuing, which ensures consistent line rate 16G throughput independent of the traffic profile, with all 384 ports operating at 16G speeds and without using crutches like local switching (much akin to emulating independent fixed fabric switches within a director), oversubscription (which can cause intermittent performance issues), or bandwidth allocation.
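To illustrate why Virtual Output Queuing matters for traffic-profile independence, here is a minimal toy model in Python. It is only a sketch under stated assumptions, not a description of how the MDS arbiter is implemented: one input port sends at most one frame per tick, the function names `drain_fifo` and `drain_voq` are hypothetical, and `busy_until` is an invented way to mark an output port as congested until a given tick.

```python
from collections import deque

def drain_fifo(frames, busy_until):
    """Single FIFO per input: only the head frame is eligible each tick."""
    queue = deque(enumerate(frames))  # (sequence number, output port)
    departures = {}                   # sequence number -> departure tick
    tick = 0
    while queue:
        seq, out = queue[0]
        if tick >= busy_until.get(out, 0):  # head frame's output is free
            queue.popleft()
            departures[seq] = tick
        tick += 1
    return departures

def drain_voq(frames, busy_until):
    """One queue per output: a congested output cannot block other outputs."""
    voqs = {}
    for seq, out in enumerate(frames):
        voqs.setdefault(out, deque()).append(seq)
    departures = {}
    remaining = len(frames)
    tick = 0
    while remaining:
        ready = [q for out, q in voqs.items()
                 if q and tick >= busy_until.get(out, 0)]
        if ready:
            oldest = min(ready, key=lambda q: q[0])  # oldest eligible frame
            departures[oldest.popleft()] = tick
            remaining -= 1
        tick += 1
    return departures

# Hypothetical traffic: the first frame targets congested port "B",
# the next two target idle port "A". Port "B" frees up at tick 3.
frames = ["B", "A", "A"]
congestion = {"B": 3}
print(drain_fifo(frames, congestion))  # frames for "A" wait behind "B"
print(drain_voq(frames, congestion))   # frames for "A" leave immediately
```

In the FIFO case the two frames for the idle port are stuck behind the frame for the congested port (head-of-line blocking), so their delay depends on unrelated traffic. With per-output queues they leave at ticks 0 and 1, which is the property behind the consistent-throughput claim above.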

Latency:

MDS Directors are store-and-forward switches. This is needed because it ensures that corrupted frames do not traverse the network and that end devices don’t waste precious CPU cycles dealing with corrupted traffic. The additional latency is acceptable because it protects end devices and preserves the integrity of the whole fabric. In addition, all ports are line rate, so customers don’t have to use local switching. This again adds a small amount of latency but results in a flexible, scalable design that is resilient and won't break down in the future. These two basic design choices result in a latency number that is slightly higher, but they yield a scalable design, guarantee predictable performance under any traffic profile, and provide much higher fabric resiliency.
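The store-and-forward behavior described above can be sketched in a few lines of Python. This is an illustration only: the frame layout (payload followed by a 4-byte CRC trailer) and the helper names `make_frame` and `store_and_forward` are hypothetical, and `zlib.crc32` stands in for the CRC check a real switch performs in hardware.

```python
import zlib

CRC_LEN = 4  # assumed trailer size for this sketch

def make_frame(payload: bytes) -> bytes:
    """Append a CRC-32 trailer to the payload (hypothetical framing)."""
    return payload + zlib.crc32(payload).to_bytes(CRC_LEN, "big")

def store_and_forward(frame: bytes):
    """Buffer the whole frame, verify the CRC, then forward or drop.

    Returns the frame if it is intact, or None if it is dropped.
    """
    payload, trailer = frame[:-CRC_LEN], frame[-CRC_LEN:]
    if zlib.crc32(payload).to_bytes(CRC_LEN, "big") != trailer:
        return None  # corrupted frame never reaches the next hop
    return frame

good = make_frame(b"SCSI write, block 42")
bad = bytes([good[0] ^ 0x01]) + good[1:]  # flip one bit in transit

print(store_and_forward(good) is not None)  # intact frame is forwarded
print(store_and_forward(bad) is None)       # corrupted frame is dropped
```

The check can only run once the entire frame has been received, which is where the extra latency comes from. A cut-through switch starts forwarding before the trailer arrives, so it cannot drop the frame on a CRC failure.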

Consistent Latency: For MDS directors, latency is the same for a single 16G flow as when there are 384 16G flows going through the system. The crossbar-based switch design, central arbitration, and Virtual Output Queuing guarantee that. Variable latency that swings from a few µs to a much higher number is extremely dangerous. So the first thing to make sure of is that the director provides consistent and predictable latency.

End to End Latency: The performance of any application or solution depends on end-to-end latency. Focusing on the SAN fabric alone is myopic, as the major portion of the latency is contributed by end devices. As an example, the latency of spinning targets is on the order of ms. In such a design a few µs is orders of magnitude less and hence not even observable. With SSDs the latency is on the order of 100 to 200 µs. Assuming 150 µs, the contribution of the SAN fabric for an edge-core design is still less than 10%. The majority (90%) of the latency comes from end devices, so saving a couple of µs in the SAN fabric will hardly affect overall application performance, whereas the architectural advantages of CRC-based error drops and a scalable fabric design provide reliable operation and room to scale.
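The arithmetic behind the end-to-end argument can be checked with a short Python sketch. The 150 µs SSD figure and the 10% bound come from the text above; the 5 µs fabric latency and the 5 ms spinning-disk latency are hypothetical values chosen only for illustration, and the model (fabric share = fabric latency divided by fabric plus device latency) is a simplifying assumption.

```python
def fabric_share(fabric_us: float, device_us: float) -> float:
    """Fraction of end-to-end latency contributed by the SAN fabric."""
    return fabric_us / (fabric_us + device_us)

def max_fabric_latency(device_us: float, share: float) -> float:
    """Largest fabric latency that keeps the fabric at or below `share`."""
    return share * device_us / (1.0 - share)

SSD_US = 150.0         # from the text: midpoint of 100 to 200 us
SPINNING_US = 5000.0   # hypothetical: 5 ms for a spinning target
FABRIC_US = 5.0        # hypothetical: a few us across edge and core

print(f"{fabric_share(FABRIC_US, SSD_US):.1%}")        # 3.2%
print(f"{fabric_share(FABRIC_US, SPINNING_US):.2%}")   # 0.10%
print(f"{max_fabric_latency(SSD_US, 0.10):.1f} us")    # 16.7 us
```

Under these assumptions the fabric would need to add more than roughly 16.7 µs before it accounted for 10% of the latency seen by an application using a 150 µs SSD target.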

Scalability:

For larger enterprises, scalability has been a challenge due to the massive amount of host virtualization. As more and more VMs log in to the fabric, the number of fabric logins, zones, and domains that the fabric must support keeps increasing. The MDS 9700 has the industry's highest scalability numbers, as it's powered by a supervisor that has 4 times the memory and compute capability of its predecessor. This translates to support for higher scalability and at the same time provides room for future growth.

4. Foundation for Future Growth

The MDS 9700 provides a strong foundation to meet the performance and scalability needs of the data center today, and its massive switching capability, compute, and memory will cover your needs for more than a decade.

◉ It will allow you to go to 32G FC speeds without a forklift upgrade or changing fabric cards. Rather, you will need 3 more of the same fabric card to get line rate throughput through all 384 ports on the MDS 9710 (and 192 on the MDS 9706).

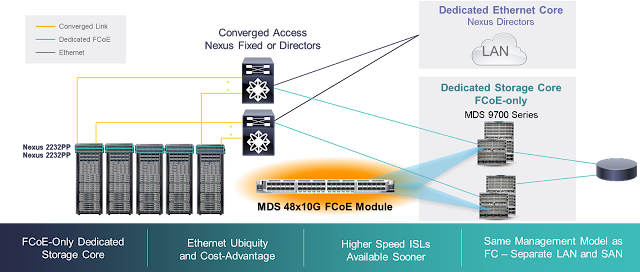

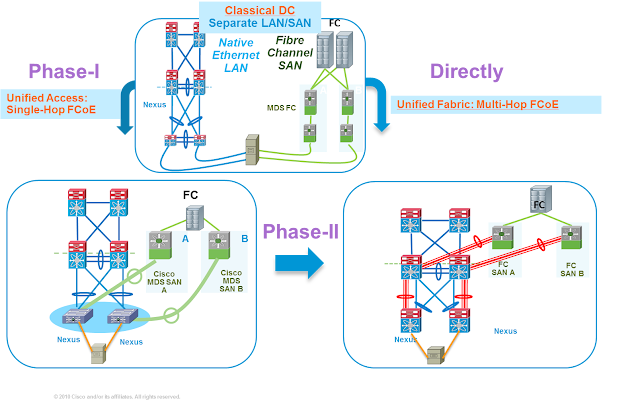

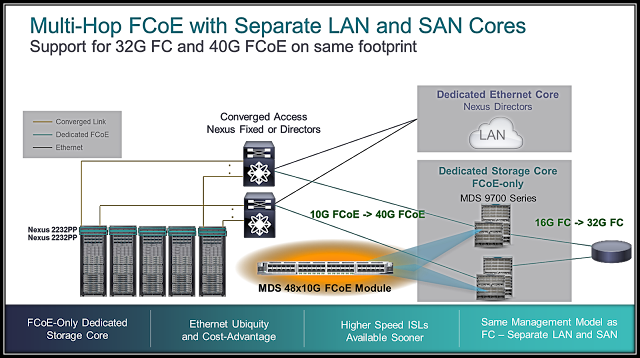

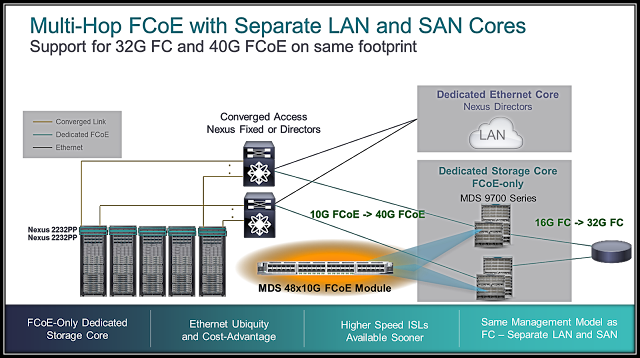

◉ The MDS 9700 allows customers to deploy a 10G FCoE solution today and later upgrade to 40G FCoE, again without a forklift upgrade.

◉ The MDS 9700 is also unique in that customers can mix and match FC and FCoE line cards any way they want, without any limitations or constraints.

Most importantly, customers don’t have to make an FC vs. FCoE decision. Whether you want to continue with FC and have plans for 32G FC or beyond, or you are looking to converge two networks into a single network tomorrow or a few years down the road, the MDS 9700 will provide consistent capabilities in both architectures.
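Returning to the 32G FC point in the first bullet above, the "3 more fabric cards" figure can be reproduced with simple arithmetic. The sketch below rests on two assumptions that are not stated in this article: 48-port line cards (384 ports spread over 8 line card slots) and 256 Gbps of bandwidth per slot from each fabric card. The function name `fabric_cards_needed` is hypothetical, and port speeds are nominal.

```python
import math

PORTS_PER_LINE_CARD = 48      # assumption: 384 ports / 8 line card slots
PER_FABRIC_SLOT_GBPS = 256    # assumption: bandwidth per slot per fabric card

def fabric_cards_needed(port_speed_gbps: int,
                        ports: int = PORTS_PER_LINE_CARD,
                        per_fabric_gbps: int = PER_FABRIC_SLOT_GBPS) -> int:
    """Fabric cards required for every port on a line card to run at line rate."""
    return math.ceil(ports * port_speed_gbps / per_fabric_gbps)

at_16g = fabric_cards_needed(16)
at_32g = fabric_cards_needed(32)
print(at_16g, at_32g, at_32g - at_16g)  # 3 6 3
```

Under these assumptions a chassis that is line rate at 16G with 3 fabric cards reaches line rate at 32G by adding 3 more of the same card, which matches the bullet above.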

In summary, SAN directors are a critical element of any data center. Going back in time, the basic reason for having a separate SAN was to provide unprecedented performance, reliability, and high availability. Data center architecture has to keep up with the requirements of a new generation of applications, the virtualization of even the highest-performance apps such as databases, new design requirements introduced by solutions like VDI, ever-increasing solid state drive usage, and device proliferation. At the same time, as networks get increasingly complex, the basic necessity is to simplify configuration, provisioning, resource management, and upkeep. These are exactly the problems that the MDS 9700 is designed to solve more elegantly than any existing solution.