- CML_USERNAME: Username for the CML user

- CML_PASSWORD: Password for the CML user

- CML_HOST: The CML host

- CML_LAB: The name of the lab
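Before running anything against the lab, it can help to confirm these four settings are present. The following is a minimal Python sketch. It assumes, as the list implies, that the settings are supplied as environment variables. The helper names `missing_cml_settings` and `load_cml_settings` are hypothetical and are not part of CML or Ansible.

```python
import os

# The four variable names listed above.
REQUIRED_VARS = ("CML_USERNAME", "CML_PASSWORD", "CML_HOST", "CML_LAB")


def missing_cml_settings(env=None):
    """Return the names of required variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


def load_cml_settings(env=None):
    """Return the four settings as a dict, raising if any are missing.

    `env` defaults to os.environ; passing a plain dict makes testing easy.
    """
    env = os.environ if env is None else env
    missing = missing_cml_settings(env)
    if missing:
        raise RuntimeError("missing CML settings: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED_VARS}
```

Failing early with the full list of missing names is usually friendlier than discovering them one at a time partway through a run.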

Thursday, 18 January 2024

How to Use Ansible with CML

Thursday, 17 August 2023

Cisco Drives Full-Stack Observability with Telemetry

Telemetry data holds the key to flawless, secure, and performant digital experiences

The answers lie in telemetry, but there are two hurdles to clear

Cisco occupies a commanding position with access to billions upon billions of data points

Cisco plays a leading role in the OpenTelemetry movement, and in making systems observable

Wednesday, 1 September 2021

Accelerate Data Lake on Cisco Data Intelligence Platform with NVIDIA and Cloudera

The Big Data (Hadoop) ecosystem has evolved over the years from batch processing (Hadoop 1.0) to streaming and near real-time analytics (Hadoop 2.0) to Hadoop meets AI (Hadoop 3.0). These technical capabilities continue to evolve, delivering the data lake as a private cloud with separation of storage and compute. Future enhancements include support for hybrid cloud (and multi-cloud) enablement.

Cloudera and NVIDIA Partnerships

Cloudera released the following two software platforms in the second half of 2020, which together enable the data lake as a private cloud:

◉ Cloudera Data Platform Private Cloud Base – Provides storage and supports traditional data lake environments; introduces Apache Ozone, the next-generation filesystem for the data lake.

◉ Cloudera Data Platform Private Cloud Experiences – Allows experience- or persona-based processing of workloads (such as data analyst, data scientist, data engineer) for data stored in the CDP Private Cloud Base.

Today we are excited to announce that our collaboration with NVIDIA has gone to the next level with Cloudera, as Cloudera Data Platform Private Cloud Base 7.1.6 will bring full support for Apache Spark 3.0 with NVIDIA GPUs on Cisco CDIP.

Cisco Data Intelligence Platform (CDIP)

Cisco Data Intelligence Platform (CDIP) is a thoughtfully designed private cloud for data lake requirements, supporting data-intensive workloads with the Cloudera Data Platform (CDP) Private Cloud Base and compute-rich (AI/ML) and compute-intensive workloads with the Cloudera Data Platform Private Cloud Experiences — all the while providing storage consolidation with Apache Ozone on the Cisco UCS infrastructure. And it is all fully managed through Cisco Intersight. Cisco Intersight simplifies hybrid cloud management, and, among other things, moves the management of servers from the network into the cloud.

CDIP as a private cloud is based on the new Cisco UCS M6 family of servers that support NVIDIA GPUs and 3rd Gen Intel Xeon Scalable family processors with PCIe Gen 4 capabilities. These servers include the following:

◉ Cisco UCS C240 M6 Server for Storage (Apache Ozone and HDFS) with CDP Private Cloud Base – extends the capabilities of the Cisco UCS rack server portfolio with 3rd Gen Intel Xeon Scalable Processors, supporting over 43% more cores per socket and 33% more memory than the previous generation.

◉ Cisco UCS® X-Series for CDP Private Cloud Experiences – a modular system managed from the cloud (Cisco Intersight). Its adaptable, future-ready, modular design meets the needs of modern applications and improves operational efficiency, agility, and scale.

Tuesday, 2 March 2021

Machine Reasoning is the new AI/ML technology that will save you time and facilitate offsite NetOps

Machine reasoning is a new category of AI/ML technologies that can enable a computer to work through complex processes that would normally require a human. Common applications for machine reasoning are detail-driven workflows that are extremely time-consuming and tedious, like optimizing your tax returns by selecting the best deductions from the many available options. Another example is the execution of workflows that require immediate attention and precise detail, like the shut-off protocols in a refinery following a fire alarm. What both examples have in common is that executing each process requires a clear understanding of the relationships between the variables, including order, location, timing, and rules, because in a workflow each decision can alter subsequent steps.

So how can we program a computer to perform these complex workflows? Let’s start by understanding how human reasoning works. A good example from everyday life is the front door of a coffee shop. As you approach the door, your brain goes into reasoning mode and looks for clues that tell you how to open the door. A vertical handle usually means pull, while a horizontal bar could mean push. If the building is older and the door has a knob, you might need to twist the knob and then push or pull, depending on which side of the threshold the door is mounted on. Your brain does all of this reasoning in an instant, because it’s quite simple and based on having opened thousands of doors. We could program a computer to react to each of these variables in order, based on incoming data, and step through this same process.
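The door example can be written as a small set of ordered rules that a program steps through. The following is a minimal Python sketch. The clue names (`vertical_handle`, `horizontal_bar`, `knob`) and the `opens_toward_you` flag are illustrative assumptions and are not taken from any Cisco product.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Door:
    hardware: str           # "vertical_handle", "horizontal_bar", or "knob"
    opens_toward_you: bool  # which side of the threshold the door is hung on


def how_to_open(door: Door) -> list[str]:
    """Step through the clues in order, as a person does at the door."""
    if door.hardware == "vertical_handle":
        return ["pull"]
    if door.hardware == "horizontal_bar":
        return ["push"]
    if door.hardware == "knob":
        # A knob alone says nothing about direction, so a second rule decides.
        return ["twist knob", "pull" if door.opens_toward_you else "push"]
    raise ValueError(f"no rule for hardware {door.hardware!r}")
```

Each rule uses one clue, and the knob rule depends on a further variable. This mirrors how a single decision in a workflow can change the steps that follow it.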

Now let’s apply these concepts to networking. A common task in most companies is compliance checking, where each network device (switch, access point, wireless controller, and router) is checked for software version, security patches, and consistent configuration. In small networks, this is a full day of work; larger companies might have an IT administrator dedicated to this process full-time. A cloud-connected machine reasoning engine (MRE) can keep tabs on your device manufacturer’s online software updates and security patches in real time. It can also identify identical configurations for device models and organize them into groups, so that consistency can be verified across all devices in a group. In this example, the MRE is automating a very tedious and time-consuming process that is critical to network performance and security, but that nobody really enjoys doing.
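The grouping-and-consistency idea can be sketched in a few lines. The following minimal Python example is not how the MRE is implemented. It assumes each device is described by a model, a software version, and its configuration text, and that a recommended version per model is already known (for example, from the vendor's published updates). The `Device` fields, `check_compliance`, and the sample values are hypothetical.

```python
import hashlib
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    model: str
    version: str
    config: str


def check_compliance(devices, recommended):
    """Return {device name: [findings]} for devices that fail a check.

    `recommended` maps model -> recommended software version.
    """
    groups = defaultdict(list)
    for dev in devices:
        groups[dev.model].append(dev)

    findings = defaultdict(list)
    for model, members in groups.items():
        # Fingerprint each configuration so identical configs compare equal.
        digests = {d.name: hashlib.sha256(d.config.encode()).hexdigest() for d in members}
        # Treat the most common configuration in the group as the baseline.
        baseline, _ = Counter(digests.values()).most_common(1)[0]
        target = recommended.get(model)
        for dev in members:
            if target is not None and dev.version != target:
                findings[dev.name].append(f"version {dev.version} != recommended {target}")
            if digests[dev.name] != baseline:
                findings[dev.name].append("configuration differs from group baseline")
    return dict(findings)
```

Using the majority configuration as the baseline is a simplifying choice. A real tool would more likely compare against an approved template.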

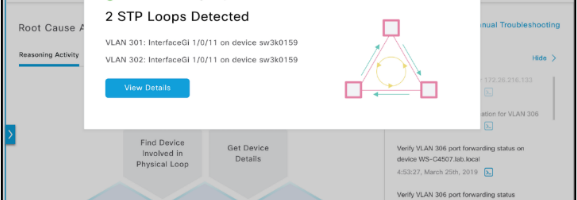

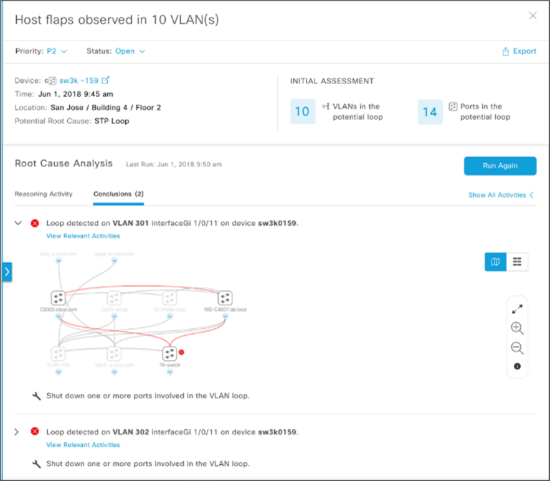

Another good real-world example is troubleshooting an STP data loop in your network. Spanning Tree Protocol (STP) loops often appear after upgrades or additions to a layer-2 access network and can cause data storms that result in severe performance degradation. The process for diagnosing, locating, and resolving an STP loop can be time-consuming and stressful. It also requires a certain level of networking knowledge that newer IT staff members might not yet have. An AI-powered machine reasoning engine can scan your network, locate the source of the loop, and recommend the appropriate action in minutes.
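The following minimal Python sketch illustrates what locating the source of a loop involves. It does not reproduce Cisco's MRE logic. It treats each layer-2 link in the forwarding state as an edge and uses union-find to report the first link that closes a cycle, which is a redundant path that STP should have blocked. The switch names and link list are hypothetical.

```python
def find_loop_link(links):
    """Return the first forwarding link that closes a layer-2 loop, or None.

    `links` is an iterable of (switch_a, switch_b) pairs in forwarding state.
    """
    parent = {}

    def find(node):
        parent.setdefault(node, node)
        while parent[node] != node:
            parent[node] = parent[parent[node]]  # path halving
            node = parent[node]
        return node

    for a, b in links:
        root_a, root_b = find(a), find(b)
        if root_a == root_b:
            # a and b were already connected, so this link creates a loop.
            return (a, b)
        parent[root_a] = root_b  # merge the two connected segments
    return None
```

A loop-free forwarding topology is a tree, so any link that joins two switches that are already connected is a candidate for blocking.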

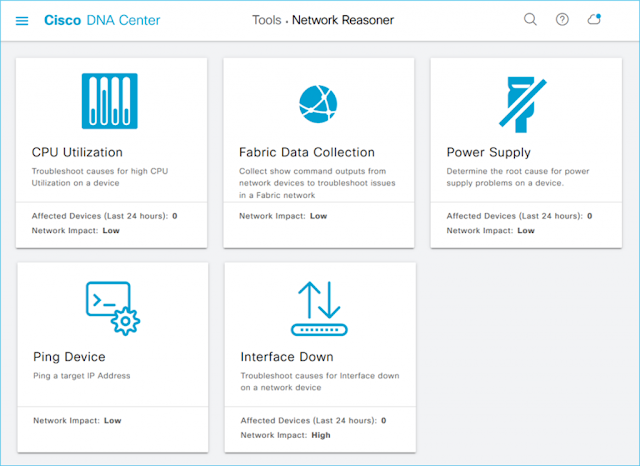

Cisco DNA Center delivers some incredible machine reasoning workflows with the addition of a powerful cloud-connected Machine Reasoning Engine (MRE). The solution offers two ways to experience the usefulness of this new MRE. The first way is something many of you are already aware of, because it’s been part of our AI/ML insights in Cisco DNA Center for a while now: proactive insights. When Cisco DNA Center’s assurance engine flags an issue, it may decide to send this issue to the MRE for automated troubleshooting. If there is an MRE workflow to resolve this issue, you will be presented with a run button to execute that workflow and resolve the issue. Since we’ve already mentioned STP loops, let’s take a look at how that would work.

When a broadcast storm is detected, AI/ML can look at the IP addresses and determine that it’s a good candidate for STP troubleshooting. You’ll get the following window when you click on the alert:

Saturday, 26 September 2020

Automated response with Cisco Stealthwatch

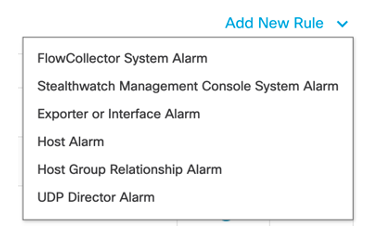

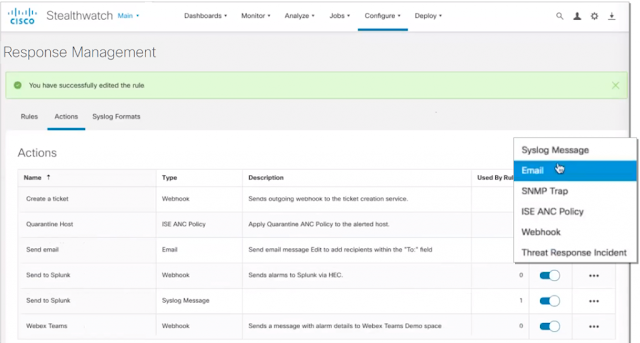

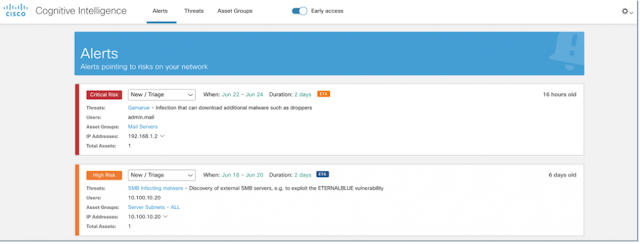

Cisco Stealthwatch provides enterprise-wide visibility by collecting telemetry from all corners of your environment and applying best-in-class security analytics, leveraging multiple engines including behavioral modeling and machine learning, to pinpoint anomalies and detect threats in real time. Once threats are detected, events and alarms are generated and displayed within the user interface. The system can also automatically respond to or share alarms by using the Response Manager. In release 7.3 of the solution, the Response Management module has been modernized and is now available from the web-based user interface to facilitate data-sharing with third-party event gathering and ticketing systems. Additional enhancements include a range of customizable action and rule configurations that offer numerous new ways to share and respond to alarms, improving operational efficiency by accelerating incident investigation. In this post, I’ll provide an overview of these enhancements.

Benefits:

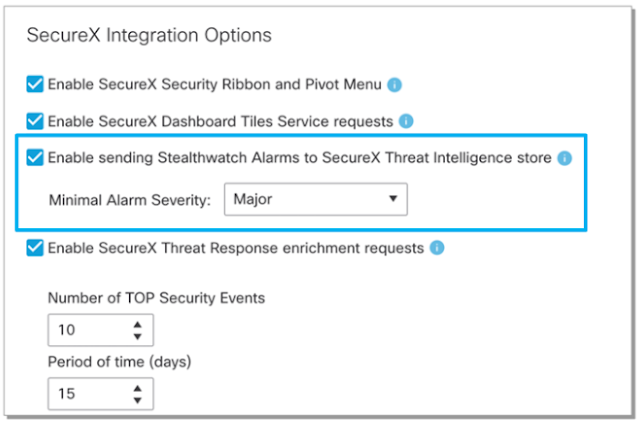

◉ The modernized Response Management module facilitates data-sharing with third-party event gathering and ticketing systems through a range of action options.

◉ Save time and reduce noise by specifying which alarms are shared with SecureX threat response.

◉ Automate responses with pre-built workflows through SecureX orchestration capabilities.
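Specifying which alarms are shared comes down to a set of rules that match alarms to actions. The following is a minimal Python sketch. It assumes an alarm is a dict with `type` and `severity` keys. The rule structure, severity scale, alarm type names, and action names are hypothetical and do not reflect the actual Response Management configuration format.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical severity scale, lowest to highest.
SEVERITY_ORDER = {"low": 0, "medium": 1, "high": 2, "critical": 3}


@dataclass
class Rule:
    alarm_types: set[str]
    min_severity: str
    actions: list[str] = field(default_factory=list)

    def matches(self, alarm: dict) -> bool:
        return (alarm["type"] in self.alarm_types
                and SEVERITY_ORDER[alarm["severity"]] >= SEVERITY_ORDER[self.min_severity])


def actions_for(alarm: dict, rules: list[Rule]) -> list[str]:
    """Collect actions from every matching rule, without duplicates.

    An empty list means the alarm is neither shared nor acted on, which is
    where the noise reduction comes from.
    """
    result: list[str] = []
    for rule in rules:
        if rule.matches(alarm):
            for action in rule.actions:
                if action not in result:
                    result.append(action)
    return result
```

Keeping rules declarative like this makes it easy to review which alarms leave the system and why.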

Alarms generally fall into two categories:

Thursday, 10 September 2020

Introducing Stealthwatch product updates for enhanced network detection and response

Automated Response updates

Release 7.3 introduces automated response capabilities to Stealthwatch, giving you new methods to share and respond to alarms through improvements to the Response Management module and through SecureX threat response integration enhancements.

New methods for sharing and responding to alarms

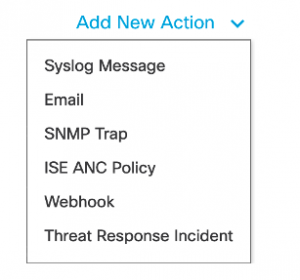

Stealthwatch’s Response Management module has been moved to the web-based UI and modernized to facilitate data-sharing with third-party event gathering and ticketing systems. Streamline remediation operations and accelerate containment with numerous new ways to share and respond to alarms, using a range of customizable action and rule options. New response actions include:

◉ Webhooks to enhance data-sharing with third-party tools that will provide unparalleled response management flexibility and save time

◉ The ability to specify which malware detections to send to SecureX threat response as well as associated response actions to accelerate incident investigation and remediation efforts

◉ The ability to automate limiting a compromised device’s network access when a detection occurs through customizable quarantine policies that leverage Cisco’s Identity Services Engine (ISE) and Adaptive Network Control (ANC)
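On the receiving end, a third-party tool only needs an HTTP endpoint that accepts the webhook's POST body. The following is a minimal Python sketch using only the standard library. The alarm field names (`type`, `severity`, `source_ip`), the ticket fields, and the priority mapping are assumptions for illustration. They are not the documented Stealthwatch webhook payload.

```python
import json
from http.server import BaseHTTPRequestHandler


def alarm_to_ticket(body: bytes) -> dict:
    """Map a webhook JSON body to a ticket; the alarm field names are assumed."""
    alarm = json.loads(body)
    return {
        "title": f"[Stealthwatch] {alarm.get('type', 'unknown alarm')}",
        "priority": "P1" if alarm.get("severity") in ("high", "critical") else "P3",
        "host": alarm.get("source_ip", "n/a"),
    }


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        ticket = alarm_to_ticket(self.rfile.read(length))
        # A real integration would forward `ticket` to the ticketing system here.
        print("would create ticket:", ticket)
        self.send_response(204)  # accepted, no response body
        self.end_headers()
```

To try it locally, serve the handler with `HTTPServer(("0.0.0.0", 8080), WebhookHandler).serve_forever()` from `http.server`, then point the webhook action at that address. Keeping the mapping in a separate function makes it testable without a running server.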

Enhanced security analytics

Easier management

Wednesday, 2 September 2020

Tools to Help You Deliver A Machine Learning Platform And Address Skill Gaps

…but it still can be hard!

With expectations set very high by the public cloud, ML platforms delivered on-premises by IT teams have become even harder to build. The automation flows, and the tooling that powers them, are well hidden behind public cloud customer consoles, so how to replicate them is not obvious.

Even though abstraction technologies, such as Kubernetes, reflect and relate well to the underlying infrastructure, the education needed to bridge current Data Center skills over to cloud native tools takes enthusiasm and persistence in the face of potential frustration as these technology ‘stacks’ are learned and mastered.

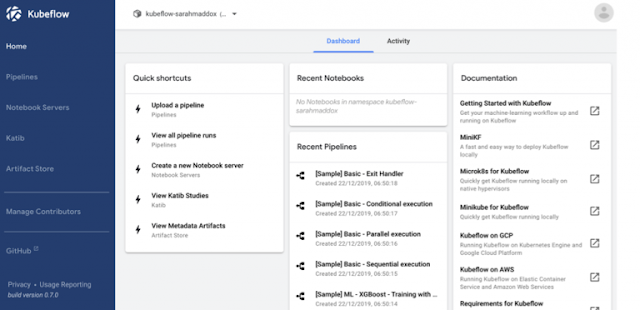

Considering this, the Cisco community has developed an open source tool named “MLAnywhere” to help build the skills needed for cloud native ML platforms. MLAnywhere provides an actual, usable, deployed Kubeflow workflow (pipeline) with sample ML applications, all running on top of Kubernetes and driven through a clean and intuitive interface. As well as addressing the educational aspects for IT teams, it significantly speeds up and automates the deployment of a Kubeflow environment, including many of the unseen essential aspects.

How MLAnywhere works

MLAnywhere is a simple microservice, built using container technologies and designed to be easily installed, maintained, and evolved. The fundamental goal of this open-source project is to help IT teams understand what it takes to configure these environments whilst providing the Data Scientist a usable platform, including real-world examples of ML code built into the tool via Jupyter Notebook samples.

The installation process is very straightforward: simply download the project files from the Cisco DevNet repository, follow the instructions to build a container using a Dockerfile, and launch the resulting container on an existing Kubernetes cluster.

So what’s in it for IT Operations teams?

Not forgetting the Data Scientists

What does the future hold?

Saturday, 28 March 2020

Cisco Announces Kubeflow Starter Pack

Here are the major components of Kubeflow 1.0:

Jupyter Notebook

Many data science teams live in Jupyter Notebook, since it allows them to collaborate and share their projects, with multi-tenant support. Personally, I use it to develop Python code because I like being able to single-step through my code and see immediate results. Within the data science context, Jupyter becomes the primary user interface for data scientists and machine learning engineers.

TensorFlow and Other Deep Learning Frameworks

Originally designed to only support TensorFlow, Kubeflow version 1.0 now supports other deep learning frameworks, including PyTorch. These are two of the leading deep learning frameworks that customers are asking about today.

Model Serving

Once a machine learning model is created, the data science team often must create an application or web page to feed in new data and execute the trained model. Kubeflow has built-in capabilities with TFServing that let models be used without worrying about the detailed logistics of a custom application. As you can see in the screenshot below, the data pipeline enables the data model to be served. In fact, the model can be called through a URL.
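Calling a served model through a URL usually means sending an HTTP POST. TensorFlow Serving's REST API exposes endpoints of the form `http://<host>:8501/v1/models/<model_name>:predict`. These endpoints accept a JSON body with an `instances` list and return a `predictions` list. The following is a minimal Python sketch using only the standard library. The host, model name, and input values are hypothetical. The URL that a given Kubeflow deployment actually exposes depends on how its serving component and ingress are configured.

```python
import json
import urllib.request


def build_predict_request(host: str, model: str, instances: list) -> urllib.request.Request:
    """Build a POST request for TensorFlow Serving's REST predict endpoint."""
    url = f"http://{host}:8501/v1/models/{model}:predict"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def predict(host: str, model: str, instances: list) -> list:
    """Send the request and return the `predictions` field of the response."""
    with urllib.request.urlopen(build_predict_request(host, model, instances)) as resp:
        return json.loads(resp.read())["predictions"]
```

For example, `predict("models.example", "churn", [[1.0, 2.0]])` would return one prediction per input row, assuming a model named `churn` is being served on that host.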

Other Components

Cisco Kubeflow Starter Pack

Thursday, 20 February 2020

Answering The Big Three Data Science Questions At Cisco

Data Science Applied In Business

In the past decade, there has been an explosion in the application of data science outside of academic realms. The use of general, statistical, predictive machine learning models has achieved high success rates across multiple business functions, including finance, marketing, sales, and engineering, as well as multiple industries, including entertainment, online and storefront retail, transportation, service and hospitality, healthcare, insurance, manufacturing, and many others. The applications of data science seem nearly endless in today’s landscape, with each company jockeying for position in the new data and insights economy. Yet what if I told you that companies may be achieving only a third of the value they could be getting from data science? I know, it sounds almost fantastical given how much success has already been achieved using data science. However, many opportunities for value generation may be getting overlooked because data scientists and statisticians are not traditionally trained to answer some of the questions companies in industry care about.

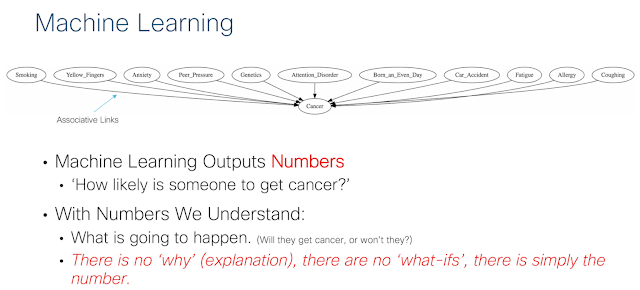

Most of the technical data science analysis done today is either classification (labeling with discrete values), regression (labeling with a number), or pattern recognition. These forms of analysis answer the business questions ‘can I understand what is going on’ and ‘can I predict what will happen next’. Example questions include ‘can I predict which customers will churn?’, ‘can I forecast my next quarter revenue?’, ‘can I predict products customers are interested in?’, and ‘are there important customer activity patterns?’. These are extremely valuable questions that data science can answer. In fact, answering these questions is what has caused the explosion of interest in applying data science to business. However, most companies have two other major categories of important questions that are being totally ignored. Namely, once a problem has been identified or predicted, can we determine what’s causing it? Furthermore, can we take action to resolve or prevent the problem?

I start this article by discussing why most data-driven companies aren’t as data driven as they think they are. I then introduce the three categories of questions companies care about most (the Big 3) and discuss why data scientists have been missing these opportunities. Finally, I outline how data scientists and companies can partner to answer these questions.

Why Even Advanced Tech Companies Aren’t as Data Driven As They Think They Are.

Many companies want to become more ‘data driven’ and to generate more ‘prescriptive insights’. They want to use data to make effective decisions about their business plans, operations, products, and services. The current idea of being ‘data driven’ and generating ‘prescriptive insights’ in the industry today seems to be defined as using trends or descriptive statistics about data to try to make informed business decisions. This is the most basic form of being data driven. Some companies, particularly the more advanced technology companies, go a step further and use predictive machine learning models and more advanced statistical inference and analysis methods to generate more advanced descriptive numbers. But that’s just it. These numbers, even those generated by predictive machine learning models, are just descriptive (those with a statistical background must forgive me for the overloaded use of the term ‘descriptive’). They may be descriptive in different ways, such as machine learning generating a predicted number about something that may happen in the future, while a descriptive statistic indicates what is happening in the present, but these methods ultimately focus on producing a number. Taking action to bring about a desired change in an environment requires more than a number. It’s not enough to predict a metric of interest. Businesses want to use numbers to make decisions. In other words, businesses want causal stories. They want to know why a metric is the way it is, and how their actions can move that metric in a desired direction. The problem is that classic statistics and data science fall short in pursuit of answers to these questions.

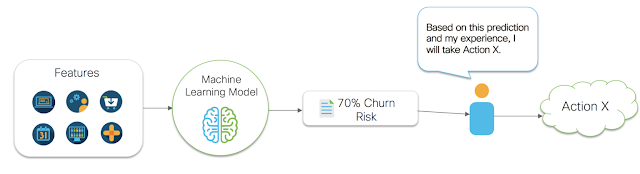

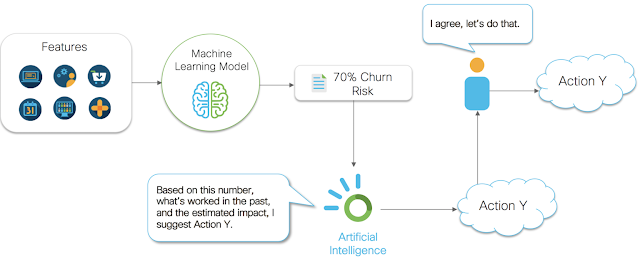

Take the example diagram shown in Figure 1, which illustrates a very common business problem: predicting the risk of a customer churning. For this problem, a data scientist may gather many pieces of data (features) about a customer and then build a predictive model. Once a model is developed, it is deployed as a continually running insight service and integrated into a business process. In this case, let’s say we have a renewal manager who wants to use these insights. The business process is as follows. First, the deployed insight service gathers data about the customer. It then passes that data to the predictive model. The predictive model outputs a predicted churn risk number. This number is then passed to the renewal manager. The renewal manager then uses their gut intuition to determine what action to take to reduce the risk of churn. This all seems straightforward enough. However, we’ve broken the chain of being data driven. How is that, you ask? Well, our data-driven business process stopped at the point of generating our churn risk number. We simply gave our churn risk number to a human, and they used their gut intuition to make a decision. This isn’t data-driven decision making; this is gut-driven decision making. It’s a subtle thing to notice, so don’t feel too bad if you didn’t see it at first. In fact, most people don’t recognize this subtlety. That’s because it’s so natural these days to think that getting a number to a human is how making ‘data driven decisions’ works. The subtlety exists because we are not using data and statistical methods to evaluate the impact of actions the human can take on the metric they care about. A human sees a number or a graph, and then *decides* to take *action*. This implies they have an idea about how their *action* will *affect* the number or graph that they see. Thus, they are making a cause-and-effect judgement about their decisions and actions. Yet they aren’t using any sort of mathematical method to evaluate their options. They are simply using their personal judgement to make a decision. What can end up happening in this case is that a human sees a number, makes a decision, and ends up making that number worse.

Let’s take the churn risk example again. Let’s say the customer is 70% likely to churn and that they were likely to churn because their experience with the service was poor, but assume that the renewal manager doesn’t know this (this too is actually a cause and effect statement). Let’s also say that a renewal manager sends a specially crafted renewal email to this customer in an attempt to reduce the likelihood of churn. That seems like a reasonable action to take, right? However, this customer receives the email, and is reminded of how bad their experience was, and is now even more annoyed with our company. Suddenly the likelihood to churn increases to 90% for this customer. If we had taken no action, or possibly a different action (say connecting them with digital support resources) then we would have been better off. But without an analysis of cause and effect, and without systems that can analyze our actions and prescribe the best ones to take, we are gambling with the metrics we care about.
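A cause-and-effect analysis estimates how each possible action moves the metric before anyone picks an action. The following minimal Python sketch uses one standard way to get such estimates, a randomized experiment (A/B test). At-risk accounts are randomly assigned an action, and churn is recorded afterwards. This technique is named here for illustration and is not necessarily the approach the author outlines. The action names and trial records are hypothetical. The no-action and renewal-email arms are built to mirror the 70% and 90% figures above, and the digital-support arm is invented.

```python
from collections import defaultdict


def churn_rate_by_action(records):
    """records: iterable of (action, churned) pairs from a randomized trial.

    Because actions were assigned at random, the churn rate in each arm is an
    unbiased estimate of the churn rate that action would lead to.
    """
    counts = defaultdict(lambda: [0, 0])  # action -> [churned, total]
    for action, churned in records:
        counts[action][0] += int(churned)
        counts[action][1] += 1
    return {action: churned / total for action, (churned, total) in counts.items()}


def recommend(records):
    """Return the action with the lowest estimated churn rate, plus all rates."""
    rates = churn_rate_by_action(records)
    return min(rates, key=rates.get), rates


# Hypothetical trial: 10 accounts per arm.
trial = (
    [("no_action", True)] * 7 + [("no_action", False)] * 3
    + [("renewal_email", True)] * 9 + [("renewal_email", False)] * 1
    + [("digital_support", True)] * 5 + [("digital_support", False)] * 5
)
best, rates = recommend(trial)
```

Here `best` is `"digital_support"`, and the email arm is shown to make churn worse rather than better. With only ten accounts per arm this is a toy example. A real trial would need far larger samples and a significance test before it drives decisions.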