Tuesday, 7 March 2023

ACI Segmentation and Migrations made easier with Endpoint Security Groups (ESG)

Sunday, 29 May 2022

Enabling Scalable Group Policy with TrustSec Across Networks to Provide More Reliability and Determinism

Cisco TrustSec provides software-defined access control and network segmentation to help organizations enforce business-driven intent and streamline policy management across network domains. It forms the foundation of Cisco Software-Defined Access (SD-Access) by providing a policy enforcement plane based on Security Group Tag (SGT) assignments and dynamic provisioning of the security group access control list (SGACL).

Cisco TrustSec has now been enhanced by Cisco engineers with a broader, cross-domain transport option for network policies. It relies on HTTPS, a Representational State Transfer (REST) API, and the JSON data interchange format to deliver more reliable and scalable policy updates and segmentation, making networks more deterministic. It is a better choice than the current use of RADIUS over User Datagram Protocol (UDP), which is prone to packet drops and retries that degrade performance and service guarantees.

Scaling Policy

Cisco SD-Access, Cisco SD-WAN, and Cisco Application Centric Infrastructure (ACI) have been integrated to provide enterprise customers with a consistent cross-domain business policy experience. This necessitated a more reliable and deterministic TrustSec infrastructure to meet the increasing scale of SGTs and SGACL policies, combined with high-performance requirements and seamless policy provisioning and updates followed by assured enforcement.

With increased scale, two things are required of policy systems.

◉ A more reliable SGACL provisioning mechanism. RADIUS/UDP transport is inefficient for large volumes of data. It often results in a higher number of round-trip retries due to dropped packets and longer transport times between devices and the Cisco Identity Services Engine (ISE) server. The approach is error-prone and verbose.

◉ Determinism for policy updates. TrustSec uses the RADIUS change of authorization (CoA) mechanism to dynamically notify devices of changes to SGACL policy and environmental data (Env-Data). Devices respond with a request to ISE to update the specified change. These are two seemingly disparate but related transaction flows with the common intent to deliver the latest policy data to the devices. In scenarios where there are many devices or a high volume of updates, there is a higher risk of packet loss and out-of-order delivery, and it is often challenging to correlate the success or failure of such administrative changes.
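To illustrate why a single request/response exchange carrying a JSON document is easier to correlate than a notification followed by a separate refresh, here is a minimal sketch. The JSON field names (`generation`, `sgacls`, `src_sgt`, `dst_sgt`, `action`) and the function `apply_policy_update` are hypothetical and are not taken from the Cisco ISE API; the point is only that a versioned document lets a device decide deterministically whether an update was applied.

```python
import json

# Hypothetical JSON policy document. The field names are illustrative
# assumptions, not the actual Cisco ISE schema.
SAMPLE_RESPONSE = json.dumps({
    "generation": 42,
    "sgacls": [
        {"src_sgt": 10, "dst_sgt": 20, "action": "permit"},
        {"src_sgt": 10, "dst_sgt": 30, "action": "deny"},
    ],
})


def apply_policy_update(current_generation, response_body):
    """Return (new_generation, rules) if the document is newer, else None."""
    doc = json.loads(response_body)
    if doc["generation"] <= current_generation:
        # Stale or duplicate update: nothing to apply.
        return None
    # Index the rules by (source SGT, destination SGT) for fast lookup.
    rules = {(r["src_sgt"], r["dst_sgt"]): r["action"] for r in doc["sgacls"]}
    return doc["generation"], rules


result = apply_policy_update(41, SAMPLE_RESPONSE)
```

Because the whole policy arrives in one document with one version number, the outcome of an update is either "applied generation N" or "nothing to do", which is the kind of determinism the second requirement asks for.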

More Performant, Scalable, and Secure Transport for Policy

The new transport option for Cisco TrustSec is based on a system of central administration and distributed policy enforcement, with Cisco DNA Center, Cisco Meraki Enterprise Cloud, or Cisco vManage used as a controller dashboard and Cisco ISE serving as the service point for network devices to source SGACL policies and Env-Data (Figure 1).

Figure 1 shows the Cisco SD-Access deployment architecture depicting a mix of both old and newer software versions and policy transport options.

Tuesday, 1 February 2022

Application-centric Security Management for Nexus Dashboard Orchestrator (NDO)

Nexus Dashboard Orchestrator (NDO) users can achieve policy-driven Application-centric Security Management (ASM) with AlgoSec

AlgoSec ASM A32 is AlgoSec’s latest release to feature a major technology integration, built upon a well-established collaboration with Cisco. It brings this partnership to the front of the Cisco innovation cycle with support for Cisco Nexus Dashboard Orchestrator (NDO). NDO allows Cisco ACI, and legacy-style Data Center Network Management, to operate at scale in a global context, across data center and cloud regions. The AlgoSec solution with NDO brings the power of intelligent automation and software-defined security features for ACI, including planning, change management, and micro-segmentation, to global scope. There are multiple use cases, enabling application-centric operation and micro-segmentation, and delivering integrated security operations workflows. AlgoSec now brings support for EPG and Inter-Site Contracts with NDO, boosting its existing ACI integration.

Let’s Change the World by Intent

Since its 2014 introduction, Cisco ACI has changed the landscape of data center networking by introducing an intent-based approach, over earlier configuration-centric architecture models. This opened the way for accelerated movement by enterprise data centers to meet their requirements for internal cloud deployments, new DevOps and serverless application models, and the extension of these to public clouds for hybrid operation – all within a single networking technology that uses familiar switching elements. Two new, software-defined artifacts make this possible in ACI: End-Point Groups (EPG) and Contracts – individual rules that define characteristics and behavior for an allowed network connection.
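As a rough illustration of how EPGs and Contracts express intent, the sketch below models a contract as a rule that permits one kind of connection between two groups, with anything not covered by a contract being refused. The class `Contract`, the function `is_allowed`, and the EPG names are hypothetical and greatly simplified compared with the real ACI object model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Contract:
    """One allowed connection between a consumer EPG and a provider EPG."""
    consumer_epg: str
    provider_epg: str
    protocol: str
    port: int


def is_allowed(contracts, src_epg, dst_epg, protocol, port):
    """Traffic is allowed only if a matching contract exists."""
    return Contract(src_epg, dst_epg, protocol, port) in contracts


# Illustrative three-tier application: web -> app -> db.
contracts = {
    Contract("web", "app", "tcp", 8080),
    Contract("app", "db", "tcp", 5432),
}

web_to_app = is_allowed(contracts, "web", "app", "tcp", 8080)
web_to_db = is_allowed(contracts, "web", "db", "tcp", 5432)
```

Here the web tier can reach the app tier, but it cannot reach the database directly, because no contract describes that connection. The endpoints' addresses never appear in the policy; only group membership does.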

ACI Is Great, NDO Is Global

That’s really where NDO comes into the picture. By now, we have an ACI-driven data center networking infrastructure, with management redundancy for the availability of applications and preserving their intent characteristics. Using an infrastructure built on EPGs and contracts, we can reach from the mobile and desktop to the data center and the cloud. This means our next barrier is the sharing of intent-based objects and management operations beyond the confines of a single data center. We want to do this without clustering approaches that depend on the availability of individual controllers and run into other limits for availability and oversight.

Instead of labor-intensive and error-prone duplication of data center networks and security in different regions, and for different zones of cloud operation, NDO introduces “stretched” EPGs, and inter-site contracts, for application-centric and intent-based, secure traffic which is agnostic to global topologies – wherever your users and applications need to be.

Having added NDO capability to the formidable, shared platform of AlgoSec and Cisco ACI, region-wide and global policy operations can be executed in confidence with intelligent automation. AlgoSec makes it possible to plan for operations of the Cisco NDO scope of connected fabrics to be application-centric and enables unlocking the ACI super-powers for micro-segmentation. This enables a shared model between networking and security teams for zero-trust and defense-in-depth, with accelerated, global-scope, secure application changes at the speed of business demand — within minutes, rather than days or weeks.

Key Use Cases

Change management — For security policy change management this means that workloads may be securely re-located from on-premises to public cloud, under a single and uniform network model and change-management framework — ensuring consistency across multiple clouds and hybrid environments.

Visibility: With an NDO-enabled ACI networking infrastructure and AlgoSec’s ASM, all connectivity can be visualized at multiple levels of detail, across an entire multi-vendor, multi-cloud network. This means that individual security risks can be directly correlated to the assets that are impacted, giving a full understanding of how security controls affect an application’s availability.

Risk and Compliance — It’s possible across all the NDO connected fabrics to identify risk on-premises and through the connected ACI cloud networks, including additional cloud-provider security controls. The AlgoSec solution makes this a self-documenting system for NDO, with detailed reporting and an audit trail of network security changes, related to original business and application requests. This means that you can generate automated compliance reports, supporting a wide range of global regulations, and your own, self-tailored policies.

The Road Ahead

Cisco NDO is a major technology innovation and AlgoSec and Cisco are delighted and enthusiastic about our early adoption customers. Based on early reports with our Cisco partners, needs will arise for more automation, which would include the “zero-touch” push for policy changes – committing EPG and Inter-site Contract changes to the orchestrator, as we currently do for ACI and APIC. Feedback will also shape a need for automation playbooks and workflows that are most useful in the NDO context, and that we can realize with a full committable policy by the ASM Firewall Analyzer.

Source: cisco.com

Tuesday, 5 October 2021

Using Infrastructure as Code to deploy F5 Application Delivery and Cisco ACI Service Chaining

Every data center is built to host applications and provide the required infrastructure for the applications to run, communicate with each other, be accessed by their users from anywhere, and scale on demand.

To achieve this, your data center network must be able to provide different types of connectivity to different applications. This includes east-west connectivity between application tiers, as well as north-south connectivity between users and applications. Both rely on additional application delivery Layer 4 to Layer 7 services like load balancers and web application firewalls.

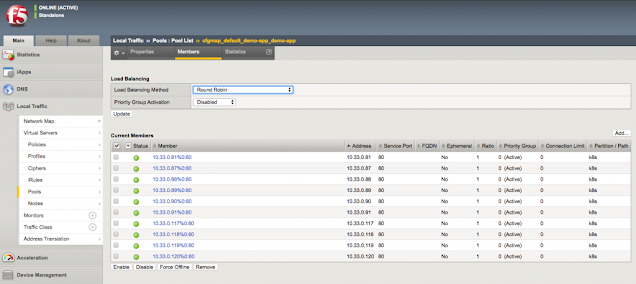

Cisco ACI and F5 BIG-IP Service Insertion

Cisco ACI’s powerful L4-L7 services redirection capabilities will allow you to insert services and redirect traffic from the source to the destination anywhere in your fabric without needing to change any of the existing cabling. This is where you can insert F5 BIG-IP load balancer, to provide application availability, access control, and security.

This is possible using the Policy Based Redirection (PBR) capabilities of the Cisco ACI fabric by configuring a Service Graph in APIC.

But PBR policies and Service Graphs entail a series of manual configuration steps. This can be tedious, error-prone, and inefficient, especially if the same configuration is repeated often. On top of that, the configuration of the BIG-IP service itself requires information from the Cisco ACI Service Graph.

Simplified Service Insertion with Cisco and F5

This is why Cisco partnered with F5, a leader in the application delivery and web application firewall space, around the Cisco ACI and F5 BIG-IP solutions: to simplify the deployment of F5-powered L4-L7 services using the F5 ACI ServiceCenter App for APIC.

End-to-End Service Insertion Automation with Infrastructure as Code

Saturday, 11 September 2021

Introducing Success Track for Data Center Network

The pace of digital transformation has accelerated. In the past year and a half, business models have changed, and there is a need for enterprises to quickly improve the time taken to launch new products or services. The economic uncertainty has forced enterprises to reduce their overall spending and focus more on improving their operational efficiencies. As the pandemic has changed the way the world does business, organizations have been quick to pivot to digital mediums, as their survival depends on it. The results of a Gartner survey published in November 2020 highlight this rapid shift. 76% of CIOs reported increased demand for new digital products and services and 83% expected that to increase further in 2021, according to the Gartner CIO Agenda 2021.

Today, more than ever, IT operations are being asked to manage complex IT infrastructure. Coupled with rising volumes of data, this makes it more difficult for IT teams to manage today’s dynamic, constantly changing data center environments. Automation is clearly the need of the hour and automation enabled by AI will play a huge role. Hyperscalers are leading the way in using AI for IT operations and are increasingly setting the trend that will see AI being embedded in every component of IT. Powered by AI, hyperscalers are quickly defining the future of IT, from self-healing infrastructure to databases that can recover quickly in the event of a failure or networks that can automatically configure and re-configure without any human intervention.

Cisco Application Centric Infrastructure (ACI) is a software-defined networking (SDN) solution designed for data centers. Cisco ACI allows network infrastructure to be defined based upon network policies and facilitates automated network provisioning – simplifying, optimizing, and accelerating the application deployment lifecycle.

To minimize the effort of managing your data center networks, Cisco has created Success Track for Data Center Network, a new innovative service offering. We want to help you simplify and remove roadblocks. Success Tracks provides coaching and insights at every step of your lifecycle journey.

Saturday, 14 August 2021

How To Simplify Cisco ACI Management with Smartsheet

Have you ever gotten lost in the APIC GUI while trying to configure a feature? Or maybe you are tired of going over the same steps again and again when changing an ACI filter or a contract? Or maybe you have always asked yourself how you can integrate APIC with other systems such as an IT ticketing or monitoring system to improve workflows and make your ACI fabric management life easier. Whatever the case may be, if you are interested in finding out how to create your own GUI for ACI, streamline and simplify APIC GUI configuration steps using smartsheets, and see how extensible and programmable an ACI fabric is, then read on.

Innovations that came with ACI

I have always been a fan of Cisco ACI (Application Centric Infrastructure). Coming from a routing and switching background, my mind was blown when I started learning about ACI. The SDN implementation for data centers from Cisco, ACI, took almost everything I thought I knew about networking and threw it out the window. I was in awe at the innovations that came with ACI: OpFlex, declarative control, End-Point Groups (EPGs), application policies, fabric auto discovery, and so many more.

The holy grail of networking

It felt to me like a natural evolution of classical networking from VLANs and mapped layer-3 subnets into bridge domains and subnets and VRFs. It took a bit of time to wrap my head around these concepts and building underlays and overlays but once you understand how all these technologies come together it almost feels like magic. The holy grail of networking is at this point within reach: centrally defining a set of generic rules and policies and letting the network do all the magic and enforce those policies all throughout the fabric at all times no matter where and how the clients and end points are connecting to the fabric. This is the premise that ACI was built on.

Automating common ACI management activities

So you can imagine that when my colleague, Jason Davis (@snmpguy), came up with a proposal to migrate several ACI use cases from Action Orchestrator to full-blown Python code, I was up for the challenge. Jason and several AO folks have worked closely with Cisco customers to automate and simplify common ACI management workflows. We decided to focus on eight use cases for the first release of our application:

◉ Deploy an application

◉ Create static path bindings

◉ Configure filters

◉ Configure contracts

◉ Associate EPGs to contracts

◉ Configure policy groups

◉ Configure switch and interface profiles

◉ Associate interfaces to policy groups

Using the online smartsheet REST API

You might recognize these as being common ACI fabric management activities that a data center administrator would perform day in and day out. As the main user interface for gathering data we decided to use online smartsheets. Similar to ACI APIC, the online smartsheet platform provides an extensive REST API interface that is just ripe for integrations.

The plan was pretty straightforward:

1. Use smartsheets with a bit of JavaScript and CSS as the front-end components of our application

2. Develop a Python back end that would listen for smartsheet webhooks triggered whenever there are saved Smartsheet changes

3. Process this input data and, based on it, create and trigger Ansible playbooks that perform the configuration changes corresponding to each use case

4. Provide a pass/fail status back to the user.
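The plan above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the event shape (`use_case`, `row`), the playbook file names, and the functions `build_playbook_command` and `handle_event` are hypothetical and do not reflect the actual Smartsheet webhook payload or the published application. The playbook is not executed here; a `runner` callable is passed in so the flow can be exercised without Ansible installed.

```python
import json

# Hypothetical mapping from a use case to its Ansible playbook file.
PLAYBOOKS = {
    "Configure filters": "configure_filters.yml",
    "Configure contracts": "configure_contracts.yml",
}


def build_playbook_command(event):
    """Translate one saved-row event into an ansible-playbook command line."""
    playbook = PLAYBOOKS.get(event["use_case"])
    if playbook is None:
        return None
    # Row values are handed to the playbook as JSON extra variables.
    extra_vars = json.dumps(event["row"], sort_keys=True)
    return ["ansible-playbook", playbook, "--extra-vars", extra_vars]


def handle_event(event, runner):
    """Run the matching playbook and report pass/fail back to the caller."""
    command = build_playbook_command(event)
    if command is None:
        return "fail"
    # runner returns a process exit code; 0 means the playbook succeeded.
    return "pass" if runner(command) == 0 else "fail"


event = {"use_case": "Configure filters", "row": {"tenant": "demo", "filter": "http"}}
status = handle_event(event, runner=lambda command: 0)
```

In a real back end, `runner` could wrap `subprocess.run(command).returncode`, and the returned status would be written back to the sheet for the user.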

Find the details on DevNet Automation Exchange

Tuesday, 22 June 2021

Power of Cloud Application Centric Infrastructure (Cloud ACI) in Service Chaining

It is a reality that most enterprise customers are moving from a private data center model to a hybrid multi-cloud model. They are either moving some of their existing applications or developing newer applications in a cloud-native way to deploy in the public clouds. Customers are wary about sticking to just a single public cloud provider for fear of vendor lock-in. Hence, we are seeing a very high percentage of customers adopting a multi-cloud strategy. According to the Flexera 2021 State of the Cloud Report, this number stands at 92%. While a multi-cloud model gives customers flexibility and better disaster recovery and helps with compliance, it also comes with a number of challenges. Customers have to learn not just one, but all of the different public cloud nuances and implementations.

Navigating the different islands of public cloud

Load Balancers and More!

Unleash the power of service chaining

Thursday, 8 April 2021

Designing Fault Tolerant Data Centers of the Future

System crashes. Outages. Downtime.

These words send chills down the spines of network administrators. When business apps go down, business leaders are not happy. And the cost can be significant.

Recent IDC survey data shows that enterprises experience two cloud service outages per year. IDC research conservatively puts the average cost of downtime for enterprises at $250,000/hour, which means just four hours of downtime can cost an enterprise $1 million.

To respond to failures as quickly as possible, network administrators need a highly scalable, fault tolerant architecture that is simple to manage and troubleshoot.

What’s Required for the Always On Enterprise

Let’s examine some of the key technical capabilities required to meet the “always-on” demand that today’s businesses face. There is a need for:

1. Granular change control mechanisms that facilitate flexible and localized changes, driven by availability models, so that the blast radius of a change is contained by design and intent.

2. Always-on availability to help enable seamless handling and disaster recovery, with failover of infrastructure from one data center to another, or from one data center to a cloud environment.

3. Operational simplicity at scale for connectivity, segmentation, and visibility from a single pane of glass, delivered in a cloud operational model, across distributed environments—including data center, edge, and cloud.

4. Compliance and governance that correlate visibility and control across different domains and provide consistent end-to-end assurance.

5. Policy-driven automation that improves network administrators’ agility and provides control to manage a large-scale environment through a programmable infrastructure.

Typical Network Architecture Design: The Horizontal Approach

With businesses required to be “always on” and closer to users for performance considerations, there is a need to deploy applications in a very distributed fashion. To accomplish this, network architects create distributed mechanisms across multiple data centers. These are on-premises and in the cloud, and across geographic regions, which can help to mitigate the impact of potential failures. This horizontal approach works well by delivering physical layer redundancy built on autonomous systems that rely on a do-it-yourself approach for different layers of the architecture.

However, this design inherently imposes an over-provisioning of the infrastructure, along with an inability to express intent and a lack of coordinated visibility through a single pane of glass.

Some on-premises providers also have marginal fault isolation capabilities and limited-to-no capabilities or solutions for effectively managing multiple data centers.

For example, consider what happens when one data center, or part of the data center, goes down under this horizontal design approach. The typical response is to fix the issue in place, which increases the time it takes to restore the application, whether through application redundancy or availability.

This is not an ideal situation in today’s fast-paced, work-from-anywhere world that demands resiliency and zero downtime.

The Hierarchical Approach: A Better Way to Scale and Isolate

Today’s enterprises rely on software-defined networking and flexible paradigms that support business agility and resiliency. But we live in an imperfect world full of unpredictable events. Is the public cloud down? Do you have a switch failure? Spine switch failure? Or even worse, a whole cluster failure?

Now, imagine a fault-tolerant data center that automatically restores systems after a failure. This may sound like fiction to you but with the right architecture it can be your reality today.

A fault-tolerant data center architecture can survive and provide redundancy across your data center landscapes. In other words, it provides the ultimate in business resiliency, making sure applications are always on, regardless of failure.

The architecture is designed with a multi-level, hierarchical controller cluster that delivers scalability, meets the availability needs of each fault domain, and creates intent-driven policies. This architecture involves several key components:

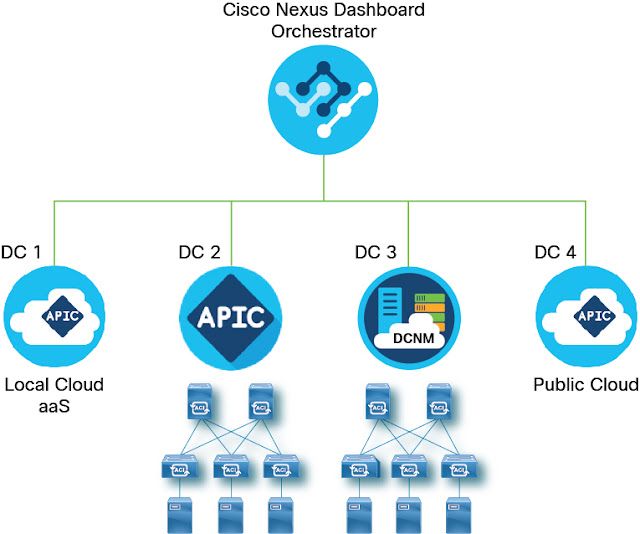

1. A multi-site orchestrator that pushes high-level policy to the local data center controller (also referred to as a domain controller) and delivers the separation of fault domains and the scale businesses require for global governance, with resiliency and federation of data center networks.

2. A data center controller/domain controller that operates both on-premises and in the cloud and creates intent-based policies, optimized for local domain requirements.

3. Physical switches with leaf-spine topology for deterministic performance and built-in availability.

4. SmartNIC and Virtual Switches that extend network connectivity and segmentation to the servers, further delivering an intent-driven, high-performing architecture that is closer to the workload.
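The separation of fault domains described in the first two components can be sketched as follows. The classes `DomainController` and `MultiSiteOrchestrator`, the site names, and the policy content are hypothetical and are not the API of any Cisco product; the sketch only shows that a failure in one domain does not stop the orchestrator from delivering policy to the others.

```python
class DomainController:
    """Local controller for one fault domain (a data center or cloud site)."""

    def __init__(self, name, healthy=True):
        self.name = name
        self.healthy = healthy
        self.policies = {}

    def apply(self, policy_name, policy):
        if not self.healthy:
            raise ConnectionError(f"{self.name} is unreachable")
        self.policies[policy_name] = policy


class MultiSiteOrchestrator:
    """Pushes high-level policy to every domain controller independently."""

    def __init__(self, controllers):
        self.controllers = controllers

    def push(self, policy_name, policy):
        results = {}
        for controller in self.controllers:
            try:
                controller.apply(policy_name, policy)
                results[controller.name] = "applied"
            except ConnectionError:
                # A failed domain is recorded but does not block the others.
                results[controller.name] = "failed"
        return results


east = DomainController("dc-east")
west = DomainController("cloud-west", healthy=False)
orchestrator = MultiSiteOrchestrator([east, west])
results = orchestrator.push("web-to-app", {"allow": "tcp/8080"})
```

Each domain controller keeps its own copy of the policy, so a site that received it can keep enforcing it even if the orchestrator or another site becomes unavailable later.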

Thursday, 4 March 2021

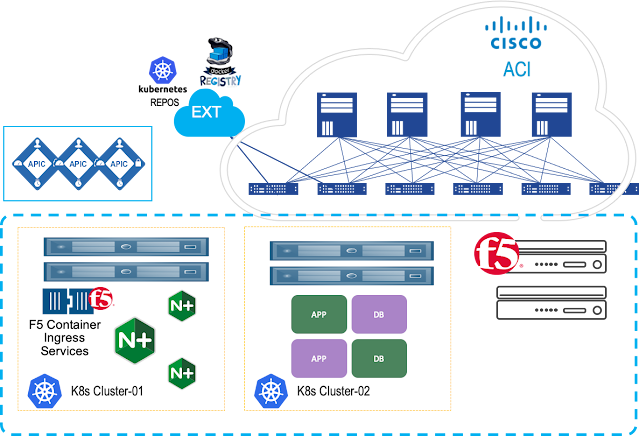

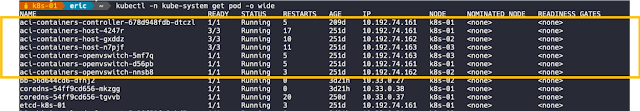

Enable Consistent Application Services for Containers

Kubernetes is all about abstracting away complexity. As Kubernetes continues to evolve, it becomes more intelligent and more powerful in helping enterprises manage their data centers, not just the cloud. While enterprises have had to deal with the challenges of managing different types of modern applications (AI/ML, big data, and analytics) to process their data, they also face the challenge of maintaining top-level network and security policies and gaining better control of the workload to ensure operational and functional consistency. This is where Cisco ACI and F5 Container Ingress Services come into the picture.

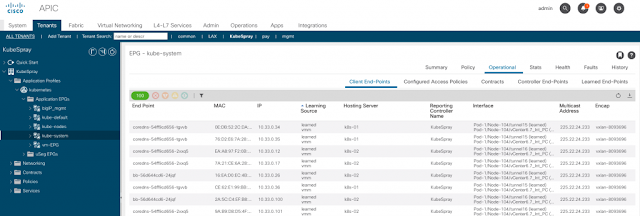

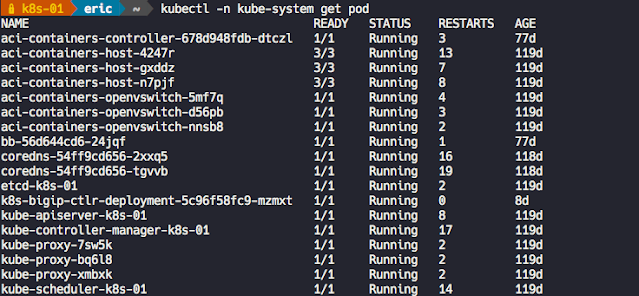

F5 Container Ingress Services (CIS) and Cisco ACI

Cisco ACI offers these customers an integrated network fabric for Kubernetes. Recently, F5 and Cisco joined forces by integrating F5 CIS with Cisco ACI to bring L4-7 services into the Kubernetes environment, to further simplify the user experience in deploying, scaling, and managing containerized applications. This integration specifically enables:

◉ Unified networking: Containers, VMs, and bare metal

◉ Secure multi-tenancy and seamless integration of Kubernetes network policies and ACI policies

◉ A single point of automation with enhanced visibility for ACI and BIG-IP.

◉ F5 Application Services natively integrated into Container and Platform as a Service (PaaS) Environments

One of the key benefits of such an implementation is ACI encapsulation normalization. The ACI fabric, as the normalizer for the encapsulation, allows you to merge different network technologies or encapsulations, be it VLAN or VXLAN, into a single policy model. Through a simple VLAN connection to ACI, with no need for an additional gateway, BIG-IP can communicate with any service anywhere.
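A minimal sketch of the idea behind encapsulation normalization: whatever encapsulation a workload arrives with, the fabric maps it to a policy group, and policy is then evaluated on the group alone. The table `ENCAP_TO_EPG`, the function `classify`, and the VLAN and VXLAN identifiers are hypothetical values chosen for illustration.

```python
# Hypothetical mapping from (encapsulation type, identifier) to a policy group.
ENCAP_TO_EPG = {
    ("vlan", 100): "web",     # bare-metal or VM workload on a VLAN
    ("vxlan", 5100): "web",   # container workload on a VXLAN segment
    ("vlan", 200): "bigip",   # BIG-IP attached through a simple VLAN
}


def classify(encap_type, encap_id):
    """Return the policy group for incoming traffic, or None if unknown."""
    return ENCAP_TO_EPG.get((encap_type, encap_id))


vlan_group = classify("vlan", 100)
vxlan_group = classify("vxlan", 5100)
```

Both lookups land in the same group, so a rule written once for that group covers workloads on either encapsulation.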