Tuesday, 14 May 2024

Optimizing business velocity with Cisco Full-Stack Observability

Wednesday, 6 September 2023

Taming AI Frontiers with Cisco Full-Stack Observability Platform

The Generative AI Revolution: A Rapidly Changing Landscape

Kubernetes Observability

Infrastructure Cloudscape

Beyond Clouds

Bridging the Gaps with Cisco Full-Stack Observability

Friday, 28 October 2022

Cisco Announces Open Source Cloud-Native Offerings for Securing Modern Applications

OpenClarity is a trio of projects

Building the Application-First Future

Cisco Leading in Open Source

Join Us at KubeCon in Detroit

Friday, 26 August 2022

Service Chaining VNFs with Cloud-Native Containers Using Cisco Kubernetes

To support edge use cases such as distributed IoT ecosystems and data-intensive applications, IT needs to deploy processing closer to where data is generated instead of backhauling data to a cloud or to the campus data center. A hybrid workforce and cloud-native applications are also pushing applications from centralized data centers to the edges of the enterprise. These new generations of application workloads are being distributed across containers and across multiple clouds.

Network Functions Virtualization (NFV) focuses on decoupling individual services (such as routing, security, and WAN acceleration) from the underlying hardware platform. Running these network functions inside virtual machines increases deployment flexibility in the network. NFV enables automation and rapid deployment of networking functions through service chaining, which significantly reduces network OpEx. The capabilities described in this post extend service chaining of Virtual Network Functions (VNFs) in Cisco Enterprise Network Function Virtualization Infrastructure Software (NFVIS) to cloud-native applications and containers.
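As a rough illustration of the service-chaining idea, the sketch below passes a packet through an ordered list of network functions, each of which may modify or drop it. This is a toy model only: the `Packet` type, the three functions, and the blocked address are hypothetical and are not part of NFVIS or any Cisco API.

```python
import zlib
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Packet:
    src: str
    dst: str
    payload: bytes
    trace: List[str] = field(default_factory=list)  # functions visited so far


# A network function returns the (possibly modified) packet, or None to drop it.
NetworkFunction = Callable[[Packet], Optional[Packet]]

BLOCKED_DST = "10.0.0.66"  # hypothetical address used only for this example


def firewall(packet: Packet) -> Optional[Packet]:
    packet.trace.append("firewall")
    return None if packet.dst == BLOCKED_DST else packet


def wan_accelerator(packet: Packet) -> Optional[Packet]:
    packet.trace.append("wan-accel")
    packet.payload = zlib.compress(packet.payload)  # stand-in for WAN optimization
    return packet


def router(packet: Packet) -> Optional[Packet]:
    packet.trace.append("router")
    return packet


def run_chain(chain: List[NetworkFunction], packet: Packet) -> Optional[Packet]:
    """Apply each function in order; stop as soon as one drops the packet."""
    current: Optional[Packet] = packet
    for function in chain:
        current = function(current)
        if current is None:
            return None
    return current


chain = [firewall, wan_accelerator, router]
delivered = run_chain(chain, Packet("10.0.0.1", "10.0.0.2", b"hello" * 10))
dropped = run_chain(chain, Packet("10.0.0.1", BLOCKED_DST, b"x"))
```

Because the chain is just an ordered list, reordering or inserting a function changes the service path without touching the functions themselves, which is the property that makes chaining easy to automate.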

Cisco NFVIS provides software interfaces through a built-in local portal, Cisco vManage, REST and NETCONF APIs, and CLIs. You can learn more about NFVIS at the following resources:

◉ Virtual Network Functions lifecycle management

◉ Secure Tunnel and Sharing of IP with VNFs

◉ Route distribution through BGP: The NFVIS system can learn routes announced by a remote BGP neighbor and apply them locally, and it can announce NFVIS local routes to that neighbor or withdraw them.

◉ Security is embedded from installation through all software layers, including credential management, integrity and tamper protection, session management, and secure device access.

◉ Clustering combines nodes into a single cluster definition.

◉ Third-party VNFs are supported through the Cisco VNF Certification Program.
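The route-distribution behavior in the list above (learning a neighbor's routes, applying them, and announcing or withdrawing local routes) can be pictured with a small bookkeeping model. The sketch below is an assumption-laden illustration, not the BGP protocol and not an NFVIS interface: the `RouteTable` class and its method names are hypothetical, and the addresses come from documentation ranges.

```python
import ipaddress
from typing import Dict, Optional, Set


class RouteTable:
    """Toy model: routes learned from a neighbor plus locally announced prefixes."""

    def __init__(self) -> None:
        self.learned: Dict[ipaddress.IPv4Network, ipaddress.IPv4Address] = {}
        self.announced: Set[ipaddress.IPv4Network] = set()

    def learn(self, prefix: str, next_hop: str) -> None:
        # The neighbor announced a route; apply it to the local table.
        self.learned[ipaddress.ip_network(prefix)] = ipaddress.ip_address(next_hop)

    def forget(self, prefix: str) -> None:
        # The neighbor withdrew a route it had announced earlier.
        self.learned.pop(ipaddress.ip_network(prefix), None)

    def announce(self, prefix: str) -> None:
        # Advertise a local route to the neighbor.
        self.announced.add(ipaddress.ip_network(prefix))

    def withdraw(self, prefix: str) -> None:
        # Stop advertising a local route.
        self.announced.discard(ipaddress.ip_network(prefix))

    def lookup(self, destination: str) -> Optional[ipaddress.IPv4Address]:
        """Return the next hop of the longest matching learned prefix, if any."""
        address = ipaddress.ip_address(destination)
        matches = [net for net in self.learned if address in net]
        if not matches:
            return None
        return self.learned[max(matches, key=lambda net: net.prefixlen)]


table = RouteTable()
table.learn("10.1.0.0/16", "192.0.2.1")
table.learn("10.1.2.0/24", "192.0.2.2")
table.announce("172.16.0.0/24")
```

A lookup for `10.1.2.5` prefers the more specific `/24` route; if the neighbor later withdraws that prefix, the same lookup falls back to the `/16`.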

Integrate Cloud-Native Applications with Cisco Kubernetes

Collaborative Tools to Simplify Cloud Native Container Applications

Tuesday, 17 May 2022

Network Service Mesh Simplifies Multi-Cloud / Hybrid Cloud Communication

Kubernetes networking is, for the most part, intra-cluster. It enables communication between pods within a single cluster:

The most fundamental service Kubernetes networking provides is a flat L3 domain: Every pod can reach every other pod via IP, without NAT (Network Address Translation).

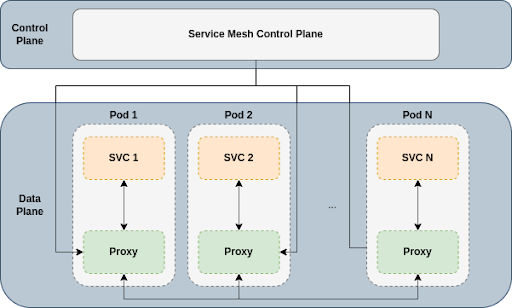
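One way to picture the flat L3 domain is as a single cluster-wide address range carved into per-node pod subnets, so that every pod address is unique and directly routable. The sketch below models that idea with Python's standard `ipaddress` module. The cluster CIDR, node names, and helper functions are assumptions chosen for illustration; real clusters delegate address allocation to their network plugin.

```python
import ipaddress
from typing import Dict, List

# Hypothetical cluster-wide pod address range.
CLUSTER_CIDR = ipaddress.ip_network("10.244.0.0/16")


def allocate_node_subnets(
    cluster_cidr: ipaddress.IPv4Network, node_names: List[str], new_prefix: int = 24
) -> Dict[str, ipaddress.IPv4Network]:
    """Give each node its own slice of the cluster range, in order."""
    subnets = cluster_cidr.subnets(new_prefix=new_prefix)
    return {name: next(subnets) for name in node_names}


def pod_ip(node_subnet: ipaddress.IPv4Network, index: int) -> ipaddress.IPv4Address:
    """Return the index-th usable address in a node's pod subnet."""
    return node_subnet.network_address + index + 1


def reachable_without_nat(
    a: ipaddress.IPv4Address, b: ipaddress.IPv4Address
) -> bool:
    """In this model, two pods talk directly if both sit in the flat cluster range."""
    return a in CLUSTER_CIDR and b in CLUSTER_CIDR


node_subnets = allocate_node_subnets(CLUSTER_CIDR, ["node-a", "node-b"])
pod_a = pod_ip(node_subnets["node-a"], 0)
pod_b = pod_ip(node_subnets["node-b"], 4)
```

The two pods live on different nodes and in different subnets, yet each addresses the other by its own pod IP, with no translation step in between.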

The flat L3 domain is the building block upon which more sophisticated communication services, like Service Mesh, are built:

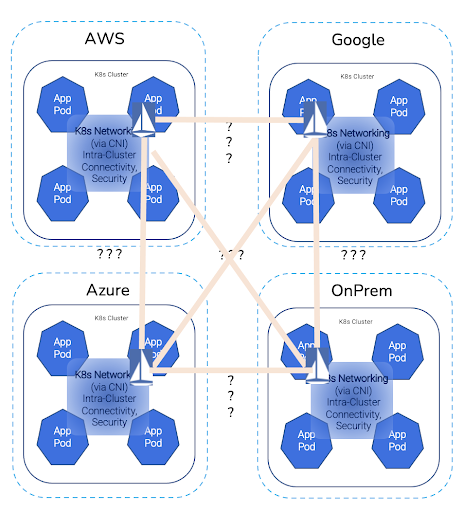

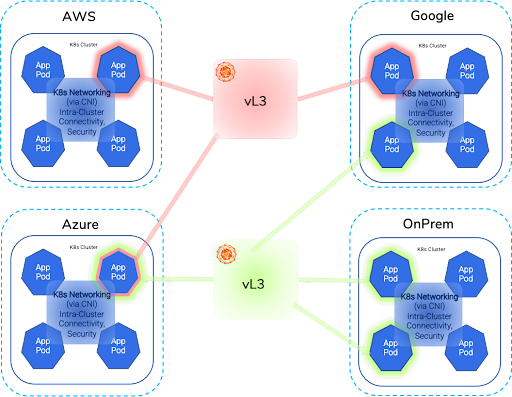

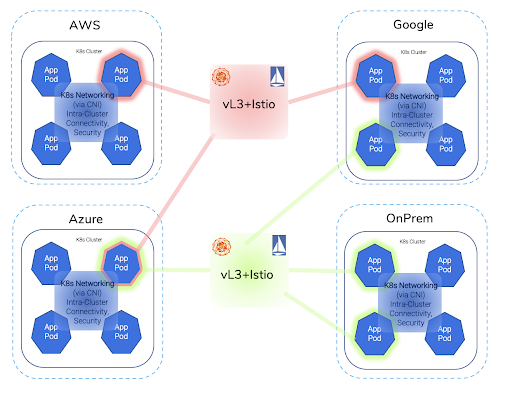

Multi-cluster communication

Network Service Mesh benefits

Tuesday, 26 April 2022

How To Do DevSecOps for Kubernetes

In this article, we’ll provide an overview of security concerns related to Kubernetes, looking at the built-in security capabilities that Kubernetes brings to the table.

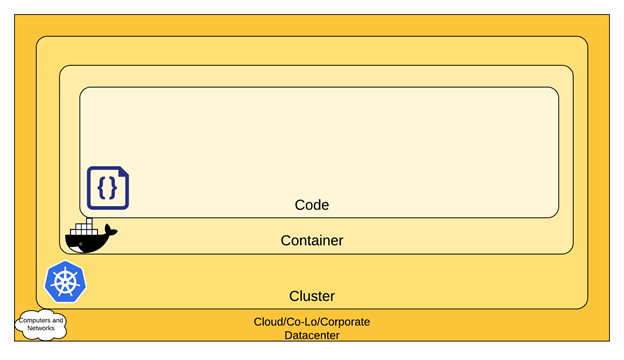

Kubernetes at the center of cloud-native software

Since Docker popularized containers, most non-legacy large-scale systems use containers as their unit of deployment, in both the cloud and private data centers. When dealing with more than a few containers, you need an orchestration platform for them. For now, Kubernetes is winning the container orchestration wars. Kubernetes runs anywhere and on any device—cloud, bare metal, edge, locally on your laptop or Raspberry Pi. Kubernetes boasts a huge and thriving community and ecosystem. If you’re responsible for managing systems with lots of containers, you’re probably using Kubernetes.

The Kubernetes security model

When running an application on Kubernetes, you need to ensure your environment is secure. The Kubernetes security model embraces a defense-in-depth approach and is structured in four layers, known as the 4Cs of Cloud-Native Security:

1. Cloud (or co-located servers or the corporate datacenter)

2. Cluster

3. Container

4. Code

Authentication

Authorization

Admission

Secrets management

Data encryption

Encryption at rest

Encryption in transit

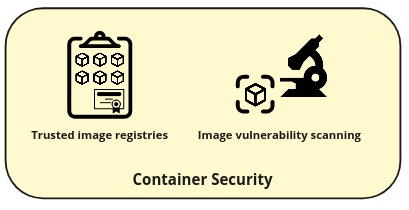

Managing container images securely

Defining security policies

Monitoring, alerting, and auditing

Tuesday, 29 March 2022

Hyperconverged Infrastructure with Harvester: The start of the Journey

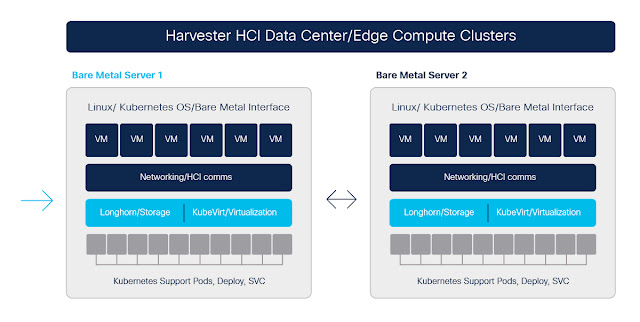

Deploying and managing data center infrastructure – compute, networking, and storage – has traditionally been manual, slow, and arduous. Data center staffers are accustomed to doing a lot of command-line configuration and spending hours in front of data center terminals. Hyperconverged Infrastructure (HCI) is the way out: it combines the provisioning and management of storage, networking, and compute into one package, and it uses software-defined data center technologies to automate these resources. At least in theory.

Recently, a colleague and I have been experimenting with Harvester, an open source project that aims to build a cloud-native, Kubernetes-based Hyperconverged Infrastructure tool for running data center and edge compute workloads on bare metal servers.

Harvester brings a modern approach to legacy infrastructure by running all data center and edge compute infrastructure (virtual machines, networking, and storage) on top of Kubernetes. It is designed to run containers and virtual machine workloads side by side in a data center, and to lower the total cost of data center and edge infrastructure management.

Why we need hyperconverged infrastructure

Many IT professionals know HCI concepts from using VMware products, or from employing cloud infrastructure like AWS, Azure, and GCP to manage virtual machine applications, networking, and storage. The cloud providers have made HCI flexible by giving us APIs to manage these resources with less day-to-day effort, at least once the programming is done. And, of course, cloud providers handle all the hardware – we don’t need to stand up our own hardware in a physical location.

Adapting to the speed of change

Hyperconverged everything

Monday, 5 April 2021

Intersight Kubernetes Service (IKS) Now Available!

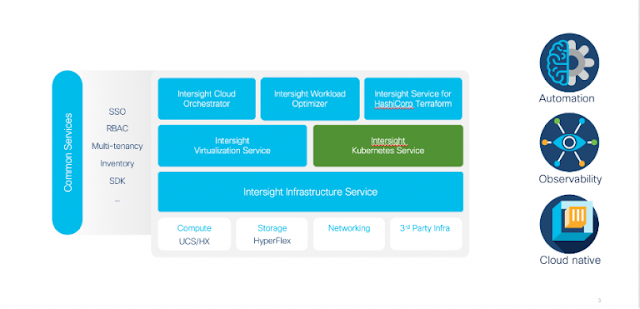

We announced the Tech Preview of Intersight Kubernetes Service (IKS), which received tremendous interest. Over 50 internal sales teams, partners, and customers participated, providing valuable recommendations and strong validation for our offering and strategic direction. Today we are pleased to announce the general availability of IKS!

The goal of Intersight Kubernetes Service is to accelerate our customers’ container initiatives by simplifying the management of Kubernetes clusters across the full infrastructure stack and expanding the application operations toolkit. IKS provides flexibility and choice of infrastructure (on-prem, multi-hypervisor, bare metal, public cloud) so that our customers can focus on running and monetizing business-critical applications in production, without worrying about the challenges of open source or working out how to manage, operate, and correlate each layer of the infrastructure stack.

A common platform for full-stack infrastructure and K8s management

Continuous Delivery for Kubernetes clusters and apps

Full-stack app visualization, AIOps rightsizing and intelligent top-down auto-scaling

Thursday, 4 March 2021

Enable Consistent Application Services for Containers

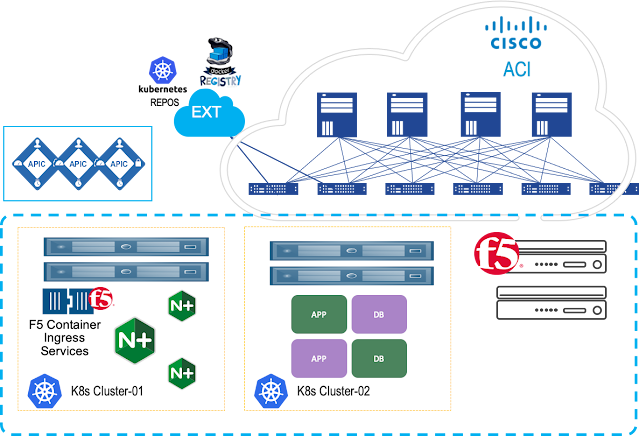

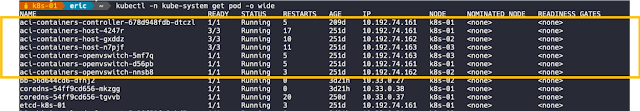

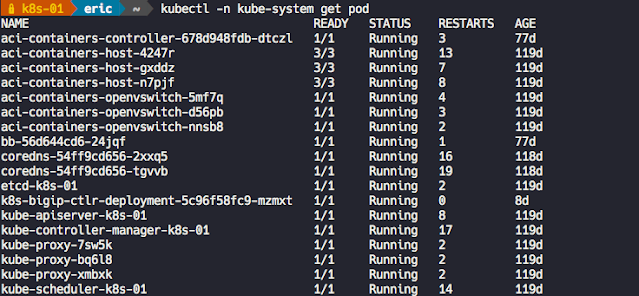

Kubernetes is all about abstracting away complexity. As Kubernetes continues to evolve, it is becoming more intelligent and more powerful at helping enterprises manage their data centers, not just the cloud. Enterprises already deal with the challenges of managing different types of modern applications (AI/ML, big data, and analytics) to process their data. At the same time, they must maintain top-level network and security policies and gain better control of their workloads to ensure operational and functional consistency. This is where Cisco ACI and F5 Container Ingress Services come into the picture.

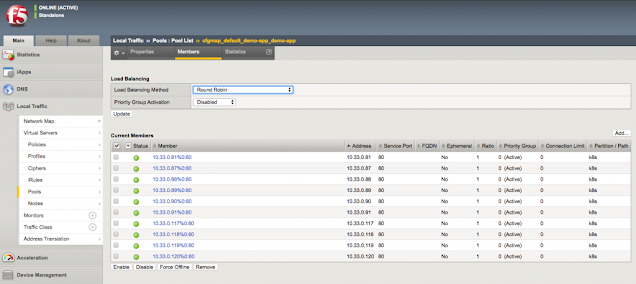

F5 Container Ingress Services (CIS) and Cisco ACI

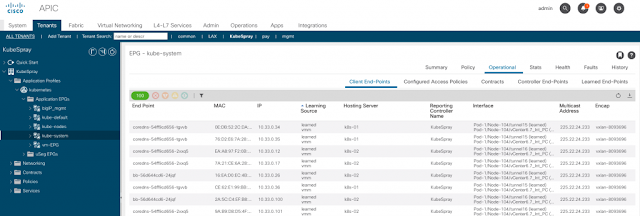

Cisco ACI offers these customers an integrated network fabric for Kubernetes. Recently, F5 and Cisco joined forces by integrating F5 CIS with Cisco ACI to bring L4-7 services into the Kubernetes environment, to further simplify the user experience in deploying, scaling, and managing containerized applications. This integration specifically enables:

◉ Unified networking: Containers, VMs, and bare metal

◉ Secure multi-tenancy and seamless integration of Kubernetes network policies and ACI policies

◉ A single point of automation with enhanced visibility for ACI and BIG-IP

◉ F5 Application Services natively integrated into container and Platform as a Service (PaaS) environments

One key benefit of this integration is ACI encapsulation normalization. The ACI fabric, acting as the normalizer for encapsulation, lets you merge different network technologies or encapsulations, whether VLAN or VXLAN, into a single policy model. Through a simple VLAN connection to ACI, with no need for an additional gateway, BIG-IP can communicate with any service anywhere.
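To make the normalization idea concrete, the sketch below maps traffic arriving with different encapsulations onto shared policy groups, then evaluates policy on the groups alone. It is a conceptual model under stated assumptions, not the ACI object model or any Cisco or F5 API: the binding table, group names, and allowed pairs are all hypothetical.

```python
from typing import Dict, Set, Tuple

Encap = Tuple[str, int]  # (encapsulation type, VLAN ID or VXLAN VNID)

# Hypothetical bindings: different encapsulations can land in the same group.
ENCAP_TO_GROUP: Dict[Encap, str] = {
    ("vlan", 100): "web",
    ("vxlan", 5100): "web",
    ("vlan", 200): "db",
}

# Hypothetical policy: which source group may talk to which destination group.
ALLOWED: Set[Tuple[str, str]] = {("web", "db")}


def classify(encap: Encap) -> str:
    """Normalize an encapsulation to its policy group."""
    return ENCAP_TO_GROUP[encap]


def is_allowed(src: Encap, dst: Encap) -> bool:
    """Policy is checked on groups, so the encapsulation type no longer matters."""
    return (classify(src), classify(dst)) in ALLOWED


same_group = classify(("vlan", 100)) == classify(("vxlan", 5100))
web_to_db = is_allowed(("vxlan", 5100), ("vlan", 200))
db_to_web = is_allowed(("vlan", 200), ("vlan", 100))
```

Once both the VLAN and the VXLAN segment resolve to the same group, a single rule covers traffic from either one, which is the sense in which the fabric presents one policy model.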