- CML_USERNAME: Username for the CML user

- CML_PASSWORD: Password for the CML user

- CML_HOST: The CML host

- CML_LAB: The name of the lab

Thursday, 18 January 2024

How to Use Ansible with CML

Thursday, 30 November 2023

Making Your First Terraform File Doesn’t Have to Be Scary

The HCL File: What Terraform will configure

The Terraform workflow: How Terraform applies configuration

A bonus tip

Tuesday, 6 June 2023

Understanding Application Aware Routing (AAR) in Cisco SD-WAN

Setting the stage

Searching for ‘the why’

Measure, measure, measure!

Finding the sweet spot

Sunday, 22 January 2023

Launch Your Cybersecurity Career with Cisco CyberOps Certifications | Part 1

Cisco CyberOps Certification Evolution

Cisco SOC Tier 1 Analyst Learning Path

SOC Analyst Job Outlook

Tuesday, 2 August 2022

Exploring the Linux ‘ip’ Command

I’ve been talking for several years now about how network engineers need to become comfortable with Linux. I generally position it that we don’t all need to become “big bushy beard-bearing sysadmins.” Rather, network engineers must be able to navigate and work with a Linux-based system confidently. I’m not going to go into all the reasons I believe that in this post (if you’d like a deeper exploration of that topic, please let me know). Nope… I want to dive into a specific skill that every network engineer should have: exploring the network configuration of a Linux system with the “ip” command.

A winding introduction with some psychology and an embarrassing fact (or two)

If you are like me and started your computing life on a Windows machine, you are probably familiar with “ipconfig,” which displays a system’s network configuration from the command line.

A long time ago, before Hank focused on network engineering and earned his CCNA for the first time, he used the “ipconfig” command quite regularly while supporting Windows desktop systems.

What was the IP assigned to the system? Was DHCP working correctly? What DNS servers are configured? What is the default gateway? How many interfaces are configured on the system? So many questions he’d use this command to answer. (He also occasionally started talking in the third person.)

It was a great part of my toolkit. I’m actually smiling in nostalgia as I type this paragraph.

For old times’ sake, I asked John Capobianco, one of my newest co-workers here at Cisco Learning & Certifications, to send me the output from “ipconfig /all” for the blog. John is still a diehard Windows user, while I converted to Mac many years ago. Here is the output of one of my favorite Windows commands (edited to remove some private information).

    Windows IP Configuration

       Host Name . . . . . . . . . . . . : WINROCKS
       Primary Dns Suffix . . . . . . . :
       Node Type . . . . . . . . . . . . : Hybrid
       IP Routing Enabled. . . . . . . . : No
       WINS Proxy Enabled. . . . . . . . : No
       DNS Suffix Search List. . . . . . : example.com

    Ethernet adapter Ethernet:

       Connection-specific DNS Suffix . : home
       Description . . . . . . . . . . . : Intel(R) Ethernet Connection (12) I219-V
       Physical Address. . . . . . . . . : 24-4Q-FE-88-HH-XY
       DHCP Enabled. . . . . . . . . . . : Yes
       Autoconfiguration Enabled . . . . : Yes
       Link-local IPv6 Address . . . . . : fe80::31fa:60u2:bc09:qq45%13(Preferred)
       IPv4 Address. . . . . . . . . . . : 192.168.122.36(Preferred)
       Subnet Mask . . . . . . . . . . . : 255.255.255.0
       Lease Obtained. . . . . . . . . . : July 22, 2022 8:30:42 AM
       Lease Expires . . . . . . . . . . : July 25, 2022 8:30:41 AM
       Default Gateway . . . . . . . . . : 192.168.2.1
       DHCP Server . . . . . . . . . . . : 192.168.2.1
       DHCPv6 IAID . . . . . . . . . . . : 203705342
       DHCPv6 Client DUID. . . . . . . . : 00-01-00-01-27-7B-B2-1D-24-4Q-FE-88-HH-XY
       DNS Servers . . . . . . . . . . . : 192.168.122.1
       NetBIOS over Tcpip. . . . . . . . : Enabled

    Wireless LAN adapter Wi-Fi:

       Media State . . . . . . . . . . . : Media disconnected
       Connection-specific DNS Suffix . : home
       Description . . . . . . . . . . . : Intel(R) Wi-Fi 6 AX200 160MHz
       Physical Address. . . . . . . . . : C8-E2-65-8U-ER-BZ
       DHCP Enabled. . . . . . . . . . . : Yes
       Autoconfiguration Enabled . . . . : Yes

    Ethernet adapter Bluetooth Network Connection:

       Media State . . . . . . . . . . . : Media disconnected
       Connection-specific DNS Suffix . :
       Description . . . . . . . . . . . : Bluetooth Device (Personal Area Network)
       Physical Address. . . . . . . . . : C8-E2-65-A7-ER-Z8
       DHCP Enabled. . . . . . . . . . . : Yes
       Autoconfiguration Enabled . . . . : Yes

It is still such a great and handy command. There are a few new things in there since I used it daily (IPv6, Wi-Fi, Bluetooth), but it still looks the way I remember.

The first time I had to touch and work on a Linux machine, I felt like I was on a new planet. Everything was different, and it was ALL command line. I’m not ashamed to admit that I was a little intimidated. But then I found the command “ifconfig,” and I began to breathe a little easier. The output didn’t look the same, but the command itself was close. The information it showed was easy enough to read. So, I gained a bit of confidence and knew, “I can do this.”

When I jumped onto the DevNet Expert CWS VM that I’m using for this blog to grab the output of the “ifconfig” command as an example, I was presented with this output.

    (main) expert@expert-cws:~$ ifconfig

    Command 'ifconfig' not found, but can be installed with:

    apt install net-tools
    Please ask your administrator.

This brings me to the point of this blog post. The “ifconfig” command is no longer the best command for viewing the network interface configuration in Linux. In fact, it hasn’t been for a long time: the net-tools package that provides “ifconfig” has been deprecated on many Linux distributions in favor of iproute2, the package that provides the “ip” command. Today the “ip” command is what we should be using. I’ve known this for a while, but giving up something that made you feel comfortable and safe is hard. Just ask my 13-year-old son, who still sleeps with “Brown Dog,” the small stuffed puppy I gave him the day he was born. As for me, I resisted learning and moving to the “ip” command for far longer than I should have.

Eventually, I realized that I needed to get with the times. I started using the “ip” command on Linux. You know what, it is a really nice command. The “ip” command is far more powerful than “ifconfig.”

When I was thinking about a topic for a blog post, I figured there might be another engineer or two out there who would appreciate a personal introduction to the “ip” command from Hank.

But before we dive in, I can’t leave a cliffhanger like that on the “ifconfig” command.

    root@expert-cws:~# apt-get install net-tools

    (main) expert@expert-cws:~$ ifconfig
    docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
            inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255
            ether 02:42:9a:0c:8a:ee txqueuelen 0 (Ethernet)
            RX packets 0 bytes 0 (0.0 B)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 0 bytes 0 (0.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    ens160: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet 172.16.211.128 netmask 255.255.255.0 broadcast 172.16.211.255
            inet6 fe80::20c:29ff:fe75:9927 prefixlen 64 scopeid 0x20
            ether 00:0c:29:75:99:27 txqueuelen 1000 (Ethernet)
            RX packets 85468 bytes 123667981 (123.6 MB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 27819 bytes 3082651 (3.0 MB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
            inet 127.0.0.1 netmask 255.0.0.0
            inet6 ::1 prefixlen 128 scopeid 0x10
            loop txqueuelen 1000 (Local Loopback)
            RX packets 4440 bytes 2104825 (2.1 MB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 4440 bytes 2104825 (2.1 MB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

There it is, the command that made me feel a little better when I started working with Linux.

Exploring the IP configuration of your Linux host with the “ip” command!

So there you are, a network engineer sitting at the console of a Linux workstation, and you need to explore or change the network configuration. Let’s walk through a bit of “networking 101” with the “ip” command.

First up, let’s see what happens when we just run “ip.”

    (main) expert@expert-cws:~$ ip
    Usage: ip [ OPTIONS ] OBJECT { COMMAND | help }
           ip [ -force ] -batch filename
    where  OBJECT := { link | address | addrlabel | route | rule | neigh | ntable |
                       tunnel | tuntap | maddress | mroute | mrule | monitor | xfrm |
                       netns | l2tp | fou | macsec | tcp_metrics | token | netconf | ila |
                       vrf | sr | nexthop }
           OPTIONS := { -V[ersion] | -s[tatistics] | -d[etails] | -r[esolve] |
                        -h[uman-readable] | -iec | -j[son] | -p[retty] |
                        -f[amily] { inet | inet6 | mpls | bridge | link } |
                        -4 | -6 | -I | -D | -M | -B | -0 |
                        -l[oops] { maximum-addr-flush-attempts } | -br[ief] |
                        -o[neline] | -t[imestamp] | -ts[hort] | -b[atch] [filename] |
                        -rc[vbuf] [size] | -n[etns] name | -N[umeric] | -a[ll] |
                        -c[olor]}

There’s some interesting info just in this help/usage message. It looks like “ip” requires an OBJECT on which a COMMAND is executed. And the possible objects include several that jump out at the network engineer inside of me.

◉ link – I’m curious what “link” means in this context, but it catches my eye for sure

◉ address – This is really promising. The IP “addresses” assigned to a host are high on the list of things I know I’ll want to understand.

◉ route – I wasn’t fully expecting “route” to be listed here if I’m thinking in terms of the “ipconfig” or “ifconfig” command. But the routes configured on a host are something I’ll be interested in.

◉ neigh – Neighbors? What kind of neighbors?

◉ tunnel – Oooo… tunnel interfaces are definitely interesting to see here.

◉ maddress, mroute, mrule – My initial thought when I saw “maddress” was “MAC address,” but then I looked at the next two objects and thought maybe it’s “multicast address.” We’ll leave “multicast” for another blog post.

The other objects in the list are interesting to see. Having “netconf” in the list was a happy surprise for me. But for this blog post, we’ll stick with the basic objects of link, address, route, and neigh.

Where in the network are we? Exploring “ip address”

First up in our exploration will be the “ip address” object. Rather than go through the full command help or man page line by line (ensuring no one ever reads another post of mine), I’m going to look at some common things I might want to know about the network configuration on a host. As you explore on your own, I highly recommend looking at “ip address help” as well as “man ip address” for more details. These commands are very powerful and flexible.

What is my IP address?

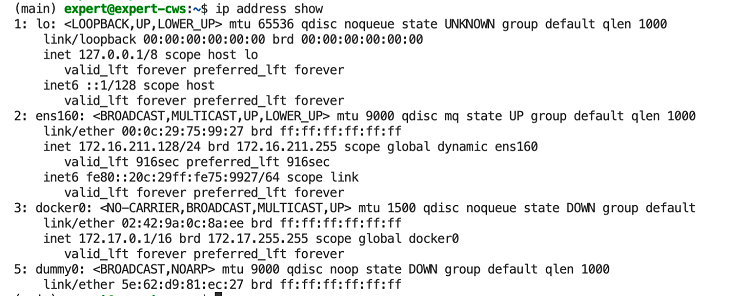

    (main) expert@expert-cws:~$ ip address show
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
        link/ether 00:0c:29:75:99:27 brd ff:ff:ff:ff:ff:ff
        inet 172.16.211.128/24 brd 172.16.211.255 scope global dynamic ens160
           valid_lft 1344sec preferred_lft 1344sec
        inet6 fe80::20c:29ff:fe75:9927/64 scope link
           valid_lft forever preferred_lft forever
    3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
        link/ether 02:42:9a:0c:8a:ee brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
           valid_lft forever preferred_lft forever

Running “ip address show” displays the address configuration for all interfaces on the Linux workstation. My workstation has three interfaces configured: the loopback interface, the Ethernet interface, and the Docker interface. Some of the Linux hosts I work on have dozens of interfaces, particularly if the host happens to be running lots of Docker containers, as each container typically adds network interfaces to the host. I plan to dive into Docker networking in future blog posts, so we’ll leave the “docker0” interface alone for now.

We can focus our exploration by providing a specific network device name as part of our command.

    (main) expert@expert-cws:~$ ip add show dev ens160
    2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
        link/ether 00:0c:29:75:99:27 brd ff:ff:ff:ff:ff:ff
        inet 172.16.211.128/24 brd 172.16.211.255 scope global dynamic ens160
           valid_lft 1740sec preferred_lft 1740sec
        inet6 fe80::20c:29ff:fe75:9927/64 scope link
           valid_lft forever preferred_lft forever

Okay, that’s really what I was interested in looking at when I wanted to know my IP address. But there is a lot more info in that output than just the IP address. For a long time, I just skimmed over it, ignoring most of the output and looking only for the address and for state info like “UP” or “DOWN.” Eventually, I wanted to know what all that output meant, so in case you’re interested in how to decode the output above…

- Physical interface details

  - “ens160” – The name of the interface from the operating system’s perspective. This depends a lot on the specific distribution of Linux you are running, whether it is a virtual or physical machine, and the type of interface. If you’re more used to seeing “eth0” interface names (like I was), it is time to become comfortable with the newer “predictable” interface naming scheme.

  - “<BROADCAST,MULTICAST,UP,LOWER_UP>” – Between the angle brackets are a series of flags that describe the interface state. This shows that my interface is broadcast and multicast capable, that the interface is enabled (UP), and that the physical layer is connected (LOWER_UP).

  - “mtu 1500” – The maximum transmission unit (MTU) for the interface. This interface uses the default of 1500 bytes.

  - “qdisc mq” – The queueing discipline (qdisc) the interface uses for outgoing packets. “mq” is the multiqueue discipline, which keeps a separate queue for each hardware transmit queue. Other values to look for are “noqueue” (send immediately) and “noop” (drop all), and there are several other queueing options a system might be running.

  - “state UP” – Another indication of the operational state of an interface. “UP” and “DOWN” are pretty clear, but you might also see “UNKNOWN,” as on the loopback interface above. “UNKNOWN” means the driver doesn’t report an operational state for the interface, which is normal for a loopback interface that is otherwise working fine.

  - “group default” – Interfaces can be placed in groups on Linux so that attributes or commands can be applied to them together. Having all interfaces in “group default” is the most common setup, but there are some handy things you can do if you group interfaces. For example, imagine a VM host system with 2 interfaces for management and 8 for data traffic. You could put them into “mgmt” and “data” groups and then control all interfaces of a type together.

  - “qlen 1000” – The interface has a transmit queue of 1000 packets. Once that queue is full, additional outgoing packets are dropped.

  - “link/ether” – The layer 2 address (MAC address) of the interface, followed by the broadcast address (“brd”)

- “inet” – The IPv4 interface configuration: the address and prefix length (172.16.211.128/24) and the broadcast address (“brd”)

  - “scope global” – The address is valid everywhere, not just on this host or link. The other common scopes are “link” (valid only on the directly attached link, like the fe80:: address) and “host” (valid only inside this host, like 127.0.0.1)

  - “dynamic” – The address has a finite lifetime rather than being permanent, which here means it was assigned by DHCP. The remaining lifetime of the lease is listed on the next line under “valid_lft”

  - “ens160” – A reference back to the interface this IP address is associated with

- “inet6” – The IPv6 interface configuration. Only the link-local address is configured on the host, which suggests that while IPv6 is enabled, the network isn’t providing IPv6 addressing more widely
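If you ever want to pull those same header fields out of the text in a script, here is a minimal Python sketch. It assumes the one-line interface header format shown above (index, name, flags in angle brackets, then “key value” pairs such as “mtu 1500”), and `parse_header` is a hypothetical helper name of my own, not part of iproute2. For anything beyond a quick script, the structured output of the “--json” option is a sturdier source than parsing text.

```python
import re

# First line of each interface in `ip address show` / `ip link show`, e.g.
# "2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP ..."
HEADER = re.compile(
    r"^(?P<index>\d+): (?P<name>[^:@]+)(?:@\S+)?: <(?P<flags>[^>]*)> (?P<rest>.*)$"
)


def parse_header(line: str) -> dict:
    """Split an interface header line into index, name, flags and key/value fields."""
    match = HEADER.match(line.strip())
    if match is None:
        raise ValueError(f"not an interface header line: {line!r}")
    tokens = match.group("rest").split()
    # After the flags, the line is a run of "key value" pairs (mtu 1500, qdisc mq, ...).
    fields = dict(zip(tokens[0::2], tokens[1::2]))
    return {
        "index": int(match.group("index")),
        "name": match.group("name"),
        "flags": match.group("flags").split(","),
        **fields,
    }


line = "2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000"
info = parse_header(line)
admin_up = "UP" in info["flags"]       # interface is enabled
carrier = "LOWER_UP" in info["flags"]  # physical layer is connected
print(info["name"], info["mtu"], info["qdisc"], info["state"], admin_up, carrier)
# ens160 1500 mq UP True True
```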

Network engineers link the world together one device at a time. Exploring the “ip link” command.

Now that we’ve gotten our feet wet, let’s circle back to the “link” object. The output of the “ip address show” command gave a bit of a hint at what “link” refers to. “Links” are the network devices configured on a host, and the “ip link” command gives engineers options for exploring and managing these devices.

What networking interfaces are configured on my host?

    (main) expert@expert-cws:~$ ip link show
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP mode DEFAULT group default qlen 1000
        link/ether 00:0c:29:75:99:27 brd ff:ff:ff:ff:ff:ff
    3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN mode DEFAULT group default
        link/ether 02:42:9a:0c:8a:ee brd ff:ff:ff:ff:ff:ff

After exploring the output of “ip address show,” it shouldn’t come as a surprise that there are 3 network interfaces/devices configured on my host. And a quick look will show the output from this command is all included in the output for “ip address show.” For this reason, I almost always just use “ip address show” when looking to explore the network state of a host.

However, the “ip link” object is quite useful when you are looking to configure new interfaces on a host or change the configuration on an existing interface. For example, “ip link set” can change the MTU on an interface.

    root@expert-cws:~# ip link set ens160 mtu 9000
    root@expert-cws:~# ip link show dev ens160
    2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc mq state UP mode DEFAULT group default qlen 1000
        link/ether 00:0c:29:75:99:27 brd ff:ff:ff:ff:ff:ff

Note 1: Changing network configuration settings requires administrative or “root” privileges.

Note 2: Changes made with the “set” command on an object are typically NOT maintained across system or service restarts. This is the equivalent of changing the “running-configuration” of a network device. To change the “startup-configuration,” you need to edit the network configuration files for the Linux host. Check the network configuration details for your distribution of Linux (e.g., Ubuntu, Red Hat, Debian, Raspbian).

Is anyone else out there? Exploring the “ip neigh” command

Networks are most useful when other devices are connected and reachable through the network. The “ip neigh” command gives engineers a view of the other hosts connected to the same network. Specifically, it offers a look at, and control of, the host’s ARP table (and, for IPv6, its Neighbor Discovery cache).

Do I have an ARP entry for the host that I’m having trouble connecting to?

A common problem network engineers are called on to support is when one host can’t talk to another host. If I had a nickel for every help desk ticket I’ve worked on like this one, I’d have an awful lot of nickels. Suppose my attempts to ping a host on the same local network, with IP address 172.16.211.30, are failing. My first step might be to check whether I’ve been able to learn an ARP entry for this host.

    (main) expert@expert-cws:~$ ping 172.16.211.30
    PING 172.16.211.30 (172.16.211.30) 56(84) bytes of data.
    ^C
    --- 172.16.211.30 ping statistics ---
    3 packets transmitted, 0 received, 100% packet loss, time 2039ms

    (main) expert@expert-cws:~$ ip neigh show
    172.16.211.30 dev ens160 FAILED
    172.16.211.254 dev ens160 lladdr 00:50:56:f0:11:04 STALE
    172.16.211.2 dev ens160 lladdr 00:50:56:e1:f7:8a STALE
    172.16.211.1 dev ens160 lladdr 8a:66:5a:b5:3f:65 REACHABLE

And the answer is no. The attempt to ARP for 172.16.211.30 “FAILED.” However, I can see that ARP in general is working on my network, as I have other “REACHABLE” addresses in the table.
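The same check is easy to script. This Python sketch parses the plain-text `ip neigh show` output above into records and picks out the entries in the FAILED state. The `Neighbor` type and `parse_neigh` function are hypothetical names for illustration, and the parser assumes the simple “address dev NAME [lladdr MAC] STATE” layout shown here; lines that don’t follow that layout may be misread.

```python
from typing import NamedTuple, Optional


class Neighbor(NamedTuple):
    ip: str
    dev: str
    lladdr: Optional[str]  # None when no MAC address was learned (e.g. FAILED)
    state: str


def parse_neigh(output: str) -> list[Neighbor]:
    """Parse the text output of `ip neigh show` into Neighbor records."""
    neighbors = []
    for line in output.splitlines():
        tokens = line.split()
        if not tokens:
            continue
        ip, state = tokens[0], tokens[-1]
        # The tokens in between are "key value" pairs such as "dev ens160" and "lladdr 00:50:...".
        pairs = dict(zip(tokens[1:-1:2], tokens[2:-1:2]))
        neighbors.append(Neighbor(ip, pairs.get("dev", ""), pairs.get("lladdr"), state))
    return neighbors


SAMPLE = """\
172.16.211.30 dev ens160 FAILED
172.16.211.254 dev ens160 lladdr 00:50:56:f0:11:04 STALE
172.16.211.2 dev ens160 lladdr 00:50:56:e1:f7:8a STALE
172.16.211.1 dev ens160 lladdr 8a:66:5a:b5:3f:65 REACHABLE
"""

failed = [n.ip for n in parse_neigh(SAMPLE) if n.state == "FAILED"]
print(failed)  # ['172.16.211.30']
```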

Another common use of the “ip neigh” command is clearing out an ARP entry after the device behind an IP address changes. For example, if you replace the router on a network, a host may not be able to communicate with the new router until the old ARP entry ages out and the host ARPs again to learn the new MAC address. Depending on the operating system, this can take minutes, which can feel like years when you are waiting for a system to start responding again. The “ip neigh flush” command can clear an entry from the table immediately.
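Here is a minimal sketch of that flush wrapped in a small Python helper, assuming the iproute2 syntax `ip neigh flush to ADDRESS dev DEVICE` described in the ip-neighbour man page. The function names are hypothetical, and the helper only prints the command unless you pass `dry_run=False`, because actually running it requires root privileges. Deleting a single entry with `ip neigh del ADDRESS dev DEVICE` is another option.

```python
import ipaddress
import subprocess


def neigh_flush_cmd(address: str, dev: str) -> list[str]:
    """Build an `ip neigh flush` command that clears the entry for one address on one device."""
    ipaddress.ip_address(address)  # raises ValueError for a malformed address
    return ["ip", "neigh", "flush", "to", address, "dev", dev]


def flush_neighbor(address: str, dev: str, dry_run: bool = True) -> list[str]:
    """Print the command (dry run) or execute it; executing requires root privileges."""
    cmd = neigh_flush_cmd(address, dev)
    if dry_run:
        print(" ".join(cmd))
    else:
        subprocess.run(cmd, check=True)
    return cmd


flush_neighbor("172.16.211.1", "ens160")
# ip neigh flush to 172.16.211.1 dev ens160
```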

How do I get from here to there? Exploring the “ip route” command

Most of the traffic from a host is destined somewhere on another layer 3 network, and the host needs to know how to “route” that traffic correctly. After looking at the IP address(es) configured on a host, I will often take a look at the routing table to see if it looks like I’d expect. For that, the “ip route” command is the first place I look.

What routes does this host have configured?

    (main) expert@expert-cws:~$ ip route show
    default via 172.16.211.2 dev ens160 proto dhcp src 172.16.211.128 metric 100
    10.233.44.0/23 via 172.16.211.130 dev ens160
    172.16.211.0/24 dev ens160 proto kernel scope link src 172.16.211.128
    172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1 linkdown

It may not look exactly like the output of “show ip route” on a router, but this command provides very usable output.

◉ My default gateway is 172.16.211.2 through the “ens160” device. This route was learned from DHCP (“proto dhcp”), and traffic sent through it will use 172.16.211.128, the IP address configured on my “ens160” interface, as its source address (“src”).

◉ There is a static route configured to network 10.233.44.0/23 with 172.16.211.130 as the next hop.

◉ And there are two routes added by the kernel (“proto kernel”) for the local subnets of the IPv4 addresses configured on the “ens160” and “docker0” interfaces. The “docker0” route is marked “linkdown,” matching the DOWN state of the “docker0” interface we saw earlier.
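To make the route selection concrete, this Python sketch applies longest-prefix matching to the four routes above: among all routes whose prefix contains the destination, the most specific one wins, and the default route (0.0.0.0/0) catches everything else. It is a simplified model that ignores metrics, policy routing rules, and other kernel details; on a real host, `ip route get <address>` asks the kernel directly which route it would use. The `ROUTES` table and `lookup` function are illustrative names of my own.

```python
import ipaddress

# The four routes from the "ip route show" output above, as (prefix, action) pairs.
ROUTES = [
    ("0.0.0.0/0", "via 172.16.211.2 dev ens160"),  # shown as "default"
    ("10.233.44.0/23", "via 172.16.211.130 dev ens160"),
    ("172.16.211.0/24", "dev ens160 (directly connected)"),
    ("172.17.0.0/16", "dev docker0 (directly connected, linkdown)"),
]


def lookup(destination: str) -> str:
    """Return the route whose prefix is the longest match for the destination."""
    dest = ipaddress.ip_address(destination)
    candidates = [
        (ipaddress.ip_network(prefix), action)
        for prefix, action in ROUTES
        if dest in ipaddress.ip_network(prefix)
    ]
    # Every IPv4 address matches 0.0.0.0/0, so candidates is never empty here.
    network, action = max(candidates, key=lambda item: item[0].prefixlen)
    return f"{network} {action}"


for dst in ("10.233.45.7", "172.16.211.30", "8.8.8.8"):
    print(dst, "->", lookup(dst))
# 10.233.45.7 -> 10.233.44.0/23 via 172.16.211.130 dev ens160
# 172.16.211.30 -> 172.16.211.0/24 dev ens160 (directly connected)
# 8.8.8.8 -> 0.0.0.0/0 via 172.16.211.2 dev ens160
```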

The “ip route” command can also be used to add or delete routes from the table, but with the same notes as when we used “ip link” to change the MTU of an interface. You’ll need admin rights to run the command, and any changes made will not be maintained after a restart. But this can still be very handy when troubleshooting or working in the lab.
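As an illustration of the add/delete syntax, here is a hedged Python sketch that builds `ip route add` and `ip route del` commands for the static route above after a couple of sanity checks: the destination must be a proper network address (no host bits set), and the next hop must sit in a subnet directly connected to the outgoing device. The `CONNECTED` table and `route_cmd` helper are hypothetical. It only builds the command; you would still need root privileges to run it, and the route would disappear after a restart just like the MTU change.

```python
import ipaddress

# Hypothetical table of directly connected subnets, taken from the
# "proto kernel" route for ens160 in the output above.
CONNECTED = {"ens160": ipaddress.ip_network("172.16.211.0/24")}


def route_cmd(action: str, prefix: str, via: str, dev: str) -> list[str]:
    """Build an `ip route add|del PREFIX via GATEWAY dev DEV` command after basic checks."""
    if action not in ("add", "del"):
        raise ValueError("action must be 'add' or 'del'")
    # strict=True (the default) rejects prefixes with host bits set, e.g. 10.233.45.0/23
    network = ipaddress.ip_network(prefix)
    gateway = ipaddress.ip_address(via)
    connected = CONNECTED.get(dev)
    # The next hop should be reachable on a subnet directly connected to the outgoing device.
    if connected is None or gateway not in connected:
        raise ValueError(f"next hop {gateway} is not on a connected subnet of {dev}")
    return ["ip", "route", action, str(network), "via", str(gateway), "dev", dev]


print(" ".join(route_cmd("add", "10.233.44.0/23", "172.16.211.130", "ens160")))
# ip route add 10.233.44.0/23 via 172.16.211.130 dev ens160
```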

And done… or am I?

So that’s my “brief” look at the “ip” command for Linux. Oh wait, that bad pun attempt reminded me of one more tip I meant to include. There is a “--brief” option you can add to many of these commands that reformats the data into a compact table, which is often quite handy. Here are a few examples.

    (main) expert@expert-cws:~$ ip --brief address show
    lo               UNKNOWN        127.0.0.1/8 ::1/128
    ens160           UP             172.16.211.128/24 fe80::20c:29ff:fe75:9927/64
    docker0          DOWN           172.17.0.1/16

    (main) expert@expert-cws:~$ ip --brief link show
    lo               UNKNOWN        00:00:00:00:00:00 <LOOPBACK,UP,LOWER_UP>
    ens160           UP             00:0c:29:75:99:27 <BROADCAST,MULTICAST,UP,LOWER_UP>
    docker0          DOWN           02:42:9a:0c:8a:ee <NO-CARRIER,BROADCAST,MULTICAST,UP>

Not all commands have a “brief” output version, but several do, and they are worth checking out.
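The brief format is also the easiest one to script against, since each interface sits on a single line. This Python sketch turns the `ip --brief address show` output above into a dictionary and then keeps only the IPv4 addresses. The function names are hypothetical, and the parser assumes the “name state address…” column order shown above.

```python
import ipaddress

SAMPLE = """\
lo               UNKNOWN        127.0.0.1/8 ::1/128
ens160           UP             172.16.211.128/24 fe80::20c:29ff:fe75:9927/64
docker0          DOWN           172.17.0.1/16
"""


def parse_brief(output: str) -> dict[str, dict]:
    """Map each interface to its state and address list from `ip --brief address show`."""
    table = {}
    for line in output.splitlines():
        fields = line.split()
        if len(fields) < 2:  # skip blank or malformed lines
            continue
        name, state, *addrs = fields
        table[name] = {"state": state, "addresses": addrs}
    return table


def ipv4_addresses(table: dict[str, dict]) -> dict[str, list[str]]:
    """Keep only the IPv4 addresses (with prefix length) for each interface."""
    return {
        name: [a for a in row["addresses"] if ipaddress.ip_interface(a).version == 4]
        for name, row in table.items()
    }


print(ipv4_addresses(parse_brief(SAMPLE)))
# {'lo': ['127.0.0.1/8'], 'ens160': ['172.16.211.128/24'], 'docker0': ['172.17.0.1/16']}
```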

There is quite a bit more I could go into on how you can use the “ip” command as part of your Linux network administration skill set. (Check out the “--json” flag for another great option.) But at 3,000+ words on this post, I’m going to call it done for today. If you’re interested in a deeper look at Linux networking skills like this, let me know, and I’ll come back for some follow-ups.
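To show why the “--json” flag is handy: its output loads straight into Python data structures, so there is no text parsing at all. In this minimal sketch, `SAMPLE` is an abbreviated, hand-written approximation of `ip -json address show` for the ens160 interface (reconstructed from the text output earlier in this post, not captured from the host), using the field names iproute2 emits such as `ifname`, `addr_info`, `family`, `local`, and `prefixlen`. The `live_data` helper is a hypothetical example of running the real command on a Linux host.

```python
import json
import subprocess

# Abbreviated, hand-written approximation of `ip -json address show` output.
SAMPLE = """[{"ifindex": 2, "ifname": "ens160",
  "flags": ["BROADCAST", "MULTICAST", "UP", "LOWER_UP"],
  "mtu": 1500, "operstate": "UP", "address": "00:0c:29:75:99:27",
  "addr_info": [
    {"family": "inet", "local": "172.16.211.128", "prefixlen": 24, "scope": "global", "dynamic": true},
    {"family": "inet6", "local": "fe80::20c:29ff:fe75:9927", "prefixlen": 64, "scope": "link"}]}]"""


def addresses(data: list[dict], family: str = "inet") -> dict[str, list[str]]:
    """Map interface name -> "address/prefixlen" strings for one address family."""
    return {
        iface["ifname"]: [
            f"{a['local']}/{a['prefixlen']}"
            for a in iface.get("addr_info", [])
            if a.get("family") == family
        ]
        for iface in data
    }


def live_data() -> list[dict]:
    """Run the real command on a Linux host and parse its JSON output."""
    result = subprocess.run(
        ["ip", "-json", "address", "show"], capture_output=True, text=True, check=True
    )
    return json.loads(result.stdout)


data = json.loads(SAMPLE)
print(addresses(data))           # {'ens160': ['172.16.211.128/24']}
print(addresses(data, "inet6"))  # {'ens160': ['fe80::20c:29ff:fe75:9927/64']}
```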

Source: cisco.com

Sunday, 1 May 2022

ChatOps: How to Secure Your Webex Bot

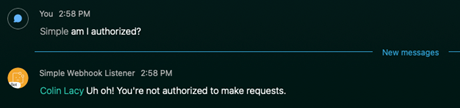

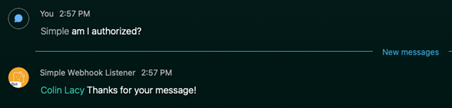

This is the second blog in our series about writing software for ChatOps. In the first post of this ChatOps series, we built a Webex bot that received and logged messages to its running console. In this post, we’ll walk through how to secure your Webex bot with authentication and authorization. Securing a Webex bot in this way will allow us to feel more confident in our deployment as we move on to adding more complex features.

[Access the complete code for this post on GitHub here.]

Very important: This post picks up right where the first blog in this ChatOps series left off. Be sure to read the first post of our ChatOps series to learn how to make your local development environment publicly accessible so that Webex webhook events can reach your API. Make sure your tunnel is up and running and webhook events can flow through to your API successfully before proceeding to the next section. From here on out, this post assumes that you’ve taken those steps and have a successful end-to-end data flow. [You can find the code from the first post on how to build a Webex bot here.]

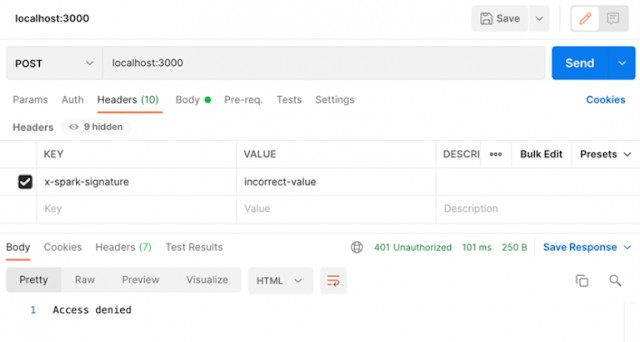

How to secure your Webex bot with an authentication check

Webex can sign each webhook request with an HMAC-SHA1 signature generated from a secret key that you provide, which lets your service endpoint verify that a request really came from Webex. (HMAC is a keyed hash used for authentication, not encryption; the payload itself is not hidden.) For the purposes of this blog post, I’ll include the check in the web service code as an Express middleware function applied to all routes, so it runs before any other route handler is called. In your environment, you might add this to your API gateway (or whatever powers your environment’s ingress, e.g., Nginx or an OPA policy).
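The series implements this check as an Express middleware function; as a language-neutral illustration of the same comparison, here is a minimal Python sketch. It assumes Webex sends a hex-encoded HMAC-SHA1 of the raw request body in the `X-Spark-Signature` header (confirm this against the Webex webhook documentation), and `is_valid_signature` is a hypothetical helper name. Two details matter: compute the HMAC over the raw body bytes exactly as received rather than over re-serialized JSON, and compare the values with a constant-time function.

```python
import hashlib
import hmac


def is_valid_signature(secret: str, raw_body: bytes, signature_header: str) -> bool:
    """Check a webhook signature: hex HMAC-SHA1 of the raw body, keyed with the shared secret."""
    expected = hmac.new(secret.encode("utf-8"), raw_body, hashlib.sha1).hexdigest()
    received = signature_header.strip().lower()
    # compare_digest avoids content-based short-circuiting, so response timing
    # doesn't reveal how much of a forged signature was correct.
    return hmac.compare_digest(expected.encode("ascii"), received.encode("utf-8"))


# Hypothetical values for a quick local check
secret = "replace-with-your-generated-secret"
body = b'{"resource":"messages","event":"created"}'
signature = hmac.new(secret.encode("utf-8"), body, hashlib.sha1).hexdigest()
print(is_valid_signature(secret, body, signature))         # True
print(is_valid_signature(secret, body + b" ", signature))  # False: body was altered
```

In a middleware, you would read the signature header, run a check like this before any route handler, and reject the request (for example, with a 401) when it returns False.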

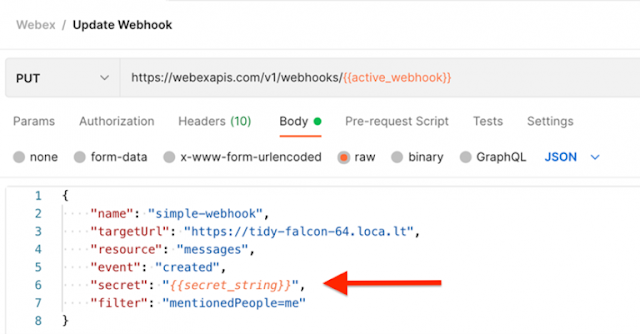

How to add a secret to the Webhook

Use your preferred tool to generate a random, unique, and complex string. Make sure that it is long and complex enough to be difficult to guess. There are plenty of tools available to create a key. Since I’m on a Mac, I used the following command:

$ cat /dev/urandom | base64 | tr -dc '0-9a-zA-Z' | head -c30

The resulting string was printed to my shell window. Be sure to hold onto it, because you’ll use it in several of the next steps.
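If you would rather generate the secret from a script, this Python sketch produces a string with the same alphabet and length as the shell pipeline above, using the standard library’s `secrets` module, which draws from a cryptographically secure source. A 30-character alphanumeric secret carries roughly 178 bits of randomness (30 × log2(62)). The `make_secret` name is my own.

```python
import secrets
import string

# Same character set as tr -dc '0-9a-zA-Z' in the shell pipeline
ALPHABET = string.ascii_letters + string.digits


def make_secret(length: int = 30) -> str:
    """Return a random alphanumeric string drawn from a cryptographically secure source."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


print(make_secret())
```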

Now you can use that string to update your Webhook with a PUT request. You can also add it to a new Webhook if you’d like to DELETE your old one: