Tuesday, 5 December 2023

Integrated Industrial Edge Compute

Saturday, 19 June 2021

Create new possibilities at the IoT Edge with the Cisco Catalyst IR1800 Series

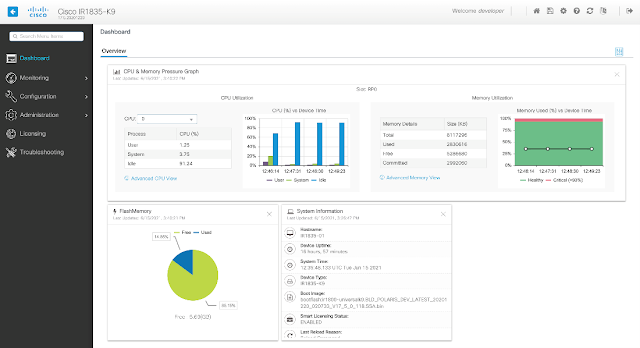

Get ready for an all-new Cisco industrial router: the Cisco Catalyst IR1800 Rugged Series. With many new interfaces and modules backed by a stronger CPU and more memory, the IR1800 series gives IoT application developers new possibilities for innovating at the IoT Edge, for example to host applications that can extract and transform IoT data right at the edge. The DevNet IoT Dev Center has a new learning lab and sandbox so you can try out these new features on a real IR1835 ruggedized router.

With its 5G/LTE, Wi-Fi 6, industrial SSD and GPS modules, the IR1800 series prepares you for the future, but that’s not all. The IR1800 focuses on supporting mobility, especially in the transportation industry, with features like CAN bus, FirstNet, GPS/GNSS + dead reckoning and ignition power management. Furthermore, you can access all these interfaces from your IOx edge applications and use the data to power use cases like recording video surveillance, streaming multimedia entertainment and advertising content, or providing predictive maintenance for the vehicle itself.

IOx Edge Compute

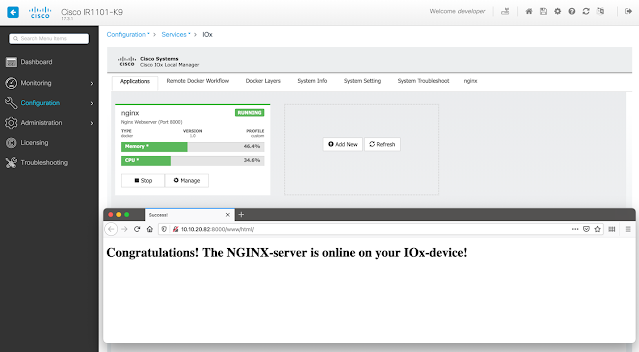

Here the NGINX server is installed and reachable on Port 8000.

Device APIs NETCONF & RESTCONF

WebUI

Thursday, 15 April 2021

Get Hands-on Experience with Programmability & Edge Computing on a Cisco IoT Gateway

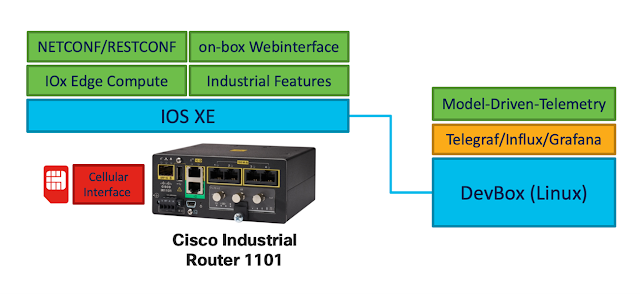

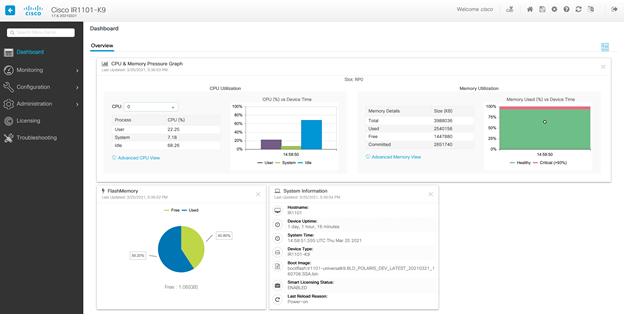

Are you still configuring your industrial router with CLI? Are you still getting network telemetry data with SNMP? Do you still use many industrial components when you can just have one single ruggedized IoT gateway that features an open edge-compute framework, cellular interfaces, and high-end industrial features?

Get ready to try out these features in an all-new learning lab and DevNet Sandbox featuring real IR1101 ruggedized hardware.

◉ Take me to the new learning lab

◉ Take me directly to the Industrial Networking and Edge Compute IR1101 Sandbox

The Industrial Router 1101

WebUI & high-end industrial feature-set

IOx Edge Compute

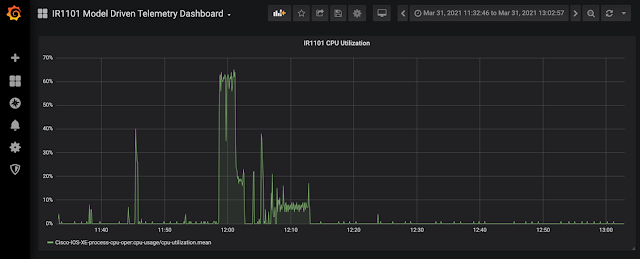

Device APIs NETCONF/RESTCONF & Model-Driven Telemetry

Monday, 16 March 2020

Setting a simple standard: Using MQTT at the edge

What is MQTT?

MQTT is the dominant standard used in IoT communications. It allows assets and sensors to publish data; for example, a weather sensor can publish the current temperature, wind metrics, and so on. MQTT also defines how consumers can receive that data. For example, an application can listen to the published weather information and take local actions, like starting a watering system.

Why is MQTT ideal for edge computing?

There are three primary reasons for using this lightweight, open protocol at the edge. Because of its simplicity, MQTT doesn’t require much processing or battery power from devices. With the ability to use very small message headers, MQTT doesn’t demand much bandwidth, either. MQTT also makes it possible to define different quality of service levels for messages, giving control over how many times messages are sent and what kind of handshakes are required to complete them.

How does MQTT work?

At the core of the MQTT protocol are clients and a server (the broker) that together enable many-to-many communication between multiple clients, using the following:

◉ Topics provide a way of categorizing the types of message that may be sent. As one example, if a sensor measures temperature, the topic might be defined as “TEMP” and the sensor sends messages labeled “TEMP.”

◉ Publishers include the sensors that are configured to send out messages containing data. In the “TEMP” example, the sensor would be considered the publisher.

◉ In addition to transmitting data, IoT devices can be configured as subscribers that receive data related to pre-defined topics. Devices can subscribe to multiple topics.

◉ The broker is the server at the center of it all, transmitting published messages to servers or clients that have subscribed to specific topics.
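The roles listed above can be sketched in a few lines of Python. This is a minimal in-memory illustration only: the `TinyBroker` class and its method names are hypothetical, there is no network transport, and it ignores MQTT features such as quality of service levels and topic wildcards. It simply shows two subscribers receiving one message published on the “TEMP” topic.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

# A subscriber callback receives the topic and the message payload.
Callback = Callable[[str, str], None]


class TinyBroker:
    """Hypothetical in-memory stand-in for a broker (no network, no QoS)."""

    def __init__(self) -> None:
        # Maps each topic name to the callbacks subscribed to it.
        self._subscribers: DefaultDict[str, List[Callback]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callback) -> None:
        """Register a subscriber for one topic."""
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, payload: str) -> int:
        """Forward a message to every subscriber of the topic.

        Returns the number of subscribers that received it.
        """
        callbacks = self._subscribers.get(topic, [])
        for callback in callbacks:
            callback(topic, payload)
        return len(callbacks)


broker = TinyBroker()
received = []

# Two independent consumers subscribe to the same topic (many-to-many).
broker.subscribe("TEMP", lambda t, p: received.append(("dashboard", t, p)))
broker.subscribe("TEMP", lambda t, p: received.append(("watering", t, p)))

# The sensor acts as the publisher; it never talks to the consumers directly.
delivered = broker.publish("TEMP", "21.5")

# Nobody subscribed to "WIND", so this message is delivered to no one.
ignored = broker.publish("WIND", "12")
```

Note that the publisher only knows the topic name, not who is listening; that decoupling is what the broker provides.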

Why choose MQTT over other protocols?

HTTP, Advanced Message Queuing Protocol (AMQP) and Constrained Application Protocol (CoAP) are other potential options at the edge. Although I could write extensively on each, for the purposes of this blog, I would like to share some comparative highlights.

A decade ago, HTTP would have seemed the obvious choice. However, it is not well suited to IoT use cases, which are driven by trigger events or statuses. HTTP would need to poll a device continuously to check for those triggers – an approach that is inefficient and requires extra processing and battery power. With MQTT, the subscribed device merely “listens” for the message without the need for continuous polling.
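To make the polling argument concrete, here is a rough counting sketch. It is a simplified model under stated assumptions: the five-second poll interval and the three event times are made-up numbers, the function names are hypothetical, and the model ignores connection setup and any keep-alive traffic a real subscriber would exchange with its broker.

```python
from typing import List


def polling_requests(duration_s: int, poll_interval_s: int) -> int:
    """Requests sent by a client that polls at a fixed interval."""
    return duration_s // poll_interval_s


def pushed_messages(event_times_s: List[int], duration_s: int) -> int:
    """Messages received by a subscriber: one per event inside the window."""
    return sum(1 for t in event_times_s if 0 <= t < duration_s)


one_hour_s = 3600
trigger_events_s = [125, 1810, 3302]  # hypothetical times at which a trigger fires

polls = polling_requests(one_hour_s, poll_interval_s=5)
pushes = pushed_messages(trigger_events_s, one_hour_s)
```

Under these assumptions the polling client sends 720 requests in an hour to learn about three events, while the subscriber handles three messages.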

The choice between AMQP and MQTT boils down to the requirements in a specific environment or implementation. AMQP offers greater scalability and flexibility but is more verbose; while MQTT provides simplicity, AMQP requires multiple steps to publish a message to a node. There are some cases where it will make sense to use AMQP at the edge. Even then, however, MQTT will likely be needed for areas demanding a lightweight, low-footprint option.

Finally, like MQTT, CoAP offers a low footprint. But unlike the many-to-many communication of MQTT, CoAP is a one-to-one protocol. What’s more, it’s best suited to a state transfer model – not the event-based model commonly required for IoT edge compute.

These are among the reasons Cisco has adopted MQTT as the standard protocol for one of our imminent product launches. Stay tuned for more information about the product – and the ways it enables effective computing at the IoT edge.

Friday, 14 February 2020

Where is the edge in edge computing?

In 2015, Dr. Karim Arabi, vice president, engineering at Qualcomm Inc., defined edge computing as, “All computing outside cloud happening at the edge of the network.”

Dr. Arabi’s definition is commonly agreed upon. However, at Cisco — the leader in networking — we see the edge a little differently. Our viewpoint is that the edge is anywhere that data is processed before it crosses the Wide Area Network (WAN). Before you start shaking your head in protest, hear me out.

The benefits of edge computing

The argument for edge computing goes something like this: By handling the heavy compute processes at the edge rather than the cloud, you reduce latency and can analyze and act on time-sensitive data in real-time — or very close to it. This one benefit — reduced latency — is huge.

Reducing latency opens up a host of new IoT use cases, most notably autonomous vehicles. If an autonomous vehicle needs to brake to avoid hitting a pedestrian, the data must be processed at the edge. By the time the data gets to the cloud and instructions are sent back to the car, the pedestrian could be dead.

The other often-cited benefit of edge computing is a reduction in the bandwidth, or rather the cost, required to send data to the cloud. To be clear, there’s plenty of bandwidth available to send data to the cloud. Bandwidth is not the issue. The issue is the cost of that bandwidth. Those costs are accrued when you hit the WAN, no matter where the data is going. In a typical network the LAN is a very cheap and reliable link, whereas the WAN is significantly more expensive. Once data hits the WAN, you’re accruing higher costs and latency.

Defining WHERE edge computing is

If we can agree that reduced latency and reduced cost are key characteristics of edge computing, then sending data over the WAN — even if it’s to a private data center in your headquarters — is NOT edge computing. To put it another way, edge computing means that data is processed before it crosses any WAN, and therefore is NOT processed in a traditional data center, whether it be a private or public cloud data center.

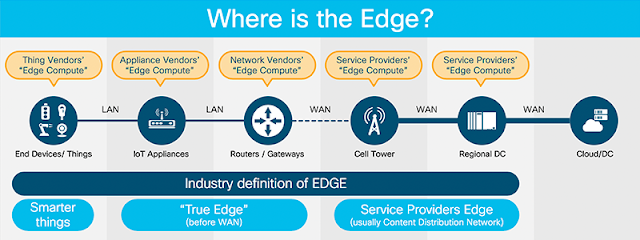

The following picture illustrates the typical devices in an Industrial IoT solution and who claims to have “Edge Compute” in this topology:

As you can also infer from the graphic, end devices should be excluded from edge compute because it can be near impossible to draw the line between things, smart things and edge compute things.

What’s old is new

We can take the concept of edge computing a step further and assert that it’s not new at all. In fact, we as an IT industry have been doing edge computing for quite some time. Remember how we learned about the cyclic behavior of compute centralization and compute decentralization? Edge compute is basically the latest term for decentralized compute.

The edge can mean very different things to different organizations, depending on the network infrastructure and use case. However, if you think about the edge in terms of the benefits you want to achieve, then it becomes clear very quickly where the edge of your IT environment begins and ends.

Thursday, 6 February 2020

Cisco Edge Intelligence: IoT data orchestration from edge to multi cloud

However, various challenges stand in the way of enabling this today:

◉ Geographically remote and distributed assets – The location of IoT assets varies from dense urban areas like traffic intersections to remote areas like gas and water distribution sites where cellular connectivity is scarce

◉ Heterogeneous environments – IoT assets come from various manufacturers and no two manufacturers speak the same protocol or handle the same data model

◉ Multiple consumers of data – As the need for IoT data grows and the number of applications that can leverage data increases, making sure there are proper control and governance policies before the data leaves the network is an ever-growing challenge

◉ Complexity – Current approaches are often sub-scale and require custom software and integration of multi-vendor technologies that are overwhelmingly complex to deploy and manage

Cisco recently announced a new offering for the IoT edge called Cisco Edge Intelligence. Cisco Edge Intelligence is new IoT data orchestration software that extracts, transforms and delivers data from connected assets at the edge to multi-cloud destinations with granular data control. It is a software service deployed on Cisco’s IoT Gateway (GW) and networking portfolio for easy, out-of-the-box deployments.

Cisco recently asked 451 Research to conduct an analysis of the top issues with IoT deployment and the role of the IoT edge. The research report helped validate the hypothesis about the problem and the solution that Cisco Edge Intelligence is addressing.

Cisco is currently conducting early field trials and pilot projects with select customers such as voestalpine, Port of Rotterdam, AHT Cooling (Daikin), National Informatics Center – India and many more across roadways, water quality/distribution and remote industrial asset monitoring. Cisco Edge Intelligence will be available to the public in 2Q CY 2020. Its primary value propositions are as follows:

Out-of-the-box solution

Cisco Edge Intelligence works out of the box on most of Cisco’s IoT GW portfolio for plug-and-play operation, and it is configured and managed using either a SaaS or an on-prem solution. The user experience was built from the ground up after studying various industry user personas. Cisco Edge Intelligence has simplicity built into its core and makes data extraction, transformation, governance and delivery to applications as easy as a click of a button. It can be deployed across thousands of gateways from a centralized location without having to worry about the underlying network configurations.

Pre-integrated data extraction for specific industries

Cisco has learnt that the hardest and most challenging aspect of IoT deployments is consistently extracting data from various greenfield and brownfield assets. Cisco Edge Intelligence comes pre-integrated with a curated set of asset/device connectors based on specific industries that allow customers to onboard IoT assets seamlessly. Further, it lets customers add metadata that helps normalize data across vendors.

Convert raw data to intelligent data

Based on feedback received, Cisco has embraced tools for development and debugging which are widely accepted by the community. Cisco Edge Intelligence provides scripting engines that allow customers’ and partners’ developers to develop scripts that can convert raw data to intelligent data. The scripts could be simple filtering, averaging, thresholding of data or complex analytical data processing for cleaning and grooming of data. These tools enable developers to remotely debug on a physical IoT GW and deploy with a click of a button from the same development interface.
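As an illustration of the kind of simple filtering, averaging and thresholding described above, here is a short sketch in plain Python. It does not use the Cisco Edge Intelligence scripting engine or its API; the function name, the valid range and the alert threshold are all hypothetical values chosen for the example.

```python
from statistics import mean
from typing import Dict, List, Optional


def transform(
    readings: List[Optional[float]],
    low: float,
    high: float,
    alert_above: float,
) -> Dict[str, object]:
    """Turn a batch of raw sensor readings into one summary record."""
    # Filtering: drop missing samples and values outside the plausible range.
    valid = [r for r in readings if r is not None and low <= r <= high]
    if not valid:
        return {"count": 0, "average": None, "alert": False}

    # Averaging: one value per batch instead of every raw sample.
    average = mean(valid)

    # Thresholding: flag the batch only when the average crosses the limit.
    return {
        "count": len(valid),
        "average": round(average, 2),
        "alert": average > alert_above,
    }


# Hypothetical temperature batch with one missing sample and one sensor glitch.
raw = [21.0, 22.0, None, 999.0, 23.0]
summary = transform(raw, low=-40.0, high=85.0, alert_above=30.0)
```

Here five raw samples collapse into a single record (three valid readings, an average of 22.0, no alert), which is the only thing that would need to leave the gateway.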

Granular data governance

As the promise of IoT is delivered with every small thing being connected, and as the number of applications that can make sense of IoT data increases, the N*M problem arises: ‘N’ things from different manufacturers act as sources of data, and ‘M’ applications from different vendors act as destinations for that data. The good that IoT brings could turn into chaos if this is not addressed ahead of time.

As this data deluge erupts, Cisco Edge Intelligence provides strong, holistic data control from the point of ingestion to the point of consumption, without overwhelming the user.
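The arithmetic behind the N*M problem can be sketched as follows. The comparison with a single intermediary layer is an assumption added for illustration: it supposes each source and each application integrates once with a common layer, and the counts of 50 asset types and 8 applications are made-up numbers.

```python
def point_to_point_integrations(n_sources: int, m_apps: int) -> int:
    """Every source is wired individually to every application."""
    return n_sources * m_apps


def intermediated_integrations(n_sources: int, m_apps: int) -> int:
    """Assumed alternative: each side integrates once with a common layer."""
    return n_sources + m_apps


n_sources, m_apps = 50, 8  # hypothetical counts
direct = point_to_point_integrations(n_sources, m_apps)
via_layer = intermediated_integrations(n_sources, m_apps)
```

With these numbers, direct wiring needs 400 integrations while the intermediated layout needs 58, and the gap widens as either count grows.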

Pre-integrated with a growing ecosystem

The last key step in delivering data to applications is integrating Cisco Edge Intelligence with a growing set of platforms and applications. It is currently pre-integrated with one of the leading cloud providers and other platform/on-prem providers such as Software AG and Quantela. Cisco Edge Intelligence also supports standards-based MQTT and encourages partners who are interested in working with Cisco to leverage it. Cisco will continue to grow this ecosystem of IoT platform/application partners.

“When customers take advantage of both Cisco’s new Edge Intelligence running on Cisco’s GW and Software AG’s Cumulocity IoT running in data center or cloud, they will be able to bring IoT asset data from edge to cloud quickly and seamlessly,” said Yasir Qureshi, VP, IoT and Analytics at Software AG. “Keeping IoT simple with little or no coding has been a key to successfully unlocking the business value of the IoT on either edge or cloud-based applications.”

Cisco Edge Intelligence is a big step for Cisco IoT towards bridging the gap from where data is generated to where it is consumed. It offers an out-of-the-box, plug-and-play solution for everything in between.

Friday, 7 September 2018

Time to Get Serious About Edge Computing

Edge Computing Workloads Are Uniquely Demanding

There are three attributes in particular that need careful consideration when networking edge applications.

Very High Bandwidth

Video surveillance and facial recognition are probably the most visible of edge implementations. HD cameras operate at the edge and generate copious volumes of data, most of which is not useful. A local process on the camera can trigger the transmission of a notable segment (movement, lights) without feeding the entire stream back to the data center. But add facial recognition and the processing complexity increases exponentially, requiring much faster and more frequent communication with the facial analytics at the cloud or data center. For example, with no local processing at the edge, a facial recognition camera at a branch office would need access to costly bandwidth to communicate with the analytic applications in the cloud. Pushing recognition processing to the edge devices, or their access points, instead of streaming all the data to the cloud for processing decreases the need for high bandwidth while improving response times.

Latency and Jitter

Sophisticated mobile experience apps will grow in importance on devices operating at the edge. Apps for augmented reality (AR) and virtual reality (VR) require high bandwidth and very low (sub-10 millisecond) latency. VoIP and telepresence also need superior Quality of Service (QoS) to provide the right experience. Expecting satisfactory levels of service from cloud-based applications over the internet is wishful thinking. While some of these applications run smoothly in campus environments, they are cost-prohibitive in most branch and distributed retail organizations using traditional WAN links. Edge processing can provide the necessary levels of service for AR and VR applications.

High Availability and Reliability

Many use cases for IoT edge computing will be in the industrial sector, with devices such as temperature/humidity/chemical sensors operating in harsh environments that make it difficult to maintain reliable cloud connectivity. Far out on the edge, devices such as gas field pressure sensors may not need real-time connections, but they do need reliable burst communications to warn of potential failures. Conversely, patient monitors in hospitals and field clinics need consistent connectivity to ensure alerts are received when patients experience distress. Retail stores need high availability and low latency for Point of Sale payment processing and to cache rich media content for better customer experiences.

Building Hybrid Edge Solutions for Business Transformation

Cisco helps organizations create hybrid edge-cloud or edge-data center systems to move processing closer to where the work is being done: indoor and outdoor venues, branch offices and retail stores, the factory floor and far out in the field. For devices that focus on collecting data, the closest network connection, wired or wireless, can provide additional compute resources for many tasks such as filtering for significant events and statistical inferencing using Machine Learning (ML). Organizations with many branches or distributed storefronts designed for people interactions can take advantage of edge processing to avoid depending on connectivity to corporate data centers for every customer transaction.

An example of an edge computing implementation is one for a national quick serve restaurant chain that wanted to streamline the necessary IT components at each store to save space while adding bandwidth for employee and guest Wi-Fi access, enable POS credit card transactions even when an external network connection is down, and connect with the restaurant’s mobile app to route orders to the nearest pickup location. Having all the locally-generated traffic traverse a WAN or flow through the internet back to the corporate data center is unnecessary, especially when faster response times enable individual stores to run more efficiently, thus improving customer satisfaction. In this particular case, most of the mission-critical apps run on a compact in-store Cisco UCS E-series with an ISR4000 router, freeing up expensive real estate for the core business—preparing food and serving customers—and improving in-store application experience. The Cisco components also provide local edge processing for an in-store kiosk touch screen menu interface to speed order management and tracking.

The Cisco Aironet Access Point (AP) platform adds another dimension to edge processing with distributed wireless sensors. APs, like the Cisco 3800, can run applications at the edge. The capability enables IT to design custom apps that process data from edge devices locally and send results to cloud services for further analysis. For example, consider an edge application that monitors the passing of railway cars and track conditions. Over a rail route, sensors at each milepost collect data on train passages, rail conditions, temperature, traffic loads, and real-time video. Each edge sensor attaches to an AP that aggregates, filters, and transmits the results to the central control room. The self-healing network minimizes service calls along the tracks while maintaining security of railroad assets via sensing and video feeds.

Bring IT to the Edge

There are thousands of ways to transform business operations with IoT and edge applications. Pushing an appropriate balance of compute power to the edge of the cloud or enterprise network to work more closely with distributed applications improves performance, reduces data communication costs, and increases the responsiveness of the entire network. Building on a foundation of an intent-based network with intelligent access points and IOS-XE smart routers, you can link together edge sensors, devices, and applications to provide a secure foundation for digital transformation. Let us hear about your “edgy” network challenges.

Thursday, 25 January 2018

IoT Edge Compute Part 1 – Apps and Deployment on Cisco IoT Gateways

Why We Need Edge Compute

We have been hearing about the increasing need for compute at the edge of our network for IoT devices. In case you are not familiar with this concept, edge compute is necessary due to the amount of information that is and will be generated by IoT devices. The problem to be addressed is typically one of three things: