Cisco ACI has provided integration with KVM in OpenStack environments for quite some time now. OpenStack is a great technology for implementing Infrastructure-as-a-Service (IaaS), with use cases for private, public and telco clouds. However, OpenStack provides a cloud platform tailored to “cattle-type” workloads, as opposed to “pet-type” workloads.

Many organizations will continue to run a significant number of pet-type workloads, most of which can run virtualized. KVM is a fantastic hypervisor for such workloads, and the oVirt open source project provides a fully functional virtualization management solution. Red Hat Virtualization (RHV) is the commercially supported version of oVirt, and it is probably the leading open-source KVM virtualization management platform in the marketplace.

Red Hat Virtualization enables organizations to build pools of x86 capacity, using centralized or distributed storage solutions, to quickly spin up Virtual Machines. A common challenge remains: achieving programmable networking and security that match the speed of provisioning and the workload mobility possible within RHV clusters.

With ACI 3.1, we introduced integration between APIC and RHV Manager to address this challenge and bring the power of SDN and the group-based policy model to Red Hat Virtualization customers. In this first phase of the integration, we focused on network automation and segmentation by automating the extension of Endpoint Groups (EPGs) to Red Hat Virtualization clusters.

Let’s look at what you can do today when combining ACI 3.1 with Red Hat Virtualization.

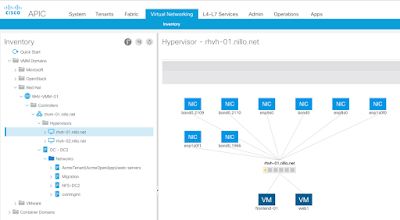

Physical and Virtual Network Automation

In RHV/oVirt nomenclature, virtual networks are called “Logical Networks”. Logical Networks represent broadcast domains and are traditionally mapped to VLANs in the physical network, connecting the various RHV hosts that make up the RHV clusters. VMs can be live-migrated within a cluster and between clusters. For this to work, a network must be configured for this purpose: the Live Migration Logical Network. In addition, the Logical Network(s) attached to the VM vNIC(s) must exist on both the source and the destination RHV host, and therefore on the physical switches that connect them.
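The migration prerequisites described above can be expressed as a small pre-flight check. This is a minimal sketch and not an RHV or oVirt API: the `RhvHost` class, the `migration_blockers()` function and the network and host names are hypothetical, and the code only models the two conditions from the paragraph (the Live Migration Logical Network on both hosts, and the VM's Logical Networks on the receiving host).

```python
from dataclasses import dataclass, field


@dataclass
class RhvHost:
    """Hypothetical model: an RHV host and the Logical Networks attached to it."""
    name: str
    logical_networks: set[str] = field(default_factory=set)


def migration_blockers(vm_networks: set[str], source: RhvHost,
                       destination: RhvHost, migration_network: str) -> list[str]:
    """Return the reasons a live migration would fail (empty list = OK)."""
    blockers = []
    # Both hosts need the dedicated Live Migration Logical Network.
    for host in (source, destination):
        if migration_network not in host.logical_networks:
            blockers.append(f"{host.name} lacks migration network {migration_network!r}")
    # Every Logical Network used by the VM's vNICs must also exist on the destination.
    for net in sorted(vm_networks - destination.logical_networks):
        blockers.append(f"{destination.name} lacks VM network {net!r}")
    return blockers


# Example: the destination host is missing the 'frontend' Logical Network.
src = RhvHost("rhv-host-01", {"migration", "web", "frontend"})
dst = RhvHost("rhv-host-02", {"migration", "web"})
print(migration_blockers({"web", "frontend"}, src, dst, "migration"))
```

With ACI, the point is that this check should never fail on the network side, because the fabric provisions each EPG's VLAN on every leaf port facing the RHV hosts.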

Since ACI 3.1, it is possible to create a VMM Domain for APIC to interface with the Red Hat Virtualization Manager. Once the VMM Domain is configured, APIC connects to the RHV Manager API and obtains an inventory of virtual resources, as seen in the sample screenshot below.

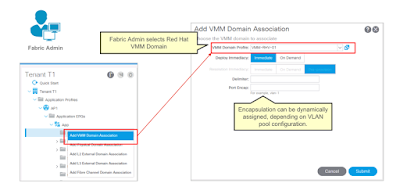
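If you prefer to script the VMM Domain creation, the APIC REST API can accept the whole domain as one JSON object. The sketch below only builds that body and does not contact an APIC. The class names (`vmmDomP`, `vmmUsrAccP`, `vmmCtrlrP`, `vmmRsAcc`, `infraRsVlanNs`), the `uni/vmmp-Redhat` provider DN and the use of `rootContName` for the RHV data center are our assumptions based on APIC's generic VMM object model; verify them against the APIC REST API reference for your release. The function name, host names and credentials are hypothetical.

```python
import json


def rhv_vmm_domain_payload(domain: str, vlan_pool: str, manager_host: str,
                           datacenter: str, username: str, password: str) -> dict:
    """Build a JSON body describing an RHV VMM Domain (assumed object model)."""
    domain_dn = f"uni/vmmp-Redhat/dom-{domain}"   # assumed provider DN
    creds_name = f"{domain}-creds"
    return {
        "vmmDomP": {
            "attributes": {"dn": domain_dn, "name": domain},
            "children": [
                # Dynamic VLAN pool from which APIC picks one VLAN per EPG.
                {"infraRsVlanNs": {"attributes": {
                    "tDn": f"uni/infra/vlanns-[{vlan_pool}]-dynamic"}}},
                # Credentials APIC uses to call the RHV Manager API.
                {"vmmUsrAccP": {"attributes": {
                    "name": creds_name, "usr": username, "pwd": password}}},
                # The RHV Manager; rootContName assumed to be the RHV data center.
                {"vmmCtrlrP": {
                    "attributes": {"name": manager_host, "hostOrIp": manager_host,
                                   "rootContName": datacenter},
                    "children": [{"vmmRsAcc": {"attributes": {
                        "tDn": f"{domain_dn}/usracc-{creds_name}"}}}],
                }},
            ],
        }
    }


payload = rhv_vmm_domain_payload("RHV", "rhv-pool", "rhvm.example.com",
                                 "Default", "admin@internal", "changeme")
print(json.dumps(payload, indent=2))
```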

Using the RHV Manager API, APIC can also provision Logical Networks. Each ACI EPG maps to one Logical Network. Whenever a new Logical Network is required, the fabric administrator creates an EPG and then adds the VMM Domain association, as shown below for an EPG named ‘App’:

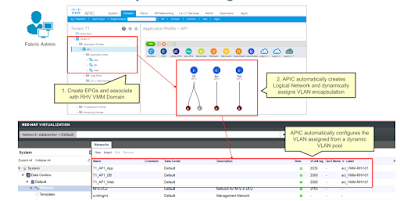
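The GUI action above corresponds to a single relation object on the EPG. This is a sketch assuming the APIC REST object model, where `fvAEPg` is the EPG class and `fvRsDomAtt` its domain-association relation, with `resImedcy`/`instrImedcy` setting resolution and deployment immediacy. The `uni/vmmp-Redhat/dom-<name>` target DN, the `epg_to_rhv_domain()` helper and the tenant, application profile and domain names are assumptions for illustration; the code prints the request instead of sending it.

```python
import json


def epg_to_rhv_domain(tenant: str, app_profile: str, epg: str,
                      domain: str) -> tuple[str, str]:
    """Return (URL path, JSON body) that attach an EPG to an RHV VMM Domain."""
    epg_dn = f"uni/tn-{tenant}/ap-{app_profile}/epg-{epg}"
    body = {
        "fvAEPg": {
            "attributes": {"dn": epg_dn, "name": epg},
            "children": [{
                # Relation from the EPG to the VMM Domain (assumed provider DN).
                "fvRsDomAtt": {"attributes": {
                    "tDn": f"uni/vmmp-Redhat/dom-{domain}",
                    # Resolution and deployment immediacy; "lazy" (on demand)
                    # is the common alternative.
                    "resImedcy": "immediate",
                    "instrImedcy": "immediate",
                }}
            }],
        }
    }
    return f"/api/mo/{epg_dn}.json", json.dumps(body)


path, body = epg_to_rhv_domain("Prod", "WebShop", "App", "RHV")
print("POST", path)
print(body)
```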

This simple action is all that is required to complete the network provisioning, even if you have several clusters with hundreds of RHV hosts spread across many ACI leafs. APIC assigns a VLAN to the EPG from the VMM Domain's VLAN pool, provisions that VLAN on all the ACI leaf ports connected to Red Hat Virtualization clusters, and automatically configures a matching Logical Network on the RHV Manager. The end result is illustrated in the picture below, showing several EPGs and the corresponding Logical Networks:

In the following video, we show various ways to configure EPGs and map them to an RHV VMM Domain. Depending on the separation of concerns and their familiarity with different tools, administrators can use the APIC NX-OS style CLI or the APIC GUI. It is also possible to use the Ansible ‘aci_epg_to_domain’ module to quickly associate an EPG with an RHV VMM Domain.
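To make the provisioning steps concrete (pick a VLAN from the VMM pool, program it on the RHV-facing leaf ports, create the matching Logical Network), here is a toy model of that sequence. It is not APIC code: `RhvVmmDomainModel`, the port names and the pool range are hypothetical, and it ignores resolution immediacy, multiple VMM Domains and releasing VLANs when an association is removed.

```python
from dataclasses import dataclass, field


@dataclass
class RhvVmmDomainModel:
    """Toy model of the provisioning steps described above (not APIC code)."""
    vlan_pool: range                          # dynamic VLAN pool of the VMM Domain
    rhv_leaf_ports: list[str]                 # leaf ports facing RHV hosts
    epg_vlans: dict[str, int] = field(default_factory=dict)
    port_vlans: dict[str, set[int]] = field(default_factory=dict)
    logical_networks: dict[str, int] = field(default_factory=dict)  # on RHV Manager

    def associate_epg(self, epg: str) -> int:
        """Associate an EPG with the domain and return the VLAN it received."""
        if epg in self.epg_vlans:             # re-associating keeps the same VLAN
            return self.epg_vlans[epg]
        used = set(self.epg_vlans.values())
        vlan = next((v for v in self.vlan_pool if v not in used), None)
        if vlan is None:
            raise RuntimeError("VMM VLAN pool exhausted")
        self.epg_vlans[epg] = vlan
        # Program the VLAN on every leaf port connected to RHV hosts.
        for port in self.rhv_leaf_ports:
            self.port_vlans.setdefault(port, set()).add(vlan)
        # Create the matching Logical Network (tagged with that VLAN) on RHV Manager.
        self.logical_networks[epg] = vlan
        return vlan


domain = RhvVmmDomainModel(range(1000, 1100), ["leaf101/eth1/1", "leaf102/eth1/1"])
print(domain.associate_epg("App"), domain.associate_epg("Web"))  # prints 1000 1001
```

The administrator only performs the association; everything inside `associate_epg` is what the fabric does on their behalf, which is why the effort does not grow with the number of hosts or leafs.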

Segmentation and distributed security

Not only can we now simplify and automate network configuration, we can also provide better segmentation. Imagine that you have Virtual Machines that perform different functions, and you want to control how they communicate even though they must stay on the same subnet. A basic example, seen in the demo at the end of this section, is a reverse proxy acting as a front-end for a web application.

You can configure one EPG for your frontend and another for your web application, each represented as a Logical Network and both using the same subnet in ACI. The graphic below shows these EPGs mapped to an RHV cluster; both are associated with the same Bridge Domain and therefore share the same subnet. It also shows a third EPG mapped to a Physical domain to connect bare-metal databases, and a connection to an external network through Border Leafs, so that the ACI fabric can filter north-south traffic as well. Because ACI leafs enforce security in a distributed way, ACI contracts can limit external access to the frontend Logical Network only, and only on the right ports and protocols. Contracts also provide east-west security between the frontend and web Logical Networks, and between the web Logical Network and the bare-metal databases.

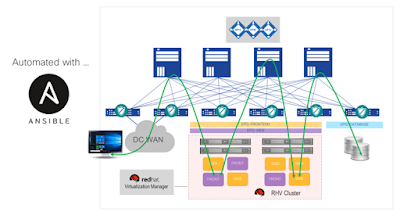
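The segmentation in the graphic follows ACI's allow-list model: EPGs that share a Bridge Domain and subnet still cannot communicate unless a contract permits it. The following is a simplified model of that behaviour, not how the leafs implement it. It only evaluates the initiating direction (real contract subjects typically also permit the return traffic), it ignores filter fields beyond protocol and destination port, and the EPG names, contract names and port numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Contract:
    name: str
    provider: str             # EPG offering the service
    consumers: frozenset      # EPGs allowed to open connections to the provider
    ports: frozenset          # permitted (protocol, destination port) pairs


def allowed(contracts, src_epg: str, dst_epg: str, proto: str, port: int) -> bool:
    """Allow-list check for a connection initiated by src_epg towards dst_epg."""
    if src_epg == dst_epg:
        # Intra-EPG traffic is permitted unless intra-EPG isolation is enabled.
        return True
    # Between EPGs, traffic is dropped unless a contract explicitly permits it,
    # even when both EPGs share the same Bridge Domain and subnet.
    return any(c.provider == dst_epg and src_epg in c.consumers
               and (proto, port) in c.ports for c in contracts)


# Hypothetical policy for the topology in the graphic; port numbers are examples.
CONTRACTS = [
    Contract("to-frontend", "frontend", frozenset({"external"}), frozenset({("tcp", 443)})),
    Contract("to-web", "web", frozenset({"frontend"}), frozenset({("tcp", 8080)})),
    Contract("to-db", "databases", frozenset({"web"}), frozenset({("tcp", 5432)})),
]

print(allowed(CONTRACTS, "external", "frontend", "tcp", 443))   # True
print(allowed(CONTRACTS, "external", "web", "tcp", 8080))       # False
```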

In the video, I show a lab implementing this topology in more detail and give an example of how to fully automate its configuration using Ansible.

Simplified Operations: Virtual and Physical correlation

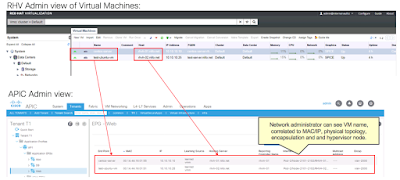

A key advantage of the ACI VMM integrations is enhanced operations. APIC imports the RHV Manager inventory, which allows the fabric administrator to identify Virtual Machines not just by their network identity (IP or MAC address) but also by their name. This way, a fabric administrator can quickly search for a VM, find the hypervisor where it is running, and verify whether it is connected to the right Logical Network or is powered off.

Every EPG corresponds to a Logical Network, and when the fabric administrator searches for an endpoint in the fabric, she or he can see under the EPG the complete correlation of the physical and virtual topology. The screenshot at the start of this section shows an example, matching the IP/MAC address to the VM name, hypervisor name, RHV Manager and physical ports on the ACI leafs.

This information is correlated automatically and continuously updated, for instance to reflect a VM moving from one host to another. If you watched the previous demo, you may have noticed this: at the end of it I triggered a VM migration to show that contracts continue to be enforced and connectivity is maintained during the migration, and that afterwards the correct information appears under the EPG.
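Conceptually, this correlation is a join between what the fabric learns (MAC, IP, EPG, leaf port) and what the RHV Manager inventory reports (VM name, hypervisor), refreshed when a VM moves. The toy index below only illustrates that idea; APIC's actual data model is different, and the `Endpoint`/`EndpointIndex` names, MAC addresses and host names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Endpoint:
    mac: str
    ip: str
    epg: str
    leaf_port: str            # where the fabric learned the endpoint
    vm_name: str = ""         # filled in from the RHV Manager inventory
    hypervisor: str = ""
    manager: str = ""


class EndpointIndex:
    """Toy join of fabric-learned endpoints with a VMM inventory (hypothetical)."""

    def __init__(self) -> None:
        self._by_mac: dict[str, Endpoint] = {}

    def learn(self, ep: Endpoint) -> None:
        self._by_mac[ep.mac] = ep

    def apply_inventory(self, manager: str, vms: dict[str, tuple[str, str]]) -> None:
        """vms maps MAC -> (VM name, hypervisor) as reported by the RHV Manager."""
        for mac, (vm_name, host) in vms.items():
            ep = self._by_mac.get(mac)
            if ep is not None:
                ep.vm_name, ep.hypervisor, ep.manager = vm_name, host, manager

    def find(self, query: str) -> list[Endpoint]:
        """Search by VM name, IP or MAC address; an empty query matches nothing."""
        return [ep for ep in self._by_mac.values()
                if query and query in (ep.vm_name, ep.ip, ep.mac)]

    def vm_moved(self, mac: str, new_host: str, new_leaf_port: str) -> None:
        """Live migration: same EPG and IP/MAC, new hypervisor and leaf port."""
        ep = self._by_mac[mac]
        ep.hypervisor, ep.leaf_port = new_host, new_leaf_port


idx = EndpointIndex()
idx.learn(Endpoint("00:1a:4a:16:01:51", "10.0.0.10", "App", "leaf101/eth1/5"))
idx.apply_inventory("rhvm01", {"00:1a:4a:16:01:51": ("web-01", "rhv-host-01")})
idx.vm_moved("00:1a:4a:16:01:51", "rhv-host-02", "leaf102/eth1/7")
print(idx.find("web-01")[0])
```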

Running RHV Clusters on different Data Centers

For customers leveraging ACI Multi-Pod, RHV architects will find that building active/active solutions becomes extremely easy. For instance, the picture below shows an RHV Cluster stretched across two ACI Pods. The Pods could be fabrics in two different rooms or in two different data centers (assuming storage replication is also taken care of). The picture illustrates how the EPG dedicated to migration (in green) is easily extended, just like the EPGs for the application tiers. Live VM migrations can therefore happen between the data centers, and the VMs remain on the same network, always using the same distributed default gateway and keeping the same security policies.

Perhaps a more common design has independent clusters in each data center or server room, as illustrated in the picture below. Again, the same simple provisioning discussed above ensures that the right EPGs and policies, including the migration network, are deployed and available to both clusters.

A quick look at the road ahead

Red Hat Virtualization provides a powerful and open virtualization solution for modern data centers. By integrating it with ACI for network and security automation, customers can dramatically simplify application provisioning and overall data center operations. ACI brings distributed networking, simplified mobility and distributed security to RHV environments.

In future APIC releases, we plan to continue enhancing RHV support, bringing more advanced microsegmentation features and even greater visibility.

Finally …

I will leave you with a final demo that leverages the integrations already shipping. The idea is to show the power of a multi-hypervisor SDN fabric, in this case by illustrating how easy it is to migrate from proprietary hypervisors to KVM when you can easily extend the networks from one to the other.
