Cisco DIRL offers solutions to SAN congestion without any dependency on hosts or storage.

We announced the release of Cisco® Dynamic Ingress Rate Limiting (DIRL), a major innovation to alleviate SAN congestion. Cisco DIRL is available today and promises a practical solution to SAN congestion from a fabric perspective, without any dependency on the host or storage. If you have not had a chance to review this technology, I recommend the video, blog, presentation, solution overview, or the Interfaces Configuration Guide.

In this writeup, I will cover the core of Cisco DIRL so that you can gain a solid understanding of this technology, which does not require any changes to the host or storage. I will not cover how Cisco DIRL solves congestion; please refer to the resources listed above for that information.

First let’s cover the basics.

Fibre Channel (FC) Buffer to Buffer crediting

FC is built on the premise of offering a lossless fabric tailor-made for storage protocols. A lossless fabric means that the probability of a frame drop inside the switches and on the interconnecting links is kept to a minimum. This guarantee is important to meet the performance requirements of storage protocols like SCSI/FICON/NVMe.

FC switches implement various schemes to avoid frame drops within the switches. On the links interconnecting two FC ports, frame drops are avoided through a mechanism known as Buffer to Buffer (B2B) crediting. Let me break down the B2B crediting concept:

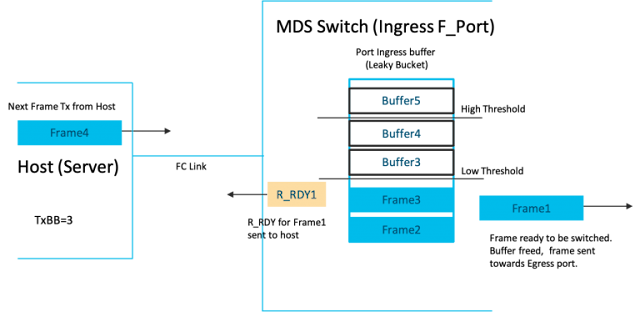

◉ During FC link-up, the number of receive buffers on a port is exchanged as B2B credits with its peer port (during FLOGI/ACC on an F-Port and ELP/ACC on an E-Port). The transmitter at either end sets a TxBB counter = number of receive buffers on the peer port.

◉ An R_RDY primitive is used to indicate the availability of one buffer on the receive side of the port sending the R_RDY.

◉ As traffic starts flowing on the link, the R_RDY is used to constantly refresh the receive buffer levels to the transmitter. At the transmitter, every transmitted frame decreases the TxBB counter by 1 and every R_RDY received from the peer increases the TxBB counter by 1. If the TxBB counter drops to 0, no frame can be transmitted, and any frame waiting to be sent has to wait until an R_RDY is received. At the receiver end, as incoming frames are processed and switched out, the port is ready to receive another frame and an R_RDY is sent back to the sender. This control loop runs constantly and bidirectionally on every FC link. It ensures that the transmit end of an FC port can transmit a frame only if the receiving port has a buffer to receive the frame.
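The credit loop described in the bullets above can be sketched in a few lines of Python. This is only an illustrative model of the TxBB counter on one transmitter, not how an FC ASIC implements it; the class and method names (`B2BTransmitter`, `send_frame`, `receive_r_rdy`) are made up for this example.

```python
class B2BTransmitter:
    """Illustrative model of the transmit side of one direction of an FC link."""

    def __init__(self, peer_rx_buffers: int) -> None:
        # TxBB starts at the number of receive buffers advertised by the peer.
        self.max_credits = peer_rx_buffers
        self.tx_bb = peer_rx_buffers

    def send_frame(self) -> bool:
        """Try to send one frame; return False if no credit is left."""
        if self.tx_bb == 0:
            return False  # the frame has to wait for an R_RDY
        self.tx_bb -= 1
        return True

    def receive_r_rdy(self) -> None:
        # Each R_RDY returns one credit, never beyond what was advertised.
        self.tx_bb = min(self.tx_bb + 1, self.max_credits)


tx = B2BTransmitter(peer_rx_buffers=2)
sent = [tx.send_frame() for _ in range(3)]  # the third frame finds TxBB at 0
tx.receive_r_rdy()                          # the peer freed one buffer
sent_after_r_rdy = tx.send_frame()          # now the waiting frame can go
print(sent, sent_after_r_rdy, tx.tx_bb)
```

With two advertised buffers, the first two frames go out, the third is blocked until an R_RDY arrives, and the counter ends at 0 again once the waiting frame is sent.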

Figure 1: B2B crediting on an FC link

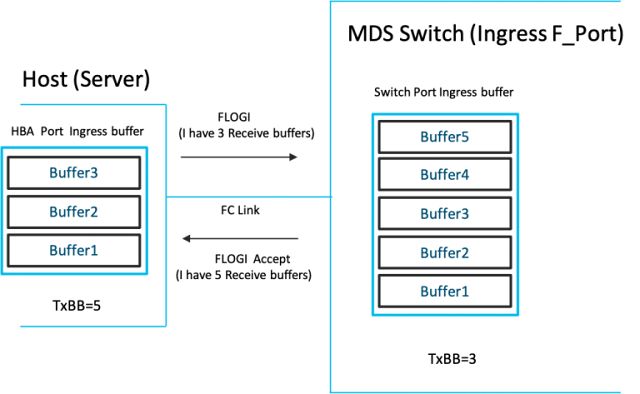

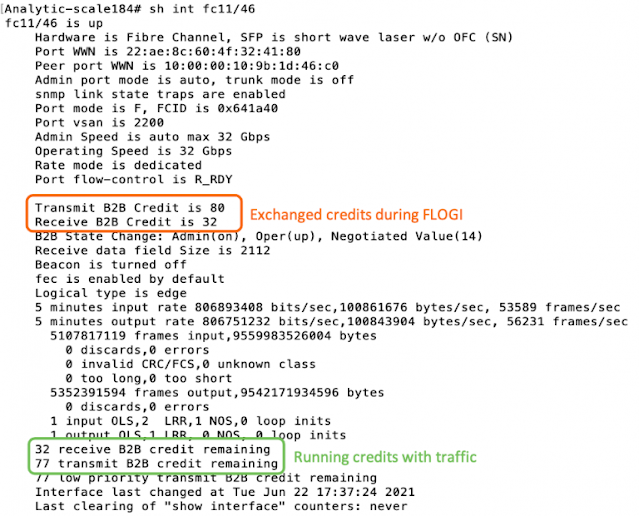

To see B2B crediting in action, take a look at a Cisco MDS switch that is switching traffic, and view the interface counters (Figure 2) that indicate the exchanged and live credits on an FC link.

Figure 2: Cisco MDS show interface command displays B2B credits

Insight: FC Primitives are single words (4 bytes) that carry a control message to be consumed at the lower layers of the FC stack. They are not FC frames, so transmitting an R_RDY does not itself require a credit. The R_RDYs are inserted as fill words between frames (instead of IDLEs), so they do not carry an additional bandwidth penalty either.

I hope I have covered just enough of the basics to introduce you to two interesting concepts unique to Cisco MDS FC ASICs that form the foundation of Cisco DIRL technology.

1. Ingress Rate Limiter

In the Cisco MDS FC ASICs, a frame rate limiter is implemented at the receiver side of a port to throttle the peer transmitting to it. The rate limiter is implemented as a leaky bucket and can be enabled on any FC port. If you have never heard this term before, please review the Wikipedia article on the leaky bucket to learn more about this technique.

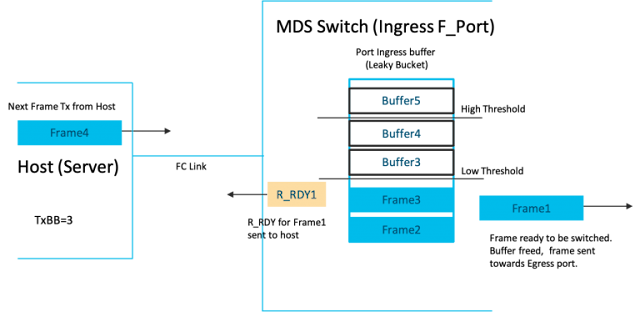

The ingress buffers on the port are treated like a leaky bucket, which is filled with one token each time a full frame is received. At the same time, tokens leak from the bucket at a configurable rate ‘R’. The rate ‘R’ is programmed from a value deduced dynamically by the Cisco DIRL software logic.
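To make the fill-and-leak behaviour concrete, here is a minimal sketch of a leaky bucket in Python. It assumes one token per received frame and a leak rate expressed in tokens per second; the `LeakyBucket` class and its method names are hypothetical, and the real bucket lives in ASIC hardware.

```python
class LeakyBucket:
    """Illustrative leaky bucket: +1 token per frame, leaking at rate R."""

    def __init__(self, leak_rate: float) -> None:
        self.leak_rate = leak_rate  # 'R', assumed here to be tokens per second
        self.occupancy = 0.0
        self.last_update = 0.0

    def _leak(self, now: float) -> None:
        # Drain tokens for the time elapsed since the last update.
        elapsed = now - self.last_update
        self.occupancy = max(0.0, self.occupancy - elapsed * self.leak_rate)
        self.last_update = now

    def frame_received(self, now: float) -> float:
        """Account for one full frame arriving at time `now` (seconds)."""
        self._leak(now)
        self.occupancy += 1.0
        return self.occupancy

    def level(self, now: float) -> float:
        """Return the bucket occupancy at time `now` (seconds)."""
        self._leak(now)
        return self.occupancy


bucket = LeakyBucket(leak_rate=2.0)
# Frames arrive at 4 per second, twice the leak rate, so the bucket fills up.
levels = [bucket.frame_received(t) for t in (0.0, 0.25, 0.5)]
drained = bucket.level(2.0)  # no more frames arrive: the bucket empties
print(levels, drained)
```

When frames arrive faster than ‘R’, occupancy climbs; once arrivals stop, the leak brings it back to zero.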

As the bucket leaks and the received frames are switched out, R_RDYs have to be sent to the peer. The sending of an R_RDY is tied to two bucket thresholds, a Low threshold and a High threshold, as follows:

◉ If the bucket occupancy < Low threshold, the R_RDY is sent out immediately.

◉ If the bucket occupancy > Low threshold and < High threshold, the R_RDY is sent with credit pacing.

◉ If the bucket occupancy > High threshold, the R_RDY is held back and will be sent out when occupancy falls below the High threshold.

In summary, an R_RDY is sent out only if the bucket has ‘leaked enough’.
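The three threshold cases can be written as a small decision function. This is a sketch under assumptions: the names `RRdyAction` and `r_rdy_action` are invented for illustration, and the handling of exact equality (occupancy equal to the Low threshold is paced, occupancy equal to the High threshold is held) is my own choice, since the rules above only state the strict inequalities.

```python
from enum import Enum


class RRdyAction(Enum):
    SEND_NOW = "send immediately"
    PACE = "send with credit pacing"
    HOLD = "hold back"


def r_rdy_action(occupancy: float, low: float, high: float) -> RRdyAction:
    """Decide what to do with a pending R_RDY for a given bucket occupancy."""
    if low >= high:
        raise ValueError("Low threshold must be below High threshold")
    if occupancy < low:
        return RRdyAction.SEND_NOW
    if occupancy < high:
        # Assumption: occupancy == low is treated as the paced case.
        return RRdyAction.PACE
    # Assumption: occupancy == high is treated as the held-back case.
    return RRdyAction.HOLD


for occ in (1, 5, 9):
    print(occ, r_rdy_action(occ, low=4, high=8).value)
```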

2. Credit pacing

When the buffer (bucket) occupancy hits the High threshold, all R_RDYs are stalled. Eventually the leak causes the occupancy to fall below the High threshold, and all the pending R_RDYs have to be sent out. This can result in a burst of R_RDYs to the peer, which in turn releases a flood of waiting frames, which can again push the buffer at the receiver port past the High threshold. This ‘ping-pong’ effect can result in very bursty traffic when traffic rates are high. To avoid this, R_RDY pacing is employed, wherein a hardware timer paces out the release of R_RDYs such that incoming traffic is smoothed out while not exceeding the rate ‘R’.
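A rough way to picture pacing is to compare a burst release with a timed release of the same pending R_RDYs. The sketch below assumes the simplest possible pacing rule, a fixed interval of 1/R seconds between R_RDYs; the actual hardware timer behaviour is not described here, and the function name `paced_release_times` is hypothetical.

```python
from typing import List


def paced_release_times(pending: int, start: float, rate: float) -> List[float]:
    """Return release times (seconds) for pending R_RDYs, one every 1/rate seconds.

    Assumption: a fixed inter-R_RDY gap of 1/rate, so the peer can send
    at most `rate` frames per second in response.
    """
    if rate <= 0:
        raise ValueError("rate must be positive")
    interval = 1.0 / rate
    return [start + i * interval for i in range(pending)]


pending_r_rdys = 4
burst = [1.0] * pending_r_rdys  # unpaced: everything is released at once
paced = paced_release_times(pending_r_rdys, start=1.0, rate=4.0)
print(burst)
print(paced)
```

In the burst case, the peer receives four credits at the same instant and can send four frames back to back; in the paced case, the credits are spread over time, so the frames they trigger arrive no faster than the assumed rate.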

By using ingress rate limiting and credit pacing on a port, the Cisco MDS ASICs ensure that a host/storage port is only able to send frames at a rate <= ‘R’ on that port. The diagrams below illustrate how the scheme works.

A. Port buffer occupancy is below the lower threshold

Figure 3: Port buffer below Low threshold

B. Port buffer occupancy is above the high threshold

Figure 4: Port buffer above High threshold

C. Port buffer occupancy is between Low and High threshold. Host can only send at rate <= R

Figure 5: Port buffer between High and Low threshold with R_RDY pacing

Source: cisco.com
