This is the final part of the series on High Performance Data Center Design. We will look at how high performance, high availability and flexibility allow customers to scale up or scale out over time without any disruption to the existing infrastructure. MDS 9710 capabilities are field proven, with wide adoption and a steep ramp within the first year of its introduction. Furthermore, Cisco has not only established itself as a strong player in the SAN space with industry-first innovations such as VSAN, IVR, FCoE and Unified Ports, introduced over the last 12 years, but also holds the leading market share in SAN.

Before we look at some architecture examples, let's start with the basic tenets any director-class switch should support when it comes to scalability and supporting future customer needs:

◉ The design should be flexible enough to Scale Up (increase performance) or Scale Out (add more ports)

◉ The process should not disrupt the current installation in terms of cabling, performance impact or downtime

◉ Design principles such as oversubscription ratio, latency and throughput predictability (for example, from host edge to core) shouldn’t be compromised at the port level or the fabric level

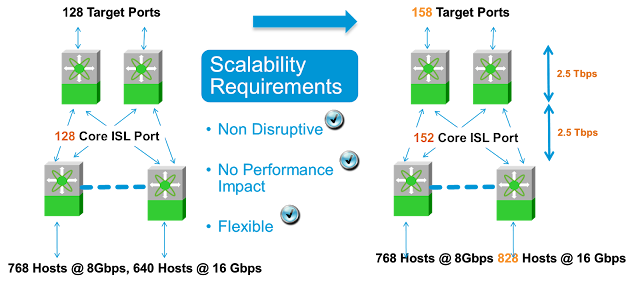

Let's take a scale-out example, where the customer wants to add 16G ports down the road. For this example I have used a core-edge design with 4 edge MDS 9710s and 2 core MDS 9710s. There are 768 hosts running at 8 Gbps and 640 hosts running at 16 Gbps connected to the 4 edge MDS 9710s, for a total of 16 Tbps of connectivity. With an 8:1 oversubscription ratio from edge to core, the design requires 2 Tbps of edge-to-core connectivity. The 2 core systems are connected to the edge and to the targets using 128 ports running at 16 Gbps in each direction. The picture below shows the connectivity.
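The sizing arithmetic above can be reproduced with a few lines of Python. This is only a sketch of the calculation, not a Cisco tool; the function names `edge_bandwidth_gbps` and `links_needed` are made up for illustration.

```python
import math

def edge_bandwidth_gbps(host_groups):
    """Total host-facing bandwidth for a list of (host_count, speed_gbps) pairs."""
    return sum(count * speed for count, speed in host_groups)

def links_needed(edge_gbps, oversubscription, link_gbps):
    """Smallest link count that keeps edge:core at or below the given ratio."""
    return math.ceil(edge_gbps / (oversubscription * link_gbps))

# Host population from the example: 768 hosts at 8G and 640 hosts at 16G.
host_groups = [(768, 8), (640, 16)]

edge_gbps = edge_bandwidth_gbps(host_groups)   # host edge bandwidth in Gbps
core_gbps = edge_gbps / 8                      # 8:1 oversubscription
core_links = links_needed(edge_gbps, oversubscription=8, link_gbps=16)

print(f"host edge bandwidth: {edge_gbps / 1000:.1f} Tbps")
print(f"edge-to-core bandwidth: {core_gbps / 1000:.1f} Tbps")
print(f"16G edge-to-core links: {core_links}")
```

The result lines up with the roughly 16 Tbps at the edge, 2 Tbps into the core, and 128 ports at 16 Gbps quoted in the example.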

Down the road, the data center requires 188 more ports running at 16G. These 188 ports are added to a new edge director (or to open slots in the existing directors), which is then connected to the core switches with 24 additional edge-to-core connections. The same is done on the target side with 24 additional 16G target ports. The fact that this scale-out is not disruptive to the existing infrastructure is extremely important. In any scale-out or scale-up case there is minimal impact, if any, on the existing chassis layout, data path, cabling, throughput and latency. For example, if the customer doesn’t want to string additional cables between the core and edge directors, they can upgrade to higher-speed cards (32G FC or 40G FCoE with BiDi) and get double the bandwidth on the existing cable plant.
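As a rough check of the expansion numbers, the sketch below works out how many extra 16G edge-to-core links the 188 new ports need at 8:1, and whether the original 128 cables would cover the grown fabric if they ran at 32G instead. The helper name `links_needed` is hypothetical, and the 128-cable figure is taken from the initial design described earlier.

```python
import math

def links_needed(edge_gbps, oversubscription, link_gbps):
    """Smallest link count that keeps edge:core at or below the given ratio."""
    return math.ceil(edge_gbps / (oversubscription * link_gbps))

# Option 1: add cables. 188 new host ports at 16G, 8:1 into the core.
new_edge_gbps = 188 * 16
extra_16g_links = links_needed(new_edge_gbps, oversubscription=8, link_gbps=16)

# Option 2: keep the cable plant and raise the link speed to 32G.
total_edge_gbps = 768 * 8 + 640 * 16 + new_edge_gbps
required_core_gbps = total_edge_gbps / 8
existing_cables = 128
capacity_at_32g = existing_cables * 32
no_new_cables_needed = capacity_at_32g >= required_core_gbps

print(f"extra 16G links: {extra_16g_links}")
print(f"required core bandwidth: {required_core_gbps:.0f} Gbps")
print(f"existing cables at 32G: {capacity_at_32g} Gbps, enough: {no_new_cables_needed}")
```

Under these assumptions the first option gives the 24 additional connections mentioned above, and the second shows why doubling the speed on the existing cables leaves headroom.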

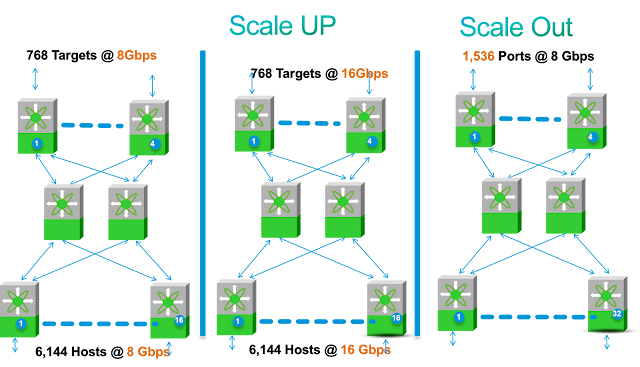

Let's look at another example, where the customer wants to scale up (i.e. increase the performance of the connections). Let's use an edge-core-edge design for this example. There are 6,144 hosts running at 8 Gbps distributed over 10 edge MDS 9710s, resulting in a total of 49 Tbps of edge bandwidth. Let's assume that this data center uses an oversubscription ratio of 16:1 from the edge into the core. To satisfy that requirement, the administrator designed the data center with 2 core switches of 192 ports each, running at 3 Tbps. Let's also assume that in the initial design the customer connected 768 storage ports running at 8G.
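A quick way to sanity-check a design like this is to compute the oversubscription ratio it actually delivers. The sketch below assumes the 192 host-facing core ports run at 16 Gbps, which is not stated outright but is consistent with the 3 Tbps figure; `oversubscription_ratio` is an illustrative helper, not part of any product.

```python
def oversubscription_ratio(host_count, host_gbps, core_ports, core_port_gbps):
    """Ratio of host edge bandwidth to edge-to-core bandwidth."""
    edge_gbps = host_count * host_gbps
    core_gbps = core_ports * core_port_gbps
    return edge_gbps / core_gbps

# 6,144 hosts at 8G against 192 core-facing ports (assumed to run at 16G).
ratio = oversubscription_ratio(6144, 8, core_ports=192, core_port_gbps=16)
print(f"edge-to-core oversubscription: {ratio:.0f}:1")
```

With those inputs the design lands on the 16:1 target.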

A few years down the road, the customer may want to add 6,144 more 8G ports and keep the same oversubscription ratios. This has to be implemented in a non-disruptive manner, without any performance degradation on the existing infrastructure (in either throughput or latency) and without any constraints regarding protocol, optics and connectivity. In this scenario the host edge connectivity doubles and the host edge bandwidth increases to 98 Tbps. The data center admin has multiple options for addressing the resulting increase in core bandwidth to 6 Tbps: add more 16G ports (192 more, to be precise), or preserve the cabling and use 32G connectivity for host-edge-to-core and core-to-target-edge connectivity on the same chassis. The admin can just as easily use 40G FCoE at that time to meet the bandwidth needs in the core of the network without any forklift upgrade.

Alternatively, the customer may want to upgrade to 16G connectivity on the hosts and keep the same oversubscription ratios. With 16G connectivity the host edge bandwidth increases to 98 Tbps, and the data center administrator has the same flexibility regarding protocol, cabling and speeds.
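Both growth paths double the host edge bandwidth, so they put the same demand on the core. The sketch below compares the two core options for each path at 16:1: adding 16G ports, or keeping the cable count and moving to 32G. It assumes the existing 192 core-facing ports run at 16G, and `core_ports_for` is a hypothetical helper.

```python
import math

def core_ports_for(edge_gbps, oversubscription, port_gbps):
    """Core-facing ports needed to hold the given oversubscription ratio."""
    return math.ceil(edge_gbps / (oversubscription * port_gbps))

EXISTING_16G_PORTS = 192  # from the initial design (speed is an assumption)

scenarios = {
    "add 6,144 more 8G hosts": (6144 + 6144) * 8,
    "upgrade 6,144 hosts to 16G": 6144 * 16,
}

results = {}
for name, edge_gbps in scenarios.items():
    ports_16g = core_ports_for(edge_gbps, 16, 16)
    ports_32g = core_ports_for(edge_gbps, 16, 32)
    results[name] = {
        "edge_tbps": edge_gbps / 1000,
        "core_tbps": edge_gbps / 16 / 1000,
        "extra_16g_ports": ports_16g - EXISTING_16G_PORTS,
        "ports_at_32g": ports_32g,
    }
    print(name, results[name])
```

Either way the core has to grow to about 6 Tbps, which means 192 additional 16G ports or the same 192 cables running at 32G.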

For either option the disruption is minimal. In real life there will be a mix of requirements on the same fabric, some scale-out and some scale-up. In those circumstances data center admins have the same flexibility and options. With a chassis life of more than a decade, customers can upgrade to higher speeds when they need to, without disruption and with maximum flexibility. The figure below shows how easily customers can Scale Up or Scale Out.

As these examples show, the Cisco MDS solution gives customers the ability to Scale Up or Scale Out in a flexible, non-disruptive way.