In the not-too-distant past, everything in the application and networking stack was under IT’s control. Workloads lived securely in the on-premises data center, people sat in their campus offices connected to the secure wireless network, and an MPLS service with an SLA connected branch offices to the data center and to each other.

Today, workforce productivity depends on cloud and SaaS applications that often rely on public cloud infrastructure, which in turn depends on the internet for part or all of the WAN connectivity. The internet paths depend on a multitude of ISPs, CDNs, and advanced network services. Hybrid and cloud-native applications are mostly containerized, so performance can be affected by the communication paths among the microservices, both in the data center and in the cloud. The total application experience as perceived by the workforce depends on the performance of all the application components and network connections acting in concert. If one element falters, the whole experience can suffer.

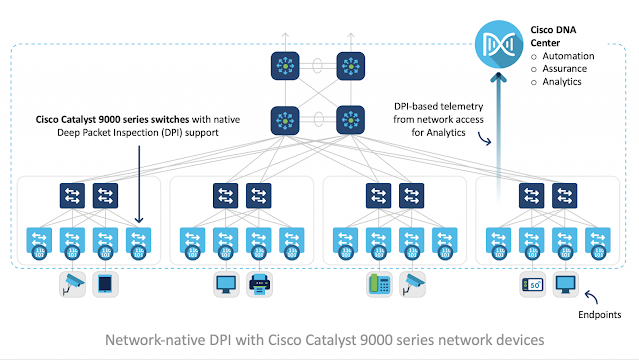

NetOps and DevOps need to understand the interdependencies among the component applications and tune the enterprise network and internet paths accordingly. A unifying view can only be provided by the network fabric that monitors and analyzes the full stack of interlacing components: from the foundational network data layer to the software-defined WAN to application containers in the cloud. With the workforce accessing applications from literally everywhere, all the time, IT requires pervasive, real-time monitoring of network, internet, and application performance with auto-healing capabilities. This is Deep Network Visibility, driven by software-defined controllers and network analytics that enable action, policy, and automation.

Visibility Begins with a Comprehensive Historical View

To improve application experience, IT needs tools to record, analyze, and report on network and application activity at a massive scale to build a deep historical data set against which to apply AI and Machine Reasoning tools. Hybrid and cloud applications consist of multiple micro-components connected by east-west traffic in the data center or cloud service. Continuous monitoring and analysis are needed to optimize application experience because many inter-application communication issues are transitory and difficult to replicate. Application performance needs to be recorded for machine analysis to determine recurring issues and root causes. Deep Network Visibility from the perspective of the application requires:

◉ Application experience as measured by ThousandEyes, NetFlow, and AppDynamics.

◉ Dependency graph to the underlying composite application services and infrastructures.

◉ Comprehensive availability and performance data on each of the supporting components, such as composite application services, public cloud services, ISPs, networking devices, and compute and storage infrastructure.
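To make the dependency-graph idea concrete, here is a minimal Python sketch of how an application's health could be traced through its supporting components. The component names, the `DEPENDS_ON` and `HEALTHY` tables, and the `unhealthy_dependencies` function are all hypothetical illustrations; they are not part of ThousandEyes, NetFlow, AppDynamics, or any Cisco product API.

```python
from collections import deque

# Hypothetical dependency graph: each key depends on the components it lists.
DEPENDS_ON = {
    "crm-app": ["auth-service", "orders-service"],
    "auth-service": ["cloud-db"],
    "orders-service": ["cloud-db", "isp-link"],
    "cloud-db": [],
    "isp-link": [],
}

# Hypothetical health status per component, as a monitoring tool might report it.
HEALTHY = {
    "crm-app": True,
    "auth-service": True,
    "orders-service": True,
    "cloud-db": True,
    "isp-link": False,
}


def unhealthy_dependencies(app, depends_on, healthy):
    """Return unhealthy components reachable from `app`, in breadth-first order."""
    seen = {app}
    queue = deque([app])
    failing = []
    while queue:
        node = queue.popleft()
        # A component with no reported status is treated as unhealthy (an assumption).
        if not healthy.get(node, False):
            failing.append(node)
        for dep in depends_on.get(node, []):
            if dep not in seen:
                seen.add(dep)
                queue.append(dep)
    return failing


print(unhealthy_dependencies("crm-app", DEPENDS_ON, HEALTHY))
```

In this toy data set, the application itself reports as healthy, yet the walk surfaces a failing ISP link two levels down, which is the kind of hidden dependency the list above is meant to expose.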

The irony of having mountains of telemetry and activity logs awaiting analysis by overworked IT teams is that there is too much noise in too much data for humans to deal with in a timely manner. When the volume of data is beyond human scale and the signals are below human sensitivity, machine reasoning (MR) can automate the analysis of trillions of bytes of switch and router telemetry, wireless radio fingerprints, and access point interference to uncover patterns in the chaos and turn the findings into actionable insights and automated mitigation actions.

Automated Visibility with AI Network Analytics

To make full use of the deep historical and real-time data, IT can take advantage of an analytics software stack that can:

◉ Use purpose-built applications to augment human engineers in NetSecOps with insights into network performance and security vulnerabilities.

◉ Leverage machine-speed analytics and a knowledge-based Machine Reasoning Engine (MRE) to relieve NetSecOps of mundane monitoring tasks so that they can focus on proactive digital transformation projects with DevOps.

◉ Achieve massive collection, storage, and analysis of diverse data lakes (collections of anonymized network and application telemetry based on volume, velocity, and variety of data) to compare performance and security metrics.

For several decades, Cisco has been building a data lake of worldwide, anonymized customer telemetry in parallel with a knowledge base of expert troubleshooting experience, both of which are available to machine reasoning algorithms under the command and control of Cisco DNA Center. With Cisco AI Network Analytics, NetOps can, for example, be forewarned of increases in Wi-Fi interference, network bottlenecks, uneven device onboarding times, and office traffic loads in the more traditional data center and campus network environments.
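As a rough illustration of what being "forewarned" of a metric increase can mean, the Python sketch below flags samples that deviate sharply from a recent baseline. It is a simple rolling z-score used as a stand-in, not the method used by Cisco AI Network Analytics; the function name `flag_anomalies`, the `window` and `threshold` parameters, and the sample interference readings are all assumptions made for the example.

```python
from statistics import mean, stdev


def flag_anomalies(samples, window=5, threshold=3.0):
    """Return indices of samples that deviate from the trailing-window
    baseline by more than `threshold` standard deviations."""
    flagged = []
    for i in range(window, len(samples)):
        baseline = samples[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # Flat baseline: a z-score is undefined, so flag any change at all.
            if samples[i] != mu:
                flagged.append(i)
            continue
        if abs(samples[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical Wi-Fi interference readings (arbitrary units), one per interval.
interference = [10, 11, 10, 12, 11, 10, 11, 30, 11, 10]
print(flag_anomalies(interference))  # [7]
```

Here only the spike at index 7 is flagged. A production system would compare against much richer baselines, such as the historical and peer data sets described above, rather than a five-sample window.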