The modern data center has quickly evolved into the complex environment seen today, with extremely fast, high-density switching pushing large volumes of traffic and multiple layers of virtualization and overlays. The result is a highly abstract network that can be difficult to monitor, secure, and troubleshoot. At the same time, networking, security, operations, and applications teams are being asked to increase their operational efficiency and secure an ever-expanding attack surface inside the data center. Cisco Tetration™ is a modern approach to solving these challenges without compromising agility.

It’s been almost two years since Cisco publicly announced Cisco Tetration™, and after eight code releases there are many innovations, deployment options, and new capabilities to be excited about.

Container use is one of the fastest-growing technology trends inside data centers. With the recently released Cisco Tetration code (version 2.3.x), containers join an already comprehensive list of streaming telemetry sources for data collection. Cisco Tetration now supports visibility and enforcement for container workloads, which is the focus of this blog.

Protecting data center workloads

Most cybersecurity experts agree that data centers are especially susceptible to lateral attacks from bad actors who attempt to take advantage of security gaps or lack of controls for east-west traffic flows. Segmentation, whitelisting, zero-trust, micro-segmentation, and application segmentation are all terms used to describe a security model that, by default, has a “deny all,” catch-all policy – an effective defense against lateral attacks.

However, segmentation is the final act, so to speak. The opening act? Discovery of policies and inventory through empirical data (traffic flows on the network and host/workload contextual data) to accurately build, validate, and enforce intent.
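As a rough illustration of that opening act, the following Python sketch aggregates observed flow records into candidate allow rules keyed by application tier, counting how many flows support each rule. The `Flow` record, the `INVENTORY` mapping, and `discover_rules` are hypothetical simplifications made up for this example; they are not Cisco Tetration’s data model or discovery algorithm.

```python
from collections import Counter
from typing import NamedTuple


class Flow(NamedTuple):
    """One observed flow record (hypothetical, simplified schema)."""
    src_ip: str
    dst_ip: str
    dst_port: int
    protocol: str


# Hypothetical inventory: IP address -> application tier.
INVENTORY = {
    "10.0.0.10": "web-proxy",
    "10.0.0.20": "app",
    "10.0.0.30": "db-proxy",
    "10.0.0.41": "db",
}


def discover_rules(flows, inventory):
    """Aggregate observed flows into candidate allow rules.

    Each rule is (consumer tier, provider tier, port, protocol); the count
    records how many observed flows support it. Flows involving an IP that
    is not in the inventory are skipped rather than guessed at.
    """
    rules = Counter()
    for f in flows:
        consumer = inventory.get(f.src_ip)
        provider = inventory.get(f.dst_ip)
        if consumer is None or provider is None:
            continue
        rules[(consumer, provider, f.dst_port, f.protocol)] += 1
    return rules


if __name__ == "__main__":
    observed = [
        Flow("10.0.0.30", "10.0.0.41", 3306, "TCP"),
        Flow("10.0.0.30", "10.0.0.41", 3306, "TCP"),
        Flow("10.0.0.20", "10.0.0.30", 3306, "TCP"),
        Flow("192.0.2.7", "10.0.0.41", 22, "TCP"),  # unknown source: skipped
    ]
    for rule, count in sorted(discover_rules(observed, INVENTORY).items()):
        print(rule, count)
```

Counting supporting flows, rather than keeping a plain set, makes it easier to spot rules backed by only a handful of observations before they are promoted to policy.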

Tim Garner, a technical marketing engineer from the Cisco Tetration Business Unit, has written an excellent blog on why segmentation matters and how to achieve good data center hygiene.

Important takeaway #1: To reduce the overall attack surface inside the data center, the blast radius of any compromised endpoint must be limited by eliminating any unnecessary lateral communication. The discovery and implementation of extremely fine-grained security policies is an effective but not easily achieved approach.

Important takeaway #2: A holistic approach to hybrid cloud workload security must be agnostic to infrastructure and inclusive of current and future-facing workloads.

To learn more about how Cisco Tetration provides lateral security for hybrid cloud workloads, including containers, read on!

On to container support within Cisco Tetration . . .

The objective? To demonstrate visibility and enforcement across current and future workloads, that is, workloads that are both virtual and containerized. To simulate a real-world application, the following deployment of a WordPress application called BorgPress is used.

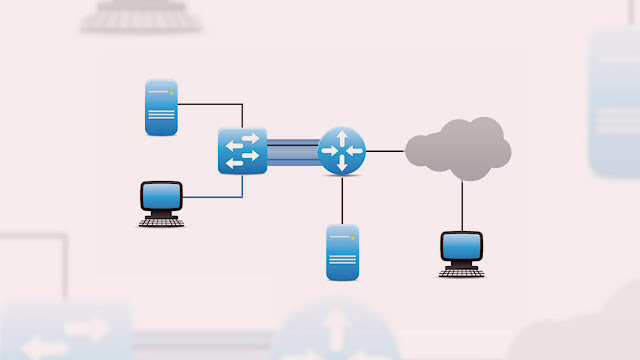

A logical application flow diagram is a common way to track how an application evolves over its lifespan, although such diagrams are often difficult to keep up to date. The diagram below documents the logical flow between the application tiers of BorgPress. Network or security engineers who implement the firewall or security-engine rules that allow required network communications rely on such diagrams.

Developers are rapidly adopting Kubernetes, the open-source platform originally developed by Google, for managing containerized applications and services. Meanwhile, bare metal servers still play a significant role 15 years after virtualization technology arrived. As container adoption grows, applications are expected to be deployed as hybrids: a combination of bare metal, virtual, and containerized workloads. BorgPress is therefore deployed as a hybrid.

The WordPress web tier of BorgPress is deployed as containers inside a Kubernetes cluster. The proxy and database tiers are deployed as virtual machines.

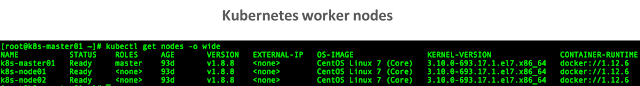

The Kubernetes environment is made up of one master node and two worker nodes.

Discovering application policies is a more manageable task for containerized applications than for traditional workload types (bare metal or virtual machines). This is because container orchestrators deploy applications from declarative object configuration files, and these files contain embedded information about which ports are used. For example, BorgPress uses a YAML file (specifically, a ReplicaSet object, as shown in the figure below) to describe how many wordpress containers to deploy and on which port (port 80) to expose each container.
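To show why declarative files make port discovery easier, the sketch below reads the replica count and the declared container ports straight out of a ReplicaSet manifest. The manifest is written as the Python dictionary that a YAML parser would return for such a file; the object name, labels, and image are hypothetical stand-ins for the BorgPress file in the figure. The fallbacks mirror Kubernetes defaults (1 replica, TCP protocol).

```python
# A ReplicaSet manifest in the shape a YAML parser would return.
# Name, labels, and image are hypothetical stand-ins for the BorgPress file.
REPLICASET = {
    "apiVersion": "apps/v1",
    "kind": "ReplicaSet",
    "metadata": {"name": "borgpress-web", "labels": {"app": "borgpress", "tier": "web"}},
    "spec": {
        "replicas": 3,
        "selector": {"matchLabels": {"app": "borgpress", "tier": "web"}},
        "template": {
            "metadata": {"labels": {"app": "borgpress", "tier": "web"}},
            "spec": {
                "containers": [
                    {"name": "wordpress", "image": "wordpress", "ports": [{"containerPort": 80}]}
                ]
            },
        },
    },
}


def declared_ports(manifest: dict):
    """Return (replicas, [(container name, port, protocol), ...]) from a ReplicaSet-like manifest."""
    spec = manifest["spec"]
    replicas = spec.get("replicas", 1)  # Kubernetes defaults to 1 replica
    ports = []
    for container in spec["template"]["spec"]["containers"]:
        for port in container.get("ports", []):
            # Kubernetes defaults the port protocol to TCP when omitted.
            ports.append((container["name"], port["containerPort"], port.get("protocol", "TCP")))
    return replicas, ports


if __name__ == "__main__":
    print(declared_ports(REPLICASET))
```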

To give external users access to the BorgPress application, Kubernetes uses a Service object of type NodePort, which exposes a port allocated from a default range of 30000-32767 on every worker node. Traffic that the Kubernetes worker nodes receive on port 30000 (the NodePort on which the BorgPress service listens for incoming requests) is load-balanced to one of the three BorgPress endpoints.
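The toy model below captures the two NodePort properties described above: the default 30000-32767 range (configurable in Kubernetes through the kube-apiserver `--service-node-port-range` flag) and the spreading of traffic arriving on one NodePort across pod endpoints. It is a sketch under simplifying assumptions: round-robin is used for readability, whereas kube-proxy’s real endpoint selection depends on its proxy mode, and the endpoint addresses are hypothetical.

```python
import itertools

# Kubernetes' default NodePort range; range() excludes the stop value,
# so this covers 30000 through 32767 inclusive.
NODEPORT_RANGE = range(30000, 32768)


def check_node_port(port: int) -> int:
    """Reject ports outside the default NodePort range."""
    if port not in NODEPORT_RANGE:
        raise ValueError(f"NodePort {port} is outside the default range 30000-32767")
    return port


class NodePortService:
    """Toy model of a NodePort service spreading connections across endpoints.

    Round-robin is a simplification; kube-proxy's actual selection
    behavior depends on its proxy mode.
    """

    def __init__(self, node_port: int, endpoints):
        self.node_port = check_node_port(node_port)
        self._next_endpoint = itertools.cycle(endpoints)

    def route(self, dst_port: int):
        """Return the endpoint chosen for a connection, or None if the port doesn't match."""
        if dst_port != self.node_port:
            return None
        return next(self._next_endpoint)


if __name__ == "__main__":
    # Hypothetical pod endpoints for the three BorgPress wordpress replicas.
    svc = NodePortService(30000, ["10.244.1.5:80", "10.244.1.6:80", "10.244.2.7:80"])
    print([svc.route(30000) for _ in range(4)])
```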

Orchestrator integration

In a container ecosystem, workloads are mutable and often short-lived, and IP addresses come and go. The same IP that is assigned to workload A might, in the blink of an eye, be assigned to workload B. Policies in a container environment must therefore be flexible and capable of being applied dynamically. An abstract, declarative policy hides the underlying complexity: lower-level constructs, such as IP addresses, are given context through labels, tags, or annotations. This allows humans to describe a simplified policy and lets systems translate that policy into concrete rules.
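A minimal sketch of that translation, assuming a policy is written against label selectors and resolved against whatever IP-to-label mapping exists at that moment. `Rule`, `matches`, and `resolve` are hypothetical names used only for illustration, as are the labels and IPs.

```python
from dataclasses import dataclass


@dataclass
class Rule:
    """Abstract allow rule expressed with label selectors instead of IPs (hypothetical)."""
    consumer: dict
    provider: dict
    port: int
    protocol: str = "TCP"


def matches(labels: dict, selector: dict) -> bool:
    """True if the workload carries every key/value pair in the selector."""
    return all(labels.get(k) == v for k, v in selector.items())


def resolve(rule: Rule, workloads: dict) -> set:
    """Expand a label-based rule into concrete (src_ip, dst_ip, port, protocol) tuples
    using the current IP -> labels mapping."""
    srcs = [ip for ip, labels in workloads.items() if matches(labels, rule.consumer)]
    dsts = [ip for ip, labels in workloads.items() if matches(labels, rule.provider)]
    return {(s, d, rule.port, rule.protocol) for s in srcs for d in dsts}


if __name__ == "__main__":
    rule = Rule(consumer={"tier": "web-proxy"}, provider={"tier": "wordpress"}, port=80)
    workloads = {"10.0.0.10": {"tier": "web-proxy"}, "10.244.1.5": {"tier": "wordpress"}}
    print(resolve(rule, workloads))
    # The IP is reused by an unrelated workload and a new wordpress pod appears.
    workloads["10.244.1.5"] = {"tier": "batch"}
    workloads["10.244.2.9"] = {"tier": "wordpress"}
    print(resolve(rule, workloads))
```

Because the rule references labels rather than IPs, re-running `resolve` after an IP is reassigned yields the updated concrete rules without editing the policy itself.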

Cisco Tetration supports an automated method of adding meaningful context through user annotations. These annotations can be manually uploaded or dynamically learned in real time from external orchestration systems. The following orchestrators are supported by Cisco Tetration (others can also be integrated through an open RESTful API):

◈ VMware vCenter

◈ Amazon Web Services

In addition, Kubernetes and OpenShift are now supported as external orchestrators. When an external orchestrator is added for a Kubernetes or OpenShift cluster (through Cisco Tetration’s user interface), Cisco Tetration connects to the cluster’s API server and ingests metadata, which is automatically converted to annotations prefixed with an “orchestrator_” tag.
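The sketch below shows the general idea of turning Kubernetes pod metadata into prefixed annotations. The pod dictionary shape and the `orchestrator_namespace` and `orchestrator_pod_name` keys are assumptions for illustration; the exact annotation keys Cisco Tetration generates may differ.

```python
def to_annotations(pod: dict, prefix: str = "orchestrator_") -> dict:
    """Flatten pod metadata into prefixed annotation key/value pairs.

    The pod dict shape ({"name", "namespace", "labels"}) and the generated
    key names are assumptions made for this sketch.
    """
    annotations = {
        f"{prefix}namespace": pod["namespace"],
        f"{prefix}pod_name": pod["name"],
    }
    # Every Kubernetes label becomes its own prefixed annotation.
    for key, value in pod.get("labels", {}).items():
        annotations[f"{prefix}{key}"] = value
    return annotations


if __name__ == "__main__":
    pod = {
        "name": "borgpress-web-abcde",  # hypothetical pod name
        "namespace": "default",
        "labels": {"app": "borgpress", "tier": "web"},
    }
    print(to_annotations(pod))
```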

In the example below, filters are created and used within the BorgPress application workspace to build abstract security rules that, when enforced, implement a zero-trust policy.

Data collection and flow search

To support container workloads, the same Cisco Tetration agent that collects flow and process information on the host OS is now container-aware and collects the same information for containers. Flows are stored in a data lake that can be queried using out-of-the-box filters or directly with annotations learned from the Kubernetes cluster.
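A flow search of this kind can be approximated as filtering flow records by the annotations attached to each side of the flow. The sketch below is a hypothetical, in-memory stand-in for the data lake query, assuming every flow record carries source and destination IPs that can be looked up in an annotation table; the field names and annotation keys are made up.

```python
def has_all(annotations: dict, wanted: dict) -> bool:
    """True if every requested annotation key has the requested value."""
    return all(annotations.get(k) == v for k, v in wanted.items())


def search(flows, annotations, consumer=None, provider=None):
    """Filter flows by the annotations of their consumer (source) and provider (destination).

    flows: list of dicts with "src", "dst", and "port" keys (hypothetical schema).
    annotations: dict mapping IP -> annotation dict; unknown IPs have none.
    An omitted filter matches every flow.
    """
    consumer = consumer or {}
    provider = provider or {}
    return [
        f for f in flows
        if has_all(annotations.get(f["src"], {}), consumer)
        and has_all(annotations.get(f["dst"], {}), provider)
    ]


if __name__ == "__main__":
    annotations = {
        "10.0.0.10": {"user_tier": "web-proxy"},
        "10.244.1.5": {"orchestrator_app": "borgpress"},
    }
    flows = [
        {"src": "10.0.0.10", "dst": "10.244.1.5", "port": 80},
        {"src": "10.0.0.10", "dst": "10.0.0.99", "port": 22},
    ]
    print(search(flows, annotations, provider={"orchestrator_app": "borgpress"}))
```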

Policy definition and enforcement

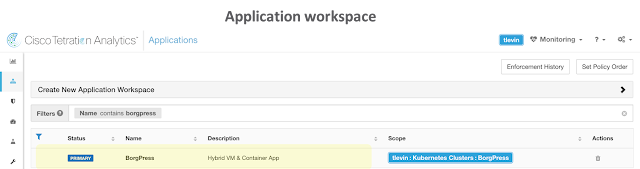

Application workspaces are objects for defining, analyzing, and enforcing policies for a particular application. BorgPress contains a total of 6 virtual machines, 3 containers, and 15 IP addresses.

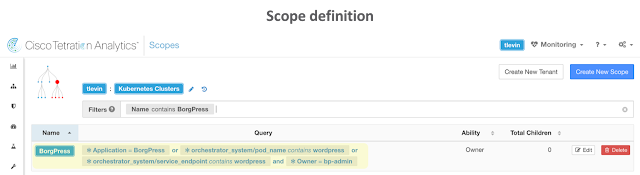

Scopes are used to determine the set of endpoints that are pulled into the application workspace and thus are affected by the created policies that are later enforced.

In the example below, a scope named BorgPress is created that identifies any endpoint matching the four defined queries. The queries for the BorgPress scope are based on custom annotations that have been both manually uploaded and learned dynamically.
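A scope can be thought of as a predicate over an endpoint’s annotations. The sketch below assumes the queries are combined with `any` (an endpoint joins the scope if it matches at least one query), which lets manually uploaded annotations on VMs and learned annotations on pods pull both kinds of endpoint in. How a real scope combines its queries depends on how it is defined, so the combiner is a parameter. The annotation keys and IPs are hypothetical.

```python
from typing import Callable, Dict, List

Annotations = Dict[str, str]
Query = Callable[[Annotations], bool]


def equals(key: str, value: str) -> Query:
    """Build a query that matches endpoints whose annotation `key` equals `value`."""
    return lambda ann: ann.get(key) == value


def scope_members(inventory: Dict[str, Annotations], queries: List[Query], combine=any) -> List[str]:
    """Return the sorted IPs whose annotations satisfy the queries.

    `combine` decides how query results are joined: `any` (this sketch's
    assumption) or `all`.
    """
    return sorted(ip for ip, ann in inventory.items() if combine(q(ann) for q in queries))


if __name__ == "__main__":
    inventory = {
        "10.0.0.41": {"user_app": "BorgPress"},          # VM, manually uploaded annotation
        "10.244.1.5": {"orchestrator_app": "borgpress"},  # pod, learned annotation
        "10.0.0.99": {"user_app": "Other"},
    }
    queries = [equals("user_app", "BorgPress"), equals("orchestrator_app", "borgpress")]
    print(scope_members(inventory, queries))
```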

Once a scope is created, the application workspace is built and associated with the scope. In the example below, a BorgPress application workspace is created and tied to the BorgPress scope.

Policies are defined inside the application workspace using prebuilt filters to build segmentation rules. In the example below, five default policies define the set of rules BorgPress needs to function, based on the logical application diagram discussed earlier. The orange boxes with a red border are filters that describe the BorgPress wordpress tier, which abstracts, or contains, the container endpoints. The highlighted yellow box shows a single rule that allows any BorgPress database server (there are three virtual machine endpoints in this tier) to provide a service on port 3306 to the consumer, a BorgPress database HAProxy server.

To validate these policies, live policy analysis cross-examines every packet of a flow against the five policies, or intents, and classifies each flow as permitted, rejected, escaped, or misdropped by the network. This is performed in near-real time for all endpoints of the BorgPress application.
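The four classifications follow from two questions: does the policy allow the flow, and did the network actually deliver it? A minimal sketch, assuming a default-deny rule set of (consumer, provider, port) tuples; the flow record fields and tier names are hypothetical.

```python
from enum import Enum


class Verdict(Enum):
    PERMITTED = "permitted"    # allowed by policy, delivered by the network
    ESCAPED = "escaped"        # denied by policy, but delivered anyway
    REJECTED = "rejected"      # denied by policy, and dropped
    MISDROPPED = "misdropped"  # allowed by policy, but dropped by the network


def policy_allows(flow: dict, rules: set) -> bool:
    """Default deny: a flow is allowed only if some rule matches it exactly."""
    return (flow["consumer"], flow["provider"], flow["port"]) in rules


def classify(flow: dict, rules: set) -> Verdict:
    """Compare policy intent with what the network actually did."""
    if policy_allows(flow, rules):
        return Verdict.PERMITTED if flow["delivered"] else Verdict.MISDROPPED
    return Verdict.ESCAPED if flow["delivered"] else Verdict.REJECTED


if __name__ == "__main__":
    rules = {("db-proxy", "db", 3306)}
    flow = {"consumer": "web-proxy", "provider": "db", "port": 3306, "delivered": True}
    print(classify(flow, rules).value)
```

Escaped flows are the ones to investigate before enforcing: they are traffic that the intended policy would block but that the network currently lets through.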

Note that, up to this point, no policies are actually enforced. Traffic classification is simply a record of what occurred on the network relative to the policy you intend to enforce, which lets you confirm that the rules you ultimately enforce will work as intended. With a single click, Cisco Tetration can then provide holistic enforcement for BorgPress across both virtual and containerized workloads.

Not every rule needs to be implemented on every endpoint. Once “Enforce Policies” is enabled, each endpoint receives only its required set of rules, delivered to the agent over a secure channel. The agent uses the native firewall on the host OS (iptables or Windows Firewall) to translate and implement the policies.
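The idea that each endpoint receives only its required rules can be sketched as filtering a global rule list down to the rules in which that endpoint appears as provider (ingress) or consumer (egress). `ConcreteRule` and `rules_for` are hypothetical names, and the IPs are made up for illustration.

```python
from typing import List, NamedTuple, Tuple


class ConcreteRule(NamedTuple):
    """An allow rule already resolved to IPs (hypothetical representation)."""
    src: str
    dst: str
    port: int


def rules_for(endpoint: str, rules: List[ConcreteRule]) -> List[Tuple[str, str, int]]:
    """Select only the rules an endpoint needs.

    A rule matters to an endpoint if the endpoint is the provider (ingress)
    or the consumer (egress); every other rule is left off that endpoint.
    """
    selected = []
    for r in rules:
        if r.dst == endpoint:
            selected.append(("ingress", r.src, r.port))
        if r.src == endpoint:
            selected.append(("egress", r.dst, r.port))
    return selected


if __name__ == "__main__":
    rules = [
        ConcreteRule("10.0.0.30", "10.0.0.41", 3306),   # db proxy -> database
        ConcreteRule("10.0.0.10", "10.0.1.11", 30000),  # web proxy -> worker NodePort
        ConcreteRule("10.0.0.20", "10.0.0.30", 3306),   # app -> db proxy
    ]
    print(rules_for("10.0.0.30", rules))
```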

The set of rules can be viewed from within the Cisco Tetration user interface or directly on the endpoint. The example below shows the rules received and enforced for the BorgPress database endpoint db-mysql01, a virtual machine. The rules exactly match the policy built inside the application workspace and are translated into the correct IPs on the endpoint using iptables.
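For intuition, the sketch below renders ingress allow rules for a database endpoint as iptables commands, followed by a default-drop policy. The commands are returned as strings and never executed. The chain layout is a simplified assumption and the source IP is hypothetical; the rules Cisco Tetration actually installs (chain names, ordering) will look different.

```python
from typing import Iterable, List, Tuple


def render_ingress(allowed: Iterable[Tuple[str, int, str]]) -> List[str]:
    """Render allow-listed ingress as iptables commands ending in a default drop.

    allowed: (source IP, destination port, protocol) tuples.
    The commands are returned as strings only; nothing is executed.
    """
    cmds = [
        # Keep loopback traffic and replies to already-accepted connections working.
        "iptables -A INPUT -i lo -j ACCEPT",
        "iptables -A INPUT -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT",
    ]
    for src, port, proto in allowed:
        cmds.append(f"iptables -A INPUT -p {proto.lower()} -s {src} --dport {port} -j ACCEPT")
    # Zero trust: anything not explicitly allowed is dropped.
    cmds.append("iptables -P INPUT DROP")
    return cmds


if __name__ == "__main__":
    # Hypothetical IP of the database HAProxy allowed to reach db-mysql01 on 3306.
    for cmd in render_ingress([("10.0.0.30", 3306, "TCP")]):
        print(cmd)
```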

Now that we’ve seen the rules enforced on a virtual machine for BorgPress, let’s look at how enforcement is done on containers. Enforcement for containers happens at the container network namespace level. Because all containers in a Kubernetes pod share a network namespace, enforcement for BorgPress happens at the pod level. BorgPress has three wordpress pods running in the Kubernetes default namespace.

Just as with virtual machines, we can view the enforcement rules either in the Cisco Tetration user interface or on the endpoint. In the example below, the user interface shows the host profile of one of the Kubernetes worker nodes, k8s-node02. With container support, a new tab next to the Enforcement tab, “Container Enforcement,” lists the rules enforced on each pod.

At this point, all endpoints, both virtual and container, have the necessary enforcement rules, and BorgPress is nearly deployed with a zero-trust security model. Earlier I discussed a type of Kubernetes service object called a NodePort, whose purpose is to expose the BorgPress wordpress service to users outside the cluster. As the logical application flow diagram illustrates, the Web-HAProxy receives incoming client requests and load-balances them to the NodePort that every Kubernetes worker node listens on. Because the NodePort is a dynamically allocated, high-numbered port, it can change over time, which presents a problem.

To make sure the Web-HAProxy always has the correct rule to allow outgoing traffic to the NodePort, Cisco Tetration learns about the NodePort through the external orchestrator. When policy is pushed to the Web-HAProxy, Cisco Tetration also pushes the correct rule to allow traffic to the NodePort. Note that the application workspace image earlier contains no policy definition or rule allowing communication from Web-HAProxy to BP-Web-Tier on NodePort 30000. However, the iptables rules on Web-HAProxy (see figure below) show that Cisco Tetration correctly added a rule to allow outgoing traffic to port 30000.
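The NodePort handling described above amounts to deriving egress rules from orchestrator state rather than from the workspace policy. A minimal sketch, assuming the Service is available as a dictionary shaped like the Kubernetes Service object and that the worker node IPs are known; the service name and IPs are hypothetical, and the commands are returned as strings rather than executed.

```python
def nodeport_egress(service: dict, worker_ips) -> list:
    """Build egress allow rules from a proxy to every worker node on each NodePort.

    service: dict shaped like a Kubernetes Service object (assumption).
    Ports without a nodePort (e.g. a ClusterIP-only port) are skipped.
    """
    cmds = []
    for port in service["spec"]["ports"]:
        node_port = port.get("nodePort")
        if node_port is None:
            continue
        proto = port.get("protocol", "TCP").lower()
        for ip in worker_ips:
            cmds.append(f"iptables -A OUTPUT -p {proto} -d {ip} --dport {node_port} -j ACCEPT")
    return cmds


if __name__ == "__main__":
    service = {
        "metadata": {"name": "borgpress-web"},  # hypothetical name
        "spec": {
            "type": "NodePort",
            "ports": [{"port": 80, "targetPort": 80, "nodePort": 30000, "protocol": "TCP"}],
        },
    }
    # Hypothetical IPs of the two worker nodes.
    for cmd in nodeport_egress(service, ["10.0.1.11", "10.0.1.12"]):
        print(cmd)
```

If the orchestrator later reports a different `nodePort`, regenerating the rules from the updated Service yields the new port without any change to the application workspace policy.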