SCTE’s Cable-Tec Expo 2019 is just around the corner (September 30th-October 3rd in New Orleans). Plan on a busy week given the wide range of technology on display in the exhibit hall, the 115 papers being presented in the Fall Technical Forum, numerous panel discussions on the Innovation Stage, keynote presentations during the opening General Session and annual awards luncheon, and so much more. If you’re a newcomer to the industry (or new to Cable-Tec Expo), you may find some of the jargon at the conference a bit overwhelming.

I’ve defined 16 terms that you need to know before you go to Cable-Tec 2019:

1. Multiple System Operator (MSO) – A corporate entity such as Charter, Comcast, Cox, and others that owns and/or operates more than one cable system. “MSO” is not intended to be a generic abbreviation for all cable companies, even though the abbreviation is commonly misused that way. A local cable system is not an MSO, either – although it might be owned by one – it’s just a cable system. An important point: All MSOs are cable operators, but not all cable operators are MSOs.

2. Hybrid Fiber/Coax (HFC) – A cable network architecture developed in the 1980s that uses a combination of optical fiber and coaxial cable to transport signals to/from subscribers. Prior to what we now call HFC, the cable industry used all-coaxial cable “tree-and-branch” architectures.

3. Wireless – Any service that uses radio waves to transmit/receive video, voice, and/or data in the over-the-air spectrum. Examples of wireless telecommunications technology include cellular (mobile) telephones, two-way radio, and Wi-Fi. Over-the-air broadcast TV and AM & FM radio are forms of wireless communications, too.

4. Wi-Fi 6 – The next generation of Wi-Fi technology, based upon the Institute of Electrical and Electronics Engineers (IEEE) 802.11ax standard (the sixth 802.11 standard, hence the “6” in Wi-Fi 6), that is said to support maximum theoretical data speeds upwards of 10 Gbps.

5. Data-Over-Cable Service Interface Specifications (DOCSIS®) – A family of CableLabs specifications for standardized cable modem-based high-speed data service over cable networks. DOCSIS is intended to ensure interoperability among various manufacturers’ cable modems and related headend equipment. Over the years the industry has seen DOCSIS 1.0, 1.1, 2.0, 3.0 and 3.1 (the latest deployed version), with DOCSIS 4.0 in the works.

6. Gigabit Service – A class of high-speed data service in which the nominal data transmission rate is 1 gigabit per second (Gbps), or 1 billion bits per second. Gigabit service can be asymmetrical (for instance, 1 Gbps in the downstream and a slower speed in the upstream) or symmetrical (1 Gbps in both directions). Cable operators around the world have for the past couple years been deploying DOCSIS 3.1 cable modem technology to support gigabit data service over HFC networks.

7. Full Duplex (FDX) DOCSIS – An extension to the DOCSIS 3.1 specification that supports transmission of downstream and upstream signals on the same frequencies at the same time, targeting data speeds of up to 10 Gbps in the downstream and 5 Gbps in the upstream! The magic of echo cancellation and other technologies allows signals traveling in different directions through the coaxial cable to simultaneously occupy the same frequencies.

8. Extended Spectrum DOCSIS (ESD) – Existing DOCSIS specifications spell out technical parameters for equipment operation on HFC network frequencies from as low as 5 MHz to as high as 1218 MHz (also called 1.2 GHz). Operation on frequencies higher than 1218 MHz is called extended spectrum DOCSIS, with upper frequency limits as high as 1794 MHz (aka 1.8 GHz) to 3 GHz or more! CableLabs is working on DOCSIS 4.0, which will initially spell out metrics for operation up to at least 1.8 GHz.

9. Cable Modem Termination System (CMTS) – An electronic device installed in a cable operator’s headend or hub site that converts digital data to/from the Internet to radio frequency (RF) signals that can be carried on the cable network. A converged cable access platform (CCAP) can be thought of as similar to a CMTS. Examples include Cisco’s uBR-10012 and cBR-8.

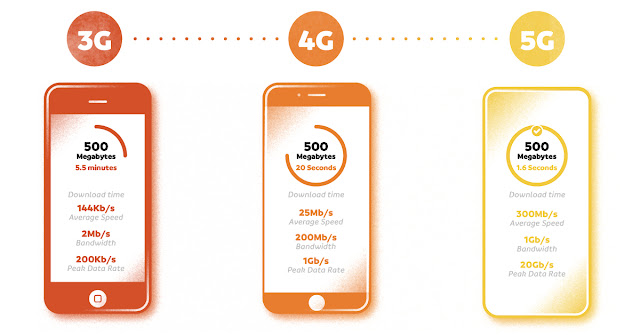

10. 5G – According to Wikipedia, “5G is the fifth generation cellular network technology.” You probably already have a smart phone or tablet that is compatible with fourth generation (4G) cellular technology, the latter sometimes called long term evolution (LTE). Service providers are installing new towers in neighborhoods to support 5G, which will provide their subscribers with much faster data speeds. Those towers have to be closer together (which means more of them) because of plans to operate on much higher frequencies than earlier generation technology. So, what does 5G have to do with cable? Plenty! For one thing, the cable industry is well-positioned to partner with telcos to provide “backhaul” interconnections between the new 5G towers and the telcos’ facilities. Those backhauls can be done over some of our fiber, as well as over our HFC networks using DOCSIS.

11. 10G – Not to be confused with 5G, this term refers to the cable industry’s broadband technology platform of the future that will deliver at least 10 gigabits per second to and from the residential premises. 10G supports better security and lower latency, and will take advantage of a variety of technologies such as DOCSIS 3.1, full duplex DOCSIS, wireless, coherent optics, and more.

12. Internet of Things (IoT) – IoT is simply the point in time when more ‘things or objects’ were connected to the Internet than people. Think of interconnecting and managing billions of wired and wireless sensors, embedded systems, appliances, and more. Making it all work, while maintaining privacy and security, and keeping power consumption to a minimum are among the challenges of IoT.

13. Distributed Access Architecture (DAA) – An umbrella term that, according to CableLabs, describes relocating certain functions typically found in a cable network’s headends and hub sites closer to the subscriber. Two primary types of DAA are remote PHY and flexible MAC architecture, described below. Think of the MAC (media access control) as the circuitry where DOCSIS processing takes place, and the PHY (physical layer) as the circuitry where DOCSIS and other RF signals are generated and received.

14. Remote PHY (R-PHY) – A subset of DAA in which a CCAP’s PHY layer electronics are separated from the MAC layer electronics, typically with the PHY electronics located in a separate shelf or optical fiber node. A remote PHY device (RPD) module or circuit is installed in a shelf or node, and the RPD functions as the downstream RF signal transmitter and upstream RF signal receiver. The interconnection between the RPD and the core (such as Cisco’s cBR-8) is via digital fiber, typically 10 Gbps Ethernet.

15. Flexible MAC Architecture (FMA) – Originally called remote MAC/PHY (in which the MAC and PHY electronics are installed in a node), FMA provides more flexibility regarding where MAC layer electronics can be located: headend/hub site, node (with the PHY electronics), or somewhere else.

16. Cloud – I remember seeing a meme online that defined the cloud a bit tongue-in-cheek as “someone else’s computer.” When we say cloud computing, that often means the use of remote computer resources, located in a third-party server facility and accessed via the Internet. Sometimes the server(s) might be in or near one’s own facility. What is called the cloud is used for storing data, computer processing, and for emulating certain functionality in software that previously relied upon dedicated local hardware.

There are many more terms and phrases you’ll see and hear at Cable-Tec Expo than can be covered here. If you find something that has you stumped, stop by Cisco’s booth (Booth 1301) and ask one of our experts.

1. Multiple System Operator (MSO) – A corporate entity such as Charter, Comcast, Cox, and others that owns and/or operates more than one cable system. “MSO” is not intended to be a generic abbreviation for all cable companies, even though the abbreviation is commonly misused that way. A local cable system is not an MSO, either – although it might be owned by one – it’s just a cable system. An important point: All MSOs are cable operators, but not all cable operators are MSOs.

2. Hybrid Fiber/Coax (HFC) – A cable network architecture developed in the 1980s that uses a combination of optical fiber and coaxial cable to transport signals to/from subscribers. Prior to what we now call HFC, the cable industry used all-coaxial cable “tree-and-branch” architectures.

3. Wireless – Any service that uses radio waves to transmit/receive video, voice, and/or data in the over-the-air spectrum. Examples of wireless telecommunications technology include cellular (mobile) telephones, two-way radio, and Wi-Fi. Over-the-air broadcast TV and AM & FM radio are forms of wireless communications, too.

4. Wi-Fi 6 – The next generation of Wi-Fi technology, based upon the Institute of Electrical and Electronics Engineers (IEEE) 802.11ax standard (the sixth 802.11 standard, hence the “6” in Wi-Fi 6), that is said to support maximum theoretical data speeds upwards of 10 Gbps.

5. Data-Over-Cable Service Interface Specifications (DOCSIS®) – A family of CableLabs specifications for standardized cable modem-based high-speed data service over cable networks. DOCSIS is intended to ensure interoperability among various manufacturers’ cable modems and related headend equipment. Over the years the industry has seen DOCSIS 1.0, 1.1, 2.0, 3.0 and 3.1 (the latest deployed version), with DOCSIS 4.0 in the works.

6. Gigabit Service – A class of high-speed data service in which the nominal data transmission rate is 1 gigabit per second (Gbps), or 1 billion bits per second. Gigabit service can be asymmetrical (for instance, 1 Gbps in the downstream and a slower speed in the upstream) or symmetrical (1 Gbps in both directions). Cable operators around the world have for the past couple years been deploying DOCSIS 3.1 cable modem technology to support gigabit data service over HFC networks.

7. Full Duplex (FDX) DOCSIS – An extension to the DOCSIS 3.1 specification that supports transmission of downstream and upstream signals on the same frequencies at the same time, targeting data speeds of up to 10 Gbps in the downstream and 5 Gbps in the upstream! The magic of echo cancellation and other technologies allows signals traveling in different directions through the coaxial cable to simultaneously occupy the same frequencies.

8. Extended Spectrum DOCSIS (ESD) – Existing DOCSIS specifications spell out technical parameters for equipment operation on HFC network frequencies from as low as 5 MHz to as high as 1218 MHz (also called 1.2 GHz). Operation on frequencies higher than 1218 MHz is called extended spectrum DOCSIS, with upper frequency limits as high as 1794 MHz (aka 1.8 GHz) to 3 GHz or more! CableLabs is working on DOCSIS 4.0, which will initially spell out metrics for operation up to at least 1.8 GHz.

9. Cable Modem Termination System (CMTS) – An electronic device installed in a cable operator’s headend or hub site that converts digital data to/from the Internet to radio frequency (RF) signals that can be carried on the cable network. A converged cable access platform (CCAP) can be thought of as similar to a CMTS. Examples include Cisco’s uBR-10012 and cBR-8.

10. 5G – According to Wikipedia, “5G is the fifth generation cellular network technology.” You probably already have a smart phone or tablet that is compatible with fourth generation (4G) cellular technology, the latter sometimes called long term evolution (LTE). Service providers are installing new towers in neighborhoods to support 5G, which will provide their subscribers with much faster data speeds. Those towers have to be closer together (which means more of them) because of plans to operate on much higher frequencies than earlier generation technology. So, what does 5G have to do with cable? Plenty! For one thing, the cable industry is well-positioned to partner with telcos to provide “backhaul” interconnections between the new 5G towers and the telcos’ facilities. Those backhauls can be done over some of our fiber, as well as over our HFC networks using DOCSIS.

11. 10G – Not to be confused with 5G, this term refers to the cable industry’s broadband technology platform of the future that will deliver at least 10 gigabits per second to and from the residential premises. 10G supports better security and lower latency, and will take advantage of a variety of technologies such as DOCSIS 3.1, full duplex DOCSIS, wireless, coherent optics, and more.

12. Internet of Things (IoT) – IoT is simply the point in time when more ‘things or objects’ were connected to the Internet than people. Think of interconnecting and managing billions of wired and wireless sensors, embedded systems, appliances, and more. Making it all work, while maintaining privacy and security, and keeping power consumption to a minimum are among the challenges of IoT.

13. Distributed Access Architecture (DAA) – An umbrella term that, according to CableLabs, describes relocating certain functions typically found in a cable network’s headends and hub sites closer to the subscriber. Two primary types of DAA are remote PHY and flexible MAC architecture, described below. Think of the MAC (media access control) as the circuitry where DOCSIS processing takes place, and the PHY (physical layer) as the circuitry where DOCSIS and other RF signals are generated and received.

14. Remote PHY (R-PHY) – A subset of DAA in which a CCAP’s PHY layer electronics are separated from the MAC layer electronics, typically with the PHY electronics located in a separate shelf or optical fiber node. A remote PHY device (RPD) module or circuit is installed in a shelf or node, and the RPD functions as the downstream RF signal transmitter and upstream RF signal receiver. The interconnection between the RPD and the core (such as Cisco’s cBR-8) is via digital fiber, typically 10 Gbps Ethernet.

15. Flexible MAC Architecture (FMA) – Originally called remote MAC/PHY (in which the MAC and PHY electronics are installed in a node), FMA provides more flexibility regarding where MAC layer electronics can be located: headend/hub site, node (with the PHY electronics), or somewhere else.

16. Cloud – I remember seeing a meme online that defined the cloud a bit tongue-in-cheek as “someone else’s computer.” When we say cloud computing, that often means the use of remote computer resources, located in a third-party server facility and accessed via the Internet. Sometimes the server(s) might be in or near one’s own facility. What is called the cloud is used for storing data, computer processing, and for emulating certain functionality in software that previously relied upon dedicated local hardware.