Every so often there comes a time when we witness a major shift in the networking industry that fundamentally changes the landscape, including product portfolios and investment strategies. Storage Area Networking (SAN) is undergoing one such paradigm shift that opens up a huge opportunity for those looking to refresh their SAN investments and take advantage of the latest and greatest developments in this particular space. We can think of it as a “trifecta effect.”

In this blog, we’ll discuss the latest and greatest innovations driving the SAN industry and try to paint a picture of how the SAN landscape will look five to seven years down the road, while focusing on asking the right questions prior to that critical investment. Following this, we will be posting additional blogs that will dig deeper into each of the technological advancements; but it helps to understand the bigger picture and the Cisco point of view, which we will cover here.

Why Now?

Modern enterprise applications are exerting tremendous pressure on your SAN infrastructure. To keep up, customers are looking to invest in higher-performing storage and storage networking. With All Flash Arrays now economically viable and NVMe over FC maturing, there has never been a more compelling opportunity to upgrade your SAN infrastructure with investment protection and support for 64G Fibre Channel performance.

But before we think about refreshing our SAN, we have to ask ourselves a few questions:

◉ Does it support NVMe?

◉ Is it 64Gb FC ready?

◉ Do we get any sort of deep packet visibility, a.k.a. SAN analytics, for monitoring, diagnostics, and troubleshooting?

◉ Do we really need to “RIPlace” our existing infrastructure?

We will elaborate on each of these questions, one by one, in this series of blogs.

Today, we’re going to talk about NVMe array support over FC using Cisco MDS 9000 series switches, and get to the bottom of why NVMe is so important, why there is so much excitement around it, and why everyone (storage vendors and customers alike) is eager to implement it.

NVMe-based storage has superseded rotating/spinning disks. With no more rotating motors or moving heads, everything is in the form of Non-Volatile Memory (NVM) based storage, which results in extremely fast reads and writes. Combined with multi-core, multi-threaded CPUs and the PCIe 3.0 bus, this provides extremely high throughput at low latency.

Does Cisco’s SAN solution have support for NVMe/FC?

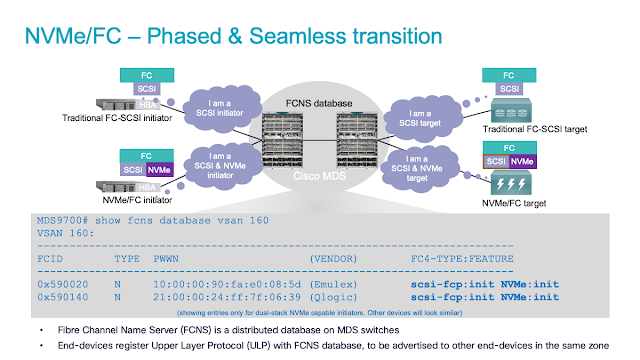

This is a very common, top-of-mind question from customers during conversations on roadmap, feature set, or for that matter any discussion involving SAN. The good news on the Cisco MDS SAN solution is: yes, we do support NVMe/FC. We support it transparently, with no additional hardware or commands needed to enable it. Any current 16G/32G Cisco MDS 9700 module, or any currently shipping 16G/32G FC fabric switch, running an NX-OS 8.x release supports it. No additional license is needed, and no additional feature has to be enabled to identify NVMe commands. Cisco MDS 9000 can unleash the performance of NVMe arrays over FC or FCoE transport, connected to SCSI or NVMe initiators or targets, concurrently, across the same SAN.

Vendor Certification

From the ecosystem support perspective, we have certified Broadcom, Emulex, Cavium, and QLogic HBAs, along with Cisco UCS C-Series servers. We have also published Cisco Validated Design (CVD) guides covering NVMe solutions, which are listed at the end of this blog.

We can run SCSI and NVMe flows together through the same hardware and the same ISL (Inter-Switch Link). Cisco MDS switches transparently allow successful registrations and logins with NVMe Name Servers, as well as I/O exchanges between SCSI and NVMe initiators and targets, side by side.

This way, NVMe/FC together with the Cisco MDS SAN solution provides the best possible performance across the SAN, with seamless insertion of NVMe storage arrays into the existing ecosystem of MDS hardware.
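To picture how SCSI and NVMe traffic can share one fabric, here is a minimal Python sketch that tells the two apart by the TYPE byte of the standard 24-byte Fibre Channel frame header. It is an illustration only, not how MDS switches are implemented. The TYPE codes (0x08 for SCSI-FCP, 0x28 for FC-NVMe) are assumptions taken from commonly published FC-4 type values, and the helper names `classify_frame`, `make_header`, and `count_flows` are hypothetical.

```python
from collections import Counter

FC_HEADER_LEN = 24  # fixed Fibre Channel frame header size, in bytes
TYPE_OFFSET = 8     # TYPE is the first byte of header word 2

# Assumed FC-4 TYPE codes; verify against the FC-FS / FC-NVMe standards.
FC_TYPE_NAMES = {0x08: "SCSI-FCP", 0x28: "FC-NVMe"}


def classify_frame(header: bytes) -> str:
    """Return the upper-layer protocol name for one FC frame header."""
    if len(header) < FC_HEADER_LEN:
        raise ValueError("truncated FC header")
    return FC_TYPE_NAMES.get(header[TYPE_OFFSET], "other")


def make_header(fc_type: int) -> bytes:
    """Build a zeroed 24-byte header with only TYPE set (demo helper)."""
    header = bytearray(FC_HEADER_LEN)
    header[TYPE_OFFSET] = fc_type
    return bytes(header)


def count_flows(headers) -> Counter:
    """Count frames per protocol on a link carrying mixed traffic."""
    return Counter(classify_frame(h) for h in headers)


if __name__ == "__main__":
    # One SCSI frame and two NVMe frames crossing the same ISL.
    mixed = [make_header(0x08), make_header(0x28), make_header(0x28)]
    print(count_flows(mixed))
```

Because the protocol is visible in the frame header itself, both kinds of traffic can travel over the same links and be told apart without any per-port configuration, which is the property the section above describes.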

NVMe/FC Support Matrix with MDS

If you are looking for NVMe/FC and MDS integration within CVDs, here are some of the documents for you to start with:

1. Unleash the power of flash storage using Cisco UCS, Nexus, and MDS

2. FlashStack Virtual Server Infrastructure Design Guide for VMware vSphere 6.0 U2

3. Cisco UCS Integrated Infrastructure for SAP HANA

4. FlashStack Data Center with Oracle RAC 12cR2 Database

5. Cisco and Hitachi Adaptive Solutions for Converged Infrastructure Design Guide

6. VersaStack with VMware vSphere 6.7, Cisco UCS 4th Generation Fabric, and IBM FS9100 NVMe-accelerated Storage Design Guide

So, what are we waiting for? Probably nothing. The roads are ready; just get the drivers and cars on the road to turn it into a Formula One racing track.

