Meetings of the Future

What excites me about working on the bleeding edge of technology is not the technology itself, but what it enables. Everything we do in Cisco’s Collaboration organization is aimed at building the best tech we can to bring people together. Our technology should never be at the forefront of the interaction. If we’re doing our job right, you shouldn’t even notice it’s there. What I find truly exciting is the experience we’re creating: the feeling of togetherness.

Closing the Gap Between Digital Data and the Physical World

How do we replicate that experience—not the exactness of it, but the essence of it—in a fully connected, ambient experience that draws on the best, fluid blend of physical and virtual elements?

Perhaps the best way I can share this is to describe what I want a typical meeting to be like in 2030:

At 9:30 am a pleasant bell tone sounds and my colleague, Cullen, materializes as a photorealistic hologram seated on the couch in front of me. We exchange hellos as another tone sounds and our mutual colleague, Jia, materializes standing next to the armchair to my right. I walk over to Jia’s outstretched hand and feel the sensory feedback of resistance and pressure as we haptically share a virtual handshake. Jia’s and Cullen’s holograms nod to each other in greeting, and Jia’s hologram takes a seat in the armchair.

As we begin talking, Kodi captures key takeaways from the meeting and cycles two displays of information that continuously update and refresh as the conversation evolves. The first, visible to all three of us, is a constantly updating array of resources cycling across the surface of the coffee table between us, showing past meeting notes, actions, and related research and news articles. The second array is visible only to me and floats at the periphery of my view with more personally attuned information: recent conversations I’ve had or notes I’ve taken, calendar updates, and my biometric readings, as well as data aggregated from the three of us reflecting the tone and mood of the room. It also indicates that my coffee is ready, so I switch from full-form mode to face-only mode. Jia and Cullen can continue to see my facial expressions in real time as my hologram remains seated facing them, while I physically get up to grab my coffee refill. As I sit back down in my armchair, I toggle back to full-form mode, shift positions in my chair, and take a sip.

As we discuss an upcoming event, my calendar flicks to the foreground of my view, showing the event details along with the events and locations before and after it. I push this view into the middle of the room, which enables Cullen and Jia to see my calendar view as well, but in moving from private to shared, the details of my appointments are masked. A moment later, the shared view updates to include Jia’s and Cullen’s schedules as they push their views into the shared space as well. Based on the context of our conversation, Kodi overlays major industry events on top of our calendars. Jia points out a gap in activities about a week prior and suggests we target that date for our announcement. Kodi registers from the content that we will need a final review meeting, and three potential meeting slots are highlighted on our shared calendar three days prior. We agree on the best one, and our respective calendars are updated with the invite, including key takeaways from today’s meeting and links to prior discussions on the same topic.

As we’re wrapping up, Cullen mentions he has an updated version of the prototype we are planning to announce. The object materializes in front of us as Cullen enables his share feature. On Cullen’s side, he is holding the physical object, but Jia and I see an identical virtual replica as Cullen points out the changes he’s made. He then shifts from physical share to virtual share and explodes the object out to the size of the room so we can see the updates at the internal component level. I get up and walk closer to Cullen, stepping inside the object, which I can then reach out and touch to manipulate, highlight, or edit. The changes look great and I turn and give Cullen a haptic high-five.

As our conversation wraps up, I see the actions and updates Kodi has added to my calendar and to-do list in my periphery. I wave goodbye to Jia and Cullen and they fade out of my room. It’s 10 am, and according to Kodi, it’s a good time for a morning break.

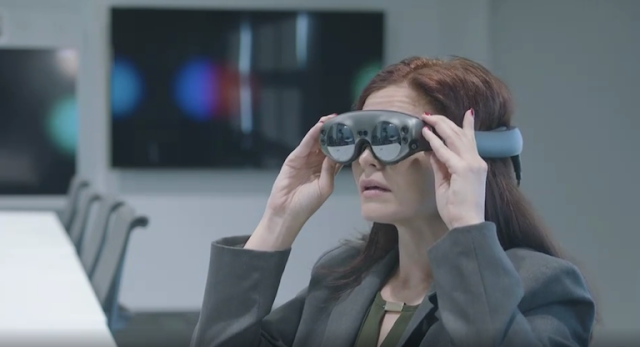

Combining AR in the Collaboration Space

Okay, some of this may still be out there on the time horizon, but some components are becoming a reality today. The above experience relies on a seamless mesh of natural language processing, adaptive algorithms, connected sensors, non-invasive biometrics, brain-computer interfaces, extended reality, and haptics. While some fields, like brain-computer interfaces and haptic feedback, are still in early stages, other areas like machine learning and natural language processing are becoming table stakes today.

Extended reality (augmented, virtual, mixed) is one area I’m particularly excited about. At Cisco, our Collaboration group has been fairly vocal about enabling augmented reality experiences with our Webex and Webex Teams APIs, specifically for a remote expert use case. We’ve been less vocal about what mixed reality can bring to the collaboration table, but for the last couple of years we’ve been building something truly exciting in stealth mode.

Proof of Concept for Three-Dimensional Collaboration in Real-Time

We’re not quite ready to share what we’ve been prototyping, but it has been exciting to challenge the current concept of what a meeting is and could be. We’re tapping into our internal knowledge of hardware, software, and networking, and we’ve been working behind closed doors with industry vendors at the top of their game: paradigm shifters who are opening up a whole new world of possibilities for creators, inventors, and dreamers everywhere.

I have the pleasure and privilege of leading initiatives for our innovation team, where we are asking questions like: What if you could have real-life interactions that are better than what is currently possible in real life? What if conferencing didn’t have to be limited to a two-dimensional plane? What happens when “real” and “virtual” are no longer distinct ideas?

There’s a lot to figure out and we’re not quite ready to tell the world what we’re building, but I can tell you, you’ve never experienced anything like this before because it’s never been done before! Stay tuned; you won’t want to miss it. These are exciting times to be a dreamer because, yes, dreams really do come true.
