In our ever-changing world, where the application represents the business itself and the level of digitization it provides is directly related to the perception of the brand, enterprises must differentiate themselves by providing an exceptional user experience for both their customers and their employees. When the pandemic hit, customers and employees were initially empathetic and expected disruptions to services. Eighteen months on, everyone expects the same level of service they received pre-pandemic, irrespective of where people are working from. This raises expectations of both the infrastructure and the teams that run it to provide an exceptional digital experience.

It is evident that application services are becoming increasingly distributed, and reimagining applications around customer priorities is a key differentiator going forward. A recent Frost & Sullivan study on global cloud adoption indicated a 70% jump in multi-cloud adoption in the financial services space. This is driven by a renewed focus on innovation, along with the digitalization and streamlining of businesses. On average, financial firms have placed more than half of their workloads in the cloud (public or private hosted), and that number is expected to grow faster than in other industries over the next five years.

Digital Experience Visibility

With applications moving to the edge and to the cloud, and data everywhere, we need to be able to manage IT irrespective of where we work and of where applications are hosted or consumed. It’s relatively easy to write code for a new application; the complexity we are solving for in the real world is deploying that code in today’s heterogeneous environment, like that of a bank. The traditional networks that we currently use to deploy into data centers predate cloud, SASE, colos, IoT, and 5G, and certainly predate COVID and working from home.

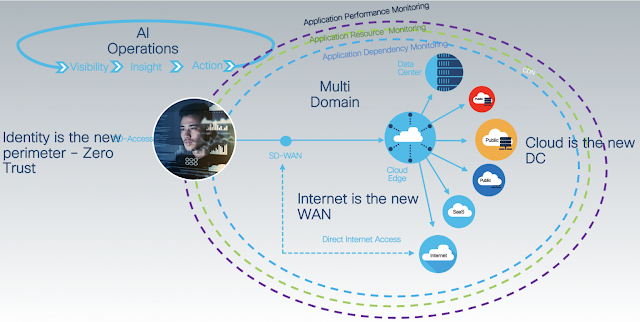

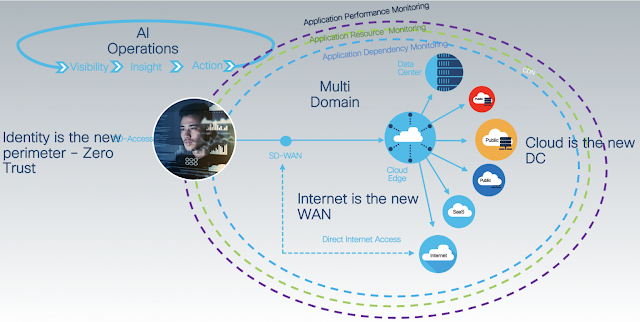

In today’s world, cloud is the new data center and the internet is the new WAN, which removes the concept of an enterprise perimeter and makes identity the new perimeter. To provide a seamless experience, IT needs not just to monitor application performance, but also to monitor application resources and application dependencies holistically. This should enable the organization to determine the business impact of an issue, be that a drop in conversion rate or a degradation in a service, and to decide proactively, if not predictively, what resources to allocate towards fixing the problem and curbing the business impact.

Observability rather than Visibility

In today’s world, operations are complex, with various teams relying on different tools to troubleshoot and support their respective domains. Visibility within individual silos still leaves the organization far from an answer: it has to collate information and insights in war rooms before it can identify the root cause of a problem. What is required is the ability to troubleshoot more holistically, via a data-driven operating model.

Thus, it is important to use the network as a Central Nervous System and to utilize Full Stack Observability (FSO) to look at visibility and telemetry from every networking domain, every cloud, the application, the code, and everything in between. AI/ML can then consume the various data elements in real time and work out dynamically how to troubleshoot, getting to the root cause of a problem faster and more accurately.

An FSO platform’s end goal is to provide a single pane of glass that can:

◉ Ingest anything: any telemetry, from any third party, from any domain, into a learning engine with a flexible metadata model, so that it knows what kind of data it is ingesting

◉ Visualize anything: end to end, in a unified, connected data format

◉ Query anything: cross-domain analytics that connect the dots, with closed-loop analytics to pinpoint the root cause faster, before it impacts the user experience, which is critical
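To make the "ingest anything" and "query anything" ideas a little more concrete, here is a minimal Python sketch. It is an illustration only, not how any Cisco product works: the `TelemetryRecord` and `TelemetryStore` names, the field names, and the sample payloads are all hypothetical. It assumes each raw payload carries an entity, a metric, a value, and a timestamp, and keeps any other keys as free-form metadata so that data from different domains can be queried together.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class TelemetryRecord:
    """One normalized data point. All field names are illustrative."""
    domain: str       # e.g. "application", "network", "infrastructure"
    entity: str       # the thing being measured, e.g. a service name
    metric: str
    value: float
    timestamp: float  # seconds, on whatever clock the sources share
    metadata: dict[str, Any] = field(default_factory=dict)


class TelemetryStore:
    """Hypothetical in-memory store with a flexible metadata model."""

    CORE_KEYS = {"entity", "metric", "value", "timestamp"}

    def __init__(self) -> None:
        self._records: list[TelemetryRecord] = []

    def ingest(self, domain: str, raw: dict[str, Any]) -> TelemetryRecord:
        """Normalize a raw payload; keys outside CORE_KEYS become metadata."""
        record = TelemetryRecord(
            domain=domain,
            entity=str(raw["entity"]),
            metric=str(raw["metric"]),
            value=float(raw["value"]),
            timestamp=float(raw["timestamp"]),
            metadata={k: v for k, v in raw.items() if k not in self.CORE_KEYS},
        )
        self._records.append(record)
        return record

    def query(
        self, entity: str, start: float, end: float
    ) -> dict[str, list[TelemetryRecord]]:
        """Group every record for one entity within [start, end] by domain."""
        by_domain: dict[str, list[TelemetryRecord]] = {}
        for r in self._records:
            if r.entity == entity and start <= r.timestamp <= end:
                by_domain.setdefault(r.domain, []).append(r)
        return by_domain


# Made-up sample payloads from three domains.
store = TelemetryStore()
store.ingest("application", {"entity": "payments", "metric": "latency_ms",
                             "value": 850, "timestamp": 100.0,
                             "endpoint": "/checkout"})
store.ingest("network", {"entity": "payments", "metric": "packet_loss_pct",
                         "value": 4.2, "timestamp": 98.0, "path": "isp-a"})
store.ingest("infrastructure", {"entity": "ledger", "metric": "cpu_pct",
                                "value": 35, "timestamp": 99.0})

# Cross-domain view of everything touching "payments" around the incident.
related = store.query("payments", start=90.0, end=110.0)
print(sorted(related))
```

Because the network and application records share an entity and a time window, a single query returns both, which is the kind of cross-domain "connecting the dots" described above. A real platform would add streaming ingestion, persistent storage, and learned correlation rather than an exact match on entity name.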

AI to tackle Experience Degradation

AI within an FSO platform is used not just to identify the dependencies across the various stacks of an application, but also to correlate the data, address issues, and right-size resources for performance and cost across the full life cycle of the application.

It is all about utilizing the Visibility Insights Architecture across a hybrid environment to balance performance and costs through real-time analytics powered by AI. The outcome to solve for is Experience Degradation, which cannot be solved in each domain individually (application, network, security, infrastructure) but only by intelligently taking a holistic approach, with the ability to drill down as required.

Cisco is ideally positioned to provide this FSO platform, with AppDynamics™ and Secure App at the core, combined with ThousandEyes™ and Intersight™ Workload Optimizer, providing a true end-to-end view for analyzing, and in turn curbing, the business impact of any issue in real time. This enables the enterprise’s Infrastructure Operators and Application Operators to work closely together, breaking down silos and enabling the closed-loop operating model that is paramount in today’s heterogeneous environment.

Source: cisco.com
