The journey towards the intelligent enterprise

When talking about modern data processing in the digital economy, data is often regarded as the new oil. Enterprises are already competing in a race for the best mining, extraction and processing technologies to gain better insights into their companies, deals and processes. Winning this race will ultimately lead to a competitive advantage in the market, since companies with a deep understanding of their businesses will be able to make the most profitable decisions and establish the most beneficial optimizations. For this reason, the way companies handle data and analytics is changing from purely transactional, ETL-like processing towards modern technologies such as machine learning, intelligent analytics and stream processing, both on-premise and in the cloud. We regard this transition as the move towards the ‘intelligent enterprise’.

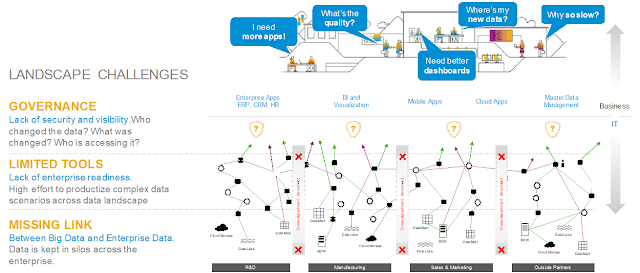

However, the transition also makes data processing landscapes more complex and more heterogeneous. Data is processed in a growing collection of different systems and is distributed over different places. Its volume is growing by the day, and customers need to orchestrate and integrate cloud technologies with classical on-premise systems. Among all the challenges that come with the journey towards digitalization and the ‘intelligent enterprise’, the following three emerge as the most pressing in our customer base:

1. The tale about data governance and the lack of data knowledge, security and visibility

One of the biggest challenges in complex modern enterprise data landscapes is the distribution of data over a growing number of stores and processing systems. This leads to a lack of knowledge about where data resides, what its characteristics are and how it is governed. “What data is available in which store?”, “What are the major characteristics of my data sets?” and “Who changed the data, in what way, and who has permission to access it?” are typical questions that are hard to answer even within a single company. However, it is key to find a strategy that enables holistic data governance and data management across the entire company.

2. The legend about enterprise readiness of big data technologies

In the world of modern data processing technologies and big data management, we observe an incredible growth in the tools and technologies a customer can choose from. While choice seems to be an advantage at first glance, one quickly recognizes that it leads to a zoo of non-integrated systems, each exhibiting different characteristics, life cycles and environments. It is left to the customer to manage, organize and orchestrate those systems, which means a very high effort to arrive at an enterprise-ready data landscape with a well-organized interplay of all components.

3. The story about easily processing enterprise data and big data together

The adoption of modern big data technologies is mainly driven by the fact that augmenting classical enterprise data, such as sales figures and revenue data, with big data, such as sensor streams, social media data collections or mobile device data, enables deeper and more advanced analyses. However, in most cases enterprise data and big data are kept in different silos and exhibit totally different characteristics. Enterprise data typically comes from classical transactional systems such as ERP systems or transactional databases; it is well structured and adheres to a standardized schema. Big data, on the other hand, often arrives in its raw form as data streams or data collections stored in data lakes (e.g. Hadoop, S3, GCS). It is often unstructured, lacks clear data types and might not adhere to a clear data schema. Accordingly, creating an end-to-end data pipeline across the enterprise that combines business data with big data requires considerable effort.

SAP and Cisco jointly recognize that our mutual customers need new solutions that help them overcome these hurdles in order to fully leverage the value of their distributed data and turn it into actionable insights.