Traditional application development is giving way to a new era of modern application development.

Modern apps are on a steep rise, and the application experience is increasingly the customer experience. Organizations need to innovate faster to keep pace with constantly changing customer requirements. The cloud native approach to modern application development can address these needs:

◉ Connect, manage, and observe all physical, virtual, and cloud native API assets

◉ Secure and develop using distributed APIs, applications, and Data Objects

◉ Full-stack observability, from the API down to bare metal and across multiple SaaS providers, is table stakes for globally distributed applications

“Shifting to an API-first application strategy is critical for enterprise organizations as they rearchitect their future portfolio,” said Michelle Bailey, Group Vice President, General Manager and Research Fellow at IDC.

Modern World of App Development

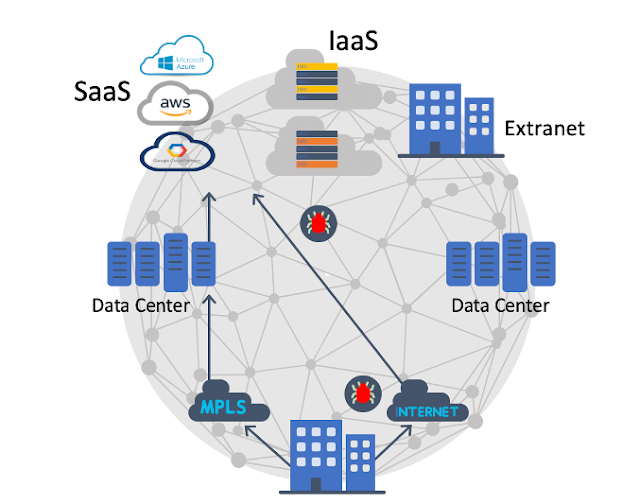

Modern applications are built using composable cloud native architectures. We see more modern apps being built and deployed because application components that once shipped in a single integrated image are now disaggregated into reusable services. These services, along with other APIs, are distributed across multiple SaaS, cloud, and on-premises environments, and developers can use APIs from any of these properties to build their apps.

The benefits of this ideal API-first world in a cloud native, hybrid-cloud, and multi-cloud future are:

◉ Greater uptime: only the components requiring an upgrade are taken offline as opposed to the entire application

◉ Choice: developers gain the flexibility to pick the APIs that matter most to their applications and businesses, wherever those APIs reside

◉ Agile teams: small teams that each focus on a specialized component of the application can work more autonomously, increasing velocity while improving collaboration with SRE and security teams

◉ Unleashing innovation: enables developers to specialize in their areas of expertise

Cisco — The Bridge to an API-first, Cloud Native World

We want a future where a developer can pick and choose any APIs (internal or third-party) needed to build their app, consume them, and assign policies consistently across multiple providers. Developers should be able to connect, secure, observe, and upgrade apps easily, and have confidence in the reputation and security of those apps from the moment the IDE is fired up all the way into production.

And do all of this with velocity and minimal friction between development and cloud engineering/SRE teams.

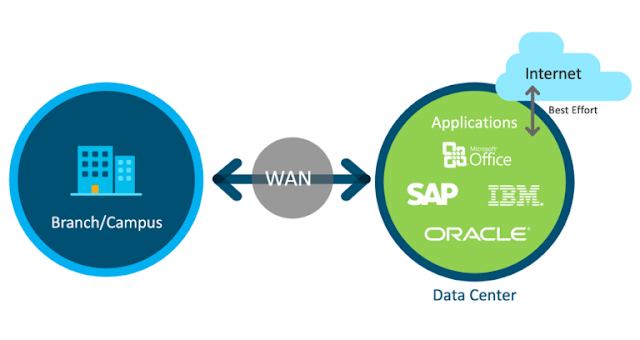

Even as some sprint out ahead in the cloud native world, bringing everyone along, wherever they are on their journey, is a top priority. We still need to bridge back to where the data resides: in data centers, with SaaS vendors, and in some cases even on mainframes.

Developers will need to discover and safely connect to data and APIs wherever they reside, and to see top-down through their apps. That requires observability throughout the stack.

A seamless developer experience will require the following:

◉ API-layer connectivity will influence all networking. API discovery, policy, and inter-API data flows will drive all connectivity configuration and policies down the infrastructure stack

◉ APIs and Data Objects are the new security perimeter. The Internet is the runtime for all modern distributed applications; as a result, security perimeters are now diffuse and expanded

◉ Observability is key to API-first distributed cloud native apps. Observability at the API and cloud native layers, combined with well-defined Service Level Objectives (SLOs) and AI/ML-driven insights, drives down Mean Time To Resolution (MTTR) and helps deliver an exceptional application experience
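To make the SLO and MTTR terms concrete, here is a minimal Python sketch of how these two numbers are commonly computed. It is illustrative only and is not a Cisco API: the `Incident` and `SloStatus` types, the function names, and the choice of a request-based availability SLO are all assumptions. MTTR is taken as the average time from detection to resolution, and the error budget is the number of failed requests the SLO tolerates in a measurement window.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    # Hypothetical incident record: when a problem was detected and when it was resolved.
    detected: datetime
    resolved: datetime


@dataclass
class SloStatus:
    # Hypothetical summary of one measurement window against an SLO target.
    availability: float       # fraction of requests that succeeded
    slo_met: bool             # True if availability >= target
    error_budget_used: float  # 0.5 means half of the allowed failures were consumed


def mean_time_to_resolution(incidents: list[Incident]) -> timedelta:
    """MTTR: total detection-to-resolution time divided by the number of incidents."""
    if not incidents:
        return timedelta(0)
    total = sum((i.resolved - i.detected for i in incidents), timedelta(0))
    return total / len(incidents)


def slo_status(good_requests: int, total_requests: int, target: float) -> SloStatus:
    """Compare measured availability with an SLO target such as 0.999."""
    if total_requests <= 0:
        raise ValueError("total_requests must be positive")
    availability = good_requests / total_requests
    failures = total_requests - good_requests
    # The error budget is the number of failures the SLO tolerates in this window.
    allowed_failures = (1.0 - target) * total_requests
    if allowed_failures > 0:
        budget_used = failures / allowed_failures
    else:
        # A 100% target leaves no budget: any failure exhausts it.
        budget_used = 0.0 if failures == 0 else float("inf")
    return SloStatus(availability, availability >= target, budget_used)


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 12, 0)
    incidents = [
        Incident(t0, t0 + timedelta(minutes=30)),
        Incident(t0, t0 + timedelta(minutes=90)),
    ]
    print(mean_time_to_resolution(incidents))  # 1:00:00
    print(slo_status(999_500, 1_000_000, 0.999))
```

For example, with a 99.9% availability target over 1,000,000 requests, 999,500 successful requests meet the SLO and consume roughly half of the 1,000 allowed failures. Tracking budget consumption rather than raw availability alone is a common way to decide when reliability work should take priority over new features.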

Here’s how we’re solving for it:

◉ A portfolio of mesh and connectivity software services that enable the discovery, consumption, data flow connectivity, and real-time observability of distributed modern applications built on cloud native services at any layer of the stack. These services integrate with event-driven services that bridge the monolithic world to the new cloud native world

◉ A portfolio of security services that detect, scan, observe, score, and secure APIs (internal or third-party), while providing real-time compliance reporting and integrating with existing development, deployment, and operations toolchains

Source: cisco.com