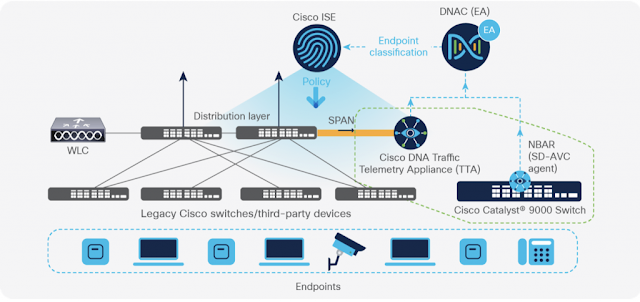

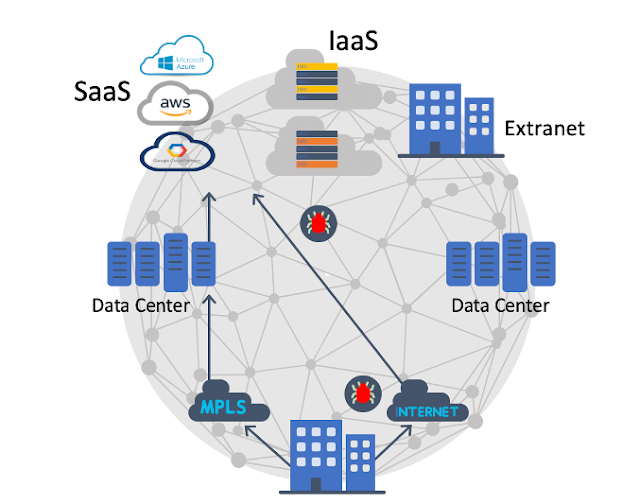

Enterprise networks are undergoing digitization through multiple technological transitions, including the explosion of connected and IoT devices and the movement of applications and services to the cloud. Of the 29.3 billion networked devices forecast to be in use by 2023, 50% are expected to be IoT devices. Cloud-based applications and services are expected to be accessed from multiple sites and locations, both on and off the Enterprise network. These new trends and network transitions have not only increased the threat surface but also advanced the sophistication of attacks. Securing and protecting the Enterprise infrastructure has become top of mind for network administrators and customers. With advances in and ratification of Wi-Fi standards, wireless has become the de facto access technology in the Enterprise network. However, due to the inherent nature of wireless networks, it is even more important not only to detect threats and protect the network infrastructure and users, but also to secure the air.

Saturday, 15 May 2021

Wireless Security Solutions Overview

Wednesday, 12 May 2021

Make Your Career Bright with Cisco 350-801 CLCOR Certification Exam

Cisco CLCOR Exam Description:

Cisco 350-801 Exam Overview:

- Exam Name: Implementing Cisco Collaboration Core Technologies

- Exam Number: 350-801 CLCOR

- Exam Price: $400 USD

- Duration: 120 minutes

- Number of Questions: 90-110

- Passing Score: Variable (approx. 750-850 / 1000)

- Recommended Training: Implementing Cisco Collaboration Core Technologies (CLCOR)

- Exam Registration: Pearson VUE

- Sample Questions: Cisco 350-801 Sample Questions

- Practice Exam: Cisco Certified Network Professional Collaboration Practice Test


What Do Network Operators Need for Profitable Growth?

For the past 30 years, service providers have built networks using technology that was limited in terms of speed, cost, and performance. Historically, optical and routing platforms evolved independently, forcing operators to build networks using separate architectures and on different timelines. The result is networks whose complexity drives up Total Cost of Ownership (TCO).

Current multi-layer networks made up of Dense Wavelength Division Multiplexing (DWDM), Reconfigurable Optical Add-Drop Multiplexers (ROADMs), transponders, and routers suffer from the constraints of current-generation technology and head-spinning complexity. Additionally, operators face a highly competitive pricing environment with flat Average Revenue Per User (ARPU) but enormous increases in usage. Meeting that demand requires re-evaluating the network architecture so that it supports massive scale in an economically and operationally viable way that improves TCO.

Here are a few factors that contribute to high network TCO:

◉ Redundant resiliency in each network layer leading to poor resource utilization

◉ Siloed infrastructure relying on large volumes of line cards for traffic handoff between layers

◉ High complexity due to multiple switching points, control, and management planes

◉ Layered architecture requiring manual service stitching across network domains, which hinders the end-to-end closed-loop automation needed for remediation and shorter lead times

But now we live in a different world, and we have technology that can address these problems and more. We envision a network that is simpler and much more cost-effective, where optical and IP networks can operate as one. Rather than having two different networks that need to be deployed and maintained with disparate operational support tools, we’re moving toward greater IP and optical integration within the network.

Advances in technology enabling a fully integrated routing and optical layer

Service provider profitability depends on best-in-class network processing and advances in coherent optical technology, which enable higher network capacities with lower power and space requirements. Coupled with software that provides robust automation and telemetry, these advances will simplify the network and lead to greater utilization and monetization. Applications like Multi-access Edge Computing (MEC), which require every operator office to become a data center, continue to increase the importance of delivering higher capacities.

We’ve made great strides across switching, Network Processing Unit (NPU) silicon, and digital coherent optics. Last year we introduced Cisco Silicon One, an exciting new switching and routing silicon that is used in the Cisco 8000 Series and can also be purchased as merchant silicon.

Cisco Silicon One allows us to build switches and routers that scale to hundreds of terabits per second, and its scalability is optimized for 400G and 800G coherent optics.

What can Cisco Routed Optical Networking do for you?

Our investment and strategy alignment

Sunday, 9 May 2021

Cisco introduces Dynamic Ingress Rate Limiting – A Real Solution for SAN Congestion

I’m sure we all agree that Information Technology (IT) and acronyms have been strongly tied together since the beginning. Considering that the number of Three Letter Acronyms (TLAs) we can build is limited and now exhausted, it comes as no surprise that FLAs are the new trend. You already understood that FLA means Four Letter Acronym, right? But maybe you don’t know that the ancient Romans loved four letter acronyms and created some famous ones: S.P.Q.R. and I.N.R.I. As a technology innovator, Cisco is also a big contributor to new acronyms, and I’m pleased to share with you the latest one I heard: DIRL. Pronounce it the way you like.

Please welcome DIRL

DIRL stands for Dynamic Ingress Rate Limiting. It is a nice and powerful new capability that comes with the recently posted NX-OS 8.5(1) release for MDS 9000 Fibre Channel switches. DIRL adds to the long list of features that fall in the bucket of SAN congestion avoidance and slow drain mitigation. Over the years, a number of solutions have been proposed and implemented to counteract those negative occurrences on Fibre Channel networks. No single solution is perfect; otherwise there would be no need for a second one. In reality, each solution is best suited to a specific situation and offers a better compromise there, but may be suboptimal in other situations. Having options to choose from is a good thing.

DIRL is the newest, shiniest arrow in the quiver of the professional MDS 9000 storage network administrator. It complements existing technologies like Virtual Output Queues (VOQs), Congestion Drop, No-Credit Drop, slow device congestion isolation (quarantine) and recovery, portguard, and shutdown of guilty devices. Most of the existing mitigation mechanisms are quite severe, and because of that they are not widely implemented. DIRL is a great addition to the list of possible mitigation techniques because it makes sure only the bad device is impacted, without removing it from the network. The rest of the devices sharing the same network are not impacted in any way and will enjoy a happy life. With DIRL, the device's data rate is measured and ingress rate limiting is applied incrementally, so that the level of limiting matches the extent to which the device continues to cause congestion. Getting guidance from experts on which mitigation technique to use remains a best practice, of course, but DIRL seems best suited to long-lasting slow drain and overutilization conditions, localizing the impact to a single end device.
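The incremental behavior described above can be pictured with a toy model. This is a sketch under stated assumptions, not the NX-OS implementation: the class `IngressLimiter`, the 50% reduction step, the 25% recovery step, and the 1% floor are all hypothetical values chosen for illustration. It only shows the general idea of tightening one port's ingress limit step by step while the attached device keeps causing congestion, and relaxing it once the congestion clears.

```python
from dataclasses import dataclass


@dataclass
class IngressLimiter:
    """Toy model of incremental ingress rate limiting (hypothetical, not NX-OS code)."""

    port_speed_gbps: float
    reduce_pct: float = 50.0   # assumed: shrink the current limit by this share per congested interval
    recover_pct: float = 25.0  # assumed: raise the limit by this share of port speed per clear interval
    min_pct: float = 1.0       # assumed floor, so the device is slowed down but never removed
    limit_pct: float = 100.0   # current limit as a percentage of port speed

    def step(self, congested: bool) -> float:
        """Process one measurement interval and return the new limit in Gbps."""
        if congested:
            # Device is still causing congestion: tighten the limit further.
            self.limit_pct = max(self.min_pct, self.limit_pct * (1 - self.reduce_pct / 100))
        else:
            # Congestion has cleared: relax the limit gradually toward full speed.
            self.limit_pct = min(100.0, self.limit_pct + self.recover_pct)
        return self.port_speed_gbps * self.limit_pct / 100


if __name__ == "__main__":
    limiter = IngressLimiter(port_speed_gbps=32.0)
    for congested in [True, True, False, False, False]:
        print(limiter.step(congested))
```

Run as a script, the loop prints 16.0, 8.0, 16.0, 24.0 and 32.0: the limit halves twice while the simulated device keeps congesting the link, then climbs back in steps. Only this one port's limit changes, which mirrors the point that the impact stays localized to the offending end device.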

The main idea behind DIRL

Why DIRL is so great

The detailed timeline of operation for DIRL

Thursday, 6 May 2021

Native or Open-source Data Models? Use Both for Software-Defined Enterprise Networks.

Enterprise IT administrators with hundreds, thousands, or even more networking devices and services to manage are turning to programmable, automated deployment, provisioning, and management to scale their operations without having to scale their costs. Using structured data models and programmable interfaces that talk directly to devices, bypassing the command line interface (CLI), is becoming an integral part of software-defined networking.

As part of this transformation, operators must choose:

◉ A network management protocol (e.g., NETCONF or RESTCONF) that is fully supported in Cisco IOS XE

◉ Data models for configuration and management of devices

One path being explored by many operators is to use the gRPC Network Management Interface (gNMI), built on Google Remote Procedure Calls (gRPC), as the network management protocol. But which data models can be used with gNMI? Originally, it supported only OpenConfig models. Now that it supports others as well, many developers may not realize this and assume that choosing gNMI will limit their data model options. Cisco IOS XE has been upgraded to fully support both our native models and OpenConfig models when gNMI is the network management protocol.

Here’s a look at how Cisco IOS XE supports both OpenConfig and native YANG data models with the “mixed schema” approach made possible with gNMI.

Vendor-neutral and Native Data Models

Cisco has defined data models that are native to all enterprise gear running Cisco IOS XE and other Cisco IOS versions for data center and wireless environments. Other vendors have similar native data models for their equipment. The IETF has its own guidelines based on YANG data models for network topologies. So too does OpenConfig, a consortium of service providers led by Google, which has defined standardized, vendor-neutral data modeling schemas based on the operational use cases and requirements of multiple network operators.

The decision of what data models to use in the programmable enterprise network typically comes down to a choice between using vendor-neutral models or models native to a particular device manufacturer. But rather than forcing operators to choose between vendor-neutral and native options, Cisco IOS XE with gNMI offers an alternative, “mixed-schema” approach that can be used when enterprises migrate to model-driven programmability.
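To make the mixed-schema idea concrete, the sketch below builds, as plain Python dictionaries, the shape of a single gNMI GetRequest whose paths come from two schemas: a Cisco IOS XE native path and an OpenConfig path. The field names (`path`, `origin`, `elem`, `name`, `key`, `encoding`) follow the gNMI `Path` and `GetRequest` messages. The origin value `rfc7951` for the native path is an assumption here, so check the IOS XE programmability documentation for your release, and the helper `to_gnmi_path` is hypothetical. Actually sending the request requires a gNMI client, which is not shown.

```python
import json
import re

# A path element is a name optionally followed by [key=value] predicates.
# Key values may contain "/", e.g. interface names such as GigabitEthernet1/0/1.
_ELEM = re.compile(r"([^/\[\]]+)((?:\[[^=\]]+=[^\]]*\])*)")
_KEY = re.compile(r"\[([^=\]]+)=([^\]]*)\]")


def to_gnmi_path(xpath: str, origin: str) -> dict:
    """Convert a simple XPath-style string into a dict shaped like a gNMI Path message."""
    text = xpath.strip("/")
    elems, pos = [], 0
    while pos < len(text):
        m = _ELEM.match(text, pos)
        if m is None:
            raise ValueError(f"cannot parse {xpath!r} at offset {pos}")
        elem = {"name": m.group(1)}
        keys = dict(_KEY.findall(m.group(2)))
        if keys:
            elem["key"] = keys
        elems.append(elem)
        pos = m.end()
        if pos < len(text):
            if text[pos] != "/":
                raise ValueError(f"expected '/' in {xpath!r} at offset {pos}")
            pos += 1
    return {"origin": origin, "elem": elems}


# One request mixing a native IOS XE path and an OpenConfig path ("mixed schema").
request = {
    "path": [
        # Assumed origin value for native models; verify against your IOS XE release.
        to_gnmi_path("/Cisco-IOS-XE-native:native/hostname", origin="rfc7951"),
        to_gnmi_path(
            "/interfaces/interface[name=GigabitEthernet1/0/1]/state/counters",
            origin="openconfig",
        ),
    ],
    "encoding": "JSON_IETF",
}

if __name__ == "__main__":
    print(json.dumps(request, indent=2))
```

The parser is deliberately simple: it handles `[key=value]` predicates whose values contain slashes, which a naive `split("/")` would break, but it does not support quoting or escaped brackets.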

Pros and Cons of Different Models

The native configuration data models provided by hardware and software vendors support networking features that are specific to a vendor or a platform. Native models may also provide access to new features and data before the IETF and OpenConfig models are updated to support them.

Vendor-neutral models define common attributes that should work across all vendors. However, the current reality is that despite the growing scope of these open, vendor-neutral models, many network operators still struggle to achieve complete coverage for all their configuration and operational data requirements.

At Cisco we have our own native data models that encompass our rich feature sets for all devices supported by IOS XE. Each feature includes most, if not all, of the attributes defined by IETF and OpenConfig, plus extra attributes that our customers find useful and that have not yet been, or will not be, added to vendor-neutral models.

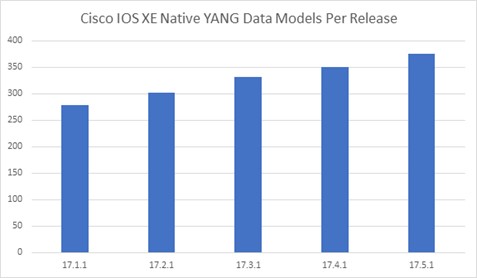

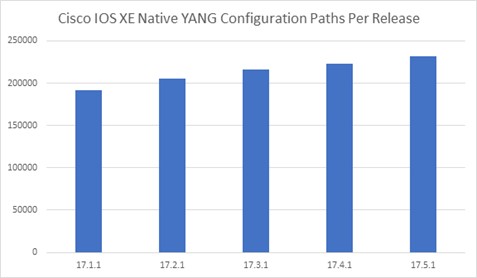

With each release of Cisco IOS XE there has been significant and steady growth in both the number of native YANG models supported and the number of configuration paths supported (Figures 1 and 2). The number of paths gives insight into the vast feature coverage we have in IOS XE. As of IOS XE release 17.5.1, there are approximately 232,000 XPaths, covering a diverse set of features ranging from newer ones like segment routing to older ones like Routing Information Protocol (RIP).

The gNMI Mixed Schema Approach

Support for gNMI in IOS XE

Find Out More About gNMI

Wednesday, 5 May 2021

Building Hybrid Work Experiences: Details Matter

The Shift From Remote Work to a New Hybrid Work Environment

It’s exciting to see more organizations start to make the shift from remote work to a new hybrid work environment, but it’s a transition that comes with a new set of questions and challenges. How many people will return to the office, and what will that environment look like? Increasingly, we’re hearing that teams are feeling fatigued and disconnected – what tools can help to solve for that? And perhaps most importantly, how can we ensure that employees continuing to work remotely have the same connected and inclusive experience as those that return to the office?

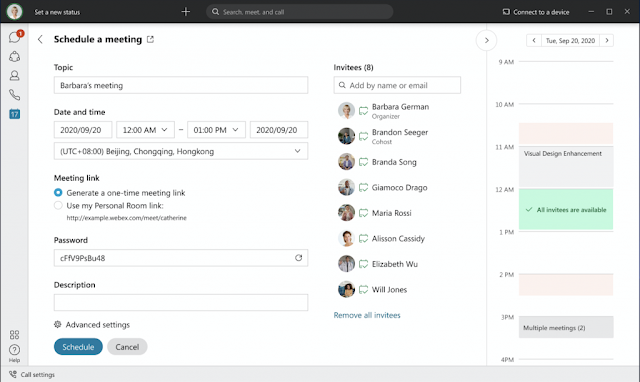

When we think about delivering positive employee experiences, it’s the details that matter. This month, we’re rolling out new Webex features that solve for a few challenges that might be overlooked and yet play an important role in the new hybrid working model.

Give More and Get More From Your Meeting Experiences

Whether in the office or working remotely, no one wants to sit in meeting after meeting listening to a presenter drone on. Worse still is a video meeting with so many talking heads and so much shared content that you just don’t know where to focus. Making meetings more engaging is important in the world of hybrid work.

We’re expanding our Webex custom layouts functionality to include greater host controls, resulting in a more personalized and engaging meeting experience. As a meeting host or co-host, you can hide participants who are not on video, bringing greater focus to facial expressions and interactions with video users. Using the slider, you can show all participants on screen or focus on just a few. And now hosts and co-hosts can curate and synchronize the content and speakers they want attendees to focus on, and then push that view to all participants. This allows you to set a common “stage” and establish a more engaging meeting experience for all.

Work More Efficiently with Personalized Webex Work Modes

Reimagining the Workplace

Tuesday, 4 May 2021

8 Reasons why you should pick Cisco Viptela SD-WAN

Twenty years ago, I worked as a network engineer for a fast-growing company that had multiple data centers and many remote offices, and I remember all the work required simply to onboard a remote site. It took months of planning and execution, which included ordering circuits, getting connectivity up, and spending hours, sometimes days, deploying complex configurations to secure the connectivity by establishing encrypted tunnels and steering the right traffic across them. All of this work was manual. At the time I was very proud that I could build such complex configurations, which required so many lines of CLI, but that was the way things were done.

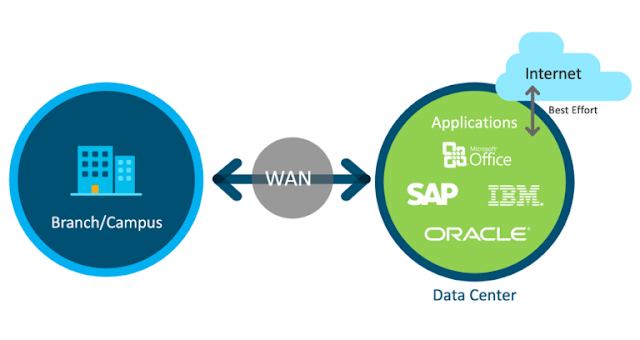

During the decade that followed, we saw a slew of WAN and encryption technologies become available to meet the demand and scale for secure network traffic. MPLS, along with Frame Relay, became extremely popular, and IPsec-related encryption technologies became the norm. All this was predicated on the fact that most traffic was destined for one clear location: the data center that every company had to build to store all its jewels, including applications, databases, and critical data. The data center also served as the gateway to the internet.