For organizations that are adopting DevOps practices and modern cloud capabilities to accelerate innovation and gain competitive advantage, one of the biggest challenges is maintaining common and consistent environments through an application’s lifecycle from development through to deployment. Containers solved the application portability problem by packaging all the necessary dependencies into discrete images, and Kubernetes has emerged as the de facto standard for how those containers are orchestrated and deployed.

By adopting containers and Kubernetes, IT and Line of Business users can focus their efforts on developing applications, rather than infrastructure and ‘plumbing’. Because Kubernetes is available everywhere, one can choose the best place to run an application based on business needs. For some applications, the scale and reach of the public cloud, along with its huge number of services available, will be the determining factor. For others, data locality, security or other concerns dictate an on-premises deployment.

Current solutions can be complex, requiring organizations to work across either isolated or separate environments and forcing teams to “glue” all the parts together themselves, at the expense of time and money. This can result in less choice by forcing organizations to choose between on-premises and public clouds or being limited by “all-or-nothing” stacks.

To help our customers with this challenge, Cisco announced today our collaboration with AWS to create the Cisco Hybrid Solution for Kubernetes on AWS. The new solution combines Cisco, AWS and Open Source technologies to simplify complexity and eliminate challenges for customers turning to Kubernetes to enable deploying applications across on-premises and the AWS cloud in a secure, consistent manner. It provides a tested, validated and simple solution that delivers consistent Kubernetes clusters both on premises and in the cloud, leveraging the best attributes of each. This reduces the burden on different teams with respect to people, processes and skill sets, accelerating the application deployment cycle and resulting in faster innovation. Customers can extend on-premises capabilities and resources to AWS cloud as well as utilize services and resources from AWS cloud on-premises.

Solution Overview

The core component of the Cisco Hybrid Solution for Kubernetes on AWS is a unique integration between Cisco Container Platform (CCP) and Amazon Elastic Container Service for Kubernetes (EKS), so that through the single CCP management UI, the customer can provision clusters both on-premises and on EKS in the cloud. CCP uses AWS IAM authentication to create the VPC, instructs EKS to create a new cluster, and then configures the worker nodes in that cluster.

With Cisco Hybrid Solution for Kubernetes on AWS, customers use the CCP UI to launch Kubernetes clusters in AWS in addition to on-premises environments. They simply declare their Kubernetes cluster specification and reference the Cisco-managed operating system images for the worker nodes to deploy clusters in either environment. AWS Identity and Access Management (IAM) is integrated as a common authentication mechanism, so that the cluster administrator is free to apply the same role-based access control (RBAC) policies across both environments. Both environments are integrated with Amazon Elastic Container Registry (ECR), providing a secure, single repository for all the container images. A standard set of Open Source monitoring and logging tools based on Prometheus and the ElasticSearch/FluentD/Kibana (EFK) stack is deployed to the clusters to provide consistent logging and metrics. Finally, Cisco’s site-to-site VPN solutions, such as CSR 1000v, are leveraged to provide a range of secure connectivity options between the cloud-hosted and on-premises services.

Cisco offers a single point of contact for support across all the components of the solution (including AWS components – EKS, IAM and ECR) – as opposed to having to seek support for each component separately from different vendors.

Using Cisco Container Platform to Provision Kubernetes Clusters in Amazon EKS and on-premises

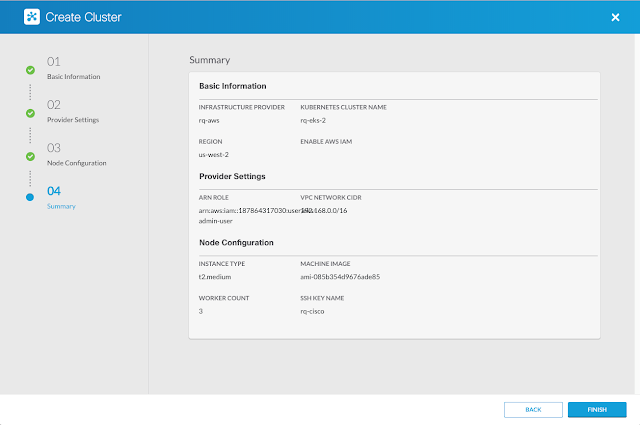

To see what this looks like in practice, let’s walk through how the administrator would create an EKS cluster using the Cisco Container Platform (CCP) dashboard.

Provisioning an EKS cluster is as simple as a few button clicks. You first define AWS as your infrastructure provider. This includes a provider name and AWS account credentials.

Note: The AWS account credentials specified here will be the AWS IAM identity that has privileges to manage the EKS cluster.

Next, you specify basic information about your Amazon EKS cluster. This includes the AWS region you want to deploy the EKS cluster in, an optional additional IAM user or role that you want to allow to manage the EKS cluster, a cluster name and the Kubernetes version for the cluster.

Finally, you configure information about the EKS worker nodes. This includes the instance types, machine image, number of worker nodes and public SSH keys.

And that’s it! Behind the scenes, CCP uses the Amazon APIs to provision the following resources:

◈ A new VPC (including subnets, security groups, route tables, etc.) in your account in accordance with AWS best practices, with secure private and public subnets as recommended by Cisco for VPN interconnection

◈ A service role for EKS

◈ A node instance profile for the EKS worker nodes

◈ An EKS cluster

◈ An autoscaling group with EKS worker nodes

◈ A configMap on your cluster that allows the worker nodes to join the master

Once the cluster is deployed, you can download a pre-generated Kubernetes cluster config file (~/.kube/config). CCP leverages the open source aws-iam-authenticator kubectl plugin that uses credentials from your local ~/.aws/credentials file to authenticate an AWS IAM user with the EKS cluster.

For on-premises Kubernetes clusters deployed and managed by CCP, the solution offers an integrated experience with Amazon Cloud. As part of the integration with AWS, you can now select the “enable AWS IAM” option, which will install the AWS IAM authenticator components in the newly created on-premises Kubernetes cluster. This allows you to use a single set of AWS IAM credentials to access Kubernetes clusters both on-premises as well as in EKS.

With clusters provisioned in cloud and on-premises environments, let’s take a deeper look at each of the AWS integrations in Cisco Hybrid Solution for Kubernetes on AWS.

Common IAM Identity for Authentication with a common RBAC policy for Authorization

CCP leverages the open source AWS IAM authenticator to enable a common AWS IAM user/role to authenticate with clusters in both cloud and on-premises environments. Once the user/role authenticates with the clusters, a configurable common RBAC policy defines the specific actions that the user/role is authorized to perform within the respective clusters. As a result, you simply switch contexts in the common “kubectl” CLI tool to access either environment.

By default, the AWS credentials specified at the time of Amazon EKS cluster creation are mapped to the Kubernetes ‘cluster-admin’ ClusterRole (through the “system:masters” group ClusterRoleBinding). This IAM identity has administrative control of the EKS cluster. As noted before, you can optionally specify an additional AWS IAM role or IAM user as an Amazon Resource Name (ARN). When you specify this, CCP:

1) Maps an additional associated role in the EKS cluster configMap.

2) Adds the associated role to the kubeconfig so that the AWS IAM authenticator can use that role to authenticate with the EKS cluster.
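
As a rough illustration of these two steps, the Python sketch below renders the kind of role mapping that the AWS IAM authenticator reads from the `mapRoles` field of the EKS `aws-auth` configMap, together with a matching kubeconfig user entry. The role ARN, user name, and cluster name are hypothetical placeholders, the `apiVersion` default is an assumption that depends on your Kubernetes and authenticator versions, and the exact entries CCP writes may differ.

```python
import json

# Hypothetical placeholder values, used for illustration only.
ROLE_ARN = "arn:aws:iam::111122223333:role/example-eks-admin"
CLUSTER_NAME = "example-eks-cluster"


def map_role_entry(role_arn, username, groups):
    """Render one mapRoles entry (YAML text) for the aws-auth configMap."""
    lines = [
        f"- rolearn: {role_arn}",
        f"  username: {username}",
        "  groups:",
    ]
    lines += [f"    - {group}" for group in groups]
    return "\n".join(lines) + "\n"


def kubeconfig_user(name, cluster_name, role_arn,
                    api_version="client.authentication.k8s.io/v1beta1"):
    """Build a kubeconfig user that obtains tokens via aws-iam-authenticator.

    The api_version default is an assumption; older clusters used v1alpha1.
    """
    return {
        "name": name,
        "user": {
            "exec": {
                "apiVersion": api_version,
                "command": "aws-iam-authenticator",
                # "token -i <cluster> -r <role>" asks the authenticator to
                # assume the given role before signing the token.
                "args": ["token", "-i", cluster_name, "-r", role_arn],
            }
        },
    }


# Step 1: the mapping added to the configMap.
print(map_role_entry(ROLE_ARN, "example-admin", ["system:masters"]))
# Step 2: the user entry added to the kubeconfig (JSON is also valid YAML).
print(json.dumps(kubeconfig_user("aws", CLUSTER_NAME, ROLE_ARN), indent=2))
```

Mapping the role to a less privileged group than `system:masters`, and binding that group with your own RBAC policy, is how the same identity can be given narrower permissions.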

For the on-premises cluster, you can enable the AWS IAM integration to authenticate with the cluster using the same IAM identity. You do this by specifying the ARN of an AWS IAM user during the on-premises cluster creation process. CCP similarly maps this user to the Kubernetes ‘cluster-admin’ ClusterRole in the on-premises cluster’s configMap. It also updates the on-premises cluster’s kubeconfig, which in turn enables the AWS IAM authenticator client to authenticate with the on-premises cluster using the same IAM identity.

With IAM configured as described above, it is then possible to use a common RBAC policy, applied to Kubernetes clusters either on EKS or on-premises, to control access to resources.

Common Amazon Elastic Container Registry (ECR)

CCP integrates with ECR, providing a secure, single repository for all the container images.

For Amazon EKS worker nodes, CCP automatically provisions an instance-role that has permissions to read/write from an ECR repository.

Since on-premises nodes have no such role, an additional step is necessary: the credentials must be stored in a Kubernetes secret, which is then referenced by the pod manifest. A short script can do that for you.

This script fetches an authorization token from AWS and stores it in a Kubernetes secret which is read during the pod deployment. Note that it is necessary to periodically refresh this token. By default, the token expires after 12 hours.
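
A minimal Python sketch of the core of such a script follows. It assumes you have already obtained an ECR authorization token (for example with `aws ecr get-authorization-token`) and turns it into a secret manifest of type `kubernetes.io/dockerconfigjson`. The secret name, registry host, and function name are hypothetical, and this is not necessarily how CCP or the original script does it.

```python
import base64
import json


def docker_config_secret(secret_name, registry, authorization_token,
                         namespace="default"):
    """Build a Kubernetes image-pull secret from an ECR authorization token.

    ECR returns the token as base64("AWS:<password>"), which is also the
    format expected in the "auth" field of a Docker config file.
    """
    decoded = base64.b64decode(authorization_token).decode()
    username, password = decoded.split(":", 1)
    docker_config = {
        "auths": {
            registry: {
                "username": username,
                "password": password,
                "auth": authorization_token,
            }
        }
    }
    # Secret data values must themselves be base64-encoded.
    encoded = base64.b64encode(json.dumps(docker_config).encode()).decode()
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": secret_name, "namespace": namespace},
        "type": "kubernetes.io/dockerconfigjson",
        "data": {".dockerconfigjson": encoded},
    }


# Illustration with a fake token; a real one comes from AWS.
fake_token = base64.b64encode(b"AWS:not-a-real-password").decode()
secret = docker_config_secret(
    "example-ecr-secret",                             # hypothetical name
    "111122223333.dkr.ecr.us-west-2.amazonaws.com",   # hypothetical registry
    fake_token,
)
print(json.dumps(secret, indent=2))
```

The printed JSON can be piped to `kubectl apply -f -`. Because the token expires, the same steps would need to run on a schedule shorter than the expiry window.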

After running the script above, you can deploy a Kubernetes manifest via kubectl, specifying the relevant details of the ECR repository, as you normally would. The example pod manifest below demonstrates how the ECR repository used by an application is specified in the image property.

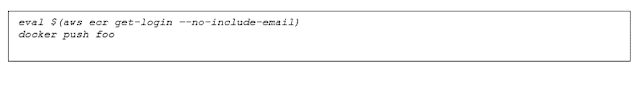

To pull images from an ECR registry, it is necessary to provide credentials, as described in Amazon’s ECR documentation. A user running Docker logs in with an ECR authorization token (ecr:GetAuthorizationToken privileges are required), while Kubernetes uses the credentials stored in a Kubernetes secret as described earlier and referenced in the “imagePullSecrets” field of the pod manifest.
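
To make the relationship between the image reference and the secret concrete, here is a small Python sketch that assembles a pod manifest pointing at an ECR image and naming the pull secret. The account ID, region, repository, tag, and secret name are all hypothetical placeholders.

```python
import json


def ecr_image(account_id, region, repository, tag):
    """Compose an ECR image reference from its parts."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def pod_manifest(name, image, pull_secret):
    """Build a single-container pod that pulls its image using a named secret."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [{"name": name, "image": image}],
            # Must match the name of the secret holding the ECR credentials.
            "imagePullSecrets": [{"name": pull_secret}],
        },
    }


image = ecr_image("111122223333", "us-west-2", "example-app", "1.0")
manifest = pod_manifest("example-app", image, "example-ecr-secret")
print(json.dumps(manifest, indent=2))
```

On EKS worker nodes the `imagePullSecrets` entry is not needed, since the instance role already grants access to ECR.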

With CCP, you can deploy both your on-premises and Amazon EKS worker nodes with the same Kubernetes version and operating system.

At launch, you can deploy Kubernetes v1.10 with Ubuntu 18.04 worker nodes, using Cisco-provided images. You do not have to worry about Kubernetes and operating system version inconsistencies across siloed environments. Updates and security patches across the on-premises and AWS environment are handled seamlessly and provided via the CCP control plane software.

Common Monitoring and Logging

CCP provides integrated cluster monitoring via Prometheus and an EFK stack (ElasticSearch/FluentD/Kibana) deployed within each cluster that CCP creates. Monitoring each cluster locally follows best practices that mandate separating production data from development data and helps keep information local for GDPR. It also ensures that logs and metrics do not rely on a central service that could be unavailable. Cisco Services can help with log forwarding and central metrics collection, as well as integration with customers’ own logging and metrics systems as desired.

Value-added Integrations for Connectivity, Security and Monitoring

Cisco’s extended cross-portfolio solutions provide a range of value-added solutions that can be leveraged from the AWS marketplace to complement the Cisco Hybrid Solution for Kubernetes on AWS.

These include:

◈ Application Deployment: Use Cisco CloudCenter to securely deploy both Kubernetes and VM-based workloads across both private and public infrastructure.

◈ Connectivity: Use Cisco CSR1000v to establish VPN connectivity between hybrid on-premises and cloud environments.

◈ Security: Deploy Cisco Stealthwatch to monitor network and application traffic for anomalies, leveraging AWS flow logs for cloud-based workloads.

◈ Monitoring: Enable AppDynamics application performance monitoring to see the real-time impact that application performance has on your business results.
