DevNet Developer Advocates, Unite!

Cisco Action Orchestrator (CAO) has been getting some attention from the DevNet Developer Advocates recently. So, I’m going to jump in and do my part and write about how I used CAO to configure Cisco ACI and Cisco UCS.

It was kind of interesting how this plan came together. We were talking about a Multi-domain automation project/demo and were trying to determine how we should pull all the parts together. We all had different pieces: Stuart had SD-WAN; Matt had Meraki; I had UCS and ACI; Kareem had DNAC; and Hank had the bits and pieces and what-nots (IP addresses, DNS, etc.).

I suggested Ansible, Matt and Kareem were thinking Python, Stuart proposed Postman Runner, and Hank threw CAO into the mix. All valid suggestions. CAO stood out, though, because we could still use the tools we were familiar with and have them called from a CAO workflow. Or we could use the built-in capabilities of CAO, like the “HTTP Request” activity in the “Web Service” Adapter.

Gentlemen, Start Your Workflows

CAO was decided upon and we all got to work on our workflows. The individual workflows are the parts we needed to make “IT” happen. Whatever the “IT” was.

I had two pieces to work on:

1. Provision a UCS Server using a Service Profile

2. Create an ACI tenant with all the necessary elements for policies, networks, and an Application Profile

I knew a couple of things my workflows would need – an IP Address and a Tenant Name. Hank’s workflows would give me the IP Address and Matt’s workflows would give me the Tenant Name.

With that information in hand, I got to making my part of the “IT” happen. At the same time, the others, knowing what they needed to provide, got to making their part of the “IT” happen.

Cisco ACI – DRY (Don’t Repeat Yourself)

A pretty standard rule in programming is “don’t repeat yourself.” What does it mean? Simply put, if you are going to do something more than once, write a function!

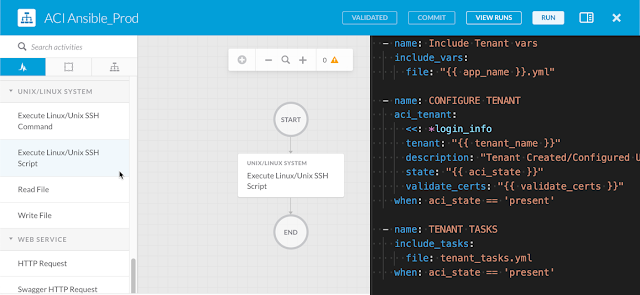

I already wrote several Ansible Playbooks to provision a Cisco ACI Tenant and create an Application Profile, so I decided to use them. The workflow I created in CAO for ACI is just a call to the “Execute Linux/Unix SSH Script” activity in the “Unix/Linux System” Adapter.

For the Workflow I had to define a couple of CAO items.

◉ A target – In this case, the “target” is a Linux host where the Ansible Playbook is run.

◉ An Account Key – The “Account Key” is the credential that is used to connect to the “target”. I used a basic “username/password” credential.

The workflow is simple…

◉ Get the Tenant Name

◉ Connect to the Ansible control node

◉ Run the ansible-playbook command passing the Tenant Name as an argument.

Could more be done here? Absolutely – error checking/handling, logging, additional variables, and so on. Lots more could be done, but I would do it as part of my Ansible deployment and let CAO take advantage of it.
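As a rough illustration of the last step in that workflow, here is a minimal Python sketch that builds the command line the SSH activity might run on the Ansible control node. The playbook file name `aci_tenant.yml` and the variable name `tenant_name` are hypothetical; the article does not show the real playbook or its variables.

```python
import json
import shlex


def build_playbook_command(playbook: str, tenant_name: str) -> str:
    """Return a shell-safe ansible-playbook command line."""
    # JSON-encoded extra vars keep tenant names with spaces or quotes intact.
    # "tenant_name" is an assumed variable name, not taken from the article.
    extra_vars = json.dumps({"tenant_name": tenant_name})
    # shlex.join quotes each argument so the string is safe to hand to a shell.
    return shlex.join(["ansible-playbook", playbook, "--extra-vars", extra_vars])


if __name__ == "__main__":
    # "aci_tenant.yml" is a placeholder playbook name.
    print(build_playbook_command("aci_tenant.yml", "Tenant01"))
```

Quoting the arguments matters here because the Tenant Name comes from another workflow and ends up inside a shell command.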

Cisco UCS – Put Your SDK in the Cloud

The Cisco UCS API is massive – thousands and thousands of objects and a multitude of methods to query and configure those objects. Once connected to a UCS Manager all interactions come down to two basic operations, Query and Configure.

I created an AWS Lambda function and made it accessible via an AWS API Gateway. The Lambda function accepts a JSON formatted payload that is divided into two sections, auth and action.

The auth section is the hostname, username, and password used to connect to the Cisco UCS Manager.

The action section is a listing of Cisco UCS Python SDK Modules, Classes, and Object properties. The Lambda function uses Python’s dynamic module importing capability to import modules as needed.
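The Lambda code itself is not shown here, so the following is only a sketch of how dynamic importing of this kind could work, using Python's standard `importlib`. The helper name `build_object` is made up for illustration, and a standard-library class stands in for a UCS class so the example runs without the UCS Python SDK installed.

```python
import importlib


def build_object(module_path: str, class_name: str, properties: dict):
    """Import module_path at runtime and instantiate class_name with properties."""
    # import_module resolves a dotted path given as a string.
    module = importlib.import_module(module_path)
    # Look the class up by name, then pass the properties as keyword arguments.
    cls = getattr(module, class_name)
    return cls(**properties)


if __name__ == "__main__":
    # Stand-in from the standard library; with the SDK installed, the module,
    # class, and properties would come from the payload's "objects" entries.
    value = build_object("fractions", "Fraction", {"numerator": 3, "denominator": 4})
    print(value)
```

Because the module and class arrive as strings, the function needs no hard-coded import for each UCS object type it might be asked to create.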

This payload connects to the specified UCS Manager and creates an Organization called Org01:

    {
        "auth": {
            "hostname": "ucsm.company.com",
            "username": "admin",
            "password": "password"
        },
        "action": {
            "method": "configure",
            "objects": [
                {
                    "module": "ucsmsdk.mometa.org.OrgOrg",
                    "class": "OrgOrg",
                    "properties": {
                        "parent_mo_or_dn": "org-root",
                        "name": "Org01"
                    },
                    "message": "created organization Org01"
                }
            ]
        }
    }

This payload connects to the specified UCS Manager and queries the Class ID computeRackUnit:

    {
        "auth": {
            "hostname": "ucsm.company.com",
            "username": "admin",
            "password": "password"
        },
        "action": {
            "method": "query_classid",
            "class_id": "computeRackUnit"
        }
    }

To be honest there is a lot of DRY going on here as well. The Python code for this Lambda function is very similar to the code for the UCS Ansible Collection module, ucs_managed_objects.
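To make the Query and Configure split concrete, here is a hedged sketch of how a handler might route the action section of a payload. It is not the actual Lambda code. `handle_action`, `StubHandle`, and the `build_object` callable are hypothetical names; the stub mimics the `query_classid`, `add_mo`, and `commit` methods that a logged-in `UcsHandle` from the UCS Python SDK provides, so the example runs without a UCS Manager.

```python
from types import SimpleNamespace


def handle_action(handle, action: dict, build_object) -> dict:
    """Route the payload's action section to a query or a configure operation."""
    method = action.get("method")
    if method == "query_classid":
        # Query: fetch every managed object of the requested class.
        found = handle.query_classid(action["class_id"])
        return {"dns": [mo.dn for mo in found]}
    if method == "configure":
        # Configure: build each object, stage it, then commit once.
        messages = []
        for spec in action["objects"]:
            handle.add_mo(build_object(spec), modify_present=True)
            messages.append(spec.get("message", ""))
        handle.commit()
        return {"messages": messages}
    raise ValueError(f"unsupported method: {method!r}")


class StubHandle:
    """Hypothetical stand-in for a logged-in UCS handle."""

    def __init__(self):
        self.added = []
        self.committed = False

    def query_classid(self, class_id):
        return [SimpleNamespace(dn="sys/rack-unit-1")]

    def add_mo(self, mo, modify_present=False):
        self.added.append(mo)

    def commit(self):
        self.committed = True


if __name__ == "__main__":
    handle = StubHandle()
    query = {"method": "query_classid", "class_id": "computeRackUnit"}
    print(handle_action(handle, query, build_object=None))
```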

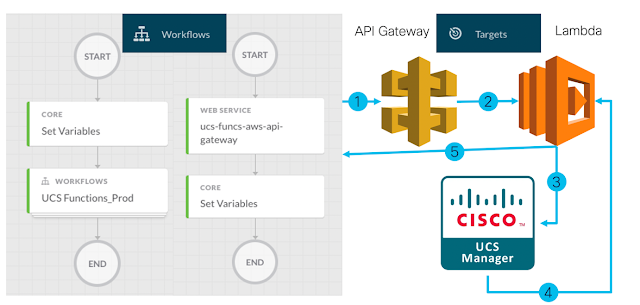

In CAO the workflow uses the Tenant Name as input and a Payload is created to send to the UCS SDK in the Cloud. There are two parts to the UCS CAO workflows. One workflow creates the Payload and another workflow processes the Payload by sending it to the AWS API Gateway. Creating a generic Payload processing workflow enables the processing of any payload that defines any UCS object configuration or query.

For this workflow I needed to define a target in CAO:

◉ Target – In this case the “target” is the AWS API Gateway that accepts the JSON Payload and sends it on to the AWS Lambda function.

The workflow is simple…

◉ Get the Tenant Name and an IP Address

◉ Create and process the Payload for a UCS Organization named with the Tenant Name

◉ Create and process the Payload for a UCS Service Profile named with the Tenant Name
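Outside of CAO, the create-then-process split could be sketched in Python roughly as follows. One function creates the Organization payload from the Tenant Name, and a separate generic function wraps any payload in an HTTP POST. The function names and the gateway URL are placeholders, and the request is only constructed, never sent.

```python
import json
import urllib.request


def org_payload(auth: dict, tenant_name: str) -> dict:
    """Create the payload for a UCS Organization named after the tenant."""
    return {
        "auth": auth,
        "action": {
            "method": "configure",
            "objects": [
                {
                    "module": "ucsmsdk.mometa.org.OrgOrg",
                    "class": "OrgOrg",
                    "properties": {"parent_mo_or_dn": "org-root", "name": tenant_name},
                    "message": f"created organization {tenant_name}",
                }
            ],
        },
    }


def build_request(url: str, payload: dict) -> urllib.request.Request:
    """Wrap any payload, configuration or query, in a JSON POST request."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


if __name__ == "__main__":
    auth = {"hostname": "ucsm.company.com", "username": "admin", "password": "password"}
    # Placeholder URL; the real API Gateway endpoint is not given in the article.
    request = build_request("https://example.invalid/ucs", org_payload(auth, "Tenant01"))
    print(request.get_method(), request.full_url)
    # urllib.request.urlopen(request) would actually send it.
```

Keeping `build_request` unaware of what the payload contains mirrors the generic Payload processing workflow: a Service Profile payload or a query would go through the same function unchanged.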

Pulling “IT” all together

Remember earlier I indicated that we all went off and created our “IT” separately using our familiar tools and incorporating some DRY. When the time came for us to put our pieces together, it didn’t matter that I was using Ansible and Python and AWS; my workflows just needed a couple of inputs. As long as those inputs were available, we were able to tie my workflows into a larger Multi-domain workflow.

The same was true for Stuart, Matt, Kareem and Hank. As long as we knew what input was needed and what output was needed, we could connect our individual pieces. The pieces of “IT” fit together nicely, and in less than forty minutes on a Webex call we had the main all-encompassing workflow created and running without error.
