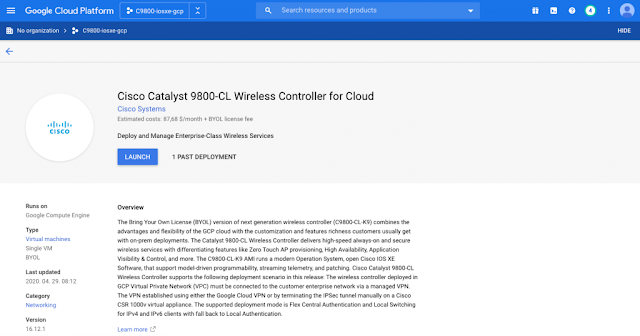

When I heard that the Cisco Catalyst 9800 Wireless Controller for Cloud was supported as an IaaS solution on Google Cloud with Cisco IOS-XE version 16.12.1, I wanted to give it a try.

Built from the ground up for Intent-Based Networking and Cisco DNA, Cisco Catalyst 9800 Series Wireless Controllers are based on Cisco IOS® XE, integrate the RF excellence of Cisco Aironet® access points, and are built on the three pillars of network excellence: Always on, Secure and Deployed anywhere (on-premises, private or public cloud).

I had a Cisco Catalyst 9300 Series switch and a Wi-Fi 6 Cisco Catalyst 9117AX access point with me. I also had internet connectivity, of course, and that should be enough to reach the cloud, right?

I was about to build the best-in-class wireless test possible: the best Wi-Fi 6 access point on the market (AP9117AX), connected to the best LAN switching technology (Catalyst 9300 Series switch with mGig/802.3bz and UPOE/802.3bt), controlled by the best wireless LAN controller (C9800-CL) running the best operating system (Cisco IOS XE), and deployed on what I consider the best public cloud platform (GCP).

Let me show you how simple and great it was!

(NOTE: Please refer to the Deployment Guide and Release Notes for further details. This blog is not intended to be a guide; rather, it shares my experience, shows how to test the solution quickly, and highlights the aspects of the process that excited me the most.)

The only supported deployment mode uses a managed VPN between your premises and Google Cloud (as shown in the previous picture). For simplicity and testing purposes, I just used the public IP address of the cloud instance to build my setup.

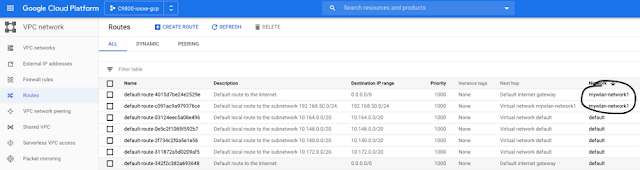

Virtual Private Cloud or VPC

GCP creates a ‘default’ VPC that we could have used for simplicity, but I preferred (as is recommended) to create a dedicated VPC (mywlan-network1) for this lab under a dedicated project (C9800-iosxe-gcp).

I also selected the region closest to me (europe-west1) and a specific IP address range, 192.168.50.0/24, for my subnet (custom-subnet-eu-w1). GCP automatically assigns my Wireless LAN Controller (WLC) an internal IP address from that range and provides a default gateway in the same subnet.
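GCP reserves four addresses in every subnet's primary IPv4 range (the network address, the default gateway, the second-to-last address and the broadcast address), so the WLC receives one of the remaining ones. The minimal sketch below, using only Python's standard `ipaddress` module, shows which addresses that leaves in 192.168.50.0/24. The function name is hypothetical; note that `ipaddress` rejects the abbreviated form `192.168.50/24`, so the range has to be written in full.

```python
import ipaddress


def gcp_subnet_reserved(cidr: str) -> dict:
    """Return the four addresses GCP reserves in a subnet's primary IPv4 range."""
    net = ipaddress.ip_network(cidr)
    return {
        "network": net.network_address,
        "default_gateway": net.network_address + 1,
        "second_to_last": net.broadcast_address - 1,
        "broadcast": net.broadcast_address,
    }


subnet = "192.168.50.0/24"  # custom-subnet-eu-w1 in this lab
reserved = gcp_subnet_reserved(subnet)
usable = ipaddress.ip_network(subnet).num_addresses - len(reserved)

print(reserved["default_gateway"])  # 192.168.50.1
print(usable)                       # 252
```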

A very interesting feature of GCP is that network routing is built in: you don’t have to provision or manage a router, because GCP does it for you. As you can see, for mywlan-network1 GCP automatically creates a route for the 192.168.50.0/24 subnet (named default-route-c091ac9a979376ce) and a default route to the internet (0.0.0.0/0). In auto mode VPCs, such as the ‘default’ one, every region also gets a subnet automatically, making the whole process even simpler and more automated if needed.
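When both routes match a destination, GCP first prefers the most specific one (longest prefix). The Python sketch below models that lookup for the two routes in this VPC. It is an illustration, not the GCP API: the name of the internet route and the next-hop labels are hypothetical.

```python
import ipaddress
from typing import List, NamedTuple


class Route(NamedTuple):
    name: str
    destination: ipaddress.IPv4Network
    next_hop: str


# Only the first route name comes from the console; the second is hypothetical.
ROUTES: List[Route] = [
    Route("default-route-c091ac9a979376ce",
          ipaddress.ip_network("192.168.50.0/24"), "subnet custom-subnet-eu-w1"),
    Route("internet-route-hypothetical",
          ipaddress.ip_network("0.0.0.0/0"), "default internet gateway"),
]


def select_route(destination: str) -> Route:
    """Return the most specific route (longest prefix) containing the destination."""
    addr = ipaddress.ip_address(destination)
    candidates = [r for r in ROUTES if addr in r.destination]
    if not candidates:
        raise LookupError(f"no route to {destination}")
    return max(candidates, key=lambda r: r.destination.prefixlen)


print(select_route("192.168.50.10").next_hop)  # subnet custom-subnet-eu-w1
print(select_route("8.8.8.8").next_hop)        # default internet gateway
```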

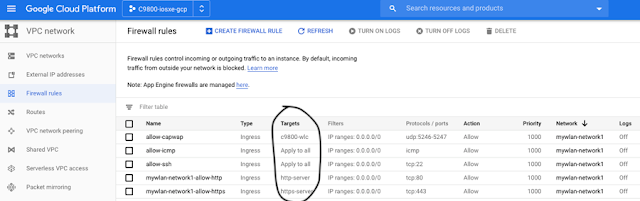

Firewall Rules

Another thing you don’t have to provision, because GCP manages it for you, is the firewall. VPCs give you a global distributed firewall that you can control to restrict access to instances, for both incoming and outgoing traffic. By default, all ingress (incoming) traffic is blocked. To connect to the C9800-CL instance once it is up and running, we need to allow SSH and HTTP/HTTPS by adding ingress firewall rules. We will also allow ICMP, which is very useful for quick IP reachability checks.

We will also allow CAPWAP traffic (UDP port 5246 for control and 5247 for data) so the AP can join the WLC.
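To make the "deny by default, allow by exception" behavior concrete, here is a small Python model of the ingress rules listed above. It is only a sketch, assuming one rule per protocol; the rule names are hypothetical and this is not how GCP stores or evaluates rules internally.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class IngressRule:
    name: str                      # hypothetical rule name
    protocol: str                  # "tcp", "udp" or "icmp"
    ports: Optional[range] = None  # None = protocol has no ports (ICMP)


# Ingress rules described in the text; names are illustrative only.
RULES = [
    IngressRule("allow-ssh", "tcp", range(22, 23)),
    IngressRule("allow-http", "tcp", range(80, 81)),
    IngressRule("allow-https", "tcp", range(443, 444)),
    IngressRule("allow-icmp", "icmp"),
    IngressRule("allow-capwap", "udp", range(5246, 5248)),  # 5246 control, 5247 data
]


def is_allowed(protocol: str, port: Optional[int] = None) -> bool:
    """Return True if some rule admits the packet; otherwise the implied deny applies."""
    for rule in RULES:
        if rule.protocol != protocol:
            continue
        if rule.ports is None or (port is not None and port in rule.ports):
            return True
    return False  # implied rule: all other ingress traffic is denied


print(is_allowed("udp", 5247))  # True  (CAPWAP data)
print(is_allowed("tcp", 23))    # False (Telnet was never opened)
```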

You can define firewall rules in terms of network tags on Compute Engine instances, which is really convenient.

As you can see, these are ACLs applied to targets identified by tags, meaning that I don’t need to base my access lists on complex IP addresses but rather on tags that identify both sources and destinations. In this case, I permit HTTP, HTTPS, ICMP, or CAPWAP either to all targets or just to specific ones, very similar to what we do with Cisco TrustSec and SGTs. My C9800-CL carries all of those tags, so I’m basically allowing all the protocols mentioned above.
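Tag-based targeting boils down to a set intersection: a rule with no target tags applies to every instance in the network, and a rule with target tags applies only to instances carrying at least one of them. The Python sketch below models that selection step; all tag and rule names are hypothetical, not the ones used in this lab.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List


@dataclass(frozen=True)
class TaggedRule:
    name: str
    allow: str                                  # e.g. "tcp:443"
    target_tags: FrozenSet[str] = frozenset()   # empty = applies to every instance


def applicable_rules(rules: Iterable[TaggedRule],
                     instance_tags: Iterable[str]) -> List[TaggedRule]:
    """Return the rules whose target tags match at least one tag on the instance."""
    tags = frozenset(instance_tags)
    return [r for r in rules if not r.target_tags or r.target_tags & tags]


# Hypothetical rules and tags, for illustration only.
RULES = [
    TaggedRule("allow-icmp", "icmp"),
    TaggedRule("allow-http", "tcp:80", frozenset({"http-server"})),
    TaggedRule("allow-https", "tcp:443", frozenset({"https-server"})),
    TaggedRule("allow-capwap", "udp:5246-5247", frozenset({"capwap"})),
]

c9800_tags = {"http-server", "https-server", "capwap"}
print([r.name for r in applicable_rules(RULES, c9800_tags)])
# ['allow-icmp', 'allow-http', 'allow-https', 'allow-capwap']
print([r.name for r in applicable_rules(RULES, {"http-server"})])
# ['allow-icmp', 'allow-http']
```

As with SGTs, adding a tag to the instance is enough to bring it under a rule; no IP address in the rule needs to change.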

Launching the Cisco Catalyst C9800-CL image on Google Cloud

The Cisco Catalyst 9800-CL is launched directly from the Google Cloud Platform Marketplace and deployed on a Google Compute Engine (GCE) instance (VM).

You then prepare for the deployment through a wizard that will ask you for parameters like hostname, credentials, zone to deploy, scale of your instance, networking parameters, etc. Really easy and intuitive.

And GCP will magically deploy the system for you!

(The external IP address is ephemeral, which is not a big deal: it will only be used for this test while the instance is running.)

MJIMENA-M-M0KF:~ mjimena$ ping 35.189.203.140

PING 35.189.203.140 (35.189.203.140): 56 data bytes

64 bytes from 35.189.203.140: icmp_seq=0 ttl=247 time=33.608 ms

64 bytes from 35.189.203.140: icmp_seq=1 ttl=247 time=31.220 ms
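If you want to keep an eye on the round-trip time between your premises and the GCP region, the replies above are easy to parse. A minimal Python sketch, assuming the macOS `ping` output format shown here (`time=... ms`); the function name is hypothetical.

```python
import re
from statistics import mean
from typing import List

PING_OUTPUT = """\
64 bytes from 35.189.203.140: icmp_seq=0 ttl=247 time=33.608 ms
64 bytes from 35.189.203.140: icmp_seq=1 ttl=247 time=31.220 ms
"""

RTT_RE = re.compile(r"time=([\d.]+) ms")


def rtts_ms(output: str) -> List[float]:
    """Extract every round-trip time (in ms) from ping output."""
    return [float(m.group(1)) for m in RTT_RE.finditer(output)]


samples = rtts_ms(PING_OUTPUT)
print(f"{len(samples)} replies, avg {mean(samples):.3f} ms")  # 2 replies, avg 32.414 ms
```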

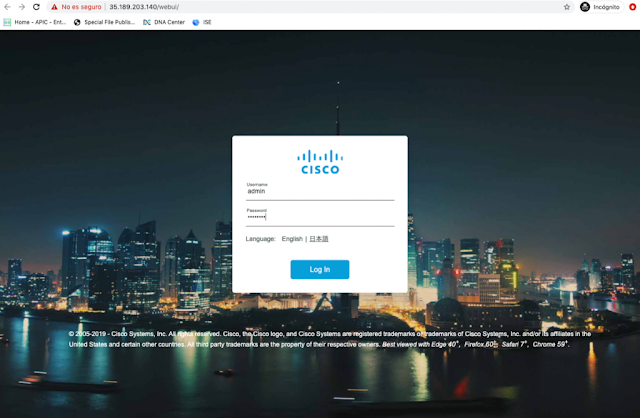

I have IP reachability. Let me open a web browser and… I’m in!

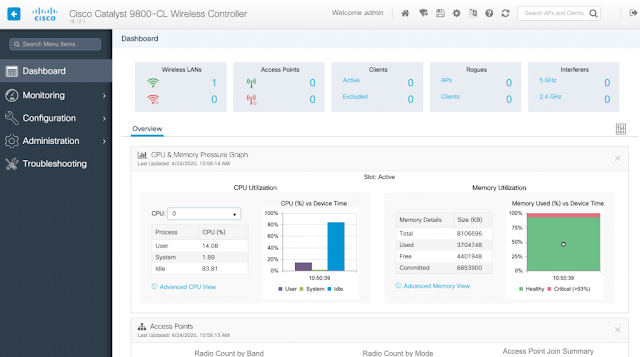

After entering a few initial setup parameters in the GUI, my C9800-CL is ready, with a WLAN (SSID) configured but no access point registered yet.

I access the C9800 CLI over SSH (remember the firewall rule we configured in GCP):

MJIMENA-M-M0KF:~ mjimena$ ssh admin@35.189.203.140

The authenticity of host '35.189.203.140 (35.189.203.140)' can't be established.

RSA key fingerprint is SHA256:HI10434rnGdfQyHjxBA92ywdkib6nBYG6jykNRTddXg.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '35.189.203.140' (RSA) to the list of known hosts.

Password:

c9800-cl#

Let’s double-check the version we are running:

c9800-cl#show ver | sec Version

Cisco IOS XE Software, Version 16.12.01

Cisco IOS Software [Gibraltar], C9800-CL Software (C9800-CL-K9_IOSXE), Version 16.12.1, RELEASE SOFTWARE (fc4)
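If you automate this check, the release can be pulled out of `show version` and compared with 16.12.1, the release the article cites for GCP support. A Python sketch under that assumption; the function and constant names are hypothetical. Converting each field with `int()` makes "16.12.01" and "16.12.1" compare equal.

```python
import re
from typing import Tuple

# Per the article, GCP support starts with Cisco IOS XE 16.12.1.
MIN_GCP_RELEASE = (16, 12, 1)


def parse_iosxe_version(show_version: str) -> Tuple[int, int, int]:
    """Extract the first 'Version X.Y.Z' found in 'show version' output."""
    match = re.search(r"Version (\d+)\.(\d+)\.(\d+)", show_version)
    if match is None:
        raise ValueError("no IOS XE version found")
    major, minor, rebuild = (int(g) for g in match.groups())
    return major, minor, rebuild


output = """Cisco IOS XE Software, Version 16.12.01
Cisco IOS Software [Gibraltar], C9800-CL Software (C9800-CL-K9_IOSXE), Version 16.12.1, RELEASE SOFTWARE (fc4)"""

version = parse_iosxe_version(output)
print(version, version >= MIN_GCP_RELEASE)  # (16, 12, 1) True
```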

Any neighbors there in the cloud?

c9800-cl#show cdp neighbors

Capability Codes: R - Router, T - Trans Bridge, B - Source Route Bridge

S - Switch, H - Host, I - IGMP, r - Repeater, P - Phone,

D - Remote, C - CVTA, M - Two-port Mac Relay

Device ID Local Intrfce Holdtme Capability Platform Port ID

Total cdp entries displayed : 0

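OK, that makes sense: CDP only discovers directly connected Cisco devices, and there are none next to an instance in GCP.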

The C9800-CL has a public IP address associated with its internal IP address, and we need the controller to answer AP joins with the public IP rather than the private one. For that, enter the following global configuration command (all on one line) and verify it in the running configuration:

c9800-cl#sh run | i public

wireless management interface GigabitEthernet1 nat public-ip 35.189.203.140

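The reason for this command is that GCP translates the external address to the instance's private 192.168.50.x address, and an AP on the internet can only reach the public one. If you template this line for several controllers, a small guard against pasting a private address can help. A minimal Python sketch; the helper name is hypothetical, and it only builds the configuration string, which you still enter on the controller as shown above.

```python
import ipaddress


def nat_public_ip_command(interface: str, public_ip: str) -> str:
    """Build the C9800-CL global config line that advertises the public (NAT) IP."""
    ip = ipaddress.ip_address(public_ip)
    if not ip.is_global:
        # A private address such as the instance's internal 192.168.50.x IP
        # would not be reachable by an AP on the internet.
        raise ValueError(f"{ip} is not a globally routable address")
    return f"wireless management interface {interface} nat public-ip {ip}"


print(nat_public_ip_command("GigabitEthernet1", "35.189.203.140"))
# wireless management interface GigabitEthernet1 nat public-ip 35.189.203.140
```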

And indeed, no AP yet.

c9800-cl#show ap summary

Number of APs: 0

c9800-cl#

Let’s plug that Cisco AP9117AX!

I connect a brand-new Cisco AP9117AX to an mGig/UPOE port on the Cisco Catalyst 9300 switch, where it links up at 5 Gbps over copper.

I connect to the AP console and enter the following command to prime the AP to the C9800-CL instance in GCP:

AP0CD0.F894.16BC#capwap ap primary-base c9800-cl 35.189.203.140


The following command resets the CAPWAP connection with the WLC to speed up the join process:

AP0CD0.F894.16BC#capwap ap restart

I check reachability between the AP at home and my WLC in GCP:

AP0CD0.F894.16BC#ping 35.189.203.140

Sending 5, 100-byte ICMP Echos to 35.189.203.140, timeout is 2 seconds

!!!!!
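In Cisco IOS ping output, each character stands for one echo request: `!` means a reply was received, while other characters such as `.` (timeout) mean it was not. A tiny Python sketch, with a hypothetical function name, that turns that line into the familiar success-rate summary:

```python
from typing import Tuple


def ios_ping_success(result_line: str) -> Tuple[int, int]:
    """Return (replies, sent) for an IOS ping result line such as '!!!!!'.

    Each character is one echo request; only '!' counts as a reply.
    """
    line = result_line.strip()
    return line.count("!"), len(line)


replies, sent = ios_ping_success("!!!!!")
print(f"Success rate is {100 * replies // sent} percent ({replies}/{sent})")
# Success rate is 100 percent (5/5)
```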

The Cisco AP9117AX is now joining the controller and downloading its image.

The GUI shows the AP downloading the right image before it completes the join.

My setup is done!