In the highly dynamic and ever-evolving world of enterprise computing, data centers serve as the backbones of operations, driving the need for powerful, scalable, and energy-efficient server solutions. As businesses continuously strive to refine their IT ecosystems, recognizing and capitalizing on data center energy-saving attributes and design innovations is essential for fostering sustainable development and maximizing operational efficiency and effectiveness.

Cisco’s Unified Computing System (UCS) stands at the forefront of this technological landscape, offering a comprehensive portfolio of server options tailored to meet diverse requirements. Each component of the UCS family, including the B-Series, C-Series, S-Series, HyperFlex, and X-Series, is designed with energy efficiency in mind, delivering performance while limiting energy use. Energy efficiency is a major consideration from the earliest planning and design phases of these products through every subsequent update.

The UCS Blade Servers and Chassis (B-Series) provide a harmonious blend of integration and dense computing power, while the UCS Rack-Mount Servers (C-Series) offer versatility and incremental scalability. These offerings are complemented by Cisco’s UCS HyperFlex Systems, the next generation of hyperconverged infrastructure that brings compute, storage, and networking into a cohesive, highly efficient platform. Furthermore, the UCS X-Series takes flexibility and efficiency to new heights with its modular, future-proof architecture.

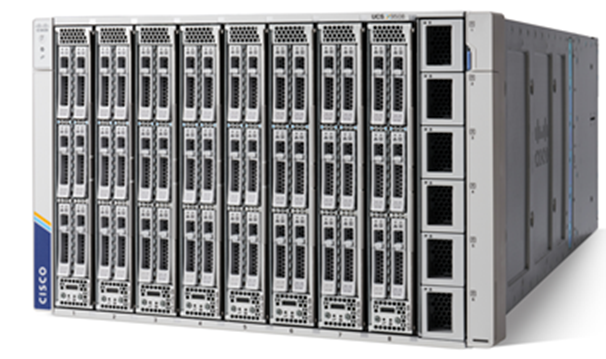

Cisco UCS B-Series Blade Chassis and Servers

The Cisco UCS B-Series Blade Chassis and Servers offer several features and design elements that contribute to greater energy efficiency compared to traditional blade server chassis. The following components and functions of UCS contribute to this efficiency:

1. Unified Design: Cisco UCS incorporates a unified system that integrates computing, networking, storage access, and virtualization resources into a single, cohesive architecture. This integration reduces the number of physical components needed, leading to lower power consumption compared to traditional setups where these elements are usually separate and require additional power.

2. Power Management: UCS includes sophisticated power management capabilities at both the hardware and software levels. This enables dynamic power allocation based on workload demands, allowing unused resources to be powered down or put into a low-power state. Adjusting power usage to actual requirements minimizes wasted energy.

3. Efficient Cooling: The blade servers and chassis are designed to optimize airflow and cooling efficiency. This reduces the need for excessive cooling, which can be a significant contributor to energy consumption in data centers. By efficiently managing airflow and cooling, Cisco UCS helps minimize the overall energy required for server operation.

4. Higher Density: UCS Blade Series Chassis typically support higher server densities compared to traditional blade server chassis. By consolidating more computing power into a smaller physical footprint, organizations can achieve greater efficiency in terms of space utilization, power consumption, and cooling requirements.

5. Virtualization Support: Cisco UCS is designed to work seamlessly with virtualization technologies such as VMware, Microsoft Hyper-V, and others. Virtualization allows for better utilization of server resources by running multiple virtual machines (VMs) on a single physical server. This consolidation reduces the total number of servers needed, thereby lowering energy consumption across the data center.

6. Power Capping and Monitoring: UCS provides features for power capping and monitoring, allowing administrators to set maximum power limits for individual servers or groups of servers. This helps prevent power spikes and ensures that power usage remains within predefined thresholds, thus optimizing energy efficiency.

7. Efficient Hardware Components: UCS incorporates energy-efficient hardware components such as processors, memory modules, and power supplies. These components are designed to deliver high performance while minimizing power consumption, contributing to overall energy efficiency.

Cisco UCS Blade Series Chassis and Servers facilitate greater energy efficiency through a combination of unified design, power management capabilities, efficient cooling, higher physical density, support for virtualization, and the use of energy-efficient hardware components. By leveraging these features, organizations can reduce their overall energy consumption and operational costs in the data center.
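The group power capping described in the list above can be pictured with a small sketch. This is only an illustration of the general idea of sharing a power budget across servers, not the algorithm that UCS itself uses; the function name `allocate_power_caps`, the server names, and all wattage figures are hypothetical.

```python
def allocate_power_caps(demands_w, group_cap_w):
    """Return a per-server power cap (watts) that keeps the group within budget.

    demands_w   -- mapping of server name to requested power in watts
    group_cap_w -- total power budget for the group in watts
    """
    if group_cap_w < 0 or any(w < 0 for w in demands_w.values()):
        raise ValueError("power values must be non-negative")
    total = sum(demands_w.values())
    if total <= group_cap_w:
        # The budget covers every request, so no server is throttled.
        return {name: float(w) for name, w in demands_w.items()}
    # Over budget: scale every request by the same factor so that the
    # individual caps add up to the group budget.
    return {name: w * group_cap_w / total for name, w in demands_w.items()}


# Hypothetical demand figures, not measurements from any real chassis.
demands = {"blade-1": 400, "blade-2": 600}
caps = allocate_power_caps(demands, group_cap_w=800)
print(caps)
```

Proportional scaling is just one possible policy; a real system might instead prioritize certain servers or enforce per-server minimums.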

Cisco UCS C-Series Rack Servers

Cisco UCS C-Series Rack Servers are standalone servers that tend to be more flexible in terms of deployment and may be easier to cool individually. They are often more efficient in environments where fewer servers are required or when full utilization of a blade chassis is not possible. In such cases, deploying a few rack servers can be more energy-efficient than powering a partially empty blade chassis.
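The trade-off between a partially filled blade chassis and a few rack servers comes down to how the chassis's shared overhead (fans, power supplies, fabric modules) is spread across the installed blades. The sketch below works through that arithmetic. Every number in it is a made-up placeholder rather than a Cisco specification, and the function names are hypothetical.

```python
def blade_watts_per_server(n_blades, chassis_overhead_w, blade_w):
    """Average power per blade once shared chassis overhead is included."""
    if n_blades < 1:
        raise ValueError("need at least one blade")
    return chassis_overhead_w / n_blades + blade_w


def break_even_blades(chassis_overhead_w, blade_w, rack_w, max_blades):
    """Smallest blade count at which a blade draws no more than a rack server.

    Returns None if the chassis never breaks even within max_blades.
    """
    for n in range(1, max_blades + 1):
        if blade_watts_per_server(n, chassis_overhead_w, blade_w) <= rack_w:
            return n
    return None


# Placeholder figures chosen only to make the arithmetic easy to follow.
OVERHEAD_W = 400   # assumed shared chassis overhead
BLADE_W = 300      # assumed draw of one blade
RACK_W = 350       # assumed draw of one comparable rack server

two_blade_avg = blade_watts_per_server(2, OVERHEAD_W, BLADE_W)
break_even = break_even_blades(OVERHEAD_W, BLADE_W, RACK_W, max_blades=8)
print(two_blade_avg, break_even)
```

Under these assumed figures, two blades average 500 W each, well above the rack server, and the chassis only breaks even when all eight slots are filled.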

The Cisco UCS Rack Servers, like the Blade Series, have been designed with energy efficiency in mind. The following aspects contribute to the energy efficiency of UCS Rack Servers:

1. Modular Design: UCS Rack Servers are built with a modular design that allows for easy expansion and servicing. This means that components can be added or replaced as needed without unnecessarily wasting resources.

2. Component Efficiency: Like the Blade Series, UCS Rack Servers use high-efficiency power supplies, voltage regulators, and cooling fans. These components are chosen for their ability to deliver performance while minimizing energy consumption.

3. Thermal Design: The physical design of the UCS Rack Servers helps to optimize airflow, which can reduce the need for excessive cooling. Proper thermal management ensures that the servers maintain an optimal operating temperature, which contributes to energy savings.

4. Advanced CPUs: UCS Rack Servers are equipped with the latest processors that offer a balance between performance and power usage. These CPUs often include features that reduce power consumption when full performance is not required.

5. Energy Star Certification: Many UCS Rack Servers are Energy Star certified, meaning they meet strict energy efficiency guidelines set by the U.S. Environmental Protection Agency.

6. Management Software: Cisco’s management software allows for detailed monitoring and control of power usage across UCS Rack Servers. This software can help identify underutilized resources and optimize power settings based on the workload.

Cisco UCS Rack Servers are designed with energy efficiency as a core principle. They feature a modular design that enables easy expansion and servicing, high-efficiency components such as power supplies and cooling fans, and processors that balance performance with power consumption. The thermal design of these rack servers optimizes airflow, contributing to reduced cooling needs.

Additionally, many UCS Rack Servers have earned Energy Star certification, indicating compliance with stringent energy efficiency guidelines. Management software further enhances energy savings by allowing detailed monitoring and control over power usage, ensuring that resources are optimized according to workload demands. These factors make UCS Rack Servers a suitable choice for data centers focused on minimizing energy consumption while maintaining high performance.

Cisco UCS S-Series Storage Servers

The Cisco UCS S-Series servers are engineered to offer scalable, high-density storage, which can yield considerable energy efficiency benefits for storage-intensive workloads when compared to the UCS B-Series blade servers and C-Series rack servers. The B-Series focuses on optimizing compute density and network integration in a blade server form factor, while the C-Series provides versatile rack-mount server solutions. In contrast, the S-Series emphasizes storage density and capacity.

Each series has its unique design optimizations; however, the S-Series can often consolidate storage and compute resources more effectively, potentially reducing the overall energy footprint by minimizing the need for additional servers and standalone storage units. This consolidation is a key factor in achieving greater energy efficiency within data centers.

The UCS S-Series servers incorporate the following features that contribute to energy efficiency:

- Efficient Hardware Components: Similar to other Cisco UCS servers, the UCS S-Series servers utilize energy-efficient hardware components such as processors, memory modules, and power supplies. These components are designed to provide high performance while minimizing power consumption, thereby improving energy efficiency.

- Scalability and Flexibility: S-Series servers are highly scalable and offer flexible configurations to meet diverse workload requirements. This scalability allows engineers to right-size their infrastructure and avoid over-provisioning, which often leads to wasteful energy consumption.

- Storage Optimization: UCS S-Series servers are optimized for storage-intensive workloads by offering high-density storage options within a compact form factor. With consolidated storage resources via fewer physical devices, organizations can reduce power consumption associated with managing and powering multiple storage systems.

- Power Management Features: S-Series servers incorporate power management features similar to other UCS servers, allowing administrators to monitor and control power usage at both the server and chassis levels. These features enable organizations to optimize power consumption based on workload demands, reducing energy waste.

- Unified Management: UCS S-Series servers are part of the Cisco Unified Computing System, which provides unified management capabilities for the entire infrastructure, including compute, storage, and networking components. This centralized management approach helps administrators efficiently monitor and optimize energy usage across the data center.
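The right-sizing point in the list above can be made concrete with a little arithmetic: size the infrastructure for actual demand plus a safety margin, then estimate the idle energy that surplus nodes would otherwise consume. The sketch below uses generic capacity units and invented figures. The function names and the idle power value are assumptions for illustration, not S-Series data.

```python
def nodes_needed(demand, node_capacity, headroom_pct):
    """Nodes required to serve `demand` with `headroom_pct` percent spare capacity.

    Uses integer arithmetic so the ceiling is exact.
    """
    if node_capacity <= 0:
        raise ValueError("node_capacity must be positive")
    required = demand * (100 + headroom_pct)
    per_node = node_capacity * 100
    return -(-required // per_node)  # ceiling division


def idle_energy_kwh(surplus_nodes, idle_w, hours):
    """Energy consumed by nodes that sit idle for `hours`."""
    return surplus_nodes * idle_w * hours / 1000


# All figures below are hypothetical.
needed = nodes_needed(demand=700, node_capacity=120, headroom_pct=20)
provisioned = 10                      # an assumed over-provisioned deployment
surplus = provisioned - needed
wasted = idle_energy_kwh(surplus, idle_w=200, hours=24 * 365)
print(needed, surplus, wasted)
```

With these inputs, seven nodes cover the demand with 20 percent headroom, so three of the ten provisioned nodes would sit idle year-round.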

Cisco UCS HyperFlex HX-Series Servers

The Cisco HyperFlex HX-Series represents a fully integrated and hyperconverged infrastructure system that combines computing, storage, and networking into a simplified, scalable, and high-performance architecture designed to handle a wide array of workloads and applications.

When it comes to energy efficiency, the HyperFlex HX-Series stands out by further consolidating data center functions and streamlining resource management compared to the traditional UCS B-Series, C-Series, and S-Series. Unlike the B-Series blade servers which prioritize compute density, the C-Series rack servers which offer flexibility, or the S-Series storage servers which focus on high-density storage, the HX-Series incorporates all of these aspects into a cohesive unit. By doing so, it reduces the need for separate storage and compute layers, leading to potentially lower power and cooling requirements.

The integration inherent in hyperconverged infrastructure, such as the HX-Series, often results in higher efficiency and a smaller energy footprint as it reduces the number of physical components required, maximizes resource utilization, and optimizes workload distribution; all of this contributes to a more energy-conscious data center environment.

The HyperFlex can contribute to energy efficiency in the following ways:

- Consolidation of Resources: HyperFlex integrates compute, storage, and networking resources into a single platform, eliminating the need for separate hardware components such as standalone servers, storage arrays, and networking switches. By consolidating these resources, organizations can reduce overall power consumption when compared to traditional infrastructure setups that require separate instances of these components.

- Efficient Hardware Components: HyperFlex HX-Series Servers are designed to incorporate energy-efficient hardware components such as processors, memory modules, and power supplies. These components are optimized for performance per watt, helping to minimize power consumption while delivering robust compute and storage capabilities.

- Dynamic Resource Allocation: HyperFlex platforms often include features for dynamic resource allocation and optimization. This may include technologies such as VMware Distributed Resource Scheduler (DRS) or Cisco Intersight Workload Optimizer, which intelligently distribute workloads across the infrastructure to maximize resource utilization and minimize energy waste.

- Software-Defined Storage Efficiency: HyperFlex utilizes software-defined storage (SDS) technology, which allows for more efficient use of storage resources compared to traditional storage solutions. Features such as deduplication, compression, and thin provisioning help to reduce the overall storage footprint, resulting in lower power consumption associated with storage devices.

- Integrated Management and Automation: HyperFlex platforms typically include centralized management and automation capabilities that enable administrators to efficiently monitor and control the entire infrastructure from a single interface. This integrated management approach can streamline operations, optimize resource usage, and identify opportunities for energy savings.

- Scalability and Right-Sizing: HyperFlex allows organizations to scale resources incrementally by adding server nodes to the cluster as needed. This scalability enables organizations to right-size their infrastructure and avoid over-provisioning, which can lead to unnecessary energy consumption.

- Efficient Cooling Design: HyperFlex systems are designed for efficient cooling, maintaining optimal operating temperatures for the hardware components. By optimizing airflow and cooling mechanisms within the infrastructure, HyperFlex helps minimize energy consumption associated with cooling systems.
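To see why the deduplication and compression mentioned in the list above translate into lower power draw, it helps to estimate how many physical drives a given amount of logical data requires. The following is a rough sketch under simplifying assumptions: the reduction ratios are invented, replication and metadata overhead are ignored, and the function names are hypothetical rather than part of any HyperFlex tooling.

```python
import math


def physical_capacity_tb(logical_tb, dedup_ratio, compression_ratio):
    """Physical capacity needed after deduplication and compression.

    Ratios are expressed as N:1, so 2.0 means the data shrinks by half.
    """
    if dedup_ratio < 1 or compression_ratio < 1:
        raise ValueError("reduction ratios must be at least 1.0")
    return logical_tb / (dedup_ratio * compression_ratio)


def drives_needed(capacity_tb, drive_tb):
    """Whole drives required to hold `capacity_tb`."""
    return math.ceil(capacity_tb / drive_tb)


# Hypothetical workload: 100 TB of logical data on 8 TB drives.
LOGICAL_TB = 100
DRIVE_TB = 8

reduced_tb = physical_capacity_tb(LOGICAL_TB, dedup_ratio=2.0, compression_ratio=1.25)
drives_with_reduction = drives_needed(reduced_tb, DRIVE_TB)
drives_without_reduction = drives_needed(LOGICAL_TB, DRIVE_TB)
print(reduced_tb, drives_with_reduction, drives_without_reduction)
```

Under these assumed ratios the data fits on 5 drives instead of 13, and every drive that is not installed is one that does not need to be powered or cooled. Actual reduction ratios depend heavily on the data being stored.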

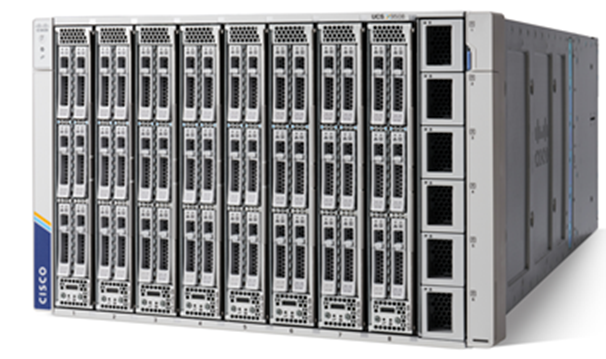

Cisco UCS X-Series Modular System

The Cisco UCS X-Series is a versatile and innovative computing platform that elevates the concept of a modular system to new heights, offering a flexible, future-ready solution for the modern data center. It stands apart from the traditional UCS B-Series blade servers, C-Series rack servers, S-Series storage servers, and even the integrated HyperFlex HX-Series hyperconverged systems, in that it provides a unique blend of adaptability and scalability. The X-Series is designed with a composable infrastructure that allows dynamic reconfiguration of computing, storage, and I/O resources to match specific workload requirements.

In terms of energy efficiency, the UCS X-Series is engineered to streamline power usage by dynamically adapting to the demands of various applications. It achieves this through a technology that allows components to be powered on and off independently, which can lead to significant energy savings compared to the always-on nature of B-Series and C-Series servers. While the S-Series servers are optimized for high-density storage, the X-Series can reduce the need for separate high-capacity storage systems by incorporating storage elements directly into its composable framework. Furthermore, compared to the HyperFlex HX-Series, the UCS X-Series may offer even more granular control over resource allocation, potentially leading to even better energy management and waste reduction.

The UCS X-Series platform aims to set a new standard for sustainability by optimizing power consumption across diverse workloads, minimizing environmental impact, and lowering the total cost of ownership (TCO) through improved energy efficiency. By intelligently consolidating and optimizing resources, the X-Series offers a forward-looking solution that responds to the growing need for eco-friendly and cost-effective data center operations.

The Cisco UCS X-Series can contribute to energy efficiency in the following ways:

- Integrated Architecture: Cisco UCS X-Series combines compute, storage, and networking into a unified system, reducing the need for separate components. This consolidation leads to lower overall energy consumption compared to traditional data center architectures.

- Energy-Efficient Components: The UCS X-Series is built with the latest energy-efficient technologies; CPUs, memory modules, and power supplies in the X-Series are selected for their performance-to-power consumption ratio, ensuring that energy use is optimized without sacrificing performance.

- Intelligent Workload Placement: Cisco UCS X-Series can use Cisco Intersight and other resource management tools to distribute workloads efficiently across available resources, optimizing power usage and reducing unnecessary energy expenditure.

- Software-Defined Storage Benefits: The X-Series can leverage software-defined storage, which often includes features like deduplication, compression, and thin provisioning, to make storage operations more efficient and reduce the energy needed for data storage.

- Automated Management: With Cisco Intersight, the X-Series provides automated management and orchestration across the infrastructure, helping to streamline operations, reduce manual intervention, and cut down on energy usage through improved allocation of resources.

- Scalable Infrastructure: The modular design of the UCS X-Series makes it easy to scale, so organizations can add resources only as needed. This helps prevent over-provisioning and the energy costs associated with idle equipment.

- Optimized Cooling: The X-Series chassis is designed with cooling efficiency in mind, using advanced airflow management and heat sinks to keep components at optimal temperatures. This reduces the amount of energy needed for cooling infrastructure.
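One way to picture the workload placement idea from the list above is as a packing problem: place workloads onto as few hosts as possible so that the remaining hosts can be powered down or kept in a low-power state. The sketch below uses the classic first-fit decreasing heuristic. It is an illustrative stand-in, not the method Cisco Intersight uses, and the workload sizes (expressed as a percentage of one host's capacity) are hypothetical.

```python
def consolidate(workloads_pct, host_capacity_pct=100):
    """Pack workloads onto hosts using first-fit decreasing.

    workloads_pct     -- workload sizes as integer percentages of one host
    host_capacity_pct -- usable capacity of each host, in the same units
    Returns a list of hosts, each a list of the workloads placed on it.
    """
    hosts = []
    # Placing the largest workloads first tends to leave fewer gaps.
    for load in sorted(workloads_pct, reverse=True):
        if load > host_capacity_pct:
            raise ValueError(f"workload {load} exceeds host capacity")
        for host in hosts:
            if sum(host) + load <= host_capacity_pct:
                host.append(load)  # fits on an already active host
                break
        else:
            hosts.append([load])   # no active host has room; start a new one
    return hosts


# Six hypothetical workloads that might otherwise each occupy their own host.
placement = consolidate([20, 50, 30, 40, 20, 30])
print(len(placement), placement)
```

In this example the six workloads fit on two hosts. A production scheduler would also weigh memory, I/O, affinity rules, and failover headroom, which this sketch deliberately leaves out.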

Mindful energy consumption without compromise

Cisco’s UCS offers a robust and diverse suite of server solutions, each engineered to address the specific demands of modern-day data centers with a sharp focus on energy efficiency. The UCS B-Series and C-Series each bring distinct advantages in terms of integration, computing density, and flexible scalability, while the S-Series specializes in high-density storage capabilities. The HyperFlex HX-Series advances the convergence of compute, storage, and networking, streamlining data center operations and energy consumption. Finally, the UCS X-Series represents the pinnacle of modularity and future-proof design, delivering unparalleled flexibility to dynamically meet the shifting demands of enterprise workloads.

Across this entire portfolio, from the B-Series to the X-Series, Cisco has infused an ethos of sustainability, incorporating energy-efficient hardware, advanced power management, and intelligent cooling designs. By optimizing the use of resources, embracing virtualization, and enabling scalable, granular infrastructure deployments, Cisco’s UCS platforms are not just powerful computing solutions but are also catalysts for energy-conscious, cost-effective, and environmentally responsible data center operations.

For organizations navigating the complexities of digital transformation while balancing operational efficiency with the goal of sustainability, the Cisco UCS lineup stands ready to deliver performance that powers growth without compromising on a commitment to a greener future.