Simplicity is the ultimate sophistication. – Leonardo da Vinci

For IT, complexity is the antithesis of agility. Yet increased demand for remote healthcare, distance learning, and hybrid work, along with surging dependence on online retail, is driving an urgent shift to hybrid and cloud-native applications to support the necessary digital transformations. That shift adds complexity.

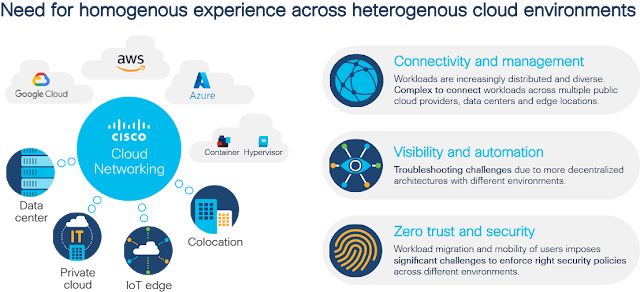

Hybrid cloud is now the reality for nearly all enterprises. Workloads are distributed across on-premises, edge, and public clouds. However, operating hybrid cloud applications seamlessly across distributed environments means meeting stringent location-dependent requirements such as low latency, regional data compliance, and resiliency. Networking teams must also adhere to governance requirements for compliance, security, and availability, which adds to the complexity. Visibility and insights close to where data is created and processed, whether on-premises, in the cloud, or at the edge, are also critical.

Hybrid Cloud Networking Challenges

How does an operations team deal with this complex new hybrid cloud networking reality? It needs three operational capabilities:

◉ Obtain a unified, correlated, and comprehensive view of the infrastructure.

◉ Gain the ability to respond proactively across people, process, and technology silos.

◉ Deliver at the speed of business without increasing operating costs or tool sprawl.

Keeping applications and networks in sync is a multidimensional challenge for IT. As the scope of NetOps and DevOps roles keeps expanding, teams need an automation toolset that accelerates hybrid cloud operations and securely manages the expansion from on-premises to cloud.

Flexible Hybrid Cloud Networking with Cisco Nexus Dashboard

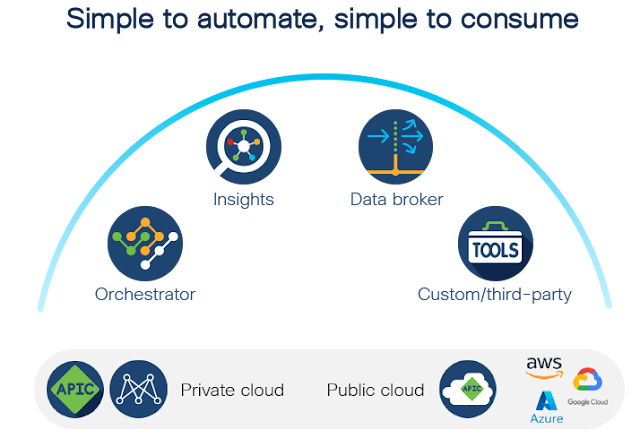

Cisco Nexus Dashboard 2.1, the newest of Cisco's cloud networking platform innovations, helps IT simplify the transition to hybrid applications using a single agile platform. Besides bridging the gap in tooling, one of its major capabilities is a flexible operational model for different personas (NetOps, DevOps, SecOps, and CloudOps) across a wide range of use cases.

Cisco Nexus Dashboard: One Scalable, Extensible Platform Across Global Hybrid Infrastructure

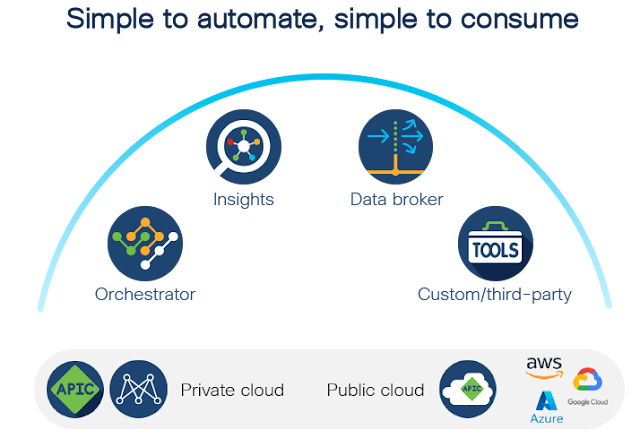

Conventionally, operators relied on disjointed tools for specific functions across connectivity, visibility, and security. By natively integrating multiple capabilities, as well as third-party services, into Cisco Nexus Dashboard, Cisco is simplifying the overall experience for IT.

Operators can now manage their hybrid cloud network infrastructure from a single automation and operations platform, Cisco Nexus Dashboard, whether they run Cisco Application Centric Infrastructure (ACI) or Cisco Nexus Dashboard Fabric Controller (NDFC).

New capabilities in Nexus Dashboard 2.1 include availability on the AWS and Azure marketplaces; Nexus Dashboard One View, which provides a single cohesive view of all the sites being managed and the services installed across Nexus Dashboard clusters; advanced endpoint analytics; scalable connectivity through Nexus Dashboard Orchestrator (NDO); Nexus Dashboard Insights (NDI); the Nexus Dashboard Data Broker (NDDB) service; and more. Let's look at five capabilities of Cisco Nexus Dashboard 2.1 that are delighting customers.

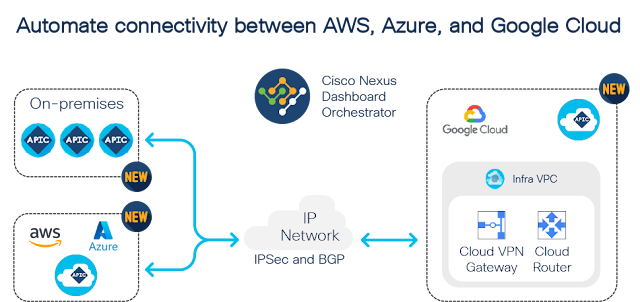

1. Hybrid Cloud Connectivity at Scale with Nexus Dashboard Orchestrator

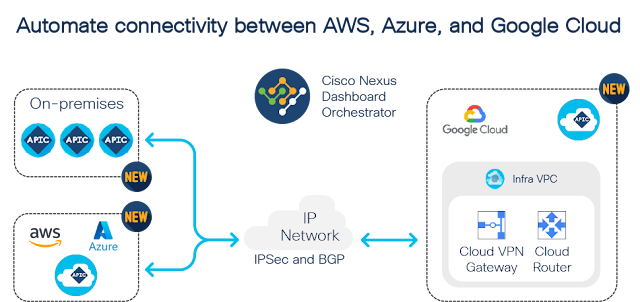

New hybrid cloud capabilities include support for Google Cloud, in addition to the AWS and Azure integrations, and connectivity automation that enables new use cases such as:

◉ External Connectivity: Cloud VPCs/VNets to external devices (branch routers, SD-WAN edges, colocation routers, or on-premises routers)

◉ Hybrid Cloud Connectivity: Automated connectivity between GCP, AWS, and Azure clouds and on-premises ACI sites using BGP and IPsec

◉ Stitching Connectivity: Cloud VPCs/VNets and on-premises VRFs, including route management

Connectivity is established through BGP peering and IPsec tunnels that connect the cloud site's Cloud Services Routers (CSRs), or Google Cloud's native Cloud Router, to the external devices. Once connectivity is in place, IT can configure route leaking so that subnets at the external sites can reach the cloud site's VPCs/VNets.
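To make the route-leak step concrete, here is a minimal sketch assuming a simplified model: routes learned from external sites over BGP are imported into a VPC/VNet route table only if they fall inside a prefix the operator has chosen to leak. The `Route` type, the `leak_routes` function, the tunnel labels, and the policy format are all hypothetical; NDO configures this declaratively and this is not its implementation.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    prefix: ipaddress.IPv4Network
    next_hop: str  # hypothetical label for the IPsec tunnel/BGP peer the route came from


def leak_routes(external_routes, leak_policy):
    """Return the external routes permitted into the VPC/VNet route table.

    A route is leaked if its prefix is contained in (a subnet of) any
    prefix listed in the leak policy; everything else stays out.
    """
    allowed = [ipaddress.ip_network(p) for p in leak_policy]
    return [r for r in external_routes if any(r.prefix.subnet_of(a) for a in allowed)]


# Routes learned from external sites (illustrative values)
learned = [
    Route(ipaddress.ip_network("10.10.1.0/24"), "ipsec-tunnel-1"),
    Route(ipaddress.ip_network("10.20.0.0/16"), "ipsec-tunnel-1"),
    Route(ipaddress.ip_network("192.168.5.0/24"), "ipsec-tunnel-2"),
]
policy = ["10.10.0.0/16", "192.168.5.0/24"]

for r in leak_routes(learned, policy):
    print(r.prefix, "via", r.next_hop)
```

Filtering on containment rather than exact match lets one broad policy entry admit all of a site's more specific subnets, while anything outside the policy (here `10.20.0.0/16`) never reaches the cloud route table.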

2. Change Management Workflow with Nexus Dashboard Orchestrator

In a modern enterprise IT team, there are typically multiple personas involved from design to deployment. The design team (Designer persona) can create and edit Nexus Dashboard Orchestrator templates and send them to the deployment team (Approver/Deployer persona) for approval. The deployment team reviews and approves templates ahead of a change management window and queues them for deployment during the actual change management window.

Starting with Nexus Dashboard Orchestrator release 3.4(1), the latest version, a structured, persona-based change management workflow provides additional operational flexibility. Three template management roles are available: Designer, Approver, and Deployer. An admin can assume one of these roles or a combination of them.

◉ Designers: Create and edit template application policies and send them to Approvers for review and approval.

◉ Approvers: Review the templates and either approve them for deployment or reject the proposed changes, sending them back to the Designer with comments for updating the template.

◉ Deployers: Deploy templates or initiate a rollback to a previous template version.
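The role split above amounts to a small state machine with role checks on each transition. The sketch below models it under stated assumptions: the state names (`DRAFT`, `PENDING_APPROVAL`, and so on), the action strings, and the `Template` class are hypothetical and are not NDO's actual states or API. It only illustrates how "one admin, several roles" and "reject sends it back to the Designer" fit together.

```python
from enum import Enum, auto


class Role(Enum):
    DESIGNER = auto()
    APPROVER = auto()
    DEPLOYER = auto()


class State(Enum):
    DRAFT = auto()
    PENDING_APPROVAL = auto()
    APPROVED = auto()
    DEPLOYED = auto()


# (current state, action) -> (role required, next state); a hypothetical model
TRANSITIONS = {
    (State.DRAFT, "submit"): (Role.DESIGNER, State.PENDING_APPROVAL),
    (State.PENDING_APPROVAL, "approve"): (Role.APPROVER, State.APPROVED),
    (State.PENDING_APPROVAL, "reject"): (Role.APPROVER, State.DRAFT),
    (State.APPROVED, "deploy"): (Role.DEPLOYER, State.DEPLOYED),
    (State.DEPLOYED, "edit"): (Role.DESIGNER, State.DRAFT),
}


class Template:
    def __init__(self, name):
        self.name = name
        self.state = State.DRAFT
        self.comments = []  # (action, comment) pairs, e.g. an Approver's rejection notes

    def act(self, action, roles, comment=None):
        """Perform `action` if the caller holds the required role.

        `roles` is a set because one admin may hold several roles.
        """
        key = (self.state, action)
        if key not in TRANSITIONS:
            raise ValueError(f"cannot {action!r} a template in state {self.state.name}")
        required, next_state = TRANSITIONS[key]
        if required not in roles:
            raise PermissionError(f"{action!r} requires the {required.name} role")
        if comment:
            self.comments.append((action, comment))
        self.state = next_state


t = Template("web-tier-policy")
t.act("submit", {Role.DESIGNER})
t.act("reject", {Role.APPROVER}, comment="Missing contract for port 443")
t.act("submit", {Role.DESIGNER})
t.act("approve", {Role.APPROVER})
t.act("deploy", {Role.DEPLOYER})
print(t.state.name)  # DEPLOYED
```

Keeping the allowed transitions in one table makes the policy easy to audit: any action not listed, such as deploying an unapproved draft, is rejected before the role check even runs.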

When Approvers review templates, they get a GitHub-style "diff view" that compares the before and after versions, making it easy to review, approve, reject, and comment on the differences.

Deployers have two additional new capabilities for effective change management operations:

◉ Configuration preview: A preview of the exact configuration that will be deployed to the sites, shown both as the XML POST payload and as a graphical view, so the Deployer can decide whether to proceed with or abort the deployment.

◉ Template versioning / rollback: Each template is automatically versioned on save or deploy, giving the Deployer the ability to roll back to previous template versions. During a rollback, the Deployer can see the GitHub-style diff between the two versions and decide whether to proceed.
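Automatic versioning plus a diff view can be sketched with Python's standard `difflib`. This is a minimal sketch assuming templates can be serialized as JSON; the `VersionedTemplate` class and its methods are hypothetical, and the design choice of implementing rollback as "re-save the old version as the newest one" (so history is never rewritten) is ours, not necessarily NDO's.

```python
import difflib
import json


def _serialize(content):
    # Stable, line-oriented text so diffs are meaningful; trailing newline keeps diff lines clean
    return json.dumps(content, indent=2, sort_keys=True) + "\n"


class VersionedTemplate:
    """Hypothetical template store: every save appends an immutable snapshot."""

    def __init__(self, content):
        self.versions = [_serialize(content)]

    def save(self, content):
        self.versions.append(_serialize(content))
        return len(self.versions)  # 1-based version number

    def diff(self, old, new):
        """Unified (git-style) diff between two 1-based version numbers."""
        a = self.versions[old - 1].splitlines(keepends=True)
        b = self.versions[new - 1].splitlines(keepends=True)
        return "".join(difflib.unified_diff(a, b, fromfile=f"v{old}", tofile=f"v{new}"))

    def rollback(self, target):
        """Restore an earlier version by re-saving it as the newest version."""
        self.versions.append(self.versions[target - 1])
        return len(self.versions)


tmpl = VersionedTemplate({"vrf": "prod", "epgs": ["web", "app"]})
tmpl.save({"vrf": "prod", "epgs": ["web", "app", "db"]})
print(tmpl.diff(1, 2))   # what an Approver would review
v3 = tmpl.rollback(1)    # Deployer returns to version 1...
print(tmpl.diff(2, v3))  # ...after reviewing what the rollback undoes
```

Sorting keys before serializing matters: without it, two logically identical templates could produce spurious diffs just because their fields were saved in a different order.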

Because Nexus Dashboard Orchestrator change management is fully API-based, IT can integrate the workflow with the in-house tools it already uses.

3. Unify Hybrid Cloud Operations with Nexus Dashboard One View

With Nexus Dashboard 2.1, IT can operate a distributed environment spanning multiple clusters from a single point of control, with visibility that extends into each fabric. The scale-out architecture adapts to growing operational needs, while One View provides a single-pane-of-glass experience with support for single sign-on (SSO) and role-based access control (RBAC). This lets operators consume the insights, advisory, and assurance stack as a unified offering that addresses prevention, diagnosis, and remediation.

Cisco Nexus Dashboard One View

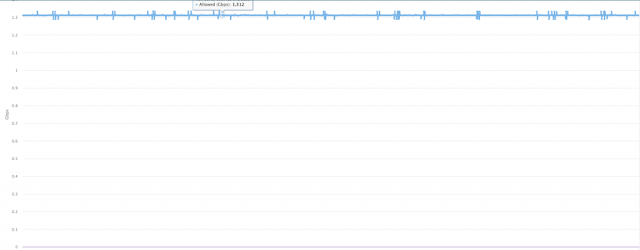

Nexus Dashboard 2.1 takes network traffic visibility up a notch with support for flow drops, which lets IT identify where packets are being dropped in the network and why. Flows affected by switch events such as buffer drops, policer drops, forwarding drops, and ACL drops are identified using Flow Table Events (FTE).
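The value of flow-drop data is answering "where" and "why" at a glance. The sketch below shows one way such events could be rolled up by location and reason, assuming a hypothetical `DropEvent` record shape; it is not the actual FTE export format or how Nexus Dashboard Insights processes it.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class DropEvent:
    # Hypothetical record shape, not the actual FTE export format
    flow: tuple      # (src_ip, dst_ip, protocol, src_port, dst_port)
    switch: str
    interface: str
    reason: str      # e.g. "buffer", "policer", "forwarding", "acl"


def summarize_drops(events):
    """Count distinct affected flows per (switch, interface, reason), most frequent first."""
    seen = set()
    counts = Counter()
    for e in events:
        location = (e.switch, e.interface, e.reason)
        if (location, e.flow) in seen:
            continue  # repeated events for the same flow are counted once
        seen.add((location, e.flow))
        counts[location] += 1
    return counts.most_common()


events = [
    DropEvent(("10.0.0.1", "10.0.1.5", "tcp", 51514, 443), "leaf-101", "eth1/7", "acl"),
    DropEvent(("10.0.0.1", "10.0.1.5", "tcp", 51514, 443), "leaf-101", "eth1/7", "acl"),
    DropEvent(("10.0.0.2", "10.0.1.5", "tcp", 40000, 443), "leaf-101", "eth1/7", "acl"),
    DropEvent(("10.0.0.9", "10.0.2.8", "udp", 5000, 53), "spine-201", "eth1/1", "buffer"),
]
for location, flows in summarize_drops(events):
    print(location, flows)
```

Counting distinct flows rather than raw events keeps one noisy flow from dominating the summary.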

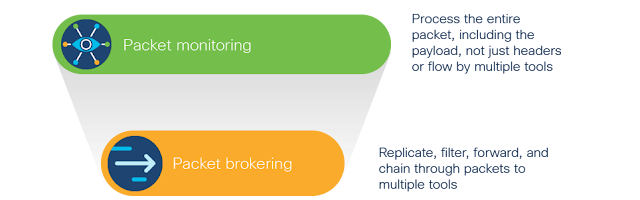

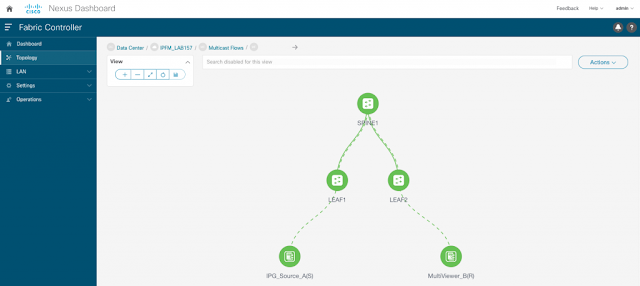

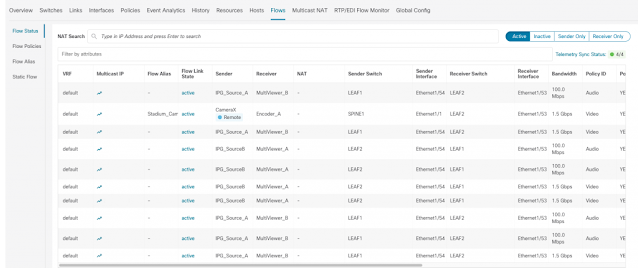

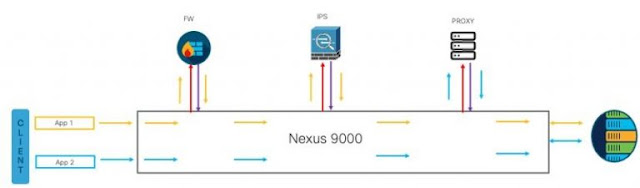

Cisco Nexus Dashboard Data Broker

In addition, Cisco Nexus Dashboard Data Broker (NDDB), one of the newest Nexus Dashboard services, facilitates visibility by filtering aggregated traffic and forwarding the traffic of interest to analysis tools. It is a multi-tenant-capable solution that can be used with both Cisco Nexus and Cisco Catalyst fabrics.
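The core idea of a traffic broker (match aggregated traffic against filters, then copy each match to the tools that want it) can be sketched in a few lines. Everything here is a hypothetical model: the `Packet` and `FilterRule` types, the rule fields, and the tool names are illustrative and do not reflect NDDB's configuration model.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    protocol: str
    dst_port: int


@dataclass(frozen=True)
class FilterRule:
    # None means "match any" for that field (hypothetical rule model)
    dst_subnet: Optional[str] = None
    protocol: Optional[str] = None
    dst_port: Optional[int] = None
    tools: tuple = ()  # names of analysis tools that should receive matching traffic

    def matches(self, pkt):
        if self.dst_subnet and ipaddress.ip_address(pkt.dst) not in ipaddress.ip_network(self.dst_subnet):
            return False
        if self.protocol and pkt.protocol != self.protocol:
            return False
        if self.dst_port is not None and pkt.dst_port != self.dst_port:
            return False
        return True


def broker(packets, rules):
    """Copy each packet once to every tool named by any matching rule; unmatched traffic is dropped."""
    deliveries = {}
    for pkt in packets:
        targets = {tool for rule in rules if rule.matches(pkt) for tool in rule.tools}
        for tool in sorted(targets):
            deliveries.setdefault(tool, []).append(pkt)
    return deliveries


rules = [
    FilterRule(dst_subnet="10.1.0.0/16", protocol="tcp", dst_port=443, tools=("ids",)),
    FilterRule(protocol="udp", dst_port=53, tools=("dns-analyzer", "ids")),
]
packets = [
    Packet("192.168.1.10", "10.1.2.3", "tcp", 443),
    Packet("192.168.1.11", "10.9.0.1", "tcp", 443),  # outside 10.1.0.0/16: not forwarded
    Packet("192.168.1.12", "8.8.8.8", "udp", 53),
]
out = broker(packets, rules)
print({tool: len(pkts) for tool, pkts in out.items()})
```

Collecting target tools into a set per packet means a packet matching several rules still reaches each tool only once, which keeps tool load predictable.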

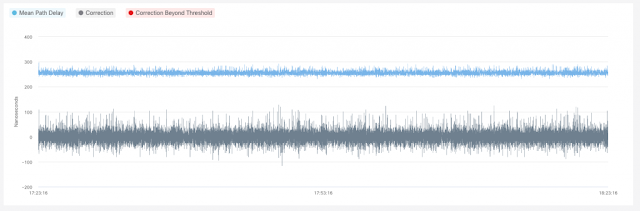

4. Predictive Change Management with Nexus Dashboard Insights

IT can now predict the impact of the intended configuration changes to reduce risk.

◉ Test and validate proposed configurations before rolling out the changes

◉ Proactive checks to prevent compliance violations, while minimizing downtime and Total Cost of Ownership

◉ Continuous assurance to address compliance and security posture
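Predictive change management boils down to evaluating a proposed change against policy before it touches the network. The toy sketch below shows that "validate first, then roll out" pattern, assuming a hypothetical model in which fabric policy is a set of (consumer, provider, port) contracts and compliance rules are predicates over that set. The names `Rule`, `apply_change`, and `predict_violations`, and the example rules, are illustrative only; this is not how Nexus Dashboard Insights works internally.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, Tuple

Contract = Tuple[str, str, int]  # (consumer EPG, provider EPG, port), a hypothetical model


@dataclass(frozen=True)
class Rule:
    name: str
    is_compliant: Callable[[FrozenSet[Contract]], bool]


def apply_change(contracts, add=(), remove=()):
    """Return the would-be contract set without modifying the live one."""
    return frozenset((set(contracts) - set(remove)) | set(add))


def predict_violations(contracts, rules, add=(), remove=()):
    """Names of the rules the proposed change would violate."""
    proposed = apply_change(contracts, add, remove)
    return [r.name for r in rules if not r.is_compliant(proposed)]


rules = [
    # Security/compliance: guest traffic must never reach the PCI zone
    Rule("guest must not reach pci", lambda cs: not any(c == "guest" and p == "pci" for c, p, _ in cs)),
    # Availability: removing this contract would cause an outage
    Rule("web must reach app on 8080", lambda cs: ("web", "app", 8080) in cs),
]
live = frozenset({("web", "app", 8080), ("app", "db", 5432)})

print(predict_violations(live, rules, add=[("guest", "pci", 443)]))
# ['guest must not reach pci']
print(predict_violations(live, rules, remove=[("web", "app", 8080)]))
# ['web must reach app on 8080']
print(predict_violations(live, rules, add=[("app", "cache", 6379)]))
# []
```

Working on an immutable copy of the configuration is the key design choice: a rejected change never has to be undone, because it was never applied.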


5. Nexus Dashboard APIs: Automation and Operational Agility for NetOps and DevOps

Cisco Nexus Dashboard now exposes a rich suite of services through APIs that third-party developers can use to build custom apps and integrations. Nexus Dashboard APIs enable automation of intent using policy, lifecycle management, and governance with a common workflow. For example, IT can integrate ITSM and SIEM solutions through the ServiceNow and Splunk apps available for Nexus Dashboard.

The HashiCorp Terraform and Red Hat Ansible modules published for Nexus Dashboard enable DevOps, CloudOps, and NetOps teams to drive infrastructure automation, maintain network configuration as code, and embed infrastructure configuration in the CI/CD pipeline for operational agility.

Our Customers Love Nexus Dashboard, and You Will Too!

As a unified, simple-to-use automation and operations platform, Cisco Nexus Dashboard is the focal point that customers such as T-Systems can use to build, operate, monitor, troubleshoot, and manage their hybrid cloud networking infrastructure.

Are You Ready for Simplicity?

In IT operations, network automation is the key to simplifying hybrid cloud complexity, meeting KPIs, and increasing ROI. Incorporating the needs of NetOps, DevOps, SecOps, and CloudOps for full lifecycle operations is table stakes for making this a reality. The latest updates to Cisco Nexus Dashboard deliver the simplicity IT operations teams expect, making it a trusted partner in their digital transformation journey.

Source: cisco.com