Optimize Usage of Available Interfaces, Bandwidth, Connectivity

Dual-homing for endpoints is a common requirement, and many Multi-Chassis Link Aggregation (MC-LAG) solutions were built to address this need. Within the Cisco Nexus portfolio, the virtual Port-Channel (vPC) architecture addressed this need from the very early days of NX-OS. With VXLAN, vPC was enhanced to accommodate the needs for dual-homed endpoints in network overlays.

With EVPN becoming the de-facto standard control-plane for VXLAN, additions to vPC for VXLAN BGP EVPN were required. While the problem space of End-Point Multi-Homing changes, vPC for VXLAN BGP EVPN changes and faces the new requirements and use-cases. The latest innovation in vPC optimizes the usage of the available interfaces, bandwidth and overall connectivity – vPC with Fabric Peering removes the need for dedicating a physical Peer Link and changes how MC-LAG is done. VPC with Fabric Peering is shipping in NX-OS 9.2(3).

Active-Active Forwarding Paths in Layer 2, Default Gateway to Endpoints

At Cisco, we continually innovate on our data center fabric technologies, iterating from traditional Spanning-Tree to virtual Port-Channel (vPC), and from Fabric Path to VXLAN.

Traditional vPC moved infrastructures past the limitations of Spanning-Tree and allow an endpoint to connect to two different physical Cisco Nexus switches using a single logical interface – a virtual Port-Channel interface. Cisco vPC offers an active-active forwarding path not only for Layer 2 but also inherits this paradigm for the first-hop gateway function, providing active-active default gateway to the endpoints. Because of the merged existence of two Cisco Nexus switches, Spanning-Tree does not see any loops, leaving all links active.

vPC for VXLAN BGP EVPN

When vPC was expanded to support VXLAN and VXLAN BGP EVPN environments, Anycast VTEP was added. Anycast VTEP is a shared logical entity, represented with a Virtual IP address, across the two vPC member switches. With this minor increment, the vPC behavior itself hasn’t changed. Anycast VTEP integrates the vPC technology into the new technology paradigm of routed networks and overlays. Such an adjustment had been done previously within FabricPath. In that situation, a Virtual Switch ID was used – another approach for a common shared virtual entity represented to the network side.

While vPC to was enhanced to accommodate different network architectures and protocols, the operational workflow for customers remained the same. As a result, vPC was widely adopted within the industry.

With VXLAN BGP EVPN being a combined Layer 2 and Layer 3 network, where both host and prefix routing exists, the need for MAC, IP and prefix state information is required – in short, the exchange of routing information next to MAC and ARP/ND. To relax a hard routing table and the sync between vPC member, a selective condition for routing advertisement was introduced, “advertise-pip”. With the addition of “advertise-pip”, the selective advertisement of BGP EVPN prefix routes was changed and now advertised from the individual vPC member nodes and its Primary IP (PIP) instead of the shared Virtual IP (VIP). This had the result that unnecessary routing traffic was kept off the vPC Peer Link and instead derived directly to the correct vPC member node.

While many enhancements for convergence and traffic optimization went into vPC for VXLAN BGP EVPN, many implicit changes came with additional configuration accommodating the vPC Peer Link; at this point Cisco decided to change this paradigm of using a physical Peer Link.

The vPC Peer Link

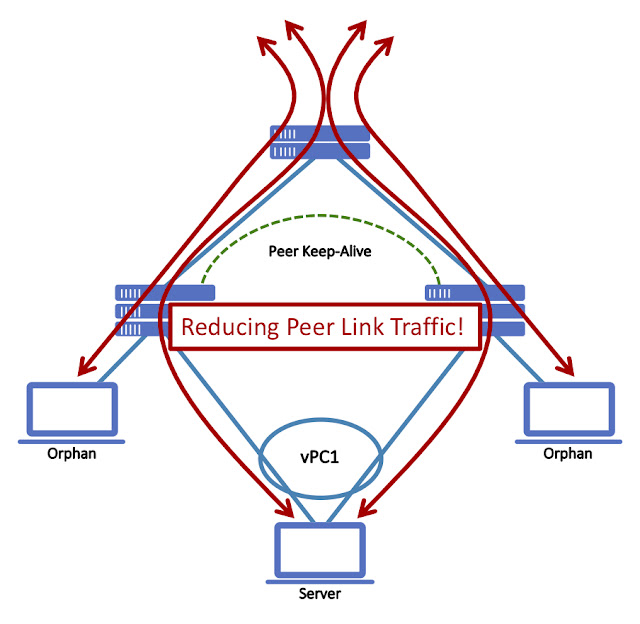

The vPC Peer Link is the binding entity that pairs individual Switches into a vPC domain. This link is used to synchronize the two individual Switches and assists Layer 2 control-plane protocols, like BPDUs or LACP, as it would come from one single Node. In the cases where End-Points are Dual-Homed to both vPC member switches, the Peer Links sole purpose is to synchronize the state information as described before, but in cases of single-connected End-Points, so called Orphans, the vPC Peer Link can still potentially carry traffic.

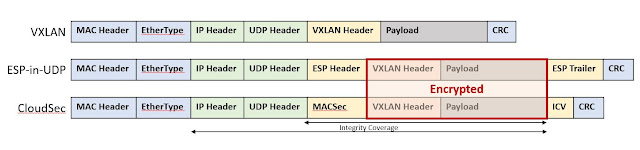

With VXLAN BGP EVPN, the Peer Link was required to support additional duties and provided additional signalization when Multicast-based Underlays were used. Further, the vPC Peer Link was used as a backup routing instance in the case of an extended uplink failure towards the Spines or for the per-VRF routing information exchange for orphan networks.

With all these various requirements, it was a given requirement for making the vPC Peer Link resilient, with Cisco’s recommendation to have at least two or more physical interfaces dedicated for this role.

The aim to simplify topologies and the unique capability of the Cisco Nexus 9000 CloudScale ASICs led to the removal of the physical vPC Peer Link requirement. This freed at least two physical interfaces, increasing interface capacity by nearly 5%.

vPC with Fabric Peering

While changes and adjustment to an existing architecture can always be made, sometimes a more dramatical shift has to be considered. When vPC with Fabric Peering was initially discussed, the removal of the physical vPC Peer Link was the objective but rapidly other improvements came to mind. As such, vPC with Fabric Peering follows a different forwarding paradigm by keeping the operational consistency for vPC intact. The following four sections cover the key architecture principals for vPC with Fabric Peering.

Keep existing vPC Features

As we enhanecd vPC with Fabric Peering, we wanted to ensure that existing features are not being affected. Special focus was added to ensure the availability of Border Leaf functionality with external routing peering, VXLAN OAM and Tenant Routed Multicast (TRM).

Benefits to your Network Design

Every interface has a cost and so every Gigabyte counts. By relaxing the physical vPC Peer Link, we not only achieve architecture fidelity but also return interface and optical cost as well as optimizing the available bandwidth.

Leveraging Leaf/Spine topologies and respective N-way Spines, the available path between any 2 Leafs becomes ECMP and as such, a potential candidate for the vPC Fabric Peering. With all Spines now sharing VXLAN BGP EVPN Leaf to Leaf or East-to-West communication and vPC Fabric Peering, the overall use of provisioned bandwidth becomes more optimized. Given that all links are shared, the increased resiliency for the vPC Peer Link is equal to the resiliency of Leaf to Spine connectivity. This is a significant increase compared to the two physical direct links between two vPC members.

With the infrastructure between the vPC members now shared, the proper classification of vPC Peer Link vs. general fabric payload has to be considered. In foresight of this, the vPC Fabric Peering has the ability to be classified with a high DSCP marking to ensure in-time delivery.

Overview: vPC with Fabric Peering

Another important cornerstone of vPC was the Peer Keep Alive functionality. vPC with Fabric Peering keeps the important failsafe functions in place but relaxes the requirement of using a separate physical link. The vPC Peer Keep Alive can now be over the Spine infrastructure in parallel to the virtual Peer Link. As an alternative and to increase the resiliency, the vPC Peer Keep Alive can still be deployed over the out-of-band management network or any other routed network of choice between the vPC member nodes.

In addition to the vPC Peer Keep Alive, the tracking of the uplinks towards the Spines has been introduced to more deterministically understand the topology. As such the uplink tracking will create a dependency on the vPC primary function and respectively switch the operational primary role depending on the vPC members availability in the fabric.

Focus on individual VTEP behavior

The primary use-case for vPC has always been for dual-homed End-Points. However, with this approach, single attached End-Points (orphans) were treated like 2nd class citizen where the vPC Peer Link allowed reachability.

When vPC with Fabric Peering was designed, unnecessary traffic over the “virtual” Peer Link should be avoided by any means and also the need for per-VRF peering over the same.

With this decision, orphan End-Points become a 1st class citizen similar as dual-homed End-Points are and the exchange of routing information should be done through BGP EVPN instead of per-VRF peering.

Traffic Flow Optimization for vPC and Orphan Host

When using vPC with Fabric Peering, orphan End-Points and networks connected to individual vPC member are advertised from the VTEPs Primary IP address aka PIP; in vPC with physical Peer Link it would always use the Virtual IP (VIP). With the PIP approach, the forwarding decision from and to this orphan End-Point/network will be resolved as part of the BGP EVPN control-plane and forwarded with VXLAN data-plane. The forwarding paradigm of these orphan End/Point/network is the same as it would be with an individual VTEP; the dependency on the vPC Peer Link has been removed. As an additional benefit, consistent forwarding is archived for orphan End-Point/Network connected to an individual VTEP or a vPC domain with Fabric Peering. You could consider that vPC member node existing in vPC with Fabric Peering behaves primarily as an individual VTEP or “always-PIP” for orphan MAC/IP or IP Prefixes.

vPC where vPC is needed

With the paradigm shift to primarily operate an individual vPC member node as a standalone VTEP, the dual-homing functionality has to only be given to specific attachment circuits. As such, the functionality of vPC only comes into play when the vPC keyword has been used on the attachment circuit. In the case for vPC attachment, the End-Point advertisement would be originated with the Virtual IP Address (VIP) of the Anycast VTEP. Leveraging this shared VIP, routed redundancy from the fabric side is achieved with extremely fast ECMP failover times.

In the case of traditional vPC, the vPC Peer Link was also used during failure cases of an End-Points dual attachment. As the advertisement of a previous dual-attached End-Point doesn’t change from VIP to PIP during failures, the need for a Peer Link equivalent function is required. In the case traffic follows the VIP and get hashed towards the wrong vPC member node, the one with the failed link, the respective vPC member node will bounce the traffic the other vPC member.

Traffic redirected in vPC failure cases

vPC with Fabric Peering is shipping as per NX-OS 9.2(3)

Benefits

These enhancements have been delivered without impacting existing vPC features and functionality in lock-step with the same scale and sub-second convergence as existing vPC deployments achieved.

While the addition of new features and functions is simple, having an easy migration path is fundamental to deployment. Knowing this, the impact considerations for upgrades, side grades or migration remains paramount – and changing from vPC Peer Link to vPC Fabric Peering can be easily performed.

vPC with Fabric Peering was primarily designed for VXLAN BGP EVPN networks and is shipping in NX-OS 9.2(3). Even so, this architecture can be equally applied to most vPC environment, as long as routed Leaf/Spine topology exists.