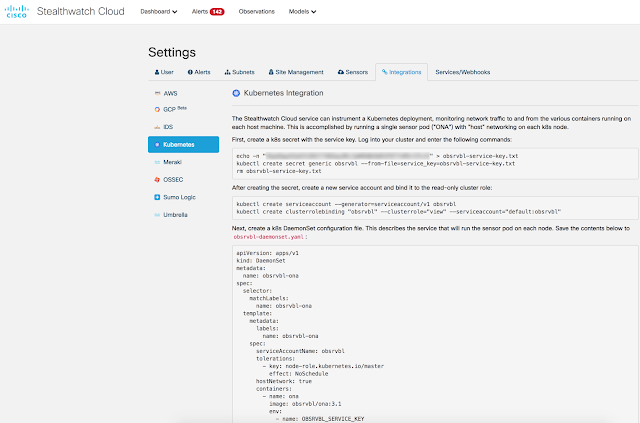

Stealthwatch Cloud is best known for network behavioral anomaly detection and entity modeling, but the network visibility it provides goes well beyond these two capabilities. The underlying traffic dataset is an incredibly accurate record of every network conversation that has taken place across your global network. This includes traffic at remote locations and deep in the access layer, which is far more pervasive coverage than sensor-based solutions could provide.

Stealthwatch Cloud can perform policy and segmentation auditing in an automated, set-it-and-forget-it fashion. This allows security staff to detect policy violations across firewalls and hardened segments, as well as applications forbidden on user endpoints. I like to call this setting virtual “tripwires” all over your network, unbeknownst to users, by leveraging your entire network infrastructure as a giant security sensor grid. You cannot hide from the network…therefore you cannot hide from Stealthwatch Cloud.

Here is how we set this framework up and put it into action!

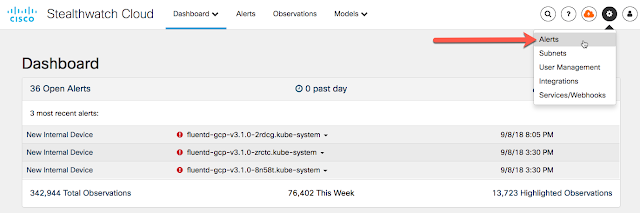

1. Navigate to Alerts from the main dashboard under the gear icon:


2. Click Configure Watchlists on the Settings screen:

3. Click Internal Connection Blacklist on the Watchlist Config screen:

4. Here are your options:

5. From here you’ll want to fill out the above form as such:

Name: Whatever you’d like to call this rule, for example “Prohibited outbound RDP” or “permitted internal RDP”

Source IP: Source IP address or CIDR range

Source Block Size: CIDR notation block size, for example (0, 8, 16, 24, etc.)

Source Ports: Typically this is left blank as the source ports are usually random ephemeral ports but you have the option if you require a specific source port to trigger the alert.

Destination IP: Target IP Address or CIDR range

Destination Block Size: CIDR notation block size, for example (0, 8, 16, 24, etc.)

Destination Ports: The target port traffic you wish to allow or disallow, for example (21, 3389, etc)

Connections are Allowed checkbox: Check this if this is the traffic you’re going to permit. This is used in conjunction with a second rule to specify all other traffic that’s not allowed.

Reason: Enter a user friendly description of the intent for this rule.

6. Click Add to make the rule active.

7. Here’s an example of a set of rules both permitting and denying traffic on Remote Desktop over TCP 3389:

1. Permit rule:

2. Deny Rule:

8. Resulting Alert set:

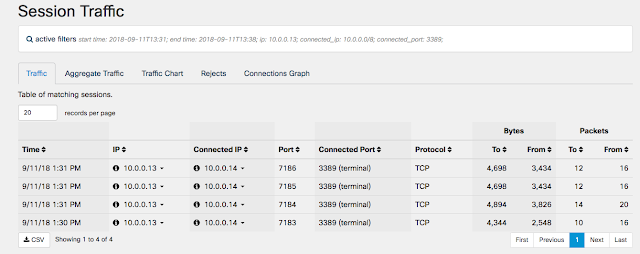

9. Now to test this new ruleset, I will attempt two RDP connections within my Lab. The first will be a lateral connection to another host on the 10.0.0.0/8 subnet and the second to an external IP residing on the public Internet.

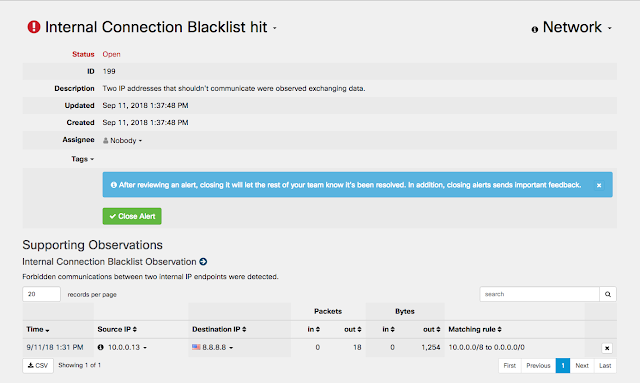

10. Here is the resulting observation that triggered:

11. And the resulting Alert:

12. You can also see the observed ALLOWED traffic from my lateral RDP testing. This traffic did not trigger any observation or alert:
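To make the permit/deny pairing from steps 5 through 9 concrete, here is a minimal Python sketch of how such a rule pair could be reasoned about offline. It is not Stealthwatch Cloud's implementation or API. The `WatchlistRule` class, the `evaluate` function, the exact rule values, the test addresses, and the assumption that a matching "allowed" rule takes precedence over a matching deny rule are all hypothetical, chosen to mirror the form fields and the lab test described above.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Iterable, Optional


@dataclass(frozen=True)
class WatchlistRule:
    """Hypothetical model of one rule, mirroring the form fields in step 5."""
    name: str
    source_ip: str
    source_block_size: int
    destination_ip: str
    destination_block_size: int
    destination_ports: frozenset
    allowed: bool  # the "Connections are Allowed" checkbox
    reason: str = ""

    def matches(self, src: str, dst: str, dport: int) -> bool:
        # Combine each IP with its block size into a CIDR network.
        src_net = ip_network(f"{self.source_ip}/{self.source_block_size}", strict=False)
        dst_net = ip_network(f"{self.destination_ip}/{self.destination_block_size}", strict=False)
        return (
            ip_address(src) in src_net
            and ip_address(dst) in dst_net
            and dport in self.destination_ports
        )


def evaluate(rules: Iterable[WatchlistRule], src: str, dst: str, dport: int) -> Optional[WatchlistRule]:
    """Return the deny rule a connection violates, or None if nothing is violated.

    Assumption: any matching allowed rule overrides matching deny rules.
    """
    matching = [r for r in rules if r.matches(src, dst, dport)]
    if any(r.allowed for r in matching):
        return None
    return matching[0] if matching else None


# Assumed rule values for the RDP example; the real ones live in the screenshots.
RULES = [
    WatchlistRule("Permitted internal RDP", "10.0.0.0", 8, "10.0.0.0", 8,
                  frozenset({3389}), allowed=True,
                  reason="Lateral RDP inside 10.0.0.0/8 is permitted"),
    WatchlistRule("Prohibited outbound RDP", "10.0.0.0", 8, "0.0.0.0", 0,
                  frozenset({3389}), allowed=False,
                  reason="RDP to any other destination is prohibited"),
]

# Lateral RDP inside 10.0.0.0/8, then RDP to an external (documentation-range) address.
lateral = evaluate(RULES, "10.0.1.10", "10.0.2.20", 3389)
external = evaluate(RULES, "10.0.1.10", "203.0.113.5", 3389)
```

Under these assumptions, `lateral` is `None` because the permit rule covers it, while `external` is the "Prohibited outbound RDP" rule, which matches the behavior seen in the lab test: only the outbound connection raised an observation and alert.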

This policy violation alerting framework lets you account for all prohibited network traffic that will inevitably transit your network laterally or through an egress point. Firewall rules, hardening standards and compliance policies should be adhered to, but how can you be certain that they are? Human error, lack of expertise and troubleshooting can and will easily lead to a gap in your posture, and Stealthwatch Cloud is the second line of defense that catches any violation the moment the first packet traverses a segment using a prohibited protocol. It’s not a matter of IF your posture will be compromised but WHEN.