In this post, we tackle another common challenge in migration planning – the decision to deploy sites and users on either multi-tenant or “public” cloud solutions versus dedicated, virtualized systems in “private” cloud solutions. In the communications industry, PBX vendors and service providers tend to promote the specific solutions that they sell, taking an almost “black and white” perspective about the benefits of either public or private cloud options.

Public or private cloud. Where do you stand?

If you are a service provider or IT manager, you are probably bombarded by strong opinions on each side, especially for telecom and business communications services. The resulting cacophony only serves to confuse decision-makers and delay migration plans. To bring some clarity, this blog post examines the merits of both public and private cloud, and particularly where the two can work together.

The reality, of course, is that there are advantages to both private and public cloud. In fact, a 2018 survey of almost 1,000 IT professionals reveals that 81% of businesses have a “multi-cloud” strategy, as shown below. The right-hand side adds some color to what “multi-cloud” means. Breaking down that 81%: 10% of respondents are entirely private cloud, 21% are entirely public cloud, and 51% use a mix of public and private.

Figure 1: RightScale | 2018 State of the Cloud Report

These numbers present a difficult starting point for migration planning for both IT managers and CSPs. While most applications are envisioned for public cloud in the long term (10+ years), the data show that a variety of intermediate steps and near-term plans (over roughly 5 years) will require a mix of private and public cloud. To help business IT planners and CSPs, this post will cover:

◈ Description of private and public cloud relative to premises equipment systems

◈ Migration scenarios where a mix of private and public cloud solutions might make sense

◈ CSP opportunity to productize a mix of cloud solution offers

Premises vs. Private vs. Public Cloud

The differences between private and public cloud are subtle but important. From the perspective of end-user applications, there may be no significant difference between receiving services from private or public cloud infrastructure. For IT planners and CSPs, however, the differences can have significant implications. Before describing the attributes of these solutions, it is important to provide some context: the typical starting point for a communications migration is the premises-based PBX system. Below are the key attributes of premises PBX, private cloud, and public cloud solutions.

Premises-based PBX:

◈ Financing: Systems infrastructure is purchased as a capital expense and depreciated over the life of the equipment.

◈ Management: Systems are managed by the business’s own IT staff, or in combination with an IT tech firm that provides maintenance and support.

◈ Location: Individual systems are located on a site – in either a wiring closet or, for bigger sites, in an IT rack with power, cooling, controlled access, etc.

◈ Telecom Services (PSTN): Telecom and PSTN services are delivered by a third party (typically a CSP) to a “demarcation” point and interconnected to the PBX to allow PSTN calling and emergency services.

◈ Integrations: Connections into 3rd party cloud applications (e.g., salesforce.com) or local systems (e.g., elevators, entry/exit systems, and alarm systems) are built as “custom” integrations that leverage native PBX APIs, delivered through a specialist vendor or a preferred IT tech firm.

◈ Economics: Provides lower cost / seat / month for larger sites with simple telephony needs.

Private cloud or dedicated, virtualized cloud systems:

◈ Financing: Systems infrastructure can be owned by the CSP with services delivered for a monthly service charge or owned by the business and treated as a capital expense. There are a variety of financing models depending on the CSP or PBX vendor.

◈ Management: Systems infrastructure can be managed by the CSP, an IT services firm, or the PBX vendor directly. There are multiple options for who manages systems, provides upgrades, and support.

◈ Location: Systems can be located in CSP data centers, an IT services firm’s data center, or even the business’s own data centers.

◈ Telecom Services: Telecom and PSTN services can either be bundled with communications applications or split off and managed under separate contracts.

◈ Integrations: Similar to premises PBX systems, with most integrations managed as “custom” projects leveraging the PBX APIs. There may be some limitations based on location, or if the virtualized system relies on a specialized provisioning and management system or “wrapper.”

◈ Economics: Provides lower cost / seat / month when deployed as part of a broader “private cloud” initiative and when serving more basic communications needs.

Public cloud or multi-tenant deployments:

◈ Financing: Systems infrastructure is owned and managed by the CSP. Services are provided off this infrastructure to the business for a monthly fee, typically priced by the user, metered charge (e.g., minutes), or virtual service (e.g., IVRs, Meeting Bridges).

◈ Management: Systems are managed by the CSP, including upgrades, maintenance, and support. CSP systems serve multiple businesses.

◈ Location: Systems are typically hosted by the CSP in a state-of-the-art data center, often leveraging public cloud providers (e.g., Google, Amazon), with extensive peering to partner networks.

◈ Telecom Services: Telecom and PSTN services are typically bundled with user, group, and virtual services with limited to no options to split traffic across multiple wholesale carriers.

◈ Integrations: Connections to 3rd party cloud providers are typically provided as part of standard service packages. There may be limitations to what is actually supported via CSP APIs.

◈ Economics: Provides lower cost / seat / month for multi-site deployments with more advanced services and more 3rd party cloud integrations.
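The “Economics” bullets compare solutions by cost / seat / month. A minimal sketch, assuming a deliberately simple model in which premises capital expense is depreciated straight-line and public cloud is billed per user plus metered usage, shows why the same fixed cost can look cheap at a large site and expensive at a small branch. The class names, fields, and all dollar figures are hypothetical and for illustration only.

```python
from dataclasses import dataclass


@dataclass
class PremisesPBX:
    """Hypothetical premises PBX cost model (straight-line depreciation)."""
    capex: float            # up-front purchase price of the system
    life_months: int        # depreciation period for the equipment
    monthly_support: float  # maintenance/support contract per month
    seats: int

    def cost_per_seat_month(self) -> float:
        # Spread the capital expense evenly over the equipment life,
        # add the recurring support cost, then divide by seats served.
        monthly_depreciation = self.capex / self.life_months
        return (monthly_depreciation + self.monthly_support) / self.seats


@dataclass
class PublicCloudService:
    """Hypothetical multi-tenant service billed per user plus metered usage."""
    per_user_fee: float     # monthly subscription per user
    metered_monthly: float  # site-wide usage charges (e.g., minutes) per month
    seats: int

    def cost_per_seat_month(self) -> float:
        # Per-user fee plus the site's metered charges shared across seats.
        return self.per_user_fee + self.metered_monthly / self.seats


# Illustrative inputs only; these are not market prices.
large_site = PremisesPBX(capex=120_000, life_months=60, monthly_support=1_000, seats=500)
small_branch = PremisesPBX(capex=12_000, life_months=60, monthly_support=300, seats=20)
cloud_large = PublicCloudService(per_user_fee=20, metered_monthly=0, seats=500)
cloud_small = PublicCloudService(per_user_fee=20, metered_monthly=0, seats=20)

print(large_site.cost_per_seat_month(), cloud_large.cost_per_seat_month())    # 6.0 20.0
print(small_branch.cost_per_seat_month(), cloud_small.cost_per_seat_month())  # 25.0 20.0
```

With these assumed inputs the premises system wins at the 500-seat site and loses at the 20-seat branch. A real comparison would also need PSTN charges, staff time, and financing costs, which this sketch leaves out.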

Hybrid Cloud Migration Options

From the above description of the attributes of private and public cloud deployments, you may begin to see some of the scenarios where a business may want to take advantage of a mix of cloud solutions. Some common scenarios are as follows:

Large HQ with many small branch offices: Many businesses, and some verticals in particular, combine a large headquarters or regional site with multiple smaller branch offices. Headquarters sites typically serve knowledge workers, office workers, and executives. Within these large sites, or even campus locations, PBX systems may integrate with co-located business systems serving specific business applications or additional communications needs. These sites differ from branch locations. This scenario is especially common for retailers and banks with branch networks, where the smaller sites may only need basic telephony for lightweight customer service and mobility applications.

Recommendation: These particular businesses may prefer private cloud for the larger sites or campus environments and public cloud for the branch locations. Larger sites can take advantage of PBX economics and legacy co-located integrations. The branch sites take advantage of multi-site services, reduced management costs, and enhanced mobility offers from public cloud services.

Site(s) with integrated call/contact center(s): In some cases, the PBX that serves a large site plays two roles, providing both local calling for business telephony applications and inbound and outbound calling for contact center employees. There may be certain integrations and efficiencies already achieved by using the same call control platform to support both applications. Deploying a PBX this way could be part of a broader effort to integrate employee calling and contact center services.

Recommendation: Sites with integrated contact centers might be targeted for a private cloud deployment while all other sites are targeted for public cloud. The business may want to look at vendors that bring a combination of contact center and enterprise telephony on a public cloud – though such a migration should be planned on its own timeline.

Differing regional cloud migration strategies: There are still some considerable differences in the maturity of cloud communications offers based on region. These differences apply for CSPs, PBX vendors, and IT tech integrators. These differences may also apply to how partners support private cloud solutions.

Recommendation: Businesses should complete a thorough assessment of the maturity of both private and public cloud offers across regional sites. A site-specific recommendation might be necessary along with an interim solution that combines private and public cloud communications across regions.

Communications Service Provider – Hybrid Solution Productization

Given the many scenarios in which businesses may prefer a mix of public and private cloud, CSPs should consider how to “productize” an offer that combines these solutions. Such an offer may need to include “managed PBX” options, where PBXs remain on site for a period of time during interim migration phases. CSPs should include state-of-the-art SIP trunking and network connectivity to support managed premises equipment sites alongside public and private cloud service offerings.

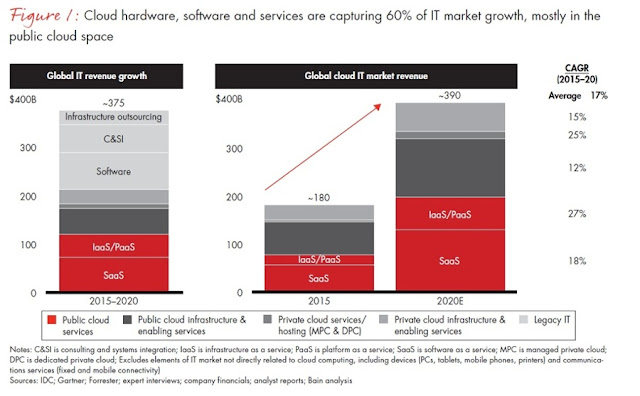

Current forecasts show that businesses consume a mix of public and private cloud services along with a mix of SaaS, PaaS, IaaS, and other IT solutions. Increasingly, CSPs benefit from partnerships that let them offer a more complete portfolio, which in turn helps them drive sales engagements. Note the forecast below from a recent Bain Brief publication, which draws on data from IDC, Gartner, and Forrester:

Figure 2: Bain.com

A CSP that brings such a mix of managed equipment, private cloud, and public cloud offers opens the possibility of winning coveted large enterprise opportunities and forming deep partnerships with these businesses as it helps them plan a prudent and transparent cloud migration.

Though businesses pay a premium for these types of migration offers, they can ultimately achieve 1) operating and management efficiencies through the consolidation of vendors and systems, 2) productivity benefits as they chart the path to greater enterprise-wide services engagement (see incremental cut-over plans) and 3) reduced risk of disruption to mission-critical communications and data services.

Pricing is one of the most important considerations for CSPs productizing a mix of cloud offers. Studies have shown that a lack of pricing transparency is one of the critical concerns that can delay or deter businesses’ cloud migration. For vendors, offering clear pricing is critical to engaging with businesses’ IT leaders. An August 2018 BCG study suggests that over the next five years, over 90% of revenue growth in the hardware and software sectors will come from either on-premises or off-premises offerings sold with “cloud-like” pricing models. BCG goes on to state that re-thinking industry pricing models is “no longer optional.” Thus, the CSP that can position a combination of public and private cloud solutions, all delivered with cloud-like pricing, will likely see strong receptivity in the marketplace.

CSPs should prepare to encounter a variety of existing cloud solutions and “in process” cloud migrations as the “starting point” for hybrid migration offers. This starting point might include an existing mix of premises systems, managed virtualized systems, and some public cloud solutions. The CSP should try to define a small number of relatively simple starting points so that it can scale its offer. It should consider the Pareto principle (80:20 rule), under which the majority of prospects can be served by a smaller subset of solutions. Defining these productized solutions may require the CSP to consider geographic or industry vertical characteristics. See below for the wide variety of cloud migration statuses reported in a 2016 McKinsey survey:

Figure 3: McKinsey & Company

This McKinsey chart shows that a CSP may want to consider a different mix of public and private cloud solution offers depending on whether it targets financial services, healthcare, or insurance. Most important of all, the CSP needs to understand that it will struggle to productize, and especially to scale, a hybrid migration offer built as a one-size-fits-all approach.
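The Pareto (80:20) idea of covering most prospects with a few productized starting points can be made concrete with a small calculation. A minimal sketch, assuming a CSP has already grouped its prospects into starting-point profiles and counted them: the function name `pareto_profiles`, the profile labels, and the counts are all hypothetical, not survey data.

```python
def pareto_profiles(prospects_by_profile: dict[str, int], coverage: float = 0.8) -> list[str]:
    """Return the fewest starting-point profiles that together cover `coverage` of prospects.

    Taking profiles in descending order of prospect count is optimal here:
    no other set of k profiles can cover more prospects than the k largest.
    """
    total = sum(prospects_by_profile.values())
    target = coverage * total
    chosen: list[str] = []
    covered = 0
    for profile, count in sorted(prospects_by_profile.items(), key=lambda kv: kv[1], reverse=True):
        if covered >= target:
            break  # enough prospects covered; remaining profiles stay custom
        chosen.append(profile)
        covered += count
    return chosen


# Hypothetical prospect counts for one vertical; not survey data.
prospects = {
    "premises PBX only": 45,
    "premises PBX + some public cloud": 25,
    "managed virtualized PBX": 15,
    "mixed private and public cloud": 10,
    "public cloud only": 5,
}
print(pareto_profiles(prospects))
# ['premises PBX only', 'premises PBX + some public cloud', 'managed virtualized PBX']
```

Because the mix of starting points differs by vertical, a CSP would run this separately for each industry or region rather than once across all prospects, which reflects the caution against a one-size-fits-all approach.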

In conclusion, there is a tremendous opportunity for both businesses and CSPs to benefit from a migration strategy that includes a mix of private and public cloud solutions. The more closely private and public cloud offers can work together, the more both businesses and CSPs stand to benefit. In the most basic case, businesses and CSPs should look for combined solutions that can emulate an integrated, single cloud solution. Private and public cloud solutions can emulate a single cloud from a functional, economic, and/or delivery point of view, providing benefits in the form of added productivity, cost transparency, and reduced migration project risk.