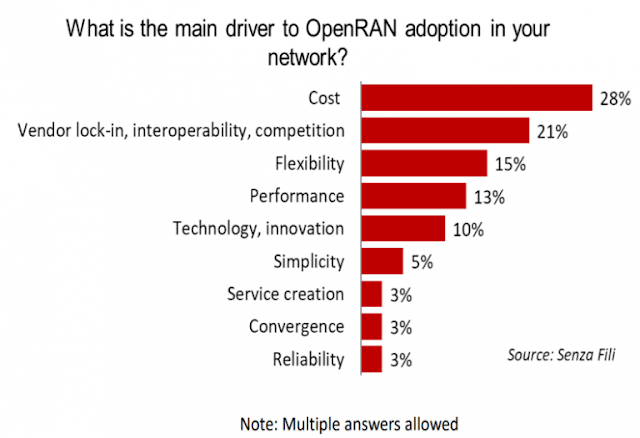

Open Virtualized Radio Access Network, or “O-vRAN”, has been a buzzword in the industry for quite some time now. To date, many renowned mobile industry analysts and researchers have conducted surveys on O-vRAN that reveal strong momentum among Mobile Network Operators (MNOs) toward Commercial Off-the-Shelf (COTS) hardware, Software-Defined Networking (SDN), and virtualization. More and more MNOs are modernizing, or planning to modernize, their Radio Access Networks (RAN) with Open vRAN architecture.

Saturday, 3 October 2020

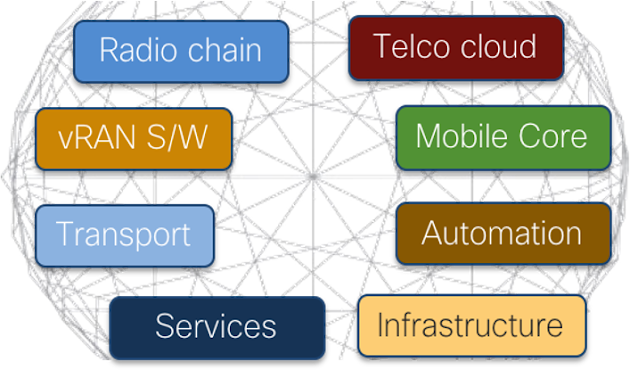

Cisco’s Magic Glue Binds the Pieces of the OvRAN Puzzle

Thursday, 1 October 2020

Universal Release Criteria 2.0–A Disruptive Quality Management Framework

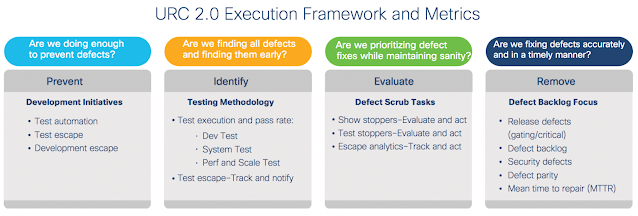

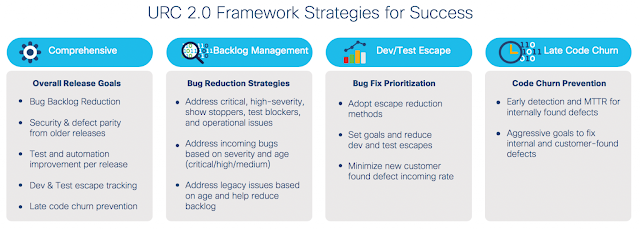

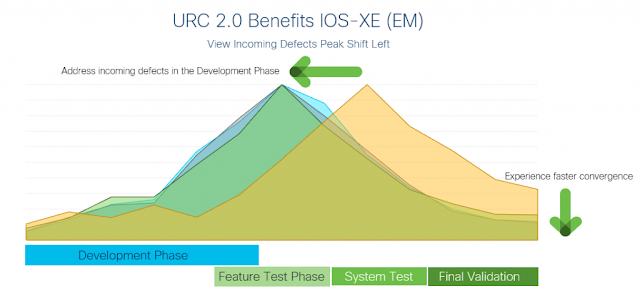

Quality cannot be an afterthought! It takes strategic planning and meticulous implementation. The more time we invest in proactive thinking, tooling, Shift Left approaches, quality governance, and a process-driven culture, the more money and time we can save across the life of the product. Cisco’s Universal Release Criteria (URC 2.0) is one such disruptive quality management framework that has been developed and adopted. Its criteria help define a comprehensive set of quality goals, metric standardization, and process governance across the full development lifecycle, from product development requirements to releases. The IOS-XE product fully adopted and implemented the URC 2.0 development process and proved it very effective.

Having worked in the software industry for more than two decades, I have gained experience in all aspects of the software development life cycle, process, metrics, and measurement. As a programmer, I designed and developed complex systems. I led the software development strategy and process for CI/CD pipelines, modernized it, and automated it. Also, I led the quality journey for various types of software releases for businesses to grow to scale. Given my background, I feel qualified to share my thoughts on URC development processes that helped us transform traditional development processes to more modern, automated, and streamlined counterparts.

Limited vision in quality goals and unchecked negative behaviors directly impact product quality. Resistance to upgrade requests from customers, lack of scaled environments to test deployments, and delays in early adoption really plague projects. Short-sighted quality goals impact deployments in the following manner:

- There is excessive focus on backlog issues, with inadequate focus on and management of incoming defects.

- Critical defects are prioritized exclusively.

- Inadequate or negligible attention is paid to proactive defect prevention.

URC 2.0 is the brainchild of cross-functional quality specialists with representatives from operations, development, testing, quality, and supply-chain organizations. The main objective of this process innovation is to “address defects found in a release” that “will have to be fixed in the same release” by bringing “no technical debt” forward. Initially, this simple rule placed tremendous pressure on teams, but within a couple of years, everyone willingly embraced this cultural shift! The results of URC 2.0 can be summarized as follows:

- Provides a failproof framework for release quality management.

- Outlines comprehensive release quality criteria for product development.

- Transforms the departmental culture within software and hardware teams.

- Prevents defects and reduces escape conditions during the product development lifecycle.

- Fosters trickle-down innovation, enhances development practices, and furthers tools automation.

Shift Left techniques benefit from URC’s quality algorithm innovations and from processes such as the following:

- Manages the incoming defect process using escape analytics and the Rayleigh curve.

- Enhances the algorithm to help address incoming, backlog, and bug disposal trends.

- Prioritizes age-based bug fixes to address high-risk defects.

- Simplifies operational management of the bug backlog.

- Reduces last-minute code churn.

- Operationalizes escape reduction.

- Targets addressing of security vulnerabilities at the right level.
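To make the Rayleigh curve idea a little more concrete, here is a minimal sketch of how such a curve can project incoming defects week by week. The source does not describe URC's actual algorithm, so the function names, the total defect count, and the peak week below are all assumptions chosen purely for illustration.

```python
import math

def rayleigh_cumulative(t, peak):
    """Fraction of all defects expected to have arrived by time t.

    Uses the standard Rayleigh distribution, whose arrival rate peaks at t == peak.
    """
    return 1.0 - math.exp(-(t ** 2) / (2.0 * peak ** 2))

def expected_arrivals(total_defects, peak_week, weeks):
    """Expected incoming defects for each week 1..weeks (illustrative only)."""
    arrivals = []
    for week in range(1, weeks + 1):
        share = rayleigh_cumulative(week, peak_week) - rayleigh_cumulative(week - 1, peak_week)
        arrivals.append(total_defects * share)
    return arrivals

if __name__ == "__main__":
    # Hypothetical release: 200 defects overall, arrivals peaking around week 6.
    for week, count in enumerate(expected_arrivals(200, 6, 20), start=1):
        print(f"week {week:2d}: {count:5.1f}")
```

Comparing actual weekly arrivals against a projection like this is one way to spot a release whose incoming defects are not tapering off as expected.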

The URC 2.0 Framework

The URC framework is based on four basic tenets of quality management:

- Prevention

- Identification

- Evaluation

- Removal

A set of metrics is identified for each tenet to measure and drive quality. The objective of each phase and corresponding metrics are described in the diagram below.

Important URC Framework Criteria

- Track Defects–Address incoming and legacy issues based on severity and resolve issues within the same

- Use Metrics–Focus on execution quality and pass rate. Adhere to strict goals and periodically measure

- Ensure Release Defect Parity–Ensure that all fixes from prior releases are incorporated into the current release.

- Security Vulnerabilities–Address all known issues before general release availability.

- Control Late Code Churn–Set aggressive mean time to repair goals for all internally and externally found

- URC Window–The time between the “URC Freeze” date of the prior release and the “URC Freeze” date of the current release is considered the window of opportunity to make a difference.

- URC Backlog–When implementing URC 2.0 for the first time, address defects that fall into these major categories:

- All defects that are applicable to the current release, irrespective of where or when they were found.

- All URC defect fixes that must be carried forward from the previous release to maintain functional parity amongst releases.

- Pick a start date from the previous window to begin your URC window for the current release. This will help serve as a reference point to address critical defects.

- URC Freeze–Select a date when you stop working on your URC backlog. Ideally, this date must be after the completion of feature test for the release. The frozen URC backlog must be addressed and brought down to zero within three weeks of the freeze. This date must coincide with the critical defect cut-off date for the release. No bug fixes, irrespective of their severity, should be added to the current release after this critical defect cut-off date.

- Final Code Validation–Conduct final testing and release readiness checks before the release is shipped to
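The date rules around the URC Freeze can be sketched in a few lines. This is only an illustration under stated assumptions: the function names are made up, and the critical defect cut-off is passed in as its own parameter because the text above does not pin down exactly which date it coincides with.

```python
from datetime import date, timedelta

# "brought down to zero within three weeks of the freeze"
BACKLOG_BURN_DOWN = timedelta(weeks=3)

def backlog_zero_deadline(urc_freeze):
    """Latest date by which the frozen URC backlog should reach zero."""
    return urc_freeze + BACKLOG_BURN_DOWN

def fix_allowed(fix_date, critical_cutoff):
    """No bug fix, whatever its severity, goes in after the critical defect cut-off."""
    return fix_date <= critical_cutoff

if __name__ == "__main__":
    freeze = date(2020, 9, 1)  # hypothetical freeze date
    print("backlog must be zero by", backlog_zero_deadline(freeze))
```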

Proof of URC 2.0 Success

Tuesday, 29 September 2020

3 ways Cisco DNA Center and ServiceNow integration makes IT more efficient

Today’s highly complex and dynamic networks create demands that often exceed the capacity of IT operations teams. Within Cisco IT, we are meeting these demands by creating integrations between Cisco DNA Center and ServiceNow.

We use Cisco DNA Center to control the Cisco campus and branch network, as well as to track upgrades and manage the operational states of all network elements, connections, and users.

We use ServiceNow as one of the IT service management platforms for providing helpdesk support to users and management capabilities to our IT service owners.

Customer Zero implements emerging technologies into Cisco’s IT production environments ahead of product launch. We are integrating these systems in multiple ways to make it easier to find the right information to solve problems, streamline tasks for network changes, and allow routine operational tasks to run autonomously in an end-to-end automated workflow. Furthermore, Customer Zero is providing an IT operator’s perspective as we develop integrated solutions, best practices, and accompanying value cases to drive accelerated adoption.

To develop these integrations, Cisco IT takes advantage of Cisco DNA Center platform API Bundles, Cisco DNA Center customizable app in the ServiceNow App store, and other ServiceNow offerings.

Integration #1: All the right information, accessible in one place

One of our first integrations synchronizes inventory information about network devices from Cisco DNA Center to the ServiceNow configuration management database (CMDB). This inventory sync benefits users of both systems. Cisco DNA Center provides up-to-date information on a device so when there’s an issue, an engineer can see it in the CMDB along with context information, such as who to contact about solving the problem.

In the future, the engineer working in the CMDB will be able to click on a link to manage that device in Cisco DNA Center without needing a separate login and subsequent searches for the device. This feature will help the engineer save time, especially when troubleshooting network issues.

Integration #2: Streamlining deployment of software images

Another integration we created supports automation for managing software image updates on our network devices. In the past, Cisco IT engineers have spent thousands of hours every quarter managing these routine updates. But when Cisco IT receives a high-priority security alert, the updates must be distributed and verified ASAP on thousands of affected devices.

With a manual process, this effort requires extensive time for engineers to manage the change activity and track its status on every device. And the network remains exposed to the threat until this process is completed.

In the coming months, we will automate much of the change-management process through the Cisco DNA Center Software Management Functionality and ServiceNow integration. For emergency changes, the engineer can create one change request that covers all devices, which dramatically simplifies approvals. Once the device has been upgraded, Cisco DNA Center updates the individual device record in our ServiceNow system. This automation allows us to maintain current information without needing to process identical change requests for individual devices.

Integration #3: Turning routine work into autonomous processes

More integrations to come

Monday, 28 September 2020

Introduction to Programmability – Part 2

Part 1 of this series defined and explained the terms Network Management, Automation, Orchestration, Data Modeling, Programmability, and APIs. It also introduced the Programmability Stack and explained how an application at the top layer of the stack consumes an API exposed by the device at the bottom of the stack. The previous post covered data modeling in some detail due to the novelty of the concept for most network engineers. I’m sure that Part 1, although quite lengthy, left you scratching your head. At least a little.

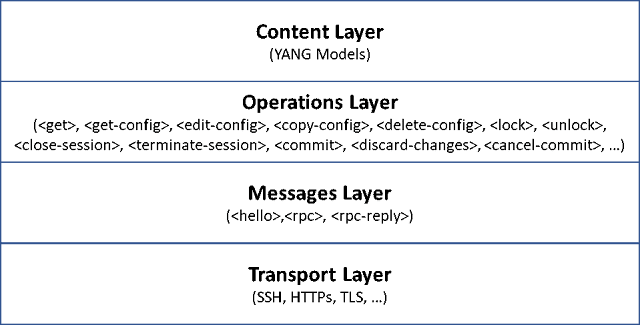

So, in this part of the series, I will try to clear some more of the ambiguity related to programmability. As discussed in the previous post, the API exposed by a device uses a specific protocol. For example, a device exposing a NETCONF API will use the NETCONF protocol. The same applies to RESTCONF, gRPC, or Native REST APIs. The choice of protocol also decides which data encoding to use, as well as the transport over which the application speaks with the device.

Where to start?

One of the problems with discussing programmability is where to start. If you start with a protocol, you will need to understand the encoding in order to decipher the contents of the protocol messages. But for you to appreciate the importance of encoding, you need to understand its application and use by the protocol. The chicken first, or the egg! Moreover, with respect to RESTful protocols, you will also need a pretty good understanding of the transport protocol, HTTP in this case, in order to put all the pieces together.

So in order to avoid unnecessary confusion, this part of the series will only cover NETCONF and XML. HTTP, REST, RESTCONF, and JSON will be covered in the next part. Finally, gRPC and GPB will be covered in one last part of this series.

Note: In this blog post we will make very good use of Cisco’s DevNet Sandboxes. In case you didn’t already know that, Cisco DevNet provides over 70 sandboxes that constitute devices in different technology areas, for you to experiment with during your studies. Some of those are always-on, available for immediate use, and others need a reservation. All the sandboxes can be found at: https://devnetsandbox.cisco.com/RM/Topology. For the purpose of this blog, the sandboxes that do not need a reservation will suffice. Any other excuses for not reading on?… I didn’t think so!

APIs: RPC vs REST

In the previous part of this series we looked at APIs and identified them as software running on a device. An API exposed by the device provides a particular function or service to other software that wishes to consume this API. The internal workings of an API are usually hidden from the software that consumes it.

For example, Twitter exposes an API that a program can consume in order to tweet to an account automatically without human intervention. Similarly, Google exposes a Geolocation API that returns the location of a mobile device based on information about cell towers and WiFi nodes that the device detects and sends over to the API.

Similarly, an API exposed by, say, a router, is software running on the router that provides a number of functions that can be consumed by external software, such as a Python script.

APIs may be classified in a number of different ways. Several API types (and different classifications) exist today. For the purpose of this blog series, we will discuss two of the most commonly used types in the network programmability arena today: RPC-based APIs and RESTful APIs.

Remote Procedure Call (RPC)-based APIs

A Remote Procedure Call (RPC) is a programmatic method for a client to Call (execute) a Procedure (piece of code) on another device. Since the device requesting the execution of the procedure (the client) is different than the device actually executing that procedure (the server), it is labelled as Remote.

An RPC-based API opens a software channel on the server exposing the API. Through that channel, clients wishing to consume the API request the remote execution of procedures on the server. Both NETCONF and gRPC are RPC-based protocols/APIs. This part of the series will cover NETCONF and describe its RPC-based operation.
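If the call-a-procedure-somewhere-else idea still feels abstract, the toy sketch below may help. It is not NETCONF or gRPC: the "network" is just a function call, the messages are JSON rather than XML or GPB, and every name in it (`server_handle`, `client_call`, `add_vlan`, and so on) is made up for illustration. What it does show is the shape of an RPC exchange: the client names a procedure and its parameters, and the server executes it and returns the result.

```python
import json

# Server side: the procedures this "server" is willing to execute for clients.
def get_hostname():
    return "router-1"

def add_vlan(vlan_id, name):
    return f"vlan {vlan_id} ({name}) created"

PROCEDURES = {"get_hostname": get_hostname, "add_vlan": add_vlan}

def server_handle(request_text):
    """Decode a request, run the named procedure, and encode the reply."""
    request = json.loads(request_text)
    procedure = PROCEDURES.get(request["method"])
    if procedure is None:
        return json.dumps({"id": request["id"], "error": "unknown procedure"})
    result = procedure(*request.get("params", []))
    return json.dumps({"id": request["id"], "result": result})

# Client side: looks like a local call, but only a message crosses the boundary.
def client_call(method, *params, request_id=1):
    request_text = json.dumps({"id": request_id, "method": method, "params": list(params)})
    response = json.loads(server_handle(request_text))  # stands in for the network hop
    if "error" in response:
        raise RuntimeError(response["error"])
    return response["result"]

if __name__ == "__main__":
    print(client_call("get_hostname"))
    print(client_call("add_vlan", 10, "users"))
```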

Representational State Transfer (REST):

REST is a framework, specification, or architectural style that was developed by Roy Fielding in his doctoral dissertation in 2000. The REST framework specifies six constraints, five mandatory and one optional, on coding RESTful APIs. The REST framework requires that a RESTful API be:

◉ Client-Server based

◉ Stateless

◉ Cacheable

◉ Built on a uniform interface

◉ Based on a layered system

◉ Able to utilize code-on-demand (Optional)

When an API is described as RESTful, then this API adheres to the constraints listed above.

To elaborate a little, a requirement such as “Stateless” mandates that the client send a request to the API exposed by the server. The server processes the request, sends back the response, and the transaction ends there. The server does not maintain the state of this completed transaction. Of course, this is an oversimplification of the process and a lot of corner cases exist. An API may also be fully RESTful, or just partially RESTful. It all depends on how closely it adheres to the constraints listed here.
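Here is a minimal sketch of what "stateless" looks like from the server's side. The handler, the token value, and the interface table are all hypothetical; the point is only that each request carries everything the server needs, and that nothing about one request is remembered when the next one arrives.

```python
# Hypothetical resource data held by the server (not per-client session state).
INTERFACES = {"GigabitEthernet1": "up", "GigabitEthernet2": "down"}

def handle_request(request):
    """Process one request using only what the request itself contains."""
    # Credentials travel with every request; there is no "logged in" session.
    if request.get("token") != "demo-token":
        return {"status": 401, "body": "unauthorized"}
    name = request.get("interface")
    if name not in INTERFACES:
        return {"status": 404, "body": "not found"}
    return {"status": 200, "body": {"name": name, "state": INTERFACES[name]}}

if __name__ == "__main__":
    request = {"token": "demo-token", "interface": "GigabitEthernet1"}
    print(handle_request(request))
    # A later request without the token fails, even right after a successful one.
    print(handle_request({"interface": "GigabitEthernet1"}))
```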

REST is an architectural style for programming APIs and uses HTTP as an application-layer protocol to implement this framework. Thus far, HTTP is the only protocol in common use for implementing RESTful APIs. RESTCONF is a RESTful protocol/API and will be the subject of an upcoming part of this series, along with HTTP.

Although gRPC is an RPC-based protocol/API, it still uses HTTP/2 at the transport layer (recall the programmability stack from Part 1?). You may find this a little confusing. While it is beyond the scope of this part of the series to describe the operation of gRPC and its encoding, GPB, this will be covered in an upcoming part. Stay with me on this series, and I promise that you won’t regret it! For the sake of accuracy, gRPC also supports JSON encoding.

NETCONF

In the year 2003 the IETF assembled the NETCONF working group to study the shortcomings of the network management protocols and practices that were in use then (such as SNMP), and to design a new protocol that would overcome those shortcomings. Their answer was the NETCONF protocol. The core NETCONF protocol is defined in RFC 6241 and the application of NETCONF to model-based programmability using YANG models is defined in RFC 6244. NETCONF over SSH is covered on its own in RFC 6242. Figure 1 illustrates the lifecycle of a typical NETCONF session.
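To give a feel for what actually travels inside a NETCONF session, the sketch below builds one RPC message: a `<get-config>` request for the running datastore, wrapped in an `<rpc>` element with a `message-id`, in the base NETCONF namespace from RFC 6241. It only constructs the XML; it does not open an SSH session or talk to a device. The function name `build_get_config` and the message-id value are assumptions for illustration.

```python
import xml.etree.ElementTree as ET

# Base NETCONF namespace defined by RFC 6241.
NETCONF_NS = "urn:ietf:params:xml:ns:netconf:base:1.0"

# Serialize the NETCONF namespace as the default namespace (no prefix).
ET.register_namespace("", NETCONF_NS)

def build_get_config(message_id, datastore="running"):
    """Return an <rpc> message asking the server for a configuration datastore."""
    rpc = ET.Element(f"{{{NETCONF_NS}}}rpc", {"message-id": message_id})
    get_config = ET.SubElement(rpc, f"{{{NETCONF_NS}}}get-config")
    source = ET.SubElement(get_config, f"{{{NETCONF_NS}}}source")
    ET.SubElement(source, f"{{{NETCONF_NS}}}{datastore}")
    return ET.tostring(rpc, encoding="unicode")

if __name__ == "__main__":
    print(build_get_config("101"))
```

The server's reply comes back as an `<rpc-reply>` carrying the same `message-id`, which is how the client matches replies to requests.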


Sunday, 27 September 2020

Introduction to Programmability – Part 1

Are you a network engineer and have had to repeat the same boring task at work, every day? Do you feel that there must be a way for you to do a task once, and then “automate” it? Theoretically, an infinite number of times? Or, have you been spending more time cleaning up and correcting configuration mistakes than you spend implementing those configurations? Or maybe you have been hearing a lot about this hot new “thing” called network programmability, but in the middle of the hype, could not figure out what exactly it is?

If any of those cases (and many others) apply to you, then you are in the right place. The fact that you are here, reading this now, means you know that there is probably a solution to your problem(s) in the realm of automation and/or programmability. In this case, buckle up because you are in for a ride!

If you are a network engineer and browsed to this page by mistake, I still urge you to read on. Netflix, Youtube, Facebook and Twitter will still be there when you are done. (Or not.) This is more fun. Trust me!

A Few Definitions For The Road

Before we dive into the nuances of network programmability and automation, let’s clear up some confusion. I hate nothing in the world more than definitions – well, maybe greasy pizza – but this is a necessary evil! In order to start clean, you must understand each of the following: network management, automation, orchestration, modeling, programmability and APIs.

Network Management is an umbrella term that covers the processes, tools, technologies, and job roles, among other things, required to manage a network and the lifecycle of the services offered by that network.

Many standards and frameworks exist today to define the different components of network management. One of them is FCAPS, where the acronym stands for Fault, Configuration, Accounting, Performance and Security Management. FCAPS is geared towards managing the systems that constitute the network.

Another is ITIL. The acronym stands for Information Technology Infrastructure Library and covers an extensive number of practices for IT Services Management (ITSM), which is basically the lifecycle of the services provided by the network. ITIL is divided into 5 major practices: Service Strategy, Service Design, Service Transition, Service Operation, and Continual Service Improvement. Each practice is divided into smaller sub-practices. For example, Service Design includes Capacity Management, Availability Management and Service Catalogue Management while Service Operation includes Incident Management, Problem Management and Request Fulfilment. Some people make a career being ITIL practitioners.

The Merriam-Webster dictionary defines Automation as “the technique of making an apparatus, a process, or a system operate automatically”. In other words, having a system of some sort do the work for you, work that you would otherwise do manually. However, you will have to tell this system what it is that you want to get done, and sometimes, how to do it.

So, configuring a network of routers with dynamic routing protocols, so that these routers speak with each other and figure out the shortest path per destination, is a form of automation. The alternative would be having someone do the calculations manually on a piece of paper, and then configuring static routes on each router. And so is writing a program that configures a VLAN on your switch – or your 500 switches – without someone having to log in to each switch individually and configure the VLAN via the CLI.

As you have already guessed, the power of automation is not intrinsically in the automation itself. Logging into one switch manually and configuring one VLAN is probably much faster than writing a Python program to do that for you. So why automate? Obviously, the importance of automation is its application to repetitive tasks.

Automation will not only save the time you would spend repeating a task, it also maintains the consistency and accuracy of performing that task, over all its iterations. It does not matter if you have 10 or 500 switches. The program you wrote will always go through the exact same steps for every switch, with the exact same result. Every time. Of course, the assumption here is that no errors will happen because of factors external to your program, such as an unreachable switch, wrong credentials configured on a switch, or a switch with a corrupted IOS. Although, you can write a program to detect and mitigate these error conditions!
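As a rough sketch of that idea, the snippet below runs the same VLAN commands against a list of switches and records which ones failed instead of stopping at the first error. How the commands reach a switch is deliberately left out: `send` is a hypothetical callable you would supply (an SSH library, an API client, and so on), and the switch names are invented.

```python
def vlan_commands(vlan_id, name):
    """The exact same CLI steps, generated once and reused for every switch."""
    return ["configure terminal", f"vlan {vlan_id}", f"name {name}", "end"]

def configure_vlan_everywhere(switches, vlan_id, name, send):
    """Apply the VLAN to every switch; send(switch, commands) is hypothetical."""
    commands = vlan_commands(vlan_id, name)
    results = {}
    for switch in switches:
        try:
            send(switch, commands)
            results[switch] = "ok"
        except Exception as error:
            # An unreachable switch or bad credentials should not stop the run.
            results[switch] = f"failed: {error}"
    return results

if __name__ == "__main__":
    def fake_send(switch, commands):
        # Stand-in transport: pretend one switch is unreachable.
        if switch == "sw-02":
            raise ConnectionError("unreachable")

    print(configure_vlan_everywhere(["sw-01", "sw-02", "sw-03"], 10, "users", fake_send))
```

Whether the list holds 10 switches or 500, the loop does not change; only the run time does.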

When you have several systems working together to get a job done, there is typically a need for a system, or a function, to coordinate the execution of the tasks performed by the different systems towards getting this job completed. This coordination function is called Orchestration.

For example, a private or public cloud that provides virtual machines to its users will include different systems to provision the network, compute, virtualization, operating systems, and maybe the applications, for those VMs. Orchestration will provide the function of coordination between all the different systems and applications to get the VM up and running.

Automation and orchestration work well in tandem. Automation covers single tasks. Using software to configure a VLAN on a switch is automation, and so is provisioning a VM over ESXi, or installing Linux on that VM. Orchestration, on the other hand, is the function of coordinating the execution of these automated tasks, in a specific sequence, each task using its own software and each on its respective system. The scope of automation involves single tasks. The scope of orchestration involves a workflow of tasks.
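The difference in scope can be sketched in code. Each function below stands for one automated task (they are stubs with made-up names that only update a dictionary), and `orchestrate` is the coordination layer that runs them in a specific sequence and stops the workflow when a step fails.

```python
# Individual automated tasks (stubs): each one does a single job.
def configure_vlan(context):
    context["vlan"] = "configured"

def provision_vm(context):
    context["vm"] = "provisioned"

def install_linux(context):
    if context.get("vm") != "provisioned":
        raise RuntimeError("no VM to install on")
    context["os"] = "linux"

# The workflow: which tasks run, and in which order.
WORKFLOW = [
    ("configure_vlan", configure_vlan),
    ("provision_vm", provision_vm),
    ("install_linux", install_linux),
]

def orchestrate(workflow, context):
    """Run each task in order; return completed step names and any error."""
    completed = []
    for name, task in workflow:
        try:
            task(context)
        except Exception as error:
            return completed, f"{name} failed: {error}"
        completed.append(name)
    return completed, None

if __name__ == "__main__":
    state = {}
    print(orchestrate(WORKFLOW, state), state)
```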

Data modeling is not a new concept and is not exclusive to networks or even automation. Data modeling is very involved and is a major branch of data science. For the humble purpose of this blog, let’s use an example to demonstrate what a model is. In Example 1, you can see a configuration snippet of BGP on an IOS-XR router in the left column. In the right column, the specific values for this particular device were removed and replaced with a description of what should be there. A template of sorts.
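The same "template of sorts" idea can be sketched in a few lines of Python. This is not the Example 1 referred to above: the BGP snippet is a simplified, illustrative one, and the placeholder names (`local_as`, `neighbor_ip`, `remote_as`) and values are assumptions. The template plays the role of the model, and the dictionary holds the values for one particular device.

```python
from string import Template

# The "model": structure only, with placeholders where device values belong.
BGP_MODEL = Template(
    "router bgp $local_as\n"
    " neighbor $neighbor_ip\n"
    "  remote-as $remote_as\n"
)

def render_bgp(values):
    """Fill the model with one device's values; a missing value raises KeyError."""
    return BGP_MODEL.substitute(values)

if __name__ == "__main__":
    # Hypothetical values for a single router.
    device = {"local_as": 65000, "neighbor_ip": "10.0.0.2", "remote_as": 65001}
    print(render_bgp(device))
```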