Machine reasoning is a new category of AI/ML technologies that can enable a computer to work through complex processes that would normally require a human. Common applications for machine reasoning are detail-driven workflows that are extremely time-consuming and tedious, like optimizing your tax returns by selecting the best deductions based on the many available options. Another example is the execution of workflows that require immediate attention and precise detail, like the shut-off protocols in a refinery following a fire alarm. What both examples have in common is that executing each process requires a clear understanding of the relationship between the variables, including order, location, timing, and rules, because each decision in a workflow can alter subsequent steps.

So how can we program a computer to perform these complex workflows? Let’s start by understanding how the process of human reasoning works. A good example in everyday life is the front door to a coffee shop. As you approach the door, your brain goes into reasoning mode and looks for clues that tell you how to open the door. A vertical handle usually means pull, while a horizontal bar could mean push. If the building is older and the door has a knob, you might need to twist the knob and then push or pull, depending on which side of the threshold the door is mounted. Your brain does all of this reasoning in an instant, because it’s quite simple and based on having opened thousands of doors. We could program a computer to react to each of these variables in order, based on incoming data, and step through this same process.
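To make that concrete, here is a minimal sketch of the door example written as ordered rules in Python. The `Door` class, its field names, and the `how_to_open` function are hypothetical names chosen for illustration; a real reasoning engine would work from a much richer knowledge base.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Door:
    # Hypothetical description of what you see as you approach the door.
    hardware: str                   # "vertical_handle", "horizontal_bar", or "knob"
    opens_toward_you: bool = False  # only consulted when the door has a knob


def how_to_open(door: Door) -> list[str]:
    """Return the ordered actions suggested by the door's visible clues."""
    if door.hardware == "vertical_handle":
        return ["pull"]
    if door.hardware == "horizontal_bar":
        return ["push"]
    if door.hardware == "knob":
        # The second step depends on which side of the threshold the door is mounted.
        return ["twist", "pull" if door.opens_toward_you else "push"]
    # No rule matched, so the reasoning has to gather more information.
    return ["look for more clues"]


print(how_to_open(Door("knob", opens_toward_you=True)))
```

Notice that the knob rule returns two steps and that the second step depends on another variable. That is the "each decision can alter subsequent steps" idea in miniature.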

Now let’s apply these concepts to networking. A common task in most companies is compliance checking, where each network device (switch, access point, wireless controller, and router) is checked for software version, security patches, and consistent configuration. In small networks, this is a full day of work; larger companies might have an IT administrator dedicated to this process full-time. A cloud-connected machine reasoning engine (MRE) can keep tabs on your device manufacturer’s online software updates and security patches in real time. It can also identify identical configurations for device models and organize them in groups to verify consistency for all devices in a group. In this example, the MRE is automating a tedious and time-consuming process that is critical to network performance and security, but that nobody really enjoys doing.
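As a rough illustration of the grouping idea, the Python sketch below groups devices by model, treats the most common configuration in each group as the baseline, and flags anything that deviates. This is not how Cisco's MRE is implemented. The `Device` fields, the `compliance_report` function, and the model names and version strings are all assumptions made up for the example.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    # Hypothetical inventory record; field names are illustrative only.
    name: str
    model: str
    software_version: str
    config_fingerprint: str  # e.g. a hash of the normalized configuration


def compliance_report(devices, approved_versions):
    """Map each non-compliant device name to a list of the issues found."""
    # Organize devices into groups by model.
    groups = defaultdict(list)
    for device in devices:
        groups[device.model].append(device)

    findings = {}
    for model, members in groups.items():
        # Assume the most common configuration in the group is the baseline.
        counts = Counter(m.config_fingerprint for m in members)
        baseline, _ = counts.most_common(1)[0]
        for member in members:
            issues = []
            if member.software_version != approved_versions.get(model):
                issues.append("software version")
            if member.config_fingerprint != baseline:
                issues.append("configuration drift")
            if issues:
                findings[member.name] = issues
    return findings


inventory = [
    Device("sw1", "switch-x", "2.1.0", "aaa"),
    Device("sw2", "switch-x", "2.1.0", "aaa"),
    Device("sw3", "switch-x", "2.0.3", "bbb"),
    Device("ap1", "ap-y", "5.4.1", "ccc"),
]
approved = {"switch-x": "2.1.0", "ap-y": "5.4.1"}
print(compliance_report(inventory, approved))
```

In this sketch the approved versions are passed in as a plain dictionary. In the scenario described above, that information would come from the manufacturer's published software updates and security patches.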

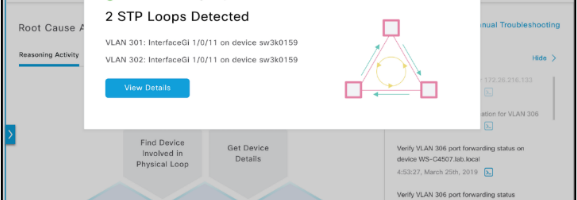

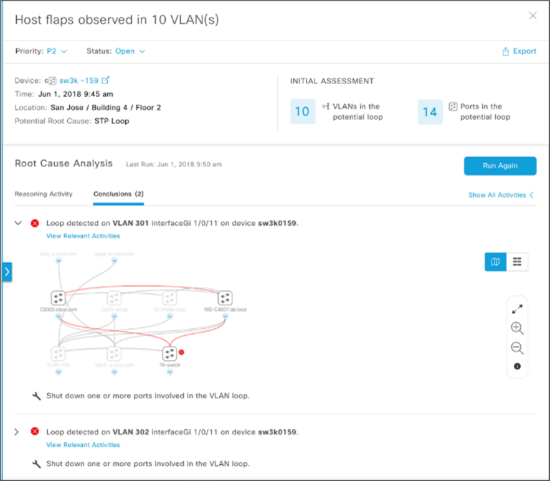

Another good real-world example is troubleshooting an STP data loop in your network. Spanning Tree Protocol (STP) loops often appear after upgrades or additions to a layer-2 access network and can cause data storms that result in severe performance degradation. The process for diagnosing, locating, and resolving an STP loop can be time-consuming and stressful. It also requires a certain level of networking knowledge that newer IT staff members might not yet have. An AI-powered machine reasoning engine can scan your network, locate the source of the loop, and recommend the appropriate action in minutes.
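The "locate the source of the loop" step can be pictured as a graph problem: if the links that are currently forwarding traffic form a cycle, there is a loop. The Python sketch below uses a union-find structure to report the links that close a cycle. It is a simplified illustration under that assumption, not the MRE's actual method, and the `find_loop_links` function and switch names are hypothetical. A real diagnosis would also look at STP port states and roles.

```python
def find_loop_links(forwarding_links):
    """Return the links that close a cycle among links currently forwarding."""
    parent = {}

    def find(node):
        # Follow parent pointers to the representative of the node's group.
        parent.setdefault(node, node)
        while parent[node] != node:
            parent[node] = parent[parent[node]]  # path halving
            node = parent[node]
        return node

    loop_links = []
    for a, b in forwarding_links:
        root_a, root_b = find(a), find(b)
        if root_a == root_b:
            # Both ends are already connected, so this link completes a loop.
            loop_links.append((a, b))
        else:
            parent[root_a] = root_b
    return loop_links


links = [("sw1", "sw2"), ("sw2", "sw3"), ("sw3", "sw1")]
print(find_loop_links(links))
```

Which link gets reported depends on the order in which the links are processed, so treat the result as a pointer to where the loop is rather than a verdict on which port to shut down.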

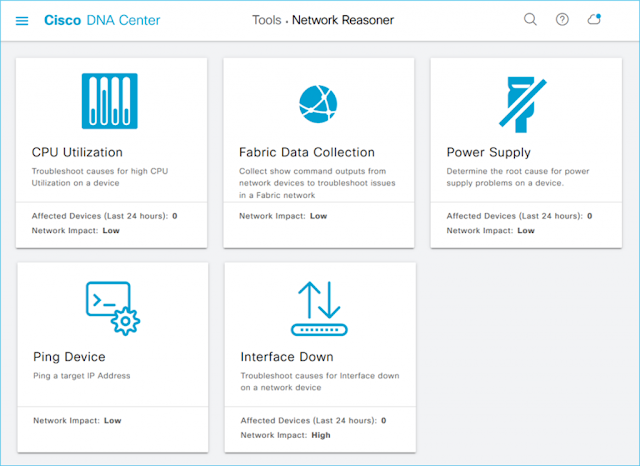

Cisco DNA Center delivers some incredible machine reasoning workflows with the addition of a powerful cloud-connected Machine Reasoning Engine (MRE). The solution offers two ways to experience the usefulness of this new MRE. The first is something many of you are already aware of, because it’s been part of our AI/ML insights in Cisco DNA Center for a while now: proactive insights. When Cisco DNA Center’s assurance engine flags an issue, it may decide to send the issue to the MRE for automated troubleshooting. If there is an MRE workflow to resolve the issue, you will be presented with a run button to execute that workflow. Since we’ve already mentioned STP loops, let’s take a look at how that would work.

When a broadcast storm is detected, AI/ML can look at the IP addresses and determine that it’s a good candidate for STP troubleshooting. You’ll get the following window when you click on the alert: