From OS to Industry-leading Software Stack

Cisco Internetwork Operating System (IOS) was developed in the 1980s for the company’s first routers, which had only 256 KB of memory and low CPU processing power. But what a difference a few decades make. Today IOS XE runs our entire enterprise portfolio: 80 different Cisco platforms for access, distribution, core, wireless, and WAN, with a myriad of combinations of hardware and software, forwarding, and physical and virtual form factors.

Many people still call Cisco IOS XE an operating system. But it’s more appropriately described as an enterprise networking software stack. At 190 million lines of code from Cisco—and more than 300 million lines of code when you include vendor software development kits (SDKs) and open-source libraries—IOS XE is comparable to stacks from Microsoft or Apple.

During the transition of IOS XE to encompass the entire enterprise networking portfolio, our global development team of more than 3000 software engineers introduced an average of four new products in every four-month release cycle. IOS XE now supports more than 20 different ASIC families developed by Cisco and other vendors. We develop over 700 new features per year. It’s a huge undertaking to get this done systematically. It requires the right development environment and software engineering practices that scale the team to the amount of code necessary for our product portfolio.

Here is a look back at how the IOS XE software stack was conceived and the continuous evolution of its capabilities, based on the work of the Polaris team. The team is tasked with providing the right development environment for the current portfolio and the evolving needs of the emerging new class of products.

IOS Origins

The early releases of IOS consisted of a single embedded development environment that included all the functionality required to build a product. Our success comes from managing the growth of functionality and from scaling configuration models, performance, and hardware support in a systematic, though embedded-systems-centric, manner.

In 2004, Cisco developers built IOS XE for the Cisco 1000 Series Aggregation Services Router (ASR 1000) family. IOS XE combined a Linux kernel and independent processes to achieve separation of the control plane from the data plane. With the new code and development model, we began the journey of moving to a database-centric programming model. Since the first shipment of the ASR 1000, every state update to the data path has gone into and out of the in-memory database.

In 2014, the IOS XE development team was put together to drive the software strategy for Enterprise Networking. The entire switching portfolio moved to IOS XE with the industry-leading Catalyst 9000 range of products. The pivot to evolving IOS XE into a distributed scale-out infrastructure relied on our deep experience with in-memory databases that offer database replication capabilities and a full, remotely accessible graph database. The elastic wireless controller 9800 represents the successful introduction of these new capabilities.

When the IOS XE development team was formed, there was a common misconception that small, low-end systems with tiny footprints couldn’t share the same software with very large-scale systems. We have successfully disproved that. IOS XE now runs on everything from tiny IoT routers to large modular systems. This is proving to be a significant strength as we move forward, since the ability to fit on small systems means improved efficiency that translates to better outcomes on larger systems. What started as a challenge is now a transformational strength.

Why is a Stack Important?

An OS is only a tiny part of the full functionality of a complete software development environment. The IOS XE enterprise networking software stack features deep integration of all layers, with conceptual and semantic integrity.

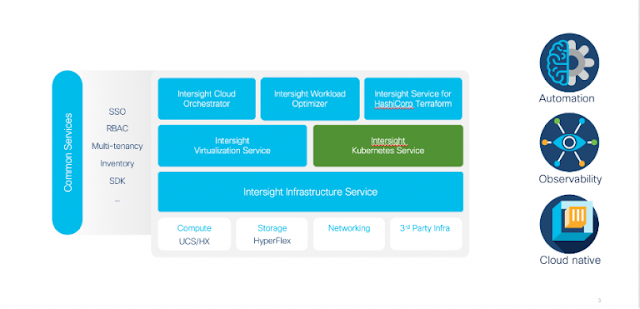

IOS XE software layers include application, software development language, middleware, managed runtime, graph database, transactional store, system libraries, drivers, and the Linux kernel. Our managed runtime enables common functionality to be rapidly and seamlessly deployed to a large amount of existing code. The goal of the development environment is to facilitate a cloud-native, single point of control and monitoring that operates at enterprise scale with fine-grained multi-tenancy everywhere.

The great value in having the same software is that you have the same software development model that all developers follow. This represents the internal SDK for Cisco Enterprise Networking software engineers. All of our standards-based APIs are a single, often automated translation away. The ability to get total system visibility and control is vital in the days ahead to get to a networking system that does not look like a set of independent point solutions.

What is IOS XE?

There are many types of systems that can be built by different competent teams attempting to solve the same problem. The guiding themes behind IOS XE include:

◉ Asynchronous end-to-end, because synchronous calls can be emulated, if necessary, but the reverse is not true. On low-footprint systems it is key to optimizing performance.

◉ Cooperative scheduled run-to-completion is how all IOS XE code functions. It utilizes our experience developing IOS to provide the most CPU-efficient choice and the best model for strongly IO-bound workloads.

◉ It’s a deterministic system, which makes the root cause of issues easier to find and fix and makes stateful process restart support easier to design.

◉ A lossless system, IOS XE depends on end-to-end backpressure rather than any loss of information in processing layers. Reasoning about how a system functions in the presence of loss is impossible.

◉ Its transactional nature produces a deep level of correctness across process restarts by reverting deterministically to a known stability point before a current inflight transaction started. This helps prevent fate sharing and crashes in other cooperating processes that work off the same database.

◉ Formal domain specific languages provide specifications that permit build-time and runtime checking.

◉ Closed-loop behavior provides resiliency by imposing positive feedback control on developed systems instead of depending on “fire and forget” open-loop behavior.
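To make the cooperative, run-to-completion, and lossless themes above more concrete, here is a minimal Python sketch. It is an illustration only: `BoundedQueue`, `run`, and the handler names are hypothetical and are not IOS XE APIs. It assumes a single thread on which each handler runs until it returns, and a bounded queue that pushes back on the producer instead of dropping items.

```python
from collections import deque


class BoundedQueue:
    """Hypothetical fixed-capacity queue that refuses items instead of dropping them."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = deque()

    def try_put(self, item):
        # Backpressure: a full queue tells the caller "not now"; nothing is lost.
        if len(self.items) >= self.capacity:
            return False
        self.items.append(item)
        return True

    def try_get(self):
        return self.items.popleft() if self.items else None


def run(producer_items, capacity=2):
    """Run a producer and a consumer cooperatively on one thread."""
    queue = BoundedQueue(capacity)
    pending = deque(producer_items)
    delivered = []
    stalls = 0

    def produce():
        nonlocal stalls
        # Run to completion: push until the work is done or the queue is full.
        while pending:
            if not queue.try_put(pending[0]):
                stalls += 1  # keep the item and retry on the next turn
                return
            pending.popleft()

    def consume():
        # Handle one item per turn, then return control to the scheduler.
        item = queue.try_get()
        if item is not None:
            delivered.append(item)

    # The scheduler alternates handlers; no handler is ever preempted.
    while pending or queue.items:
        produce()
        consume()
    return delivered, stalls
```

Calling `run(range(5), capacity=2)` delivers every item in order even though the producer is stalled several times, which is the property that end-to-end backpressure is meant to preserve.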

During the last seven years of development, the IOS XE team, via the Polaris project, has focused on the following areas.

Developing Our Own Managed Runtime Environment

The team has developed a managed runtime that essentially allows processes to run heap-less with state stored in the in-memory database. The Crimson toolchain provides language-integrated support for the internal data modeling domain-specific language (DSL), known as The Definition Language (TDL). The use of type inference facilitates a succinct human interface to generated code for messaging and database access. The toolchain integration with language extensions also enables the rapid addition of new capabilities to migrate existing code to meet new expectations. Deep support for a systematic move to increasing multi-tenancy requirements is part of this development environment.
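The idea of a process that keeps its state in a transactional in-memory store, rather than in its own heap, can be sketched as follows. This is a simplified illustration under stated assumptions, not the Crimson toolchain or TDL: `InMemoryStore`, `handle_route_add`, and the `"routes"` key are hypothetical names, and the store here is a plain dictionary copied per transaction.

```python
import copy


class InMemoryStore:
    """Hypothetical transactional key-value store; not the IOS XE database."""

    def __init__(self):
        self._committed = {}

    def read(self, key, default=None):
        return copy.deepcopy(self._committed.get(key, default))

    def transaction(self):
        return _Transaction(self)


class _Transaction:
    def __init__(self, store):
        self._store = store

    def __enter__(self):
        # Work on a private copy so partial updates are never visible.
        self.view = copy.deepcopy(self._store._committed)
        return self.view

    def __exit__(self, exc_type, exc, tb):
        if exc_type is None:
            self._store._committed = self.view  # commit in one step
        # On error the copy is discarded; the store stays at the last commit.
        return False  # let the exception propagate to the caller


def handle_route_add(store, prefix, next_hop):
    """A handler that keeps no state of its own between invocations."""
    with store.transaction() as state:
        routes = state.setdefault("routes", {})
        routes[prefix] = next_hop
        if next_hop is None:
            # Assumption for the demo: a missing next hop simulates a crash.
            raise ValueError("simulated crash mid-transaction")
```

If a handler fails partway through, the store still holds the last committed state, so a restarted handler (or another process sharing the store) resumes from a known stability point.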

Graph Query/Update Language

The Graph Execution Engine (GREEN) gives remote query and update capabilities to the graph database. It’s a formal way to interact natively using system software development kits (SDKs). All state transfer internally is fully automatic. Changes to state are efficiently tracked to allow incremental updates on persistent long-lived queries.
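A persistent query with incremental updates can be pictured with a small sketch. The `Graph` class and its `subscribe`/`upsert` methods are hypothetical and do not reflect the GREEN language or its SDKs; the sketch only assumes that a long-lived query is a predicate over node attributes and that subscribers receive deltas rather than full result sets.

```python
class Graph:
    """Toy graph store: nodes carry attributes; edges are omitted for brevity."""

    def __init__(self):
        self.nodes = {}
        self.subscriptions = []

    def subscribe(self, predicate, callback):
        # Register a long-lived query and deliver the initial matches as "add" deltas.
        self.subscriptions.append((predicate, callback))
        for node_id, attrs in self.nodes.items():
            if predicate(attrs):
                callback("add", node_id, dict(attrs))

    def upsert(self, node_id, **attrs):
        old = self.nodes.get(node_id)
        new = {**(old or {}), **attrs}
        self.nodes[node_id] = new
        for predicate, callback in self.subscriptions:
            was = old is not None and predicate(old)
            now = predicate(new)
            if now and not was:
                callback("add", node_id, dict(new))
            elif was and not now:
                callback("remove", node_id, None)
            elif now and new != old:
                callback("update", node_id, dict(new))
            # Unchanged or still non-matching nodes produce no traffic at all.
```

A subscriber interested in interfaces that are down might register `lambda a: a.get("oper_state") == "down"` and would then be notified only when an interface enters or leaves that set.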

Integrated Telemetry

The Polaris team has deeply integrated telemetry into the toolchain and managed runtime to avoid error-prone ad hoc telemetry. The separation of concerns between developers writing code and the automation of telemetry is vital to operate at Cisco scale. Standards-based telemetry is a one-level translation. Native telemetry runs at packet scale.

Graph State Distribution Framework

The Graph State Distribution Framework allows location independence to processing by separating the data from the processing software. It’s a big step towards moving IOS XE from a message-passing system to a distributed database system.
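One way to picture location independence is a replicated store in which the processing function depends only on the data it reads. The sketch below is an assumption-laden toy, not the framework itself: `ReplicatedStore`, `sync_from`, and `count_active_clients` are hypothetical names, replicas are read-only, and replication is a simple pull of an ordered change log.

```python
class ReplicatedStore:
    """Hypothetical store that replicates through an ordered change log."""

    def __init__(self):
        self.data = {}
        self.log = []      # ordered (key, value) changes; written on the primary only
        self.applied = 0   # number of log entries already applied locally

    def write(self, key, value):
        self.log.append((key, value))
        self._apply_pending(self.log)

    def sync_from(self, primary):
        # Pull only the changes this replica has not seen yet.
        self._apply_pending(primary.log)

    def _apply_pending(self, log):
        for key, value in log[self.applied:]:
            self.data[key] = value
        self.applied = len(log)


def count_active_clients(store):
    """Processing logic depends only on the data, not on where it runs."""
    return sum(1 for state in store.data.values() if state == "active")
```

Once a replica has synced, `count_active_clients` returns the same answer whether it runs next to the primary or next to the replica, which is the sense in which the data is separated from the processing.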

Compiler-integrated Patching

Compiler-integrated patching provides safe hot patching via the managed runtime. With script-generated Sev1/PSIRT patches, it offers a level of automation that makes hot patching available to every developer. The runtime application of patches does not require a restart.
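The general idea of applying a patch without a restart can be sketched with call-time indirection. This is a generic technique shown for illustration, not Cisco's compiler-integrated mechanism: `PatchableRuntime` and its methods are hypothetical, and the sketch assumes every patchable function is reached through a lookup table.

```python
class PatchableRuntime:
    """Hypothetical runtime that dispatches calls through a replaceable table."""

    def __init__(self):
        self._functions = {}
        self._previous = {}

    def register(self, name, func):
        self._functions[name] = func

    def call(self, name, *args):
        # Look up at call time so a patch takes effect on the very next call.
        return self._functions[name](*args)

    def apply_patch(self, name, func):
        # Keep the old implementation so the patch can be reverted.
        self._previous[name] = self._functions[name]
        self._functions[name] = func

    def revert_patch(self, name):
        self._functions[name] = self._previous.pop(name)


def validate_mtu_buggy(mtu):
    return mtu > 0                # accepts values that are far too large


def validate_mtu_fixed(mtu):
    return 68 <= mtu <= 9216      # bounds chosen for the demo only
```

Registering `validate_mtu_buggy` under a name and later calling `apply_patch` with `validate_mtu_fixed` changes the behavior of subsequent calls while the runtime object, and any state it holds, stays alive.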

With a software stack like the newest generation of IOS XE, developers can add functionality to separate application logic from infrastructure components. The distributed database provides location independence to our software. The completeness and fidelity of the entire software stack allows for a deeply integrated and efficient developer experience.

Source: cisco.com