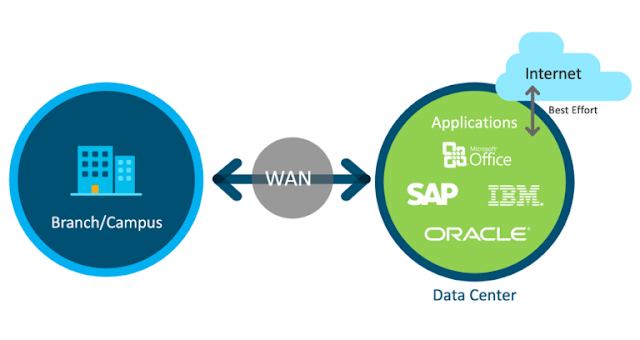

For the past 30 years, service providers have built networks using technology that was limited in terms of speed, cost, and performance. Historically, optical and routing platforms evolved independently, forcing operators to build networks using separate architectures and on different timelines. The result is a layered design that drives up both complexity and Total Cost of Ownership (TCO).

Today's multi-layer networks, made up of Dense Wavelength Division Multiplexing (DWDM) systems, Reconfigurable Optical Add-Drop Multiplexers (ROADMs), transponders, and routers, are held back by the constraints of the current technology generation and by head-spinning complexity. Additionally, operators face a highly competitive pricing environment in which Average Revenue Per User (ARPU) is flat while usage grows enormously. Improving TCO therefore requires re-evaluating the network architecture so that it can support massive scale in an economically and operationally viable way.

Here are a few factors that contribute to high network TCO:

◉ Redundant resiliency in each network layer leading to poor resource utilization

◉ Siloed infrastructure relying on large volumes of line cards for traffic handoff between layers

◉ High complexity due to multiple switching points and multiple control and management planes

◉ Layered architecture requiring manual service stitching across network domains, which hinders the end-to-end closed-loop automation needed for remediation and shorter lead times

But now we live in a different world, and we have technology that can address these problems and more. We envision a network that is simpler and much more cost-effective, where optical and IP networks can operate as one. Rather than having two different networks that need to be deployed and maintained with disparate operational support tools, we’re moving toward greater IP and optical integration within the network.

Advances in technology enabling a fully integrated routing and optical layer

Service provider profitability depends on advances in best-in-class network processing and coherent optical technology, which enable higher network capacities with lower power and space requirements. Coupled with software that provides robust automation and telemetry, these advances will simplify the network and lead to greater utilization and monetization. Applications like Multi-access Edge Computing (MEC), which require every operator office to become a data center, continue to increase the importance of delivering higher capacities.

We’ve made great strides across switching, Network Processing Unit (NPU) silicon, and digital coherent optics. Last year we introduced Cisco Silicon One, an exciting new switching and routing silicon that is used in the Cisco 8000 Series of devices and can also be purchased as merchant silicon.

Cisco Silicon One allows us to build switches and routers that can scale to hundreds of terabits, with that scalability optimized for 400G and 800G coherent optics.