Today, Cisco and Google Cloud are announcing their intent to develop the industry’s first application-centric multicloud networking fabric. This automated solution will allow applications and enterprise networks to share service-level agreement (SLA) settings, security policy, and compliance data, providing predictable application performance and a consistent user experience.

Our partnership will support the many businesses that are embracing a hybrid and multicloud strategy for its agility, scalability, and flexibility. The platform will enable businesses to optimize application stacks by placing each application component in its best location. For example, an application suite could run its front end on one public cloud to optimize for cost, an analytics library on another cloud to take advantage of its AI/ML capabilities, and a financial component on-premises for security and compliance.

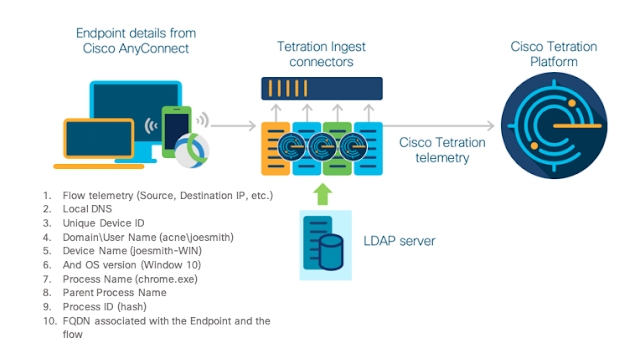

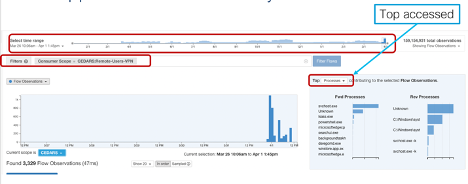

The network is the connective fabric for these modern enterprise apps and their distributed users. That fabric needs to discover the apps, identify their attributes, and adapt to deliver the best user experience. Likewise, applications need to respond to changing needs, and sometimes to enterprise-scale shifts in load, while maintaining availability, security, and compliance. They do so by correlating application, user, and network insights.

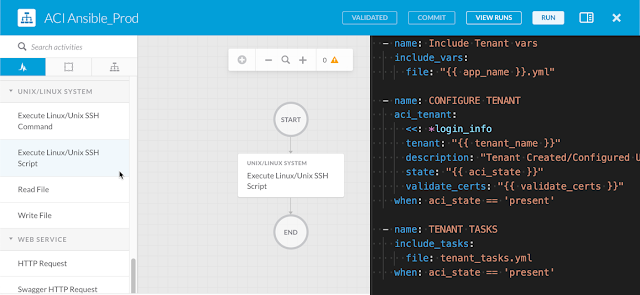

In our newly expanded partnership, Cisco and Google Cloud will build a close integration between Cisco SD-WAN solutions and Google Cloud. Network policies, such as segmentation, will follow network traffic across the boundary between the enterprise network and Google Cloud, for end-to-end control of security, performance, and quality of experience.

“Expansion of the Google-Cisco partnership represents a significant step forward for enterprises operating across hybrid and multi-cloud environments,” says Shailesh Shukla, Vice President of Products and General Manager, Networking at Google Cloud. “By linking Cisco’s SD-WAN with Google Cloud’s global network and Anthos, we can jointly provide customers a unique solution that automates, secures and optimizes the end to end network based on the application demands, simplifying hybrid deployments for enterprise organizations.”

With Cisco SD-WAN Cloud Hub with Google Cloud, the industry will, for the first time, have full integration between the WAN and cloud application workloads: the network will talk to apps, and apps will talk to the network. Critical security, policy, and performance information will be able to cross the boundaries between network, cloud, and application. This integration will extend into hybrid and multicloud environments built on Anthos, Google Cloud’s open application platform, supporting the optimization of distributed, multicloud, microservice-based applications.

Towards Stronger Multicloud Apps

Today, applications have no way to dynamically signal SLA requirements to the underlying network. With this new integration, applications will be able to request the network resources they need by publishing application data in Google Cloud Service Directory. The network will then use this data to provision itself with the appropriate SD-WAN policies.
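The announcement does not say what format this application data will take. Purely as an illustration, the Python sketch below assumes that an application records its SLA needs as string key-value annotations on its Service Directory entry. The annotation keys (`sla.max-latency-ms`, `sla.min-bandwidth-mbps`), the policy tiers, and the thresholds are all hypothetical. The sketch shows how a network controller might translate those annotations into an SD-WAN policy choice. It is not the Cisco or Google implementation.

```python
from dataclasses import dataclass
from typing import Mapping, Optional

# Hypothetical annotation keys; the announcement does not define a schema.
LATENCY_KEY = "sla.max-latency-ms"
BANDWIDTH_KEY = "sla.min-bandwidth-mbps"


@dataclass(frozen=True)
class SdwanPolicy:
    """Illustrative SD-WAN policy derived from a service's published SLA."""
    name: str
    max_latency_ms: Optional[int]
    min_bandwidth_mbps: Optional[int]


def _int_or_none(value: Optional[str]) -> Optional[int]:
    # Annotation values are strings, so numeric SLAs must be parsed.
    return int(value) if value is not None else None


def policy_from_annotations(service: str, annotations: Mapping[str, str]) -> SdwanPolicy:
    """Map a service's SLA annotations to a hypothetical SD-WAN policy tier."""
    max_latency = _int_or_none(annotations.get(LATENCY_KEY))
    min_bandwidth = _int_or_none(annotations.get(BANDWIDTH_KEY))

    # Hypothetical tiering: a tight latency budget wins over a bandwidth floor.
    if max_latency is not None and max_latency <= 50:
        tier = "low-latency"
    elif min_bandwidth is not None and min_bandwidth >= 100:
        tier = "high-bandwidth"
    else:
        tier = "default"
    return SdwanPolicy(f"{service}-{tier}", max_latency, min_bandwidth)


if __name__ == "__main__":
    # A business-critical app that advertises a 30 ms latency budget.
    policy = policy_from_annotations("payments", {LATENCY_KEY: "30"})
    print(policy.name)  # payments-low-latency
```

Services that publish no SLA annotations fall through to a default tier. In this sketch, a network that does not understand an application's request simply keeps treating it as ordinary traffic.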

For example, a business-critical application that needs low latency would record that requirement in its Google Cloud Service Directory entry, and the appropriate SD-WAN policies would then be applied on the network. Likewise, as Cisco’s SD-WAN controller, vManage, monitors network performance and service health metrics, it could intelligently direct user requests to the best-performing cloud service nodes.
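The announcement does not say how vManage would rank cloud service nodes. The Python sketch below is only an illustration of the general idea under stated assumptions. It assumes that each node reports a latency, a packet-loss percentage, and a health flag, and it uses a simple selection rule: among healthy nodes within a loss budget, choose the one with the lowest latency. The `NodeMetrics` type, the `best_node` function, the 1% default loss budget, and the sample values are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class NodeMetrics:
    """Hypothetical per-node measurements a controller might collect."""
    node: str
    latency_ms: float
    loss_pct: float
    healthy: bool


def best_node(candidates: Sequence[NodeMetrics], max_loss_pct: float = 1.0) -> Optional[str]:
    """Return the healthy node with the lowest latency, or None if none qualify."""
    # Drop nodes that fail health checks or exceed the packet-loss budget.
    usable = [m for m in candidates if m.healthy and m.loss_pct <= max_loss_pct]
    if not usable:
        return None
    # Prefer lower latency; break ties on lower loss.
    return min(usable, key=lambda m: (m.latency_ms, m.loss_pct)).node


if __name__ == "__main__":
    nodes = [
        NodeMetrics("us-east1", latency_ms=42.0, loss_pct=0.2, healthy=True),
        NodeMetrics("us-central1", latency_ms=35.0, loss_pct=2.5, healthy=True),
        NodeMetrics("us-west1", latency_ms=60.0, loss_pct=0.1, healthy=True),
    ]
    print(best_node(nodes))  # us-east1 (us-central1 exceeds the loss budget)
```

The sketch filters before it ranks, so a node with the lowest latency but unacceptable loss is never chosen. A production controller would likely weigh many more signals.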

Cisco SD-WAN can also proactively provide network insights to a distributed system such as Anthos to help keep applications available. For example, based on network reachability metrics from Cisco SD-WAN, Anthos can make real-time decisions to divert traffic to regions with better reachability.
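The announcement does not specify how Anthos would act on reachability metrics. The following Python sketch shows one plausible policy, with every detail assumed. Each region gets a reachability score between 0 and 1. Regions below a cutoff receive no traffic, and the remaining regions share traffic in proportion to their scores, rounded to whole percentage points. The function name `traffic_weights`, the 0.5 cutoff, and the scores are all hypothetical.

```python
def traffic_weights(reachability: dict[str, float], min_score: float = 0.5) -> dict[str, int]:
    """Split 100% of traffic across regions in proportion to reachability scores."""
    # Regions below the (hypothetical) cutoff are drained entirely.
    eligible = {region: s for region, s in reachability.items() if s >= min_score}
    if not eligible:
        raise ValueError("no region meets the reachability threshold")

    total = sum(eligible.values())
    # Floor each proportional share to a whole percentage point.
    weights = {region: int(100 * s / total) for region, s in eligible.items()}

    # Flooring loses less than one point per region, so hand the leftover
    # points to the best-reachability regions to make the total exactly 100.
    leftover = 100 - sum(weights.values())
    for region in sorted(eligible, key=eligible.get, reverse=True)[:leftover]:
        weights[region] += 1
    return weights


if __name__ == "__main__":
    scores = {"us-east1": 0.9, "europe-west1": 0.6, "asia-east1": 0.3}
    print(traffic_weights(scores))  # {'us-east1': 60, 'europe-west1': 40}
```

Proportional weighting shifts traffic gradually as reachability degrades. The cutoff ensures that traffic is diverted completely away from a region that is effectively unreachable.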

With Cisco SD-WAN Cloud Hub with Google Cloud, customers can extend the single point of orchestration and management for their SD-WAN network to include the underlay provided by the Google Cloud backbone. Together with Cisco SD-WAN, Google Cloud’s reliable global network gives enterprise customers operational efficiency and the agility to scale up bandwidth.

This integration will promote stronger security and compliance for enterprises. Using Cisco SD-WAN Cloud Hub, policies can be extended into the cloud to authorize access to cloud applications based on user identity. Any device, including IoT devices, will be able to comply with enterprise policy across the network and application ecosystem.
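As a rough illustration of identity-based access combined with segmentation, the Python sketch below checks a request against a small policy table. A request is allowed only if the user or device comes from the segment that the policy assigns to the application and belongs to one of the groups that the policy permits. Anything without a rule is denied. The announcement does not describe Cloud Hub's policy model, so the `AccessRule`/`AccessRequest` types, the group and segment names, and the default-deny choice are assumptions made for this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRule:
    """Hypothetical rule: which segment an app lives in and which groups may reach it."""
    segment: str
    groups: frozenset[str]


@dataclass(frozen=True)
class AccessRequest:
    """Identity and network context for one access attempt (user or IoT device)."""
    identity_groups: frozenset[str]
    source_segment: str
    app: str


# Hypothetical enterprise policy table, keyed by application name.
POLICY = {
    "payroll": AccessRule(segment="finance", groups=frozenset({"finance-staff"})),
    "sensor-ingest": AccessRule(segment="iot", groups=frozenset({"iot-devices"})),
}


def is_allowed(req: AccessRequest) -> bool:
    """Allow only if the segment matches and the identity is in a permitted group."""
    rule = POLICY.get(req.app)
    if rule is None:
        return False  # default deny for apps without a rule
    return req.source_segment == rule.segment and bool(req.identity_groups & rule.groups)


if __name__ == "__main__":
    sensor = AccessRequest(frozenset({"iot-devices"}), "iot", "sensor-ingest")
    print(is_allowed(sensor))  # True
```

Devices and users use the same check, which mirrors the idea that IoT devices are held to the same enterprise policy as people.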

The partnership to create the Cisco SD-WAN Cloud Hub with Google Cloud will lead to applications with greater availability, improved user experience, and more robust policy management.

A Partnership Solution for Tomorrow’s Networks

For enterprise customers who deploy applications in Google Cloud and multicloud environments, Cisco SD-WAN Cloud Hub with Google Cloud offers a faster, smarter, and more secure way to connect to and consume those applications. The Cloud Hub will increase the availability of enterprise applications by intelligently discovering their SLAs and implementing them through dynamically orchestrated SD-WAN policy. The platform will decrease risk and improve compliance by offering end-to-end policy-based access and enhanced segmentation from users to applications across the hybrid multicloud environment.

Cisco and Google Cloud intend to invite select customers to participate in previews of this solution by the end of 2020. General availability is planned for the first half of 2021.
