Organizations are constantly on the lookout for specialists who can competently work with core technologies. That's why demand is surging for professionals who can demonstrate they have the right skills. If you want to benefit from these opportunities, you need to follow the proper approach: training, taking exams, and earning certifications. Cisco offers a well-structured path, with certifications at several levels: entry, associate, professional, expert, and architect. To earn any of them, you need to pass an exam, and this article is dedicated to the Cisco 350-401 ENCOR exam. We'd like you to know the essentials of this exam and how you can prepare for it effectively.

Wednesday, 20 January 2021

Desktops in the Data Center: Establishing ground rules for VDI

Since the earliest days of computing, we’ve endeavored to provide users with efficient, secure access to the critical applications which power the business.

From those early mainframe applications being accessed from hard-wired dumb terminals to the modern cloud-based application architectures of today, accessible to any user, from anywhere, on any device, we’ve witnessed the changing technology landscape deliver monumental gains in user productivity and flexibility. With today’s workforce being increasingly remote, the delivery of secure, remote access to corporate IT resources and applications is more important than ever.

Although the remote access VPN has been dutifully providing secure, remote access for many years now, the advantages of centrally administering and securing the user desktop through Virtual Desktop Infrastructure (VDI) are driving rapid growth in adoption. With options including hosting of the virtual desktop directly in the data center as VDI or in the public cloud as Desktop-as-a-Service (DaaS), organizations can quickly scale the environment to meet business demand in a rapidly changing world.

Allowing users to access a managed desktop instance from any personal laptop or mobile device, with direct access to their applications, provides cost efficiencies and great flexibility with lower bandwidth consumption… and it’s more secure, right? Well, not so fast!

Considering the Risks

Although VDI addresses some of the key challenges in enabling a remote workforce, it introduces a whole new set of considerations for IT security. After all, we’ve spent years keeping users OUT of the data center… and now, with VDI, the user desktop itself resides on a virtual machine hosted directly inside the data center or cloud, right inside the perimeter security that is there to protect the organization’s most critical assets: the data!

This raises some important questions around how we can secure these environments and address some of these new risks.

◉ Who is connecting remotely to the virtual desktop?

◉ Which applications are being accessed from the virtual desktops?

◉ Can virtual desktops communicate with each other?

◉ What else can the virtual desktop gain access to outside of traditional apps?

◉ Can the virtual desktop in any way open a reverse tunnel or proxy out to the Internet?

◉ What is the security posture of the remote user device?

◉ If the remote device is infected by virus or malware, is there any possible way that might infect the virtual desktop?

◉ If the virtual desktop itself is infected by a virus or malware, could an attacker access or infect other desktops, application servers, databases, etc.? Are you sure?

With VDI solutions today ranging from traditional on-premises offerings from Citrix and VMware to cloud-delivered services such as Windows Virtual Desktop on Azure and Amazon WorkSpaces on AWS, there are differing approaches to delivering a common foundation for secure authentication, transport, and endpoint control. What is lacking, however, is the ability to address some of the key fundamentals of a Zero Trust approach to user and application security across the multiple environments and vendors that make up most IT landscapes today.

How can Cisco Secure Workload (Tetration) help?

Cisco Secure Workload (Tetration) provides zero trust segmentation for VDI endpoints AND applications. Founded on a least-privilege access model, it allows the administrator to centrally define and enforce a dynamic segmentation policy for each and every desktop instance and application workload. Because it requires no infrastructure changes and supports any data center or cloud environment, it offers a more flexible, scalable approach to addressing critical security concerns, today!

Establishing Control for Virtual Desktops

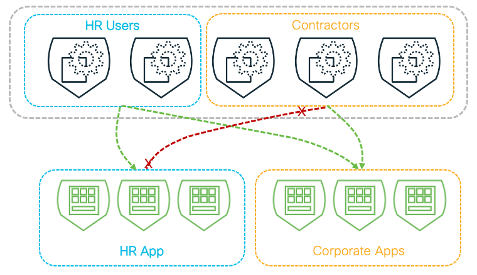

With Secure Workload, administrators can enforce a dynamic allow-list policy that lets users access a defined set of applications and resources while restricting all other connectivity. Virtual desktops are typically connected to a shared virtual network, which leaves a wide-open attack surface for lateral movement or malware propagation. This policy therefore provides an immediate benefit by restricting desktop-to-desktop communication.

This flexible policy allows rules to be defined based on context, whether identifying a specific desktop group/pool, application workloads or vulnerable machines, providing simplicity in administration and the flexibility to adapt to a changing environment without further modification.

◉ Do your VDI instances really need to communicate with one another?

With a single policy rule, Secure Workload can enforce a desktop isolation policy to restrict communication between desktop instances without impacting critical services and application access. This simple step will immediately block malware propagation and restrict visibility and lateral movement between desktops.
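The following minimal Python sketch makes the allow-list idea concrete. It models label-based, default-deny policy evaluation with a single desktop-isolation rule. It is a hypothetical model for illustration only, not Secure Workload's policy engine or API. The `Workload` and `Rule` classes, the labels (`vdi`, `app:crm`, `svc:dns`), the first-match ordering and the ports are all assumptions.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional

@dataclass(frozen=True)
class Workload:
    name: str
    labels: FrozenSet[str]  # hypothetical context labels, e.g. {"vdi", "pool:finance"}

@dataclass(frozen=True)
class Rule:
    action: str                  # "allow" or "deny"
    src_label: str               # rules match on labels, never on IP addresses
    dst_label: str
    port: Optional[int] = None   # None matches any destination port

def decide(src: Workload, dst: Workload, port: int, rules: List[Rule]) -> str:
    """First matching rule wins; anything unmatched is denied (allow-list model)."""
    for rule in rules:
        if (rule.src_label in src.labels and rule.dst_label in dst.labels
                and (rule.port is None or rule.port == port)):
            return rule.action
    return "deny"

# Hypothetical policy: one isolation rule plus the applications desktops may use.
POLICY = [
    Rule("deny", "vdi", "vdi"),            # desktop isolation for the whole pool
    Rule("allow", "vdi", "app:crm", 443),  # CRM front end over HTTPS
    Rule("allow", "vdi", "svc:dns", 53),   # internal DNS
]

desk1 = Workload("desk-001", frozenset({"vdi", "pool:finance"}))
desk2 = Workload("desk-002", frozenset({"vdi", "pool:finance"}))
crm = Workload("crm-web", frozenset({"app:crm"}))

print(decide(desk1, desk2, 445, POLICY))  # deny  (desktop to desktop)
print(decide(desk1, crm, 443, POLICY))    # allow
print(decide(desk1, crm, 22, POLICY))     # deny  (not on the allow-list)
```

Rules match on labels rather than addresses. A newly provisioned desktop tagged `vdi` therefore picks up both the isolation rule and its permitted applications with no policy change. This is the context-based behavior the section describes.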

Tuesday, 19 January 2021

Bringing Office-Quality Connectivity to Home Teleworkers with SD-WAN

Most likely you are reading this Cisco blog post from your home office, just as I wrote it from mine. Whereas, at least in the States, it used to be “there’s no place like home,” now it’s more like “no place but home” as we weather the new normal of isolation and lockdowns to stay safe.

Working from home has taken on a whole new meaning as we spend our days on video meetings with colleagues, accessing corporate data center resources via VPN, and using direct internet access to cloud and SaaS applications. Our home Wi-Fi is overloaded with internet-dependent work, school, and play, causing more than a few eye-rolls as application performance slows to a crawl and video conversations are peppered with static. What’s a corporate warrior to do to keep up with the streaming workload and stay in good spirits?

While individuals have limited options to speed up their home office connectivity, IT can step in to provide enterprise-grade services to high-value workers for whom every minute with clients, customers, and coworkers counts. These professionals perform a wide variety of business-critical functions. Think of a brokerage advisor recommending trade strategies for clients, a telemedicine physician monitoring an elderly patient, or a CX representative walking a customer through a complex installation procedure. The high-value workforce needs superior connectivity that makes working at home just as fluid as being in the office, with consistent connectivity and performance. What was once “good enough” for occasional evening and weekend work-at-home stints is no longer adequate.

Beyond consistent and reliable connectivity, professional teleworkers need the ability to use personal devices—laptops, phones, and tablets—to securely access data and applications to keep their workflow fluid. On top of all that, they need peace of mind provided by enterprise-grade security that is easy to use and practically invisible to daily work routines.

When tasked with upgrading high-value workers’ home offices, IT needs a solution that provides:

◉ Centralized policy management and zero-touch provisioning to bring thousands of remote offices online quickly.

◉ Monitoring of Quality of Service (QoS) and remotely troubleshooting connection reliability to enhance application experience from non-standardized home internet connections and Wi-Fi to cloud and SaaS resources.

◉ Centralized, cloud-delivered, multi-layer security, including DNS/URL filtering, Application Aware Firewall, Intrusion Prevention System, and Advanced Malware Protection, to protect sensitive traffic on its round trip from home office to cloud or data center and back.

◉ Ability to automatically detect and identify devices connecting to the home office network and apply segmentation policies that control access permissions and prevent infections from spreading from home offices to corporate resources.

With these capabilities, IT can provide an office-like experience to high-value workers while keeping enterprise assets secure. SD-WAN application-aware routing identifies the best routes for traffic to reach SaaS applications, even as those routes change dynamically during the workday. A split-tunnel configuration over a single WAN interface, or a second WAN interface over LTE, provides redundant connectivity. IT can continuously monitor edge-to-SaaS performance on both direct internet access (DIA) and backhaul paths to ensure appropriate application Quality of Experience and consistent connectivity.
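The following Python sketch illustrates the general idea behind application-aware path selection. Each path's measured loss, latency and jitter is compared against an application's SLA class, and the best compliant path is chosen. This is a hypothetical simplification, not Cisco SD-WAN's actual algorithm or configuration model. The path names, measurements, SLA thresholds and tie-breaking choices are assumptions.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class PathStats:
    name: str
    loss_pct: float
    latency_ms: float
    jitter_ms: float

@dataclass
class SlaClass:
    max_loss_pct: float
    max_latency_ms: float
    max_jitter_ms: float

def meets(p: PathStats, sla: SlaClass) -> bool:
    """True if the path's current measurements satisfy every SLA threshold."""
    return (p.loss_pct <= sla.max_loss_pct and p.latency_ms <= sla.max_latency_ms
            and p.jitter_ms <= sla.max_jitter_ms)

def choose_path(paths: List[PathStats], sla: SlaClass) -> str:
    compliant = [p for p in paths if meets(p, sla)]
    if compliant:
        # Among SLA-compliant paths, prefer the lowest latency.
        return min(compliant, key=lambda p: p.latency_ms).name
    # No path meets the SLA: fall back to the path with the least loss.
    return min(paths, key=lambda p: (p.loss_pct, p.latency_ms)).name

# Hypothetical thresholds and measurements for a home office.
VOICE = SlaClass(max_loss_pct=1.0, max_latency_ms=150, max_jitter_ms=30)
paths = [
    PathStats("dia-broadband", loss_pct=0.5, latency_ms=35, jitter_ms=8),
    PathStats("lte", loss_pct=0.1, latency_ms=60, jitter_ms=12),
    PathStats("dc-backhaul", loss_pct=0.2, latency_ms=80, jitter_ms=5),
]
print(choose_path(paths, VOICE))  # dia-broadband

paths[0].loss_pct = 5.0           # evening congestion on the home broadband link
print(choose_path(paths, VOICE))  # lte
```

The selection is re-run as measurements change. This is what lets traffic move off a degraded broadband link during the workday without user intervention.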

An All-in-One Secure Routing Solution for Teleworkers

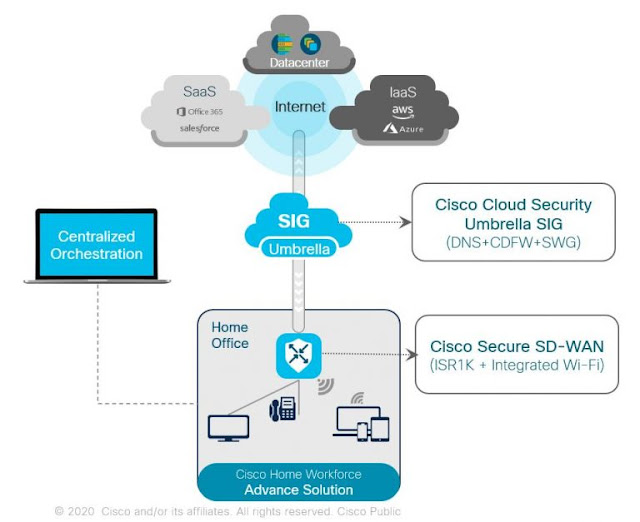

From a teleworker’s point of view, an ideal at-home connectivity solution provides an enhanced experience when accessing cloud or data center applications directly. Performance-optimized cloud on-ramps deliver access to popular SaaS application suites, such as Office 365, and to public cloud resources. A Secure Internet Gateway protects the remote worker against advanced persistent threats emanating from spoofed sites on the open internet.

The professional’s home office solution starts with an enterprise-grade router with integrated Wi-Fi that delivers a zero-trust fabric with end-to-end segmentation and always-on network connectivity. For areas with unreliable broadband connections, a Gb Ethernet speed LTE Advanced Pro or a 4G plug-in module provides backup or acts as the main direct internet connection.

The at-home system is capable of zero-touch provisioning, with remote NetOps teams able to detect, provision, and monitor the home network from anywhere. The remote worker simply plugs the router into power and a broadband internet connection or relies on the LTE/5G broadband cellular connection. The router automatically searches for the plug and play controller and downloads enterprise policies and segmentation rules before securely joining the corporate WAN. After that, the office worker connects to the permitted enterprise and cloud resources as if they were working on campus, guarded by the same security protection and access policies.

Security is Even More Critical for Remote Offices

It is Possible to Have the Best of Both Worlds at Home

Monday, 18 January 2021

Cisco DNA Center and Cisco Umbrella: Automate your journey towards DNS Security

Introducing Cisco DNA Center Integration with Umbrella

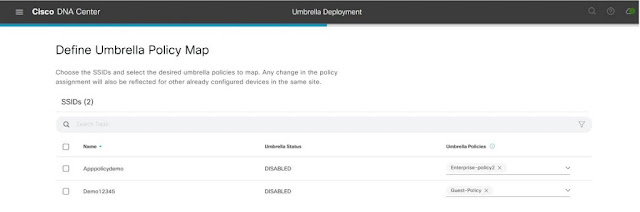

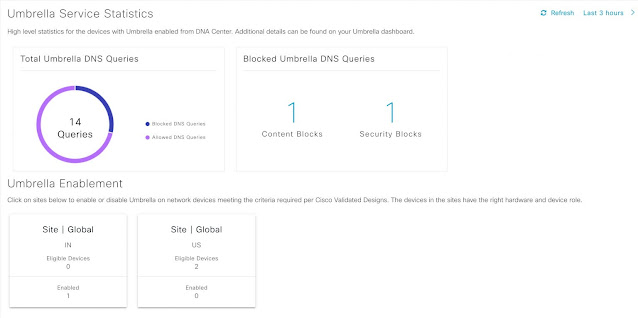

Cisco Umbrella provides the first line of defense against threats on the internet wherever users go. Umbrella delivers complete visibility into internet activity across all locations, devices, and users, and blocks threats before they ever reach your network or endpoints. Cisco Umbrella helps secure traffic using its cloud-delivered Secure Internet Gateway (SIG). In this blog, we will look at how integrating Cisco Umbrella with Cisco DNA Center helps automate and secure WLANs, providing maximum visibility and granularity using the network infrastructure.

Friday, 15 January 2021

Cisco’s Data Cloud Transformation: Moving from Hadoop On-Premises Architecture to Snowflake and GCP

The world is seeing an explosion of data growth. There are countless data-generating devices, digitized video and audio content, and embedded devices such as RFID tags and smart vehicles that have become our new global norm. Cisco is experiencing this dramatic shift as more data sources are being ingested into our enterprise platforms and business models are evolving to harness the power of data, driving Cisco’s growth across Marketing, Customer Experience, Supply Chain, Customer Partner Services and more.

Enterprise Data Growth Impact on Cisco

Enterprise data at Cisco has also grown over the years, with the size of legacy on-premises platforms having grown 5x over the past five years alone. The appetite and demand for data-driven insights have also grown exponentially as Cisco realized the potential of driving growth and business outcomes with insights from data, revealing new business levers and opportunities.
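The following sketch puts "5x over the past five years" in annual terms by computing the implied compound annual growth rate. It assumes growth compounded evenly from year to year, which the source does not state. The figure is an average implied by the two endpoints, not a reported yearly number.

```python
def implied_annual_growth(multiple: float, years: float) -> float:
    """Compound annual growth rate that turns 1 unit into `multiple` units over `years`."""
    return multiple ** (1 / years) - 1

rate = implied_annual_growth(5, 5)
print(f"{rate:.1%}")  # 38.0%
```

In other words, a platform that grows 5x in five years is growing by roughly 38% every year on average.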

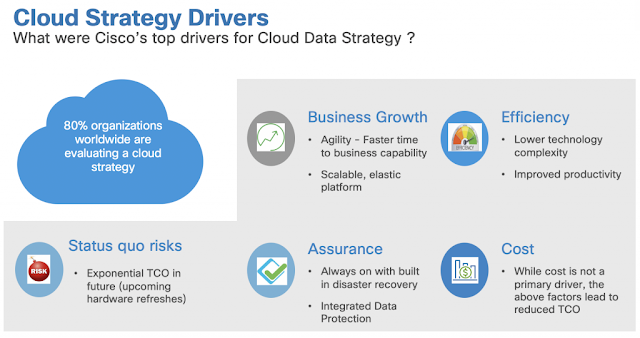

Cloud Data Transformation Drivers

When Cisco started its migration journey several years ago, its data warehouse footprint was entirely on-premises. With the business pivoting toward an accelerated data-to-insights cycle and demand for analytics exploding, it quickly became apparent that some of the existing technologies would not allow us to scale to meet data demands.

Why Snowflake and GCP?

Journey and key success factors

Results observed and testimonials

Wednesday, 13 January 2021

Evolving Threat Landscapes: Learning from the SolarWinds Breach

During 2020 we saw a huge expansion and adoption of online services precipitated by a global pandemic. By all accounts, a good proportion of these changes will become permanent, resulting in greater reliance on resilient, secure services to support activities from online banking and telemedicine to e-commerce, curbside pickup, and home delivery of everything from groceries to apparel and electronics.

While this blog typically focuses on topics specific to financial services, the growth of online services has brought with it new and expanding operational risks. These risks can impact not just a particular entity or industry; they are a serious concern for all private and public industries alike. Recently we witnessed just how serious and threatening one particular risk, the compromise of a widely used supply chain, can be. When we think about supply chain attacks, we tend to conjure up an image of grocery or pharmaceutical products being deliberately contaminated. Or we picture some other physical threat against the things we buy or the components that collectively become a finished product. The recent SolarWinds breach has starkly highlighted, to a much broader audience, the threat posed to our digital products. It has also shown the truly frightening cascade effect a single breach can have across the digital supply chain of every industry and, in turn, on their end customers. When we embrace a technology or platform and deploy it on-premises, any threat associated with it is now inside our environment, frequently with administrative rights. Although the threat actors may be external to the company, the threat vector is internal. Essentially, it has become an insider threat that is unfettered by perimeter defenses and, if not contained, may move unchecked within the organization.

To illustrate, consider the potential risk to a software solutions provider compromised by a digital supply chain attack. Unlike most physical supply chain attacks, the compromised systems are not tied to a downstream product. The risk of lateral movement in the digital realm once inside perimeter defenses is far greater: in a worst-case scenario, malicious actors could gain access to the source code for multiple products. Viewing the inner workings of an application may reveal undisclosed vulnerabilities and create opportunities for future malicious activity. In extreme cases, it may allow an attacker to modify the source code. This in itself represents a potential future supply chain compromise. The entities that have potentially been breached through their use of SolarWinds include both private and public sector organizations. While neither sector relies on SolarWinds directly for its business activities, the nature of a supply chain compromise has exposed them to the possibility that one breach can more easily beget another.

What should private and public institutions do to protect themselves? When we examine organizational risk, we primarily ask two questions. How can we reduce the probability of a successful attack? And how do we mitigate the damage should an attack succeed?

Preparing the environment

◉ Identify what constitutes appropriate access in the environment – which systems, networks, roles, groups or individuals need access to what and to what degree?

◉ Baseline the environment – ensure we know what “normal” operation looks like so we can identify “abnormal” behavior in the environment.

◉ Ensure appropriate staffing levels, define team and individual roles and responsibilities, and ensure staff are trained appropriately. No amount of technology will prevent a breach if staff are not adequately trained or processes break down.

◉ Implement the tools & processes mentioned in later sections. Test the staff, tools & processes regularly – once an attack is underway, it’s too late.
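Baselining "normal" behavior can start very simply. The following hypothetical Python sketch keeps a per-host mean and standard deviation of one metric, such as daily outbound connection counts. It then flags hosts whose value today is more than a chosen number of standard deviations away, or hosts that have no history at all. The metric, host names, numbers and the 3.0 threshold are assumptions. Production tooling uses far richer models.

```python
import statistics
from typing import Dict, List, Tuple

Baseline = Dict[str, Tuple[float, float]]  # host -> (mean, population std dev)

def build_baseline(history: Dict[str, List[float]]) -> Baseline:
    """Summarize each host's historical values of a single metric."""
    return {host: (statistics.mean(vals), statistics.pstdev(vals))
            for host, vals in history.items()}

def flag_anomalies(baseline: Baseline, today: Dict[str, float],
                   threshold: float = 3.0) -> List[str]:
    flagged = []
    for host, value in today.items():
        if host not in baseline:
            flagged.append(host)  # a host with no history is itself abnormal
            continue
        mean, std = baseline[host]
        if std == 0:
            deviates = value != mean  # flat history: any change is unusual
        else:
            deviates = abs(value - mean) / std > threshold
        if deviates:
            flagged.append(host)
    return flagged

# Hypothetical daily outbound connection counts.
history = {
    "build-srv": [120, 130, 125, 118, 127],
    "hr-laptop": [40, 42, 38, 41, 39],
}
baseline = build_baseline(history)
print(flag_anomalies(baseline, {"build-srv": 128, "hr-laptop": 400, "unknown-vm": 5}))
# ['hr-laptop', 'unknown-vm']
```

The value of the baseline comes from the preparation step itself. Without a recorded notion of "normal," there is nothing to compare today's behavior against.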

Reducing the probability

◉ Ensure users are who they claim to be, and employ a least privilege approach, meaning their access is appropriate for their role and no more. This can be accomplished by deploying Multi-Factor Authentication (MFA) and a Zero-Trust model, which means that if you are not granted access, you do not have implicit or inherited access.

◉ Enforce that only validated secure traffic can enter, exit or traverse your environment, including to cloud providers, by leveraging NextGen Firewalls (NGFW), Intrusion Prevention/Detection Systems (IPS/IDS), DNS validation and Threat Intelligence information to proactively safeguard against known malicious actors and resources, to name a few.

◉ For developers, implement code validation and reviews to ensure that the code in the repository is the same code that was developed and checked into the repository and enforce access controls to the repository and compilation resources.
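The following minimal Python sketch shows the core of the code-validation bullet: confirming that the code about to be built is byte-for-byte what was reviewed and checked in. A SHA-256 digest is recorded at review time and compared before compilation. The function names and sample source are hypothetical. A real pipeline would also need to protect the recorded digest itself, for example by signing it, which is not shown here.

```python
import hashlib
import hmac

def sha256_of(source: bytes) -> str:
    """Hex SHA-256 digest of the exact bytes that were reviewed and checked in."""
    return hashlib.sha256(source).hexdigest()

def verify_before_build(source: bytes, recorded_digest: str) -> bool:
    """True only if the bytes about to be compiled match the recorded digest."""
    # compare_digest runs in constant time, so timing does not reveal partial matches.
    return hmac.compare_digest(sha256_of(source), recorded_digest)

reviewed = b"def transfer(amount, account):\n    ledger.post(amount, account)\n"
recorded = sha256_of(reviewed)                   # stored at review/check-in time

tampered = reviewed + b"exfiltrate(ledger)\n"    # hypothetical injection after review
print(verify_before_build(reviewed, recorded))   # True
print(verify_before_build(tampered, recorded))   # False
```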

Reducing the impact

Former Cisco Chairman John Chambers famously said, “There are two types of companies: those that have been hacked, and those who don’t know they have been hacked”. You can attempt to reduce the probability of a successful attack; however, the probability will never be zero. Successful breaches are inevitable, and we should plan accordingly. Many of the mechanisms are common to our efforts to reduce the probability of a successful attack and must be in place before an attack. To reduce the impact of a breach, we must reduce the amount of time an attacker is in the environment and limit the scope of the attack, such as the value or criticality of what is exposed. According to IBM, the average time to detect and contain a breach in 2019 was 280 days, at an average cost of $3.92m. Reducing that exposure to 200 days could save $1m in breach-related costs.

◉ A least privilege or zero-trust model may prevent an attacker from gaining access to the data they seek. This is particularly true for third-party tools that provide limited visibility into their inner workings and that may have access to mission-critical systems.

◉ Appropriate segmentation of the network should keep an attacker from traversing the network in search of data and/or from using systems to mount pivot attacks.

◉ Automated detection of, and response to, a breach is critical to reducing the time to detect. The longer an attacker is in the environment the more damage and loss can occur.

◉ Encrypt traffic on the network while maintaining visibility into that traffic.

◉ Ensure the capability to retrospectively track where an attacker has been to better remediate vulnerabilities and determine their original attack vector.
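Retrospective tracking depends on having recorded who talked to whom and when. The following hypothetical Python sketch assumes connection records of the form `(timestamp, source, destination)` are available. The record format, host names and timestamps are invented. The code walks the records forward in time from a known compromised host and lists every host it could have reached, along with the earliest possible contact time.

```python
from typing import Dict, List, Tuple

# Hypothetical connection record: (timestamp, source_host, destination_host)
Flow = Tuple[int, str, str]

def trace_spread(flows: List[Flow], patient_zero: str, compromised_at: int) -> Dict[str, int]:
    """Hosts reachable from patient_zero through time-respecting flows, with first-contact time.

    Records are processed in time order, so a host can only pass the compromise on
    after it was itself reached. Records sharing a timestamp are taken in sorted order.
    """
    reached = {patient_zero: compromised_at}
    for ts, src, dst in sorted(flows):
        if src in reached and ts >= reached[src] and dst not in reached:
            reached[dst] = ts
    return reached

flows = [
    (100, "desk-017", "file-srv"),
    (90, "desk-017", "printer"),   # before the compromise: ignored
    (150, "file-srv", "db-01"),
    (120, "hr-app", "db-01"),      # unrelated source: ignored
    (200, "db-01", "backup"),
]
print(trace_spread(flows, "desk-017", compromised_at=95))
# {'desk-017': 95, 'file-srv': 100, 'db-01': 150, 'backup': 200}
```

The result is a scope for remediation: every host in it should be examined, while hosts outside it were never contacted along a time-consistent path from the initial foothold.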

The SolarWinds breach is a harsh example of the insidious nature of a digital supply chain compromise. It’s also a reminder of the immeasurable importance of a comprehensive security strategy, robust security solution capabilities, and technology partners with the expertise and skills to help enterprises – including financial services institutions – and public institutions meet these challenges confidently.

Tuesday, 12 January 2021

Network Security and Containers – Same, but Different

Introduction

Network and security teams seem to have had a love-hate relationship with each other since the early days of IT. Having worked extensively and built expertise with both for the past few decades, we often notice that both have similar goals: both seek to provide connectivity and bring value to the business. At the same time, there are also certainly notable differences. Network teams tend to focus on building architectures that scale and provide universal connectivity, while security teams tend to focus more on limiting that connectivity to prevent unwanted access.

Often, these teams work together — sometimes on the same hardware — where network teams will configure connectivity (BGP/OSPF/STP/VLANs/VxLANs/etc.) while security teams configure access controls (ACLs/Dot1x/Snooping/etc.). Other times, we find that Security defines rules and hands them off to Networking to implement. Many times, in larger organizations, we find InfoSec also in the mix, defining somewhat abstract policy, handing that down to Security to render into rulesets that then either get implemented in routers, switches, and firewalls directly, or else again handed off to Networking to implement in those devices. These days Cloud teams play an increasingly large part in those roles, as well.

All in all, each team contributes important pieces to the larger puzzle, albeit while speaking slightly different languages. What’s key to organizational success is for these teams to come together, find and communicate using a common language and framework, and work to decrease the complexity surrounding security controls while increasing the level of security provided. Together, these steps minimize risk and add value to the business.

As container-based development continues to rapidly expand, both the roles of who provides security and where those security enforcement points live are quickly changing, as well.

The challenge

For the past few years, organizations have been significantly enhancing their security postures. They are moving from enforcing security only at the perimeter, in a North-to-South fashion, to enforcement throughout their internal data centers and clouds alike, in an East-to-West fashion. Granular control at the workload level is typically referred to as microsegmentation. This move toward distributed enforcement points has great advantages but also presents unique new challenges. These include where the enforcement points will be located, and how rulesets will be created, updated, and deprecated when necessary. All of this must happen with precise accuracy and at the same speed at which the business, and thus its developers, move.

At the same time, orchestration systems running container pods, such as Kubernetes (K8S), perpetuate that shift toward new security constructs through mechanisms such as the CNI, or Container Network Interface. CNI provides exactly what it sounds like: an interface through which networking is provided to a Kubernetes cluster. A plugin, if you will. There are many CNI plugins for K8S. Some are pure software overlays, such as Flannel (leveraging VxLAN) and Calico (leveraging BGP). Others tie the worker nodes running the containers directly into the hardware switches they are connected to, shifting the responsibility for connectivity back into dedicated hardware.

Regardless of which CNI is used, instantiating networking constructs shifts from the traditional CLI on a switch to a sort of structured text-code, in the form of YAML or JSON, which is sent to the Kubernetes cluster via its API server.
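The following Python sketch shows what that structured text looks like for a security construct. It builds a standard Kubernetes `NetworkPolicy` object (`networking.k8s.io/v1`) as a dict and serializes it to JSON. The policy allows only pods labeled `app: frontend-lb` to reach pods labeled `app: webapp` on TCP 80. The namespace, labels and policy name are hypothetical. The output could be saved to a file and submitted with `kubectl apply -f`, which accepts JSON as well as YAML. Enforcement depends on the CNI plugin: Calico enforces NetworkPolicy, whereas Flannel on its own does not.

```python
import json

def ingress_policy(name: str, namespace: str, target_app: str,
                   from_app: str, port: int) -> dict:
    """NetworkPolicy allowing only `from_app` pods to reach `target_app` pods on one TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Pods this policy applies to.
            "podSelector": {"matchLabels": {"app": target_app}},
            # Once selected, any ingress not listed below is denied.
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": from_app}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }

manifest = ingress_policy("webapp-allow-lb", "dev", "webapp", "frontend-lb", 80)
print(json.dumps(manifest, indent=2))
```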

With that groundwork laid, we can begin to see where things start to get interesting.

Scale and precision are key

As we can see, we are talking about having a firewall in between every single workload and ensuring that such firewalls are always up to date with the latest rules.

Say we have a relatively small operation with only 500 workloads, some of which have been migrated into containers with more planned migrations every day.

This means that in the traditional environment we would need 500 firewalls to deploy and maintain, minus the workloads already migrated to containers, for which we would also need a way to enforce the necessary rules. Now imagine that a new Active Directory server has just been added to the forest and serves LDAP. A slew of new rules must be added to nearly every single firewall, allowing the workload it protects to talk to the new AD server via a range of ports: TCP 389, 636, 88, etc. If the workload is Windows-based, it likely needs MS-RPC open as well, which means 49152-65535. If it is not a Windows box, it most certainly should not have those ports open.
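The following Python sketch shows how quickly the rule count grows in this scenario. It generates the per-workload rules needed for the new AD server, adding the MS-RPC range only for Windows hosts. The port list mirrors the partial list in the paragraph. The 300/200 Windows/non-Windows split and the host names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

AD_PORTS = [("tcp", "389"), ("tcp", "636"), ("tcp", "88")]  # LDAP, LDAPS, Kerberos (partial list)
MS_RPC = ("tcp", "49152-65535")                               # dynamic RPC range, Windows only

Rule = Tuple[str, str, str, str]  # (source, destination, protocol, port or range)

@dataclass
class Host:
    name: str
    is_windows: bool

def rules_for(host: Host, ad_server: str) -> List[Rule]:
    """Rules that must be pushed to this host's firewall so it can reach the new AD server."""
    rules = [(host.name, ad_server, proto, port) for proto, port in AD_PORTS]
    if host.is_windows:
        rules.append((host.name, ad_server, *MS_RPC))  # never opened on non-Windows hosts
    return rules

# Hypothetical estate: 500 workloads, 300 of them Windows.
estate = [Host(f"wl-{i:03d}", is_windows=(i < 300)) for i in range(500)]
all_rules = [r for h in estate for r in rules_for(h, "ad-new-01")]
print(len(all_rules))  # 1800 = 300 * 4 + 200 * 3
```

One infrastructure change produces 1,800 rule edits spread across hundreds of enforcement points. Each edit must also be correct for that host's operating system.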

Quickly noticeable is how physical firewalls become untenable at this scale in the traditional environments, and even how dedicated virtual firewalls still present the complex challenge of requiring centralized policy with distributed enforcement. Neither does much to aid in our need to secure East-to-West traffic within the Kubernetes cluster, between containers. However, one might accurately surmise that any solution business leaders are likely to consider must be able to handle all scenarios equally from a policy creation and management perspective.

Seemingly apparent is how this centralized policy must be hierarchical in nature, requiring definition using natural human language such as “dev cannot talk to prod” rather than the archaic and unmanageable method using IP/CIDR addressing like “deny ip 10.4.20.0/24 10.27.8.0/24”, and yet the system must still translate that natural language into machine-understandable CIDR addressing.
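The following Python sketch illustrates that translation step. A hypothetical inventory maps each label to the address ranges that currently carry it. A human-readable intent such as "dev cannot talk to prod" is then rendered into address-based lines in the same notation as the paragraph's example. The inventory and the second prod range are assumptions. The output mirrors the paragraph's notation rather than any specific device syntax; classic IOS ACLs, for instance, use wildcard masks instead of prefix lengths.

```python
import ipaddress
from typing import Dict, List

# Hypothetical inventory: which address ranges currently carry each label.
INVENTORY: Dict[str, List[str]] = {
    "dev": ["10.4.20.0/24"],
    "prod": ["10.27.8.0/24", "10.27.9.0/24"],
}

def render(src_label: str, dst_label: str, action: str = "deny") -> List[str]:
    """Translate 'src_label cannot talk to dst_label' into address-based rule lines."""
    lines = []
    for s in INVENTORY[src_label]:
        for d in INVENTORY[dst_label]:
            # ip_network validates each range and normalizes its notation.
            src, dst = ipaddress.ip_network(s), ipaddress.ip_network(d)
            lines.append(f"{action} ip {src.with_prefixlen} {dst.with_prefixlen}")
    return lines

for line in render("dev", "prod"):
    print(line)
# deny ip 10.4.20.0/24 10.27.8.0/24
# deny ip 10.4.20.0/24 10.27.9.0/24
```

The intent is stated once. When the inventory changes, the rule lines are re-rendered, and nobody has to edit CIDR-based rules by hand.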

The only way this works at any scale is to distribute those rules into every single workload running in every environment, leveraging the native and powerful built-in firewall co-located with each. For containers, this means the firewalls running on the worker nodes must secure traffic between containers (pods) within the node, as well as between nodes.

Business speed and agility

Back to our developers.

Businesses must move at the speed of market change, which can be dizzying at times. They must be able to write code, check that code into an SCM like Git, and have it pulled and automatically built, tested and, if it passes, pushed into production. If everything works properly, we’re talking about anywhere from five minutes to a few hours, depending on complexity.

Whether five minutes or five hours, I have personally never witnessed a corporate environment where a ticket to update security policies for new code requirements could be submitted with any hope of completion within a single day. And that is setting aside, for a moment, input accuracy and possible remediation of incorrect rule entries. It is usually a process of two days to two weeks.

This is absolutely unacceptable given the rapid development process we just described, not to mention the dissonance created by disaggregated people and systems. This method is rife with problems and is the reason security is so difficult, cumbersome, and error-prone within most organizations. As we shift to a more remote workforce, the problem is further compounded, because relevant parties cannot so easily congregate in “war rooms” to collaborate through the decision-making process.

The simple fact is that policy must accompany code and be implemented directly by the build process itself, and this has never been truer than with container-based development.
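One way to picture policy accompanying code is a build step that reads the ports a Kubernetes Deployment manifest already declares, under `spec.template.spec.containers[].ports[].containerPort`. The step would then pass those ports on as policy input. The following minimal Python sketch assumes the manifest has already been parsed into a dict, for example with PyYAML's `yaml.safe_load`. The manifest contents are hypothetical. How the result would be submitted to Secure Workload is not shown, since the source does not describe that interface.

```python
from typing import List, Tuple

def exposed_ports(deployment: dict) -> List[Tuple[str, int]]:
    """Collect (protocol, containerPort) pairs declared in a Deployment's pod template."""
    found = set()
    for container in deployment["spec"]["template"]["spec"].get("containers", []):
        for p in container.get("ports", []):
            # Kubernetes defaults a container port's protocol to TCP when omitted.
            found.add((p.get("protocol", "TCP"), p["containerPort"]))
    return sorted(found)

# Hypothetical manifest, as it would look after yaml.safe_load().
webapp = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "webapp"},
    "spec": {
        "selector": {"matchLabels": {"app": "webapp"}},
        "template": {
            "metadata": {"labels": {"app": "webapp"}},
            "spec": {"containers": [{"name": "web", "image": "example/webapp:1.0",
                                     "ports": [{"containerPort": 80}]}]},
        },
    },
}
print(exposed_ports(webapp))  # [('TCP', 80)]
```

Developers already write these ports down to make their application reachable. Reusing them in the build keeps the policy in step with every deployment instead of waiting on a separate ticket.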

Simplicity of automating policy

With Cisco Secure Workload (Tetration), automating policy is easier than you might imagine.

Think with me for a moment about how developers work today when deploying applications on Kubernetes. They create a deployment.yml file in which they are required to input, at a minimum, the L4 port on which containers can be reached. Developers have become familiar with the networking and security policy needed to provision connectivity for their applications. However, they may not be fully aware of how their application fits into the wider scope of an organization’s security posture and risk tolerance.

This is illustrated below with a simple example of deploying a frontend load balancer and a simple webapp that’s reachable on port 80 and will have some connections to both a production database (PROD_DB) and a dev database (DEV_DB). The sample policy for this deployment can be seen below in this `deploy-dev.yml` file: