◈ Infiltrate Nakatomi Plaza and get rid of the guards

◈ Get the vault password from Mr. Takagi

◈ Have your computer guy hack through the vault locks

◈ Have the FBI cut power to the building, which in turn disables the last lock

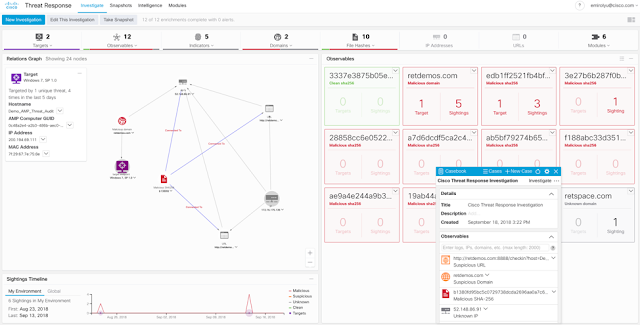

So, how does this relate to security? Layers. Lots and lots of layers. A layered approach to security means the bad guys must clear several hurdles to get to your “bearer bonds” (your data, user information, etc.). The more challenging it is for someone to gain access to your resources, the less likely they are to spend their own resources trying. That is not always the case, but if your security methodology stops a large percentage of malicious activity early, you can focus on the more sophisticated attempts. Former Cisco CEO John Chambers said, “There are two types of companies: those that have been hacked, and those who don’t know they have been hacked.” If you take this to heart, it will help you lay out the strategy you need to best protect your people, applications, and data.

There is no single way to set up these layers, and they are certainly not linear, since attacks can come from both inside and outside your network. Let’s take a look at some of the layers that could be considered foundational to any security plan.

Starting at the Front Door

From a technology point of view, this makes me think of the firewall. Granted, in many ways this is obvious: limit access to and from the Internet. This is a great place to start, as it is like the lock on the front door of your home. Today’s Next-Gen Firewalls can look at applications and provide deeper packet inspection and, ultimately, more granular control. As infrastructures change, we are now deploying firewall technology within segments of the network and even in the cloud.

Who? What? Where? When?

Nakatomi Plaza had locks on its doors, guards, and security cameras. Whether it’s physical or network security, managing access is critical. When we understand who is accessing the network (employee, contractor, guest, CEO), how they are accessing it (corporate laptop, phone, personal tablet), where they are accessing it from (HQ, branch office, VPN), and even the time of day, decisions can be made to allow or deny access. Taking it a step further, access control today with a solution like Identity Services Engine (ISE) can take all of this into consideration to allow or deny access to specific resources on the network. For example, if a user in the Engineering group is at HQ and trying to update a critical server using their corporate-issued laptop, the engineer may be able to do so. That same engineer, still at HQ but on a personal laptop or tablet, may be denied access. Managing access to resources is one of the most important and challenging areas of security.
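To make the who/what/where/when idea concrete, here is a minimal sketch of a default-deny access decision in Python. It is not how ISE is configured or implemented; the `AccessRequest` fields, the `ALLOWED` rule table, and the 08:00 to 18:00 business-hours window are all assumptions made up for illustration.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class AccessRequest:
    group: str      # who: e.g. "engineering", "guest"
    device: str     # what: e.g. "corporate_laptop", "personal_tablet"
    location: str   # where: e.g. "hq", "branch", "vpn"
    at: time        # when: local time of the request
    resource: str   # what they are trying to reach


# Hypothetical rule table: (group, device, location, resource) tuples that are
# explicitly allowed. Anything not listed is denied.
ALLOWED = {
    ("engineering", "corporate_laptop", "hq", "critical_server"),
}

# Assumed business-hours window; the time-of-day rule is illustrative only.
BUSINESS_HOURS = (time(8, 0), time(18, 0))


def is_allowed(req: AccessRequest) -> bool:
    """Return True only when every attribute of the request matches a rule."""
    start, end = BUSINESS_HOURS
    if not (start <= req.at <= end):
        return False
    return (req.group, req.device, req.location, req.resource) in ALLOWED
```

With these assumed rules, the engineer from the example above is allowed on the corporate laptop at 10:30 and denied when the only thing that changes is the device (a personal tablet). Starting from "deny" and listing what is permitted keeps a forgotten rule from turning into an open door.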

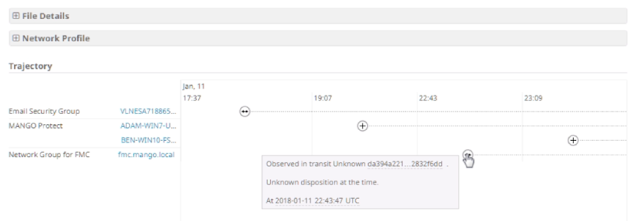

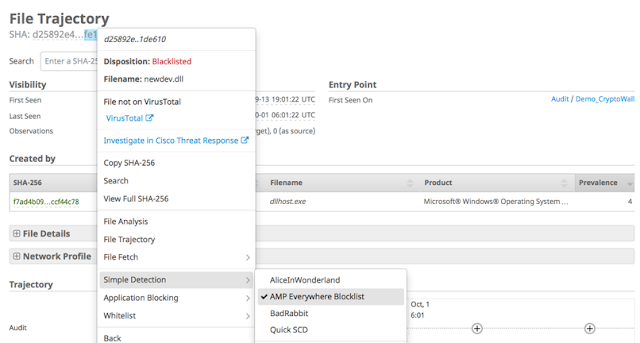

You’ve Got Mail

Email is still the number one threat vector when it comes to malware and breaches. The criminals are getting smarter, and when they send out a phishing attack, spam, or a malicious email, it looks completely legitimate and it’s challenging to know what is real and what is not. Email security solutions can pore through all incoming and outgoing mail. These tools can verify the sender and receiver. They can look at the content and attachments. Based on policies and information from resources such as Talos, compromised emails may never even make it to the recipient. As long as email continues to be a primary source of communication, it will continue to be a primary way to be breached.
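As a rough illustration of checking the sender and the attachments, the sketch below screens a message using only Python's standard `email` package. The `BLOCKED_SENDER_DOMAINS` set and `RISKY_EXTENSIONS` tuple are hypothetical stand-ins for real policy and threat intelligence; this is not how Talos or any commercial email gateway works.

```python
from email.message import EmailMessage
from email.utils import parseaddr

# Hypothetical policy data; a real product would pull this from threat intel.
BLOCKED_SENDER_DOMAINS = {"malicious.example"}
RISKY_EXTENSIONS = (".exe", ".js", ".scr")


def screen(msg: EmailMessage) -> list[str]:
    """Return the reasons a message should be quarantined (empty if none)."""
    reasons = []

    # Check the sender's domain against the blocklist.
    _, address = parseaddr(str(msg.get("From", "")))
    domain = address.rpartition("@")[2].lower()
    if domain in BLOCKED_SENDER_DOMAINS:
        reasons.append(f"blocked sender domain: {domain}")

    # Check every attachment's file name for a risky extension.
    for part in msg.iter_attachments():
        name = (part.get_filename() or "").lower()
        if name.endswith(RISKY_EXTENSIONS):
            reasons.append(f"risky attachment: {name}")

    return reasons
```

A message with no findings would be delivered; anything with one or more reasons could be held before it ever reaches the recipient. Real tools go much further, for example by authenticating the sender and inspecting links and message content.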

We’re Not in Kansas (or the office) Anymore

Almost 50% of the workforce is mobile today. People are working from homes, hotels, coffee shops, and planes. There is also the need to access data from anywhere at any time. Keeping that data secure is not the job of the cloud provider but of the owner of the data. Additionally, the users accessing that data from so many not-so-secure locations are of course using their VPNs every time, right? Wrong! In a recent survey, over 80% of those polled admitted to not using their VPN when connecting to public networks. So now the bad guys are through another layer of security. We need to protect cloud users, applications, and data. A CASB (Cloud Access Security Broker) is a technology that does just that. Cloudlock can detect compromised accounts, monitor and flag anomalies, and provide visibility into those cloud applications, users, and data.

As work becomes a thing we do and less of a place we go, the risk of attack gets higher. I said earlier that the number one threat vector is email. Within those emails, much of the malware is launched by clicking on a link. That means DNS is yet another avenue the bad guys can use. In fact, around 90% of malware is DNS-based. Umbrella provides not only better Internet access, but secure Internet access. Regardless of where you go, Umbrella can protect you.
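The general idea behind DNS-layer protection can be sketched in a few lines: before a name is resolved, check it and each of its parent domains against a list of known-bad domains. This is a simplified illustration, not Umbrella's implementation, and the `BLOCKED_DOMAINS` entries are made-up examples.

```python
# Hypothetical blocklist; real services maintain and update this continuously.
BLOCKED_DOMAINS = frozenset({"bad.example", "phish.test"})


def is_blocked(hostname: str, blocked: frozenset = BLOCKED_DOMAINS) -> bool:
    """Return True if the hostname or any parent domain is on the blocklist."""
    # Normalize case and drop a trailing root dot ("bad.example." -> "bad.example").
    labels = hostname.lower().rstrip(".").split(".")
    # Test "a.b.c", then "b.c", then "c" so subdomains of a bad domain also match.
    return any(".".join(labels[i:]) in blocked for i in range(len(labels)))
```

Because the check happens at lookup time, a click on a link to `login.bad.example` can be stopped before any connection is made, whether or not the user is on the VPN. Matching on whole labels also means `notbad.example` is not caught by the `bad.example` entry.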

Having all of these layers to protect your “bearer bonds” doesn’t guarantee that nothing bad will happen. The bad guys have a lot of resources and time to get what they want. This methodology will hopefully make it so difficult for them that they don’t even want to try. People, applications, and data. It’s a lot to protect and a lot to lose. If you do it correctly though, you get to be the hero at the end.