As Cisco’s SAP ambassador, I’m often asked, “Tell me about the Cisco and SAP partnership.” Many may not know, but in 2019 we celebrated twenty years of Cisco and SAP working strategically together—always with the objective of benefiting our mutual customers. Innovation has been an intense focus for the partnership, which is why, for example, Cisco became a founding sponsor of the SAP co-innovation lab in 2014.

Today, the Cisco and SAP partnership touches many business units at Cisco; what began with optimizing Cisco Data Center products to run SAP software has evolved to include other strategic areas such as Internet of Things (IoT), cloud computing, big data processing, AI/ML, and collaboration.

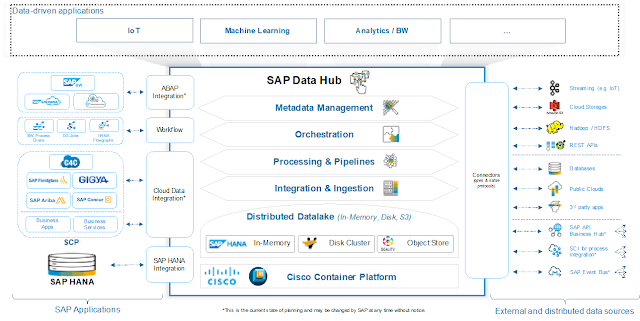

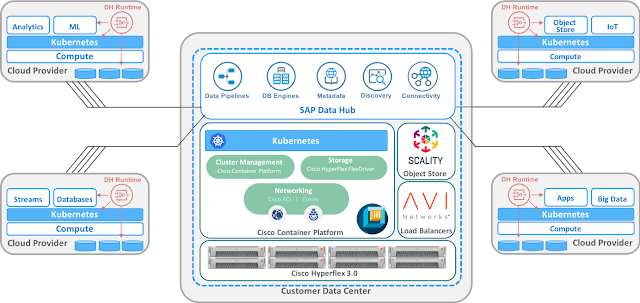

As an example of software co-innovation, Cisco Container Platform (CCP) is certified for the SAP Data Hub and includes support for use cases such as hybrid cloud big data processing. Many SAP Data Hub customers want to run in hybrid cloud environments to leverage cloud-based services, while also keeping some data on premises to meet security and governance requirements.

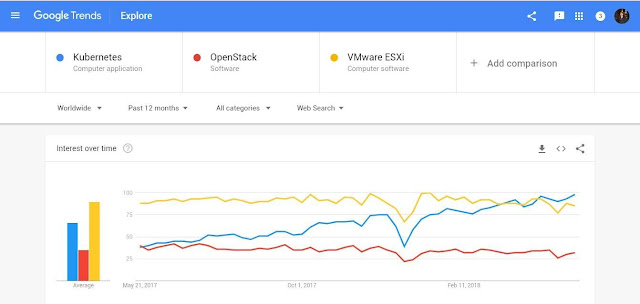

SAP Data Hub is SAP’s first micro services container-based application, and it enables users to orchestrate, aggregate, visualize, and generate insights from across their entire data landscape. SAP Data Hub runs anywhere Kubernetes runs.

Unfortunately, running Kubernetes on premises has its challenges. For instance, IT must answer questions about how to manage and support Kubernetes. In addition, it’s challenging to connect the private and public cloud environments and complicated to manage user access and authorizations across multiple environments.

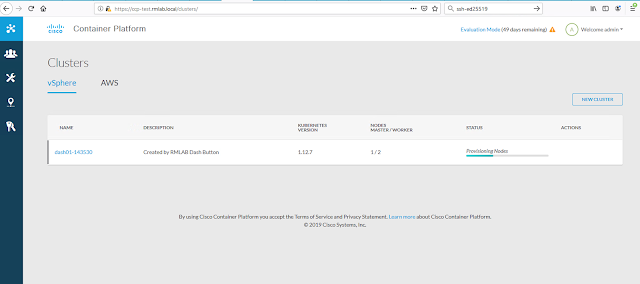

The integration of SAP Data Hub with CCP addresses these challenges. CCP is a production-ready Kubernetes container management platform based on 100 percent upstream Kubernetes and delivered with Cisco enterprise-class Technical Assistance Center (TAC) support. It reduces the complexity of configuring, deploying, securing, scaling, and managing containers via automation. CCP works across on-premises and public cloud environments.

The Cisco and SAP teams are working closely to bring the next iteration of SAP’s multicloud strategy for on-premises deployments—SAP Data Intelligence, which marries SAP Data Hub to AI/ML—to fruition.

Cisco has enhanced AppDynamics, its application performance monitoring product, to monitor SAP environments. This engineering effort includes giving AppDynamics code- level visibility into SAP ABAP, which is the primary programming language for SAP applications.

This new capability provides direct hooks that enable AppDynamics to measure the business process performance of SAP applications. And though SAP has its own monitoring solution, AppDynamics enables SAP customers to monitor their business processes across SAP and non-SAP solutions.

Monitoring is of special importance to SAP customers because their systems often consist of SAP and non-SAP components. For example, at a minimum, an online retail e-commerce system likely consists of a web server connected to an SAP ERP system, and slow checkout can potentially drive customers away. Unfortunately, it is time-consuming and difficult for engineering teams to diagnose where in the stack a performance issue is occurring.

Everyone is talking about IoT and digital transformation. However, a big challenge in deploying an IoT strategy is the need to put sensors everywhere, which represents a huge investment of capital, time and resources.

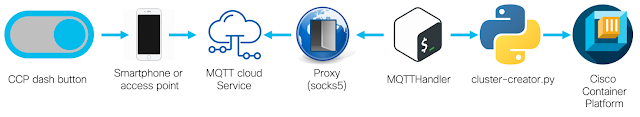

As a leading network provider, Cisco can help customers meet this challenge, because, in many cases, a wireless network is already in place. A wireless access point not only acts as a transmission device, but it can also sense things if enabled with Cisco DNA Spaces. For instance, an access point can track how many mobile phones are connected, for how long, and where they are located at all points in time. By combining geo-location information with enterprise data, businesses get closer to achieving the IoT promise of utilizing data from things to ultimately make better decisions.

Consider this scenario: the owner/operator of a shopping mall wants to know not only quantity of traffic but also where visitors to the mall go. By combining this data with SAP ERP data such as lease fees and analyzing it, the owner/operator can decide upon fair lease prices for shops located in lower- versus higher-traffic areas.

Through Cisco and SAP co-engineering, the rich on-location people and things data provided by Cisco DNA Spaces is now integrated with SAP software, enabling our mutual customers to gain additional insights into what’s happening in their businesses.

Finally, Cisco UCS-based converged infrastructure solutions—which were launched over a decade ago—are at the heart of the infrastructure running many SAP workloads today. These solutions blend secure connectivity, programmable computing, multicloud orchestration, and cloud-based management with operational analytics for our customers’ SAP data centers.

We continue to innovate around these data center solutions to support evolving use cases such as providing support for machine learning applications. Cisco Data Center solutions, for example, have now integrated NVIDIA GPUs and are certified to support Intel® Optane, which enables persistent memory, larger memory pools, faster caching, and faster storage.

As Cisco’s SAP ambassador, I’ve seen over and over again how Cisco and SAP’s portfolios complement each other. For example, a key SAP mission is to help its customers become intelligent enterprises, which requires robust connectivity at all customer touchpoints. This mission, of course, meshes with Cisco’s core competency as the world’s leading network provider.

As we continue to innovate, Cisco and SAP will continue our laser focus on co-engineering innovations that deliver the value our mutual customers require in their evolving business environments.

SAP Data Hub on Cisco Container Platform

As an example of software co-innovation, Cisco Container Platform (CCP) is certified for the SAP Data Hub and includes support for use cases such as hybrid cloud big data processing. Many SAP Data Hub customers want to run in hybrid cloud environments to leverage cloud-based services, while also keeping some data on premises to meet security and governance requirements.

SAP Data Hub is SAP’s first containerized, microservices-based application, and it enables users to orchestrate, aggregate, visualize, and generate insights from across their entire data landscape. SAP Data Hub runs anywhere Kubernetes runs.

Unfortunately, running Kubernetes on premises has its challenges. For instance, IT must answer questions about how to manage and support Kubernetes. In addition, it’s challenging to connect the private and public cloud environments and complicated to manage user access and authorizations across multiple environments.

The integration of SAP Data Hub with CCP addresses these challenges. CCP is a production-ready Kubernetes container management platform based on 100 percent upstream Kubernetes and delivered with Cisco enterprise-class Technical Assistance Center (TAC) support. It reduces the complexity of configuring, deploying, securing, scaling, and managing containers via automation. CCP works across on-premises and public cloud environments.

The Cisco and SAP teams are working closely to bring the next iteration of SAP’s multicloud strategy for on-premises deployments—SAP Data Intelligence, which marries SAP Data Hub to AI/ML—to fruition.

AppDynamics monitors SAP environments

Cisco has enhanced AppDynamics, its application performance monitoring product, to monitor SAP environments. This engineering effort includes giving AppDynamics code-level visibility into SAP ABAP, which is the primary programming language for SAP applications.

This new capability provides direct hooks that enable AppDynamics to measure the business process performance of SAP applications. And though SAP has its own monitoring solution, AppDynamics enables SAP customers to monitor their business processes across SAP and non-SAP solutions.

Monitoring is of special importance to SAP customers because their systems often consist of SAP and non-SAP components. For example, at a minimum, an online retail e-commerce system likely consists of a web server connected to an SAP ERP system, and slow checkout can potentially drive customers away. Unfortunately, it is time-consuming and difficult for engineering teams to diagnose where in the stack a performance issue is occurring.
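
To make the diagnosis problem concrete, here is a minimal sketch of a per-tier latency breakdown for a single checkout request. It does not use the AppDynamics API; the function name `slowest_tier`, the tier names, and the timings are all hypothetical and chosen only for illustration.

```python
def slowest_tier(timings_ms):
    """Return (tier, share of total latency) for the slowest tier.

    timings_ms maps a tier name to the time, in milliseconds, that one
    request spent in that tier. All values here are made-up sample data.
    """
    total = sum(timings_ms.values())
    if total <= 0:
        raise ValueError("timings must add up to a positive duration")
    tier = max(timings_ms, key=timings_ms.get)
    return tier, timings_ms[tier] / total


# Hypothetical timings for one checkout request across three tiers.
checkout_ms = {"web server": 120, "middleware": 80, "SAP ERP": 600}

tier, share = slowest_tier(checkout_ms)
print(f"{tier}: {share:.0%} of checkout time")
```

In practice the hard part is collecting comparable timings from both SAP and non-SAP components, which is the gap a monitoring product that spans both is meant to close.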

Cisco DNA Spaces

Everyone is talking about IoT and digital transformation. However, a big challenge in deploying an IoT strategy is the need to put sensors everywhere, which represents a huge investment of capital, time, and resources.

As a leading network provider, Cisco can help customers meet this challenge, because, in many cases, a wireless network is already in place. A wireless access point not only acts as a transmission device, but it can also sense things if enabled with Cisco DNA Spaces. For instance, an access point can track how many mobile phones are connected, for how long, and where they are located at all points in time. By combining geo-location information with enterprise data, businesses get closer to achieving the IoT promise of utilizing data from things to ultimately make better decisions.

Consider this scenario: the owner/operator of a shopping mall wants to know not only the quantity of traffic but also where visitors to the mall go. By combining this data with SAP ERP data such as lease fees and analyzing it, the owner/operator can decide upon fair lease prices for shops located in lower- versus higher-traffic areas.
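
The shopping mall scenario can be sketched in a few lines, assuming the location data has already been aggregated into monthly visitor counts per zone and the lease fees have been exported from the ERP system. The `Shop` class, the zone names, and every number below are hypothetical; this is not the Cisco DNA Spaces or SAP API.

```python
from dataclasses import dataclass


@dataclass
class Shop:
    name: str
    zone: str             # mall zone the shop is located in
    monthly_lease: float  # hypothetical lease fee taken from ERP data


def lease_per_thousand_visitors(shops, visitors_by_zone):
    """Return {shop name: monthly lease fee per 1,000 visitors in its zone}.

    Shops in zones with no recorded visitors map to None.
    """
    rates = {}
    for shop in shops:
        visitors = visitors_by_zone.get(shop.zone, 0)
        if visitors == 0:
            rates[shop.name] = None
        else:
            rates[shop.name] = round(shop.monthly_lease * 1000 / visitors, 2)
    return rates


# Made-up sample data: one shop in a busy zone, one in a quiet zone.
shops = [
    Shop("Bookstore", "atrium", 12000.0),
    Shop("Tailor", "east-wing", 6000.0),
]
visitors_by_zone = {"atrium": 60000, "east-wing": 15000}

rates = lease_per_thousand_visitors(shops, visitors_by_zone)
for name, rate in rates.items():
    print(name, rate)
```

With this sample data, the shop in the quieter zone pays twice as much per thousand visitors as the shop in the busy zone, which is the kind of imbalance the owner/operator might want to correct.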

Through Cisco and SAP co-engineering, the rich on-location people and things data provided by Cisco DNA Spaces is now integrated with SAP software, enabling our mutual customers to gain additional insights into what’s happening in their businesses.

Cisco Data Center solutions for SAP

Finally, Cisco UCS-based converged infrastructure solutions—which were launched over a decade ago—are at the heart of the infrastructure running many SAP workloads today. These solutions blend secure connectivity, programmable computing, multicloud orchestration, and cloud-based management with operational analytics for our customers’ SAP data centers.

We continue to innovate around these data center solutions to support evolving use cases such as machine learning applications. Cisco Data Center solutions, for example, have now integrated NVIDIA GPUs and are certified to support Intel® Optane, which enables persistent memory, larger memory pools, faster caching, and faster storage.

The next twenty years …

As Cisco’s SAP ambassador, I’ve seen over and over again how Cisco and SAP’s portfolios complement each other. For example, a key SAP mission is to help its customers become intelligent enterprises, which requires robust connectivity at all customer touchpoints. This mission, of course, meshes with Cisco’s core competency as the world’s leading network provider.

As we continue to innovate, Cisco and SAP will keep our laser focus on co-engineering innovations that deliver the value our mutual customers require in their evolving business environments.