Thursday, 30 November 2023

Making Your First Terraform File Doesn’t Have to Be Scary

Saturday, 25 November 2023

10 Useful Tips to Ace Cisco 300-415 ENSDWI Exam

Decoding 300-415 ENSDWI Exam

The 300-415 ENSDWI exam delves into Implementing Cisco SD-WAN Solutions, evaluating candidates' proficiency in orchestrating WAN edge routers to connect to the SD-WAN fabric. This exam is not merely a test of theoretical knowledge but a practical assessment of your ability to deploy, manage, and troubleshoot SD-WAN solutions in real-world scenarios.

Before delving into preparation tips, it's crucial to understand the terrain you'll be navigating. The 300-415 ENSDWI exam typically consists of 55-65 questions; candidates are allotted 90 minutes for completion. The format encompasses a variety of question types, including multiple-choice, drag-and-drop, and simulation-based scenarios.
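
As a rough pacing aid, the figures above translate into a per-question time budget. The sketch below uses only the question range and time limit quoted above; the function name is illustrative, and the result is an average, not a recommendation for how to split time between question types.

```python
def seconds_per_question(total_minutes: int, questions: int) -> float:
    """Average time available per question, in seconds."""
    if questions <= 0:
        raise ValueError("questions must be positive")
    return total_minutes * 60 / questions


# Lower and upper ends of the quoted question range, 90-minute limit.
for count in (55, 65):
    print(f"{count} questions: {seconds_per_question(90, count):.0f} s each")
```

Simulation-style items usually take longer than multiple-choice ones, so treat the average as a ceiling for the quicker questions.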

Cisco 300-415 ENSDWI Exam Objectives

Ten Expert Tips for 300-415 ENSDWI Preparation

1. Create a Study Plan

Building a structured study plan is the foundation of successful exam preparation. Allocate dedicated time daily to cover specific topics, ensuring a balanced approach to all exam objectives. Consistency is key, and a well-organized study plan will help you stay on track.

2. Hands-On Practice

Theory is vital, but practical application is paramount. Set up a virtual lab environment to experiment with SD-WAN configurations. The hands-on experience will reinforce theoretical concepts and sharpen your troubleshooting skills, which are a crucial aspect of the exam.

3. Utilize Official Cisco Resources

Cisco provides many official resources, including documentation, whitepapers, and video tutorials. These materials offer insights into SD-WAN technologies and Cisco's expectations for exam candidates. Leverage these resources to complement your study materials.

4. Join Online Communities

Engage with fellow candidates and networking professionals in online forums and communities. Discussing concepts, sharing experiences, and seeking clarification on doubts can provide a fresh perspective and fill gaps in your understanding. The collective wisdom of the community is a valuable asset.

5. Use Cisco 300-415 ENSDWI Practice Tests

Take Cisco 300-415 ENSDWI practice tests to familiarize yourself with the time constraints and pressure. Timed practice exams assess your knowledge and train you to manage time effectively during the test. This step is crucial for building confidence and reducing exam-day anxiety.

6. Focus on Weak Cisco 300-415 ENSDWI Syllabus Topics

Regularly evaluate your progress and identify weak areas. Allocate additional time to reinforce your understanding of these topics. Whether it's troubleshooting, security configurations, or SD-WAN policies, addressing weaknesses proactively will contribute to a more well-rounded preparation.

7. Stay Updated with Industry Trends

The world of networking is dynamic, with technologies evolving rapidly. Stay abreast of industry trends, especially those related to SD-WAN. Familiarizing yourself with the latest developments ensures that your knowledge is not only exam-centric but also reflective of real-world scenarios.

8. Explore Third-Party Study Materials

While official Cisco resources are indispensable, exploring third-party study materials can offer diverse perspectives. Books, practice exams, and online courses from reputable sources can provide alternative explanations and additional context, enriching your understanding.

9. Teach to Learn

The act of teaching reinforces your understanding. Collaborate with study partners or create study guides for specific topics. Explaining concepts in your own words solidifies your knowledge and highlights areas in which you need further clarification.

10. Review and Revise Cisco 300-415 ENSDWI Exam Topics

Continuous revision is the key to retention. Periodically revisit previously covered topics to reinforce your memory. The spaced repetition technique, where you review information at increasing intervals, is particularly effective in ingraining knowledge for the long term.
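
The idea of reviewing at increasing intervals can be sketched as a simple schedule generator. This is a minimal illustration, not a prescribed study schedule: the one-day starting gap, the doubling factor, and the function name are all assumptions chosen for the example.

```python
from datetime import date, timedelta


def review_dates(start: date, reviews: int = 5,
                 first_gap_days: int = 1, factor: int = 2) -> list[date]:
    """Return review dates whose gaps grow by `factor` after each review."""
    dates = []
    gap = first_gap_days
    current = start
    for _ in range(reviews):
        current = current + timedelta(days=gap)
        dates.append(current)
        gap *= factor  # each gap is longer than the previous one
    return dates


# Example: a topic first studied on 25 November 2023.
for day in review_dates(date(2023, 11, 25)):
    print(day.isoformat())
```

With these assumed defaults the gaps are 1, 2, 4, 8, and 16 days; shorten the factor for topics you find harder to retain.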

Beyond 300-415 Exam: The CCNP Enterprise Certification

Passing the 300-415 ENSDWI exam is a significant accomplishment, but it's just one piece of the puzzle. Combining it with the 350-401 ENCOR (Implementing and Operating Cisco Enterprise Network Core Technologies) exam earns the coveted CCNP Enterprise certification.

1: Career Paths with CCNP Enterprise

The CCNP Enterprise certification opens doors to a myriad of career opportunities. You become a sought-after professional capable of designing and implementing complex enterprise network solutions. Roles such as Network Engineer, Systems Engineer, and Network Administrator are within reach.

2: Industry Recognition

CCNP Enterprise certification is globally recognized and respected. It serves as a testament to your expertise in networking technologies and positions you as a qualified professional in the eyes of employers worldwide. This recognition can be a game-changer in job interviews and salary negotiations.

3: Salary Advancement

With CCNP Enterprise certification, you're not just acquiring knowledge but investing in your earning potential. Certified professionals often command higher salaries than their non-certified counterparts. The certification becomes a tangible asset, showcasing your dedication to continuous learning and mastery of your craft.

Conclusion

The journey to mastering the 300-415 ENSDWI exam is undoubtedly challenging, but the rewards are commensurate with the effort invested. As you delve into the intricacies of SD-WAN solutions, remember that each concept mastered is a step closer to unlocking a world of career possibilities. The CCNP Enterprise certification, born from the synergy of 300-415 ENSDWI and 350-401 ENCOR, is your passport to a future where your expertise is valued and indispensable in the ever-evolving networking landscape. So, gear up, embrace the challenge, and set forth on the path to CCNP Enterprise mastery. Success awaits those who dare to venture into the realm of possibilities that certification unlocks.

Thursday, 23 November 2023

Secure Multicloud Infrastructure with Cisco Multicloud Defense

It’s a multicloud world!

Market Trend

- Security: Provides a full suite of security capabilities for workload protection

- Cloud: Integrates with cloud constructs, enabling auto-scale and agility

- Networking: Seamlessly and accurately inserts scalable security across clouds without manual intervention

- Keep policies up to date in near-real time as your environment changes.

- Connect continuous visibility and control to discover new cloud assets and changes, associate tag-based business context, and automatically apply the appropriate policy to ensure security compliance.

- Power and protect your cloud infrastructure with security that runs in the background via automation, getting out of the way of your cloud teams.

- Mitigate security gaps and ensure your organization stays secure and resilient.

Cisco Multicloud Defense Overview

- Multicloud Defense Controller (Software-as-a-Service): The Multicloud Defense Controller is a highly reliable and scalable centralized controller (control plane) that automates, orchestrates, and secures multicloud infrastructure. It runs as a Software-as-a-Service (SaaS) and is fully managed by Cisco. Customers can access a web portal to utilize the Multicloud Defense Controller, or they may choose to use Terraform to instantiate security into the DevOps/DevSecOps processes.

- Multicloud Defense Gateway (Platform-as-a-Service): The Multicloud Defense Gateway is an auto-scaling fleet of security software with a patented flexible, single-pass pipelined architecture. These gateways are deployed as Platform-as-a-Service (PaaS) into the customer’s public cloud account(s) by the Multicloud Defense Controller, providing advanced, inline security protections to defend against external attacks, block egress data exfiltration, and prevent the lateral movement of attacks.

Multicloud Defense Gateways

- High throughput and low latency

- Reverse proxy, forward proxy, and forwarding mode

- Flexibility in selecting relevant advanced network security inspection engines, including TLS decryption and re-encryption, WAF (HTTPS and WebSockets), IDS/IPS, antivirus/anti-malware, FQDN and URL filtering, and DLP

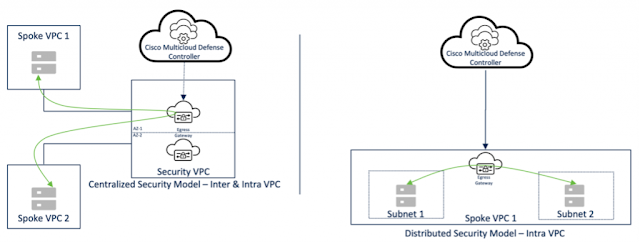

Security Models

- For scalability, autoscaling is supported.

- For resiliency, auto-scaled instances are deployed across multiple availability zones.

Use-cases

- In the centralized security model, traffic is inspected by gateways deployed in the security VPC/VNet/VCN.

- Gateways are auto-scaling and multi-AZ aware.

- In the distributed security model, traffic is inspected by gateways deployed in the application VPC/VNet/VCN.

- In the centralized security model, intra and inter-VPC/VNet/VCN traffic is inspected by gateways deployed in the security VPC/VNet/VCN.

- In the distributed security model, intra-VPC/VNet/VCN traffic is inspected by gateways deployed in the application VPC/VNet/VCN.

- URL filtering requires TLS decryption on the gateway.

- FQDN-based filtering can be enforced on encrypted traffic flows.
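
One way to see why the last two points differ: in an HTTPS request, the server name is commonly exposed in the cleartext part of the TLS handshake (the SNI field), while the path and query string travel inside the encrypted stream. The sketch below simply splits a URL along that line. It assumes SNI is the signal being matched and that Encrypted Client Hello is not in use; the source does not describe how the gateway implements FQDN filtering, and the function name and dictionary keys are illustrative.

```python
from urllib.parse import urlsplit


def visibility(url: str) -> dict:
    """Split a URL into the part an inline device can typically see
    without decrypting TLS and the part that requires decryption."""
    parts = urlsplit(url)
    encrypted = parts.path
    if parts.query:
        encrypted += "?" + parts.query
    return {
        # Hostname: usually readable from the TLS handshake (assumption: SNI).
        "visible_without_decryption": parts.hostname,
        # Path and query: carried inside the encrypted HTTP request.
        "needs_tls_decryption": encrypted,
    }


print(visibility("https://example.com/admin/export?fmt=csv"))
```

A rule keyed on `example.com` can therefore be matched on an encrypted flow, whereas a rule keyed on `/admin/export` cannot be evaluated until the traffic is decrypted.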

Tuesday, 21 November 2023

Cisco DNA Center Has a New Name and New Features

Version selection menu

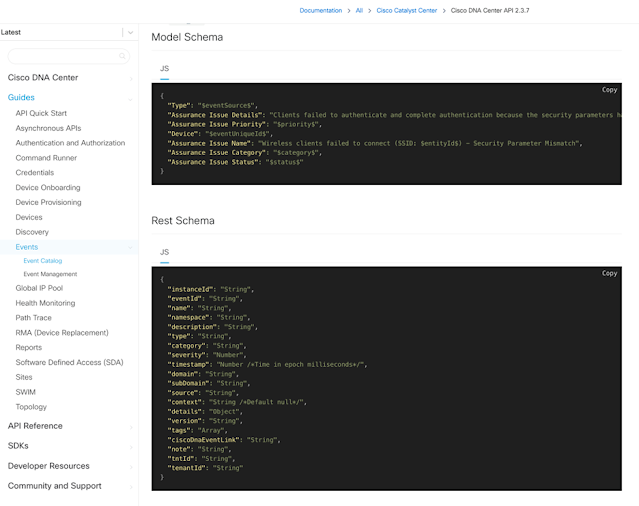

Event catalog

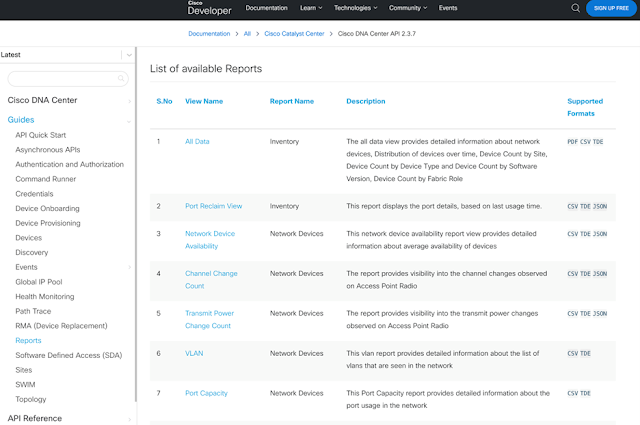

List of available reports

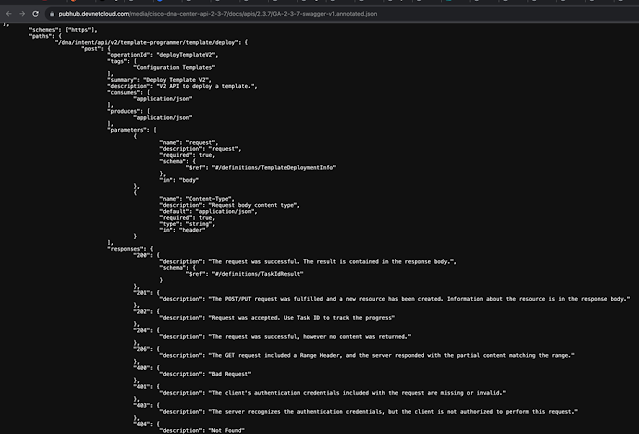

OpenAPI specification in JSON format

Net Promoter Score

Your feedback is most welcome

Saturday, 18 November 2023

The Power of LTE 450 for Critical Infrastructure

- A reduced number of base stations need to be kept up and running; it’s easier to manage the network.

- It’s easier to reach rural areas due to the extended coverage.

- Backup battery power can be used to continue to connect critical devices in the event of a power failure.

- a private wireless network to connect thousands of SCADA systems used to control and monitor substations and other renewable energy assets;

- a public network to serve a broad range of power utilities, including water, gas, heat distribution networks and smart power grids.

Cisco solution for critical networks

Thursday, 16 November 2023

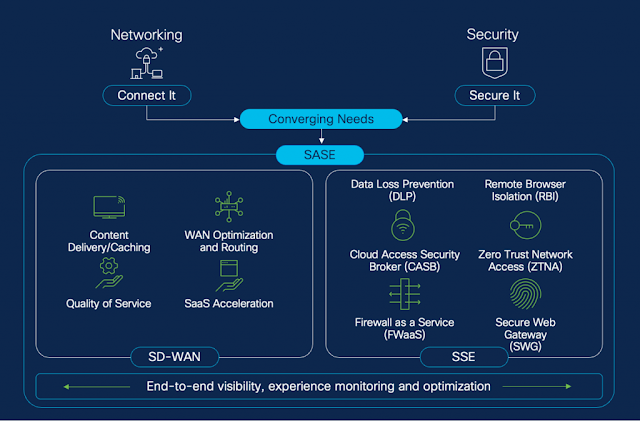

ESG Survey results reinforce the multi-faceted benefits of SSE

- Remote and hybrid workers were found to be the biggest source of cyberattacks, accounting for 44% of them.

- Organizations are moving towards cloud-delivered security, as 75% indicated a preference for cloud-delivered cybersecurity products vs. on-premises security tools.

- SSE is delivering value, with over 70% of respondents stating they achieved at least 10 key benefits involving operational simplicity, improved security, and better user experience.

- SecOps teams report significantly fewer attacks, with 56% stating they observed a reduction of more than 20% in security incidents using SSE.

Overcome cybersecurity complexity

Enhance end-user experience

Solve hybrid work security challenges

Benefits derived through SSE

- Simplified security operations/increased efficiency with ease of configuration and management

- Improved security specifically for remote/hybrid workforce

- Enacting principles of least privilege by allowing remote access only to approved resources

- Superior end-user access experience

- Prevention of malware spread from remote users to corporate resources

- Increased visibility into remote device posture assessment

Cisco leads the way in SSE

- Authorized users can access any app, including non-standard or custom, regardless of the underlying protocols involved.

- Security teams can employ a safer, layered approach to security, with multiple techniques to ensure granular access control.

- Confidential resources remain hidden from public view with discovery and lateral movement prevented.

- Performance is optimized through the next-generation protocols MASQUE and QUIC to realize HTTP/3 speeds.

- Administrators can quickly deploy and manage with a unified console, single agent and one policy engine.

- Compliance is maintained via continuous in-depth user authentication and posture checks.