Cisco’s Computer Security Incident Response Team (CSIRT) detected a large and ongoing malspam campaign leveraging the .IMG file extension to bypass automated malware analysis tools and infect machines with a variety of Remote Access Trojans. During our investigation, we observed multiple tactics, techniques, and procedures (TTPs) that defenders can monitor for in their environments. Our incident response and security monitoring team’s analysis of a suspicious phishing attack also led to some helpful improvements in our detection capabilities and timing.

In this case, none of our intelligence sources had identified this particular campaign yet. Instead, we detected this attack with one of our more exploratory plays looking for evidence of persistence in the Windows Autoruns data. This play detected an attack against a handful of endpoints that used email as the initial access vector and evaded our defenses at the time. Less than a week after the incident, once our integrated threat intelligence sources delivered the indicators of compromise (IOCs), we received alerts from our retrospective plays for this same campaign. This blog is a high-level write-up of how we adapted to a potentially successful attack campaign, along with our tactical analysis to help prevent and detect future campaigns.

Incident Response Techniques and Strategy

The Cisco Computer Security and Incident Response Team (CSIRT) monitors Cisco for threats and attacks against our systems, networks, and data. The team provides around-the-globe threat detection, incident response, and security investigations. Staying relevant as an IR team means continuously developing and adapting the best ways to defend the network, data, and infrastructure. We’re constantly experimenting with how to improve the efficiency of our data-centric playbook approach in the hope that it will free up more time for threat hunting and more in-depth analysis and investigations. As we discover new methods for detecting risky activity, we try to codify those methods and techniques into our incident response monitoring playbook to keep an eye on any potential future attacks.

Although some malware campaigns can slip past the defenses with updated techniques, we preventatively block the well-known or historical indicators and leverage broad, exploratory analysis playbooks that focus more on how attackers operate and infiltrate. In other words, there is value in monitoring for the basic atomic indicators of compromise, like IP addresses, domain names, and file hashes, but to go further you have to look broadly at more generic attack techniques. These playbooks, or plays, help us find out about new attack campaigns that are possibly targeted and potentially more serious. While some might label this activity “threat hunting”, this data exploration process allows us to discover, track, and potentially share new indicators that get exposed during a deeper analysis.

Defense in depth demands that we utilize additional data sources in case attackers successfully evade one or more of our defenses, or if they were able to obscure their malicious activities enough to avoid detection. Recently we discovered a malicious spam campaign that almost succeeded due to a missed early detection. In one of our exploratory plays, we use daily diffs for all the Microsoft Windows registry autorun key changes since the last boot. Known as “Autoruns”, this data source ultimately helped us discover an ongoing attack that was attempting to deliver a remote access trojan (RAT). Along with the more mundane Windows event logs, we pieced together the attack from the moment it arrived and made some interesting discoveries on the way, most notably how the malware seemingly slipped past our front-line filters. Not only did we uncover many technical details about the campaign, but we also used it as an opportunity to refine our incident response detection techniques and some of our monitoring processes.

IMG File Format Analysis

The .IMG extension is traditionally used for disk image files that store raw dumps of either a magnetic disk or an optical disc. Other disk image file formats include ISO and BIN. Previously, mounting disk image files on Windows required the user to install third-party software. However, Windows 8 and later automatically mount IMG files on open. Upon mounting, Windows File Explorer displays the data inside the .IMG file to the end user. Although disk image files are traditionally used for storing raw binary data, or bit-by-bit copies of a disk, any data could be stored inside them. Because of this newly added functionality in the Windows core operating system, attackers are abusing disk image formats to “smuggle” data past antivirus engines, network perimeter defenses, and other automated mitigation tooling. Attackers have also used the same capability to hide malicious second-stage files within a filesystem by using ISO and, to a lesser extent, DMG images. Perhaps the IMG extension also fools victims into considering the attachment an image instead of a binary Pandora’s box.
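Because the extension alone says little about the content, a filter can also inspect the bytes. The following Python sketch is only an illustration under stated assumptions, not the detection we run: it assumes the image uses the ISO 9660 layout, where the identifier `CD001` sits at byte offset 32769, and the function name and extension set are hypothetical. Images using other layouts (for example raw FAT) would need additional checks.

```python
import os

# Extensions treated as disk images; an illustrative set, not an exhaustive one.
DISK_IMAGE_EXTENSIONS = {".img", ".iso", ".bin", ".dmg"}

# ISO 9660 places its volume descriptors at sector 16 (2048-byte sectors);
# the standard identifier "CD001" follows the one-byte descriptor type.
ISO9660_MAGIC_OFFSET = 16 * 2048 + 1
ISO9660_MAGIC = b"CD001"


def looks_like_disk_image(filename: str, data: bytes) -> bool:
    """Return True if the file name or the content suggests a disk image."""
    extension = os.path.splitext(filename)[1].lower()
    end = ISO9660_MAGIC_OFFSET + len(ISO9660_MAGIC)
    has_iso_magic = data[ISO9660_MAGIC_OFFSET:end] == ISO9660_MAGIC
    return extension in DISK_IMAGE_EXTENSIONS or has_iso_magic
```

Checking both the name and the content means a renamed image (say, one carrying a .pdf extension) is still flagged, at the cost of reading the first 33 KB or so of each attachment.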

Know Where You’re Coming From

As phishing continues to grow in popularity as an attack vector, we have recently focused several of our email incident response plays on detecting malicious attachments, business email compromise techniques like header tampering or DNS typosquatting, and preventative controls with inline malware prevention and malicious URL rewriting.

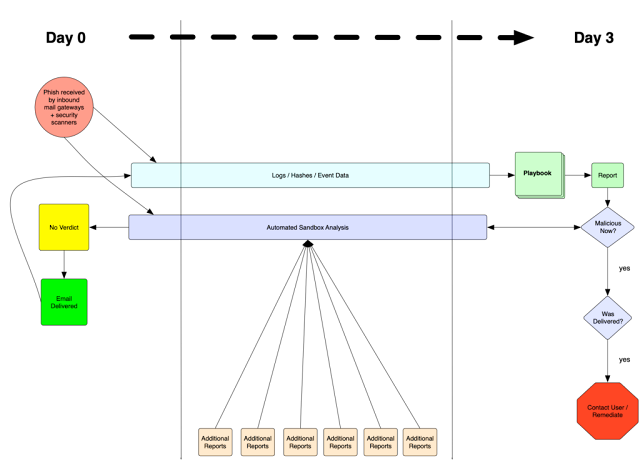

Any security tool that has even temporarily outdated definitions of threats or IOCs will be unable to detect a very recent event or an event with a recent, and therefore unknown, indicator. To ensure that these missed detections are not overlooked, we look back retrospectively to see if any newly observed indicators are present in any previously delivered email. So when a malicious attachment is delivered to a mailbox and the email scanners and sandboxes do not catch it the first time, our retrospective plays check whether the updated indicators match it. Over time, sandboxes update their detection abilities, and previously “clean” files can change status. The goal is to detect this changing status and, if we have any exposure, to reach out and remediate the host.

This process flow shows our method for detecting and responding to updated verdicts from sandbox scanners. During this process we collect logs throughout to ensure we can match against hashes or any other indicator or metadata we collect:

Figure 1: Flow chart for Retrospective alerting
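The core of the retrospective check can be sketched in a few lines of Python. This is a minimal illustration, not the production play: the `DeliveredAttachment` record and the `retrospective_hits` function are hypothetical names, and the sketch assumes the SHA-256 hash of every attachment was logged at delivery time.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeliveredAttachment:
    """One attachment that reached a mailbox (hypothetical log record)."""
    message_id: str
    recipient: str
    sha256: str


def retrospective_hits(delivered, new_iocs):
    """Return delivered attachments whose hash matches a newly received IOC."""
    # Normalize case so feeds that publish upper-case hashes still match.
    ioc_set = {h.lower() for h in new_iocs}
    return [item for item in delivered if item.sha256.lower() in ioc_set]
```

Running this each time the indicator feed updates, over a rolling window of delivery logs, yields the list of recipients who need follow-up.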

This process in combination with several other threat hunting style plays helped lead us to this particular campaign. The IMG file isn’t unique by any means but was rare and stood out to our analysts immediately when combined with the file name as a fake delivery invoice – one of the more tantalizing and effective types of phishing lures.

Incident Response and Analysis

We needed to pull apart as many of the malicious components as possible to understand how this campaign worked and how it might have temporarily slipped past our defenses. The process tree below shows how the executable file dropped from the original IMG file attachment after mounting led to a Nanocore installation:

Figure 2: Visualization of the malicious process tree.

Autoruns

As part of our daily incident response playbook operations, we recently detected a suspicious Autoruns event on an endpoint. This log (Figure 3) indicated that an unsigned binary with multiple detections on the malware analysis site VirusTotal had established persistence using the ‘Run’ registry key. Any time the user logged in, the binary referenced in the “Run” key would automatically execute. In this case the binary called itself “filename.exe” and was dropped in the user’s “%USERPROFILE%\AppData\Roaming” directory (%APPDATA%):

{

"enabled": "enabled",

"entry": "startupname",

"entryLocation": "HKCU\\SOFTWARE\\Microsoft\\Windows\\CurrentVersion\\Run",

"file_size": "491008",

"hostname": "[REDACTED]",

"imagePath": "c:\\users\\[REDACTED]\\appdata\\roaming\\filename.exe",

"launchString": "C:\\Users\\[REDACTED]\\AppData\\Roaming\\filename.exe",

"md5": "667D890D3C84585E0DFE61FF02F5E83D",

"peTime": "5/13/2019 12:48 PM",

"sha256": "42CCA17BC868ADB03668AADA7CF54B128E44A596E910CFF8C13083269AE61FF1",

"signer": "",

"vt_link": "https://www.virustotal.com/file/42cca17bc868adb03668aada7cf54b128e44a596e910cff8c13083269ae61ff1/analysis/1561620694/",

"vt_ratio": "46/73",

"sourcetype": "autoruns"

}

Figure 3: Snippet of the event showing an unknown file attempting to persist on the victim host
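To illustrate how an event like this might be triaged automatically, here is a minimal Python sketch. It is an assumption-laden example rather than our actual play: the function names and the detection threshold of 5 are hypothetical, and it relies only on the `entryLocation`, `signer`, and `vt_ratio` fields shown in the event above.

```python
RUN_KEY_SUFFIX = "\\currentversion\\run"


def vt_detections(vt_ratio: str) -> int:
    """Parse the detection count from a ratio string such as '46/73'."""
    try:
        return int(vt_ratio.split("/")[0])
    except (ValueError, AttributeError):
        # Missing or malformed ratio: treat as no detections.
        return 0


def is_suspicious_autorun(event: dict, min_detections: int = 5) -> bool:
    """Flag unsigned binaries persisting via a Run key with VirusTotal hits."""
    location = event.get("entryLocation", "").lower()
    unsigned = not event.get("signer", "").strip()
    in_run_key = location.endswith(RUN_KEY_SUFFIX)
    detections = vt_detections(event.get("vt_ratio", ""))
    return unsigned and in_run_key and detections >= min_detections
```

The suffix match covers only the plain Run key under any hive; other autorun locations such as RunOnce would need their own entries.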

Many of the anti-virus engines on VirusTotal detected the binary as the NanoCore Remote Access Trojan (RAT), a well-known malware kit sold on underground markets that enables complete control of the infected computer: recording keystrokes, enabling the webcam, stealing files, and much more. Because this malware poses a serious risk and was able to achieve persistence without being blocked by our endpoint security, we prioritized investigating this alert further and initiated an incident.

Once we identified this infected host using one of our exploratory Autoruns plays, the immediate concern was containing the threat to mitigate as much potential loss as possible. We downloaded a copy of the dropper malware from the infected host and performed additional analysis. Initially we wanted to confirm whether other online sandbox services agreed with the findings on VirusTotal. Other services, including app.any.run, also detected Nanocore based on a file called run.dat being written to the %APPDATA%\{GUID} folder, as shown in Figure 4:

Figure 4: app.any.run analysis showing Nanocore infection

The sandbox report also alerted us to an unusual outbound network connection from RegAsm.exe to 185.101.94.172 over port 8166.

Now that we were confident this was not a false positive, we needed to find the root cause of this infection to determine whether any other users were at risk of being victims of this campaign. To begin answering this question, we pulled the Windows Security Event Logs from the host using our asset management tool to gain a better understanding of what occurred on the host at the time of the incident. Immediately, a suspicious event that was occurring every second jumped out: a file named “DHL_Label_Scan _ June 19 2019 at 2.21_06455210_PDF.exe” was spawning the Windows Assembly Registration tool, RegAsm.exe.

Process Information:

New Process ID: 0x4128

New Process Name: C:\Windows\Microsoft.NET\Framework\v2.0.50727\RegAsm.exe

Token Elevation Type: %%1938

Mandatory Label: Mandatory Label\Medium Mandatory Level

Creator Process ID: 0x2ba0

Creator Process Name: \Device\CdRom0\DHL_Label_Scan _ June 19 2019 at 2.21_06455210_PDF.exe

Process Command Line: "C:\WINDOWS\Microsoft.NET\Framework\v2.0.50727\RegAsm.exe"

Figure 5: New process spawned from a ‘CdRom0’ device (the fake .img) calling the Windows Assembly Registration tool

This event stands out for several reasons.

◉ The filename:

1. Attempts to social engineer the user into thinking they are executing a PDF by appending “_PDF”

2. Uses the prefix “DHL_Label_Scan”; shipping services are commonly spoofed by adversaries in emails to spread malware.

◉ The file path:

1. \Device\CdRom0\ is the device path Windows assigns to an optical drive, whether that is a physical CD-ROM drive or a mounted disk image.

2. A fake DHL label is a strange thing to have on a CD-ROM, and it is even stranger to insert one into a work machine and execute that file.

◉ The process relationship:

1. Adversaries abuse the Assembly Registration tool “RegAsm.exe” for bypassing process whitelisting and anti-malware protection.

2. MITRE tracks this common technique as T1121 (since renumbered as T1218.009), indicating, “Adversaries can use Regsvcs and Regasm to proxy execution of code through a trusted Windows utility. Both utilities may be used to bypass process whitelisting through use of attributes within the binary to specify code that should be run before registration or unregistration.”

3. We saw this technique in the app.any.run sandbox report.

◉ The frequency of the event:

1. The event was occurring every second, indicating some sort of command and control or heartbeat activity.
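The file path and process relationship observations above lend themselves to a simple automated check. The Python sketch below is a hedged illustration only: it assumes the event body is available as plain text with the field labels shown in Figure 5 (which resemble Windows process-creation events), and both function names are hypothetical.

```python
import re

# Capture only the fields this check needs from the event body.
FIELD_PATTERN = re.compile(
    r"^[ \t]*(New Process Name|Creator Process Name|Process Command Line)"
    r":[ \t]*(.*?)[ \t]*$",
    re.MULTILINE,
)


def parse_process_event(text: str) -> dict:
    """Extract the process fields of interest from a process-creation event."""
    return {name: value for name, value in FIELD_PATTERN.findall(text)}


def spawned_regasm_from_mounted_image(text: str) -> bool:
    """True if RegAsm.exe was created by a binary running from a CdRom device."""
    fields = parse_process_event(text)
    creator = fields.get("Creator Process Name", "").lower()
    child = fields.get("New Process Name", "").lower()
    return creator.startswith("\\device\\cdrom") and child.endswith("\\regasm.exe")
```

Matching on the `\Device\CdRom` prefix rather than a drive letter keeps the check independent of which letter Windows assigns to the mounted image.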

Mount Up and Drop Out

At this point in the investigation, we have now uncovered a previously unseen suspicious file: “DHL_Label_Scan _ June 19 2019 at 2.21_06455210_PDF.exe”, which is strangely located in the \Device\CdRom0\ directory, and the original “filename.exe” used to establish persistence.

The first event in this process chain shows explorer.exe spawning the malware from the D: drive.

Process Information:

New Process ID: 0x2ba0

New Process Name: \Device\CdRom0\DHL_Label_Scan _ June 19 2019 at 2.21_06455210_PDF.exe

Token Elevation Type: %%1938

Mandatory Label: Mandatory Label\Medium Mandatory Level

Creator Process ID: 0x28e8

Creator Process Name: C:\Windows\explorer.exe

Process Command Line: "D:\DHL_Label_Scan _ June 19 2019 at 2.21_06455210_PDF.exe"

Figure 6: explorer.exe launching the fake PDF from the mounted image

The following event is the same one that originally caught our attention, which shows the malware spawning RegAsm.exe (eventually revealed to be Nanocore) to establish communication with the command and control server:

Process Information:

New Process ID: 0x4128

New Process Name: C:\Windows\Microsoft.NET\Framework\v2.0.50727\RegAsm.exe

Token Elevation Type: %%1938

Mandatory Label: Mandatory Label\Medium Mandatory Level

Creator Process ID: 0x2ba0

Creator Process Name: \Device\CdRom0\DHL_Label_Scan _ June 19 2019 at 2.21_06455210_PDF.exe

Process Command Line: "C:\WINDOWS\Microsoft.NET\Framework\v2.0.50727\RegAsm.exe"

Figure 7: RegAsm reaching out to command and control servers

Finally, the malware spawns cmd.exe, which uses the built-in choice command as a three-second timer and then deletes the original binary with Del:

Process Information:

New Process ID: 0x2900

New Process Name: C:\Windows\SysWOW64\cmd.exe

Token Elevation Type: %%1938

Mandatory Label: Mandatory Label\Medium Mandatory Level

Creator Process ID: 0x2ba0

Creator Process Name: \Device\CdRom0\DHL_Label_Scan _ June 19 2019 at 2.21_06455210_PDF.exe

Process Command Line: "C:\Windows\System32\cmd.exe" /C choice /C Y /N /D Y /T 3 & Del "D:\DHL_Label_Scan _ June 19 2019 at 2.21_06455210_PDF.exe"

Figure 8: Evidence of deleting the original dropper.

At this point in the investigation of the original dropper and the subsequent suspicious files, we still could not answer how the malware ended up on this user’s computer in the first place. However, with the filename of the original dropper to pivot on, a quick web search turned up a thread on Symantec.com from a user asking for assistance with the file in question. In this post, they write that they recognize the filename from a malspam email they received. Based on the Symantec thread and other clues, such as the use of the shipping service DHL in the filename, we concluded that the delivery method was likely email.

Delivery Method Techniques

We used the following Splunk query to search our Email Security Appliance logs for the beginning of the filename we found executing RegAsm.exe in the Windows Event Logs.

index=esa earliest=-30d

[search index=esa "DHL*.img" earliest=-30d

| where isnotnull(cscoMID)

| fields + cscoMID,host

| format]

| transaction cscoMID,host

| eval wasdelivered=if(like(_raw, "%queued for delivery%"), "yes", "no")

| table esaTo, esaFrom, wasdelivered, esaSubject, esaAttachment, Size, cscoMID, esaICID, esaDCID, host

Figure 9: Splunk query looking for original DHL files.
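For readers without Splunk, the grouping and delivery logic of that query can be approximated in Python. This is a rough sketch under stated assumptions: events are represented as dictionaries whose keys mirror the field names in the query (`cscoMID`, `host`, `_raw`), and the function name is hypothetical.

```python
from collections import defaultdict


def delivery_status(events):
    """Group events by (cscoMID, host) and flag messages queued for delivery."""
    grouped = defaultdict(list)
    for event in events:
        # Mirror the `where isnotnull(cscoMID)` filter in the query.
        if event.get("cscoMID") is None:
            continue
        key = (event["cscoMID"], event.get("host"))
        grouped[key].append(event.get("_raw", ""))
    # A message counts as delivered if any of its log lines says so.
    return {
        key: "yes" if any("queued for delivery" in raw for raw in raws) else "no"
        for key, raws in grouped.items()
    }
```

Grouping on the message ID together with the host matters because message IDs are only assumed here to be unique per appliance.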

As expected, the emails all came from the spoofed sender address noreply@dhl.com with some variation of the subject “Re: DHL Notification / DHL_AWB_0011179303/ ETD”. In total, CSIRT identified 459 emails from this campaign sent to our users. Of those 459 emails, 396 were successfully delivered and contained 18 different Nanocore samples.

396 malicious emails making it past our well-tuned and automated email mitigation tools is no easy feat. While the lure the attacker used to social engineer their victims was common and unsophisticated, the technique they employed to evade defenses was successful – for a time.

Detecting the Techniques

During the lessons-learned phase after this campaign, CSIRT developed numerous incident response detection rules to alert on newly observed techniques discovered while analyzing this incident. The first and most obvious is detecting malicious disk image files successfully delivered to a user’s inbox. The false-positive rate for this specific type of attack is low in our environment, with a few exceptions that are easily tuned out based on the sender. If disk image files are more prevalent in your environment, this play could be tuned to look only for disk image files with a small file size.

Another valuable detection rule we developed after this incident monitors for suspicious usage (network connections) of the Assembly Registration tool, RegAsm.exe, on our endpoints, which is ultimately the process Nanocore injected itself into and used to facilitate C2 communication. Also, legitimate use of the choice command to create a self-destructing binary is very unlikely, so monitoring for execution of choice with the command-line arguments we saw in the Windows event above should be a high-fidelity alert.
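As a rough illustration of the choice-based detection, the Python sketch below matches command lines in which choice is used as a timer and is then chained to a delete. The pattern and function name are hypothetical and tuned only to the command line observed in this incident; treat it as a starting point rather than a finished rule.

```python
import re

# choice used as a timer (/T <seconds>), followed by a chained "& del".
SELF_DELETE_PATTERN = re.compile(
    r"\bchoice(?:\.exe)?\b.*?/t\s+\d+.*?&\s*del\b",
    re.IGNORECASE,
)


def is_self_delete_command(command_line: str) -> bool:
    """Return True if the command line waits with choice and then deletes a file."""
    return SELF_DELETE_PATTERN.search(command_line) is not None
```

Requiring both the `/T` timeout and the chained `del` keeps ordinary interactive uses of choice in batch scripts from triggering the alert.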

Some additional, universal takeaways from this incident:

1. Auto-mitigation tools should not be treated as a silver bullet – effective security monitoring, rapid incident response, and defense in depth/layers are more important.

2. Obvious solutions such as blocking extensions at the email gateway are not always realistic in large, multifunction enterprises – .IMG files were legitimately being used by support engineers and could not be blocked.

3. Malware campaigns can slip right past defenders on occasion, so a wide playbook that focuses on how attackers operate and infiltrate (TTPs) is key for finding new and unknown malware campaigns in large enterprises (as opposed to relying exclusively on indicators of compromise).

Indicators of Compromise (IOCs)

2b6f19fac64c847258fe776a2ea6444cc469ac6a348e714fcab23cc6cb2c5b74

327c646431a644192aae8a0d0ebe75f7a2b98d7afa7a446afa97e2a004ca64b0

3718957d7f0da489935ce35b6587a6c93f25cff69d233381131b757778826da3

3873ef89a74a9c03ba363727b20429a45f29a525532d0ef9027fce2221f64f60

3a7c23a01a06c257b2f5b59647461ebf8f58209a598390c2910d20a9c5757c62

4eb2af63e121c22df7945258991168be4a70aa32669db173743701aab94383fb

5d14e5959c05589978680e46bffd586e10c1fcabc21ddd94c713520cd0037640

6a2af44e186531d07c53122d42280bc18929d059b98f0449c1a646d66a778ffb

80ab695da86e97861b294b72ba1ef2e8e2f322e7ec0d0834e71f92497515b63d

a34aa05710cf0afb111181c23468c2dcc3a2c2d6aa496c9dffe45dde11e2c4d1

abf41ea1909a39c644e5b480b176ef8a3c4a80e2ee8b447d4320e777384392cf

af5d9ca1ed166a8d378c5b5ed7e187035f374b4376bdd632c3a2ee156613fd29

afb87da69c9ad418ac29af27602a450a7eae63132443c7bc56ab17785dd3bbfd

d871704baad496b47b15da54e7766c0a468ac66337d99032908ad7d4732ecffb

da79495b8b75c9b122a1116494f68661ec45a1fdfb8fd39c000f1f691b39bc13

deb805ce329f17a48165328879b854674eb34abd704eeb575e643574f31d3e83

eaee0577806861c23bef8737e5ba2d315e9c6bfa38bf409dda9a2a13599615b4

fc0cf381e433cd578128be91dfd7567d2294a6d3ff4d2ce0e3f4046442b1f5f0

185.101.94.172:8166