Many customers are starting to look at third-party tools such as Terraform and Ansible to deploy and manage their infrastructure and applications. In this five-part series we’ll introduce Terraform and look at how it’s used with Cisco products such as ACI. Over the coming weeks, this blog series will cover:

1. Introduction to Terraform

2. Terraform and ACI

3. Explanation of the Terraform configuration files

4. Terraform Remote State and Team Collaboration

5. Terraform Providers – How are they built?

Code Example

https://github.com/conmurphy/intro-to-terraform-and-aci

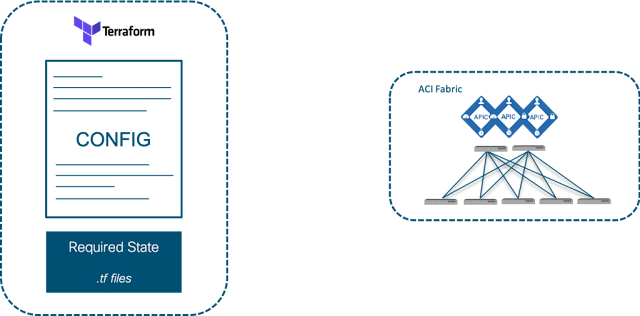

Infrastructure as Code

Before diving straight in, let’s quickly explore the category of tool in which Terraform resides, Infrastructure as Code (IaC). Rather than directly configuring devices through CLI or GUI, IaC is a way of describing the desired state with text files. These text files are read by the IaC tool (e.g. Terraform) which implements the required configuration.

Imagine a sysadmin needs to configure their vCenter/ESXi cluster, including data centres, clusters, networks, and VMs. One option would be to click through the GUI to configure each of the required settings. Not only does this take time, but it may also introduce configuration drift as individual settings are changed over the lifetime of the platform.

Recording the desired configuration settings in a file and using an IaC tool eliminates the need to click through a GUI, thus reducing the time to deployment.

Additionally, the tool can monitor the infrastructure (e.g. vCenter) and ensure that it matches the desired configuration in the file.

Here are some additional benefits provided by Infrastructure as Code:

- Reduced time to deployment

  - As described above, recording the configuration in a file removes the need to click through a GUI.

  - Additionally, infrastructure can quickly be redeployed and reconfigured if a major error occurs.

- Eliminate configuration drift

- Increase team collaboration

  - Since all the configuration is represented in a text file, colleagues can quickly read and understand how the infrastructure has been configured.

- Accountability and change visibility

  - Text files describing configuration can be stored in version control software such as Git, which also lets you view the config differences between two versions.

- Manage more than a single product

  - Most, if not all, IaC tools work across multiple products and domains, providing the benefits mentioned above from a single place.

Terraform is a tool for building, changing, and versioning infrastructure safely and efficiently. Terraform can manage existing and popular service providers as well as custom in-house solutions.

There are a couple of components of Terraform which we will now walk through.

Configuration Files

When you run commands, Terraform searches the current directory for one or more configuration files. These files can be written either in JSON (using the extension .tf.json) or in the HashiCorp Configuration Language (HCL) using the extension .tf.

The Terraform documentation provides detailed information regarding configuration files.

As an example, here is a basic configuration file to configure an ACI Tenant, Bridge Domain, and Subnet.

    provider "aci" {
      # cisco-aci user name
      username = "${var.username}"
      # cisco-aci password
      password = "${var.password}"
      # cisco-aci url
      url      = "${var.apic_url}"
      insecure = true
    }

    resource "aci_tenant" "terraform_tenant" {
      name        = "tenant_for_terraform"
      description = "This tenant is created by the Terraform ACI provider"
    }

    resource "aci_bridge_domain" "bd_for_subnet" {
      tenant_dn   = "${aci_tenant.terraform_tenant.id}"
      name        = "bd_for_subnet"
      description = "This bridge domain is created by the Terraform ACI provider"
    }

    resource "aci_subnet" "demosubnet" {
      bridge_domain_dn = "${aci_bridge_domain.bd_for_subnet.id}"
      ip               = "10.1.1.1/24"
      scope            = "private"
      description      = "This subnet is created by Terraform"
    }

When Terraform runs (commands below), it examines the ACI fabric to confirm whether the three resources (Tenant, BD, and subnet) and their properties match what is written in the configuration file.

If everything matches, no changes will be made.

Cross-checking the configuration file against the resources (e.g. the ACI fabric) reduces configuration drift, since Terraform will create, update, or delete infrastructure to match what’s written in the config file.

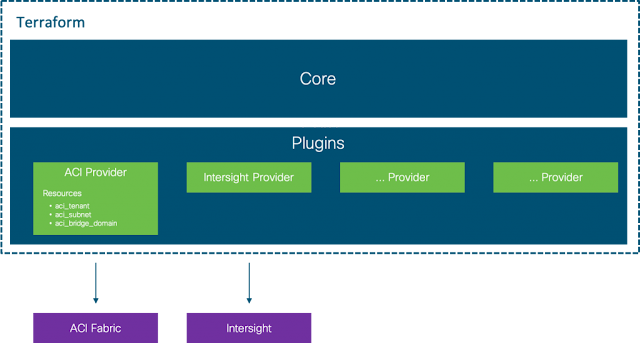

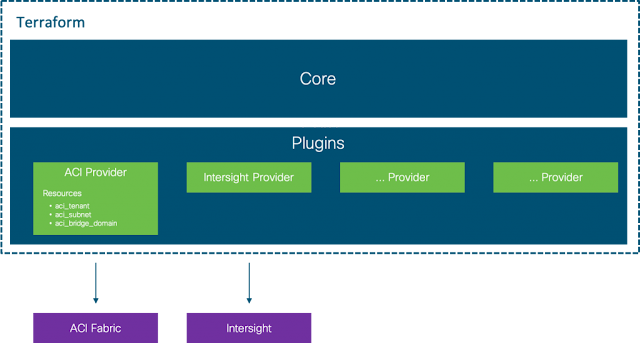

Resources and Providers

A provider is responsible for understanding API interactions and exposing resources. Providers are generally IaaS (e.g. Alibaba Cloud, AWS, GCP, Microsoft Azure, OpenStack), PaaS (e.g. Heroku), or SaaS services (e.g. Terraform Cloud, DNSimple, Cloudflare). The ones we’ll be looking at, however, are Cisco ACI and Intersight.

Resources exist within a provider.

A Terraform resource describes one or more infrastructure objects, for example an ACI Tenant, EPG, Contract, or BD.

A resource block in a .tf config file declares a resource of a given type (e.g. “aci_tenant”) with a given local name (e.g. “my_terraform_tenant”). The local name can then be referenced elsewhere in the configuration file.

The properties of the resource are specified within the curly braces of the resource block.

Here is an ACI Tenant resource as an example.

    resource "aci_tenant" "my_terraform_tenant" {
      name        = "tenant_for_terraform"
      description = "This tenant is created by the Terraform ACI provider"
    }

To create a bridge domain within this ACI tenant, we can use the aci_bridge_domain resource and provide the required properties.

    resource "aci_bridge_domain" "my_terraform_bd" {
      tenant_dn   = "${aci_tenant.my_terraform_tenant.id}"
      name        = "bd_for_subnet"
      description = "This bridge domain is created by the Terraform ACI provider"
    }

Since a BD exists within a tenant in ACI, we need to link both resources together.

In this case, the BD resource can reference a property of the Tenant resource by using the format “${terraform_resource.given_name_of_resource.property}”.

This makes it very easy to connect resources within Terraform configuration files.
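On Terraform 0.12 and later, the same link can also be written as a bare reference without the “${}” wrapper. The following is a minimal sketch that reuses the resource names from the examples above; the older interpolation form is still accepted, so either style can be used depending on the Terraform version you run.

```hcl
resource "aci_tenant" "my_terraform_tenant" {
  name = "tenant_for_terraform"
}

resource "aci_bridge_domain" "my_terraform_bd" {
  # Terraform 0.12+ style: a bare reference of the form
  # <resource_type>.<local_name>.<property>, with no "${...}" around it.
  tenant_dn = aci_tenant.my_terraform_tenant.id
  name      = "bd_for_subnet"
}
```

Either way, the reference also tells Terraform that the bridge domain depends on the tenant, so the tenant is created first.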

Variables and Properties

As we’ve just learnt, resources can be linked together using the format “${}”. When we need to receive input from the user, we can use input variables, which are described in the Terraform documentation.
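As a sketch of how input variables are declared, here are the three variables referenced by the provider block earlier in this post (var.username, var.password, and var.apic_url). The description strings are illustrative assumptions and are not taken from the original repository.

```hcl
# Input variables consumed by the "aci" provider block.
# No default is set, so a value must be supplied at run time.
variable "username" {
  description = "User name for the APIC (illustrative description)"
}

variable "password" {
  description = "Password for the APIC (illustrative description)"
}

variable "apic_url" {
  description = "URL of the APIC (illustrative description)"
}
```

Values can be supplied in a terraform.tfvars file, with the -var option on the command line, or through environment variables named TF_VAR_username, TF_VAR_password, and TF_VAR_apic_url. If no value is given, Terraform prompts for it interactively.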

For many resources, computed values such as an ID are also available. These are not hard-coded in the configuration file but are provided by the infrastructure.

They can be accessed in the same way as previously demonstrated. Note that in the following example the ID property is not hard-coded in the aci_tenant resource, yet it is referenced in the aci_bridge_domain resource. This ID was computed behind the scenes when the tenant was created and made available to any other resource that needs it.

    resource "aci_tenant" "my_terraform_tenant" {
      name        = "tenant_for_terraform"
      description = "This tenant is created by the Terraform ACI provider"
    }

    resource "aci_bridge_domain" "my_terraform_bd" {
      tenant_dn = "${aci_tenant.my_terraform_tenant.id}"
      name      = "bd_for_subnet"
    }
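To make a computed value visible after an apply, it can also be exposed as an output. The sketch below repeats the tenant resource so that it stands on its own; the output name tenant_id is a hypothetical choice made for illustration.

```hcl
resource "aci_tenant" "my_terraform_tenant" {
  name = "tenant_for_terraform"
}

# Expose the computed ID of the tenant.
# "tenant_id" is a hypothetical output name chosen for this example.
output "tenant_id" {
  value = "${aci_tenant.my_terraform_tenant.id}"
}
```

After terraform apply completes, the output value is printed, and it can be displayed again later with the terraform output command.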

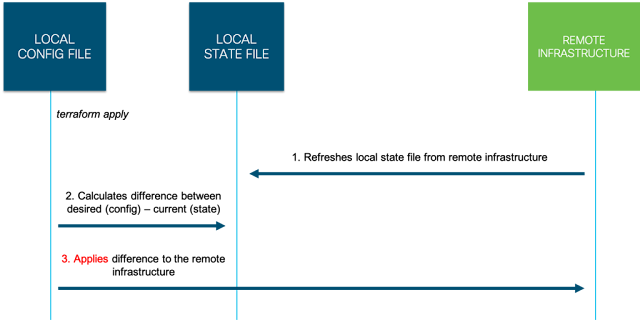

State Files

In order for Terraform to know what changes need to be made to your infrastructure, it must keep track of the environment. This information is stored by default in a local file named “terraform.tfstate”.

NOTE: It’s possible to move the state file to a central location, and this will be discussed in a later post.

As you can see from examining this file, Terraform keeps a record of the infrastructure it has configured. When you run the plan or apply command, your desired config (.tf files) will be cross-checked against the current state (.tfstate file) and the difference calculated.

For example, if a subnet exists within the config.tf file but not within terraform.tfstate, Terraform will configure a new subnet in ACI and update terraform.tfstate.

The opposite is also true. If the subnet exists in terraform.tfstate but not within the config.tf file, Terraform assumes this configuration is not required and will delete the subnet from ACI.

This is a very important point and can result in undesired behaviour if your terraform.tfstate file were to change unexpectedly for some reason.

Here’s a great real-world example.

Commands

There are many Terraform commands available; the key ones you should know about are as follows:

terraform init

Initializes a working directory containing Terraform configuration files. This is the first command that should be run after writing a new Terraform configuration or cloning an existing one from version control. It is safe to run this command multiple times.

During init, Terraform searches the configuration for both direct and indirect references to providers and attempts to load the required plugins.

This is important when using the Cisco infrastructure providers (ACI and Intersight).

NOTE: For providers distributed by HashiCorp, init will automatically download and install plugins if necessary. Plugins can also be manually installed in the user plugins directory, located at ~/.terraform.d/plugins on most operating systems and %APPDATA%\terraform.d\plugins on Windows.
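On Terraform 0.13 and later, a provider that is not distributed by HashiCorp can be declared with a source address so that init can download it from the Terraform Registry. The following is a sketch under the assumption that the ACI provider is published under the CiscoDevNet namespace; check the registry for the exact address, and consider pinning a version that suits your environment.

```hcl
terraform {
  required_providers {
    aci = {
      # Assumed registry address for the Cisco ACI provider.
      # Verify it against the Terraform Registry before use.
      source = "CiscoDevNet/aci"
    }
  }
}
```

With a block like this in the working directory, terraform init resolves and installs the provider plugin without a manual copy into the plugins directory.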

terraform plan

Used to create an execution plan. Terraform performs a refresh, unless explicitly disabled, and then determines what actions are necessary to achieve the desired state specified in the configuration files.

This command is a convenient way to check whether the execution plan for a set of changes matches your expectations without making any changes to real resources or to the state. For example, terraform plan might be run before committing a change to version control, to create confidence that it will behave as expected.

terraform apply

The terraform apply command is used to apply the changes required to reach the desired state of the configuration, or the pre-determined set of actions generated by a terraform plan execution plan.

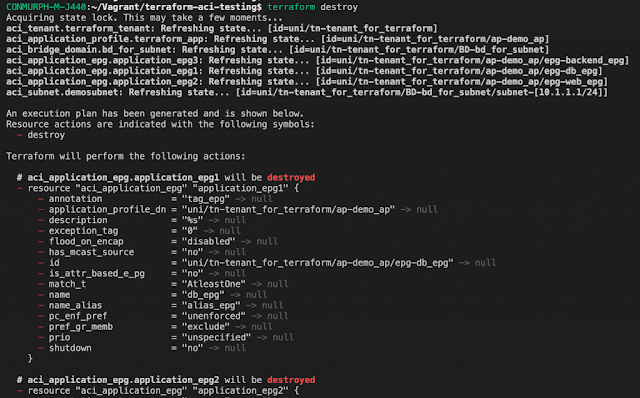

terraform destroy

The terraform destroy command destroys the infrastructure managed by Terraform. It asks for confirmation before destroying anything.

Source: cisco.com