The adoption of the new Catalyst 9800 brought not only new ways to configure wireless controllers, but also several new mechanisms to troubleshoot them. It has some awesome troubleshooting features that help identify root causes faster, with less time and effort.

There are several new key troubleshooting differentiators compared to previous WLC models:

◉ Trace-on-failure: Summary of detected failures

◉ Always-on-tracing: Events continuously stored without having to enable debugging

◉ Radioactive-traces: More detailed debugging logs filtered per MAC or IP address

◉ Embedded Packet capture: Perform filtered packet captures in the device itself

◉ Archive logs: Collect stored logs from all processes

Let me present these features through a real reported wireless problem, a typical “client connectivity issue”, showing how to use them for root cause analysis while following a systematic approach.

Let’s start with a user reporting a wireless client connectivity issue. The user was kind enough to provide the client MAC address and a timestamp for the problem, so the scope is already partially delimited.

The first feature I would use to troubleshoot is Trace-on-failure. The Catalyst 9800 keeps track of predefined failure conditions and shows the number of events for each one, with details about the failed events. This feature lets us be proactive and detect issues that may be occurring in our network even when no client has reported them. Nothing is required for this feature to work: it runs continuously in the background without any debug command.

How to collect Trace-on-failure:

◉ show wireless stats trace-on-failure

Shows the different failure conditions detected and the number of events for each.

◉ show logging profile wireless start last 2 days trace-on-failure

Shows the failure conditions detected and details about each event. Example:

9800wlc# show logging profile wireless start last 2 days trace-on-failure

Load for five secs: 0%/0%; one minute: 1%; five minutes: 1%

Time source is NTP, 20:50:30.872 CEST Wed Aug 4 2021

Logging display requested on 2021/08/04 20:50:30 (CEST) for Hostname: [eWLC], Model: [C9800-CL-K9], Version: [17.03.03], SN: [9IKUJETLDLY], MD_SN: [9IKUJETLDLY]

Displaying logs from the last 2 days, 0 hours, 0 minutes, 0 seconds

executing cmd on chassis 1 ...

Large message of size [32273]. Tracelog will be suppressed.

Large message of size [32258]. Tracelog will be suppressed.

Time UUID Log

----------------------------------------------------------------------------------------------------

2021/08/04 06:32:45.763075 0x1000000e37c92 f018.985d.3d67 CLIENT_STAGE_TIMEOUT State = WEBAUTH_REQUIRED, WLAN profile = CWA-TEST2, Policy profile = flex_vlan4_cwa, AP name = ap3800i-r3-sw2-Gi1-0-35

Tip: To focus on the failures that affect our setup, we can filter the output to remove failure conditions that have no events. We can also monitor the statistics to check which failures are increasing and at what pace. The following command can be used:

◉ show wireless stats trace-on-failure | ex : 0$
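To watch which failures are increasing, one option is to save the counter output twice and compare the two snapshots offline. The Python sketch below assumes each counter line ends in ` : <count>`, which is what the `| ex : 0$` filter implies; the exact layout of the command output is not shown here, so the parsing rule and the function names (`parse_counters`, `increased`) are illustrative assumptions, not a documented format.

```python
import re
from typing import Dict

# Assumption: a counter line looks like "<failure description> : <count>".
_COUNTER_RE = re.compile(r"^\s*(?P<name>.*\S)\s*:\s*(?P<count>\d+)\s*$")


def parse_counters(text: str) -> Dict[str, int]:
    """Map each failure description to its event count; other lines are skipped."""
    counters: Dict[str, int] = {}
    for line in text.splitlines():
        match = _COUNTER_RE.match(line)
        if match:
            counters[match["name"]] = int(match["count"])
    return counters


def increased(before: str, after: str) -> Dict[str, int]:
    """Return only the counters that grew between two snapshots, with the delta."""
    old, new = parse_counters(before), parse_counters(after)
    return {
        name: count - old.get(name, 0)
        for name, count in new.items()
        if count > old.get(name, 0)
    }
```

Dividing each delta by the time between the two snapshots gives the pace at which a failure is growing.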

With these commands we can identify which failure events the controller detected in the last few days, and check whether any event was reported for the client MAC address and timestamp provided by the user.
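When the output is long, checking it against the reported MAC address and timestamp can be scripted. The following Python sketch parses event lines shaped like the one in the example output (timestamp, UUID, MAC address, failure name, details) and keeps those for one client within a time window. The field layout is inferred from that single sample line, and the names `parse_event` and `events_for_client` are hypothetical helpers.

```python
import re
from datetime import datetime, timedelta
from typing import List, NamedTuple, Optional


class FailureEvent(NamedTuple):
    when: datetime
    uuid: str
    mac: str
    failure: str
    details: str


# Layout inferred from the sample line: "<date> <time> <uuid> <mac> <FAILURE> <details>"
_EVENT_RE = re.compile(
    r"^(?P<ts>\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}\.\d{6})\s+"
    r"(?P<uuid>0x[0-9a-fA-F]+)\s+"
    r"(?P<mac>[0-9a-fA-F]{4}\.[0-9a-fA-F]{4}\.[0-9a-fA-F]{4})\s+"
    r"(?P<failure>\S+)\s*(?P<details>.*)$"
)


def parse_event(line: str) -> Optional[FailureEvent]:
    """Return a FailureEvent, or None for banner/header lines that do not match."""
    match = _EVENT_RE.match(line.strip())
    if match is None:
        return None
    when = datetime.strptime(match["ts"], "%Y/%m/%d %H:%M:%S.%f")
    return FailureEvent(when, match["uuid"], match["mac"].lower(),
                        match["failure"], match["details"])


def events_for_client(text: str, mac: str, around: datetime,
                      window: timedelta = timedelta(minutes=30)) -> List[FailureEvent]:
    """Keep events for one client MAC within +/- window of the reported time."""
    hits = []
    for line in text.splitlines():
        event = parse_event(line)
        if event and event.mac == mac.lower() and abs(event.when - around) <= window:
            hits.append(event)
    return hits
```

Called with the saved command output, the client MAC in dotted notation, and the time the user reported, it returns only the events worth reading first.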

If there is no event for the issue the user reported, or if we need more details, I would use the next feature, Always-on-tracing.

The Catalyst 9800 continuously logs control plane events per process into a memory buffer and copies them to disk regularly. Each process's logs can span several days, even on a fully loaded controller.

This feature lets us check events that occurred in the past even without any debugs enabled. This can be very useful to get the context and actions that caused a client or AP disconnection, to check client roaming patterns, or to see which SSIDs a client connected to. This is a huge advantage compared with previous platforms, where we had to enable the “debug client” command after the issue occurred and wait for the next occurrence.

Always-on-tracing can be used to check past events for clients, APs, or any wireless-related process. We can collect all events for the wireless profile or filter by a specific client or AP MAC address. By default, the command shows the last 10 minutes and displays the output in the terminal, but we can specify a start/end time to select the period we want logs for, and we can store them in a file.

How to collect Always-on-tracing:

◉ show logging profile wireless

Shows the last 10 minutes of logs from all wireless-related processes in the WLC terminal.

◉ show logging profile wireless start last 24 hours filter mac MAC-ADDRESS to-file bootflash:CLIENT_LOG.txt

Shows events for a specific client/AP MAC address in the last 24 hours and stores the results in a file.

With these commands, and since we know the client MAC address and the timestamp of the issue, we can collect logs for the corresponding point in the past. I always try to get logs starting some time before the issue so I can find out what the client was doing before the problem occurred.
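Users often report MAC addresses in colon or hyphen notation, while the controller output above uses the dotted form (for example f018.985d.3d67). A small Python sketch like the one below can normalize the address and assemble the Always-on-tracing command shown earlier. The helper names `to_dotted_mac` and `always_on_command` are hypothetical, and the command string simply mirrors the syntax from this article.

```python
import re


def to_dotted_mac(mac: str) -> str:
    """Convert aa:bb:cc:dd:ee:ff, aa-bb-cc-dd-ee-ff or aabb.ccdd.eeff to aabb.ccdd.eeff."""
    digits = re.sub(r"[.:\-\s]", "", mac).lower()
    if not re.fullmatch(r"[0-9a-f]{12}", digits):
        raise ValueError(f"not a valid MAC address: {mac!r}")
    return ".".join(digits[i:i + 4] for i in range(0, 12, 4))


def always_on_command(mac: str, hours: int, filename: str) -> str:
    """Build the 'show logging profile wireless' command for one client or AP."""
    return (
        f"show logging profile wireless start last {hours} hours "
        f"filter mac {to_dotted_mac(mac)} to-file bootflash:{filename}"
    )


if __name__ == "__main__":
    print(always_on_command("F0:18:98:5D:3D:67", 24, "CLIENT_LOG.txt"))
```

Choosing the number of hours so that the window starts some time before the reported timestamp keeps the client's earlier activity in the file.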

The Catalyst 9800 has several levels of logging detail. Always-on-tracing stores events at the “info” level. If required, we can enable higher logging levels, like notice, debugging, or even verbose, per process or for a group of processes. Higher levels generate more events and reduce the total period of time that can be logged for that process.

If we couldn’t identify the root cause with the previously collected data and need more in-depth information about all processes and actions, I would use the next feature, Radioactive-traces.

This feature avoids the need to manually increase the logging level per process: it raises the logging level of the different processes involved whenever traffic for a specified set of MAC or IP addresses traverses the system, and returns the logging level to “info” once it is finished.

Radioactive-traces needs to be enabled before the issue occurs, so we have to wait for the next event to collect the data, much like the old “debug client” in legacy controllers. It is a “One Stop Shop” for in-depth troubleshooting of multiple issues, like client-related problems, APs, mobility, RADIUS, etc., and avoids having to enable a list of different debug commands for each scenario. By default, it provides logging level “notice”, but the keyword “internal” can be added to provide additional logging detail intended for development troubleshooting.

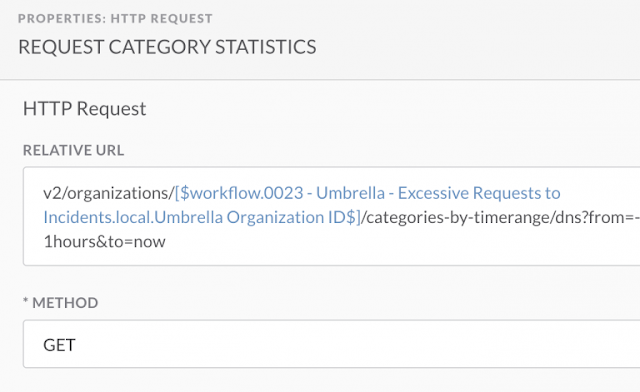

How to collect Radioactive-traces:

◉ CLI Method 1:

show platform condition

clear platform condition all

debug platform condition feature wireless mac dead.beaf.dead

debug platform condition start

Reproduce issue

debug platform condition stop

show logging profile wireless filter mac dead.beaf.dead to-file File.log

If more details are needed for engineering:

show logging profile wireless internal filter mac dead.beaf.dead to-file File.log

◉ CLI Method 2: A script that performs the same steps as Method 1, automatically starting traces for the next 30 minutes. The time is configurable.

debug wireless mac MAC@ [internal]

Reproduce issue

no debug wireless mac MAC@ [internal]

It will generate an ra_trace file in bootflash, named with the date and MAC address.

dir bootflash: | i ra_trace

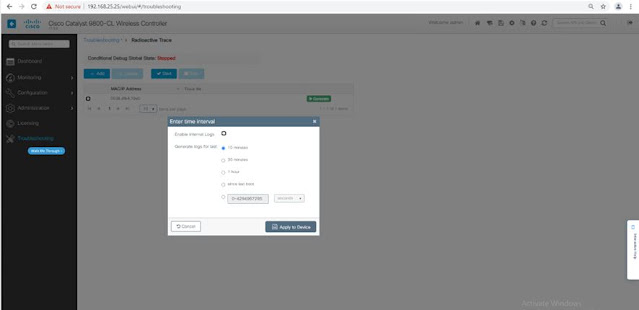

◉ This can also be enabled through GUI, in the troubleshooting section:

Or, directly from the client monitoring:

Tip1: When I need to enable Radioactive-traces, I follow these rules:

◉ If the issue is consistently reproducible, I use CLI Method 2, since it is faster to type and easy to remember.

◉ If the issue is not consistently reproducible, I use CLI Method 1, since it lets me start debugging and wait until the issue occurs before stopping and collecting data.

◉ If I suspect that the issue is a bug and will need to involve developers, or the issue is infrequently observed, I collect logs with the internal keyword.
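Since CLI Method 1 repeats the client MAC address in several commands, the sequence can be generated rather than typed. The Python sketch below only assembles the command strings listed under Method 1; it does not connect to the controller, and the function name `radioactive_trace_steps` and its parameters are hypothetical.

```python
from typing import List, Tuple


def radioactive_trace_steps(mac: str, out_file: str = "File.log",
                            internal: bool = False) -> Tuple[List[str], List[str]]:
    """Return (commands to run before reproducing, commands to run afterwards).

    mac is expected in the controller's dotted notation, e.g. dead.beaf.dead.
    """
    before = [
        "show platform condition",
        "clear platform condition all",
        f"debug platform condition feature wireless mac {mac}",
        "debug platform condition start",
    ]
    # The 'internal' keyword adds the extra detail intended for engineering.
    show = "show logging profile wireless "
    if internal:
        show += "internal "
    show += f"filter mac {mac} to-file {out_file}"
    after = ["debug platform condition stop", show]
    return before, after


if __name__ == "__main__":
    start, finish = radioactive_trace_steps("dead.beaf.dead", internal=True)
    print("\n".join(start))
    print("... reproduce the issue ...")
    print("\n".join(finish))
```

Splitting the list in two mirrors how Method 1 is used for issues that are hard to reproduce: the first half is pasted right away, the second only after the problem has been seen.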

Tip2: We can get “real-time” logs in the WLC terminal, similar to “debug client” on previous platforms, by enabling Radioactive-traces and using the following command to print the output in the terminal:

◉ monitor log profile wireless level notice filter mac MAC-ADDRESS

The current controller terminal will only show the output and will not let us type or execute any command until we exit log monitoring by pressing “Control+C”.

With the information collected in Radioactive-traces it should be possible to identify the root cause in most scenarios. Generally, we would stop at this point for our user reporting client connectivity issues.

For complex issues I usually combine Radioactive-traces with the next feature, Embedded Packet captures. This is a feature that, as a “geek”, I really love. It can be used not only for troubleshooting, but also to understand how a feature works, or which kind of traffic is transmitted and received by the client, AP, or WLC. Since I am familiar with packet capture analysis, I prefer to check the packet capture first, identify the point where the failure occurs, and then focus on that point in the logs.

The Catalyst 9800 controller can collect packet captures on itself and can filter by client MAC address, ACL, interface, or type of traffic. Embedded Packet captures can capture packets in the data plane or in the control plane, which lets us check whether a packet is received by the device but not punted to the CPU. We can easily collect and download filtered packet captures from the controller and get all the details about packets it transmitted and received for a specific client or AP.

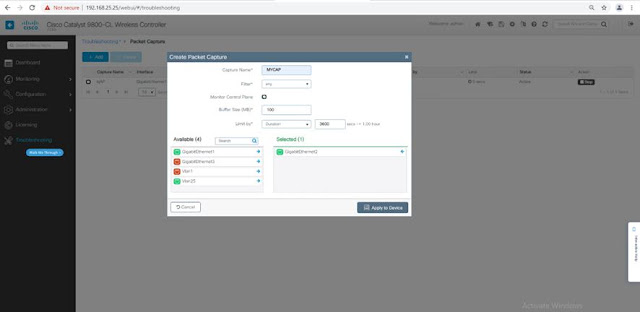

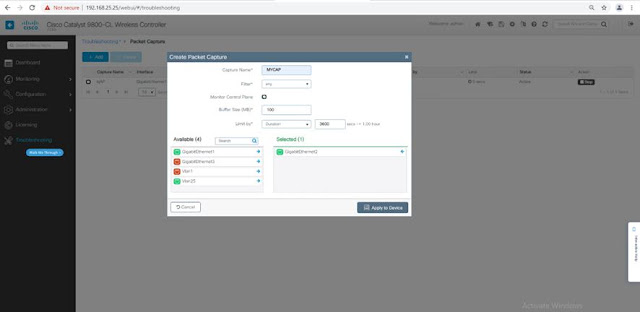

How to collect Embedded Packet captures:

◉ CLI Method:

monitor capture MYCAP clear

monitor capture MYCAP interface Po1 both

monitor capture MYCAP buffer size 100

monitor capture MYCAP match any

monitor capture MYCAP inner mac MAC-ADDRESS

Inner filter available after 17.1.1s

monitor capture MYCAP access-list CAP-FILTER

Apply an ACL filter if needed

monitor capture MYCAP start

Reproduce

monitor capture MYCAP stop

monitor capture MYCAP export flash:|tftp:|http:…/filename.pcap

◉ This can also be enabled through GUI, in the troubleshooting section:

Tip: If we don’t have an ftp/tftp/scp/sftp server to copy the capture to, it can be saved to harddisk or bootflash and retrieved from the WLC GUI using File Manager.

For our client connectivity issue, collecting packet captures together with Radioactive-traces helps us recognize whether any field in the headers or payloads of the captured packets is causing the issue, identify delays in the transmission or reception of packets, or focus on the phase where the issue is seen: association, authentication, IP learning, …
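Delays between packets are usually spotted in a packet analyzer, but a first pass over an exported capture can be scripted. The Python sketch below reads the packet timestamps and reports gaps above a threshold. It assumes the exported file is in the classic libpcap format (pcapng is not handled), which should be verified for the capture at hand; `packet_times_ns` and `large_gaps` are hypothetical helper names.

```python
import struct
from typing import List, Tuple

# Magic bytes -> (struct byte order, multiplier converting the sub-second field to ns)
_PCAP_MAGICS = {
    b"\xd4\xc3\xb2\xa1": ("<", 1000),  # little-endian, microsecond timestamps
    b"\xa1\xb2\xc3\xd4": (">", 1000),  # big-endian, microsecond timestamps
    b"\x4d\x3c\xb2\xa1": ("<", 1),     # little-endian, nanosecond timestamps
    b"\xa1\xb2\x3c\x4d": (">", 1),     # big-endian, nanosecond timestamps
}


def packet_times_ns(data: bytes) -> List[int]:
    """Return each packet's timestamp in nanoseconds since the epoch."""
    if len(data) < 24 or data[:4] not in _PCAP_MAGICS:
        raise ValueError("not a classic pcap file")
    order, to_ns = _PCAP_MAGICS[data[:4]]
    times = []
    offset = 24  # skip the 24-byte global header
    while offset + 16 <= len(data):
        # Record header: seconds, sub-seconds, captured length, original length
        sec, sub, incl_len, _orig_len = struct.unpack_from(order + "IIII", data, offset)
        times.append(sec * 1_000_000_000 + sub * to_ns)
        offset += 16 + incl_len  # jump over the packet bytes
    return times


def large_gaps(times_ns: List[int], threshold_ns: int) -> List[Tuple[int, int]]:
    """Return (index of the later packet, gap in ns) for every gap above the threshold."""
    return [
        (i, later - earlier)
        for i, (earlier, later) in enumerate(zip(times_ns, times_ns[1:]), start=1)
        if later - earlier > threshold_ns
    ]
```

The reported indexes are zero-based positions in the capture, so a packet analyzer that numbers frames from 1 will show the same packet one number higher.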

If the issue could be related to the interaction of different processes or different clients, we can collect logs for the wireless profile instead of filtering by MAC address. Another option is to collect logs for a specific process we suspect could be causing the issue. Since the 9800 stores the logs for all processes, we can gather and view the different logs saved in the WLC until they rotate. Log files are stored as binary files, and the WLC decodes them to display them on the terminal or copy them into a file. Logs from a specific process can be collected with the command:

◉ show logging process PROCESS_NAME start last boot

The Catalyst 9800 has a feature called Archived logs that archives every stored log for the different processes, providing details of what happened to each of the device’s processes. The file generated by Archived logs contains binary log files that, once exported, need to be decoded with a tool that converts binary to text; it is intended to be used by Cisco’s support personnel.

How to collect Archived logs:

◉ request platform software trace archive last 10 days target bootflash:DeviceLogsfrom02082021

This will generate a binary file with all logs from last 10 days.

Archived logs are a last resort and are intended for engineering use due to the amount of data generated. They are usually requested when complete data is needed to identify the root cause, and they are used to troubleshoot issues related to the WLC itself, not to a particular client or AP.

In our case of client connectivity issues, I would collect wireless profile logs to check whether other clients were impacted at the same time and whether error logs appear in a specific process, and only collect Archived logs if requested by development.