You hear a lot about zero trust microsegmentation these days, and rightly so. It has matured into a proven security best practice for preventing unauthorized lateral movement across network resources. It involves dividing your network into isolated segments, or “microsegments,” where each segment has its own set of security policies and controls. That way, even if a breach occurs or a threat gains access to a resource, the blast radius is contained.

As with many security practices, there are different ways to achieve the objective, and much depends on the customer’s environment. For microsegmentation, the key is to have a trusted partner that not only provides a robust security solution but also gives you the flexibility to adapt it to your needs instead of forcing a “one size fits all” approach.

Now, there are broadly two different approaches you can take to achieve your microsegmentation objectives:

◉ A host-based enforcement approach where the policies are enforced on the workload itself. This can be done by installing an agent on the workload or by leveraging APIs in public cloud.

◉ A network-based enforcement approach where the policies are enforced on a network device like an east-west network firewall or a switch.

While a host-based enforcement approach is immensely powerful because it provides rich telemetry about the processes, packages, and CVEs present on the workloads, it may not always be pragmatic. The reasons range from application team perceptions and network security team preferences to the simple need for a different approach to achieve buy-in across the organization.

Long story short, to make microsegmentation practical and achievable, it’s clear that a dynamic duo of host and network-based security is key to a robust and resilient zero trust cybersecurity strategy. Earlier this year, Cisco completed the native integration between Cisco Secure Workload and Cisco Secure Firewall delivering on this principle and providing customers with unmatched flexibility as well as defense in depth. Let’s take a deeper look at what this integration enables our customers to achieve and some of the use cases.

Use case #1: Network visibility via an east-west network firewall

The journey to microsegmentation starts with visibility. This is a perfect opportunity for me to insert the cliché here – “What you can’t see, you can’t protect.” In the context of microsegmentation, flow visibility provides the foundation for building a blueprint of how applications communicate with each other, as well as users and devices – both within and outside the datacenter.

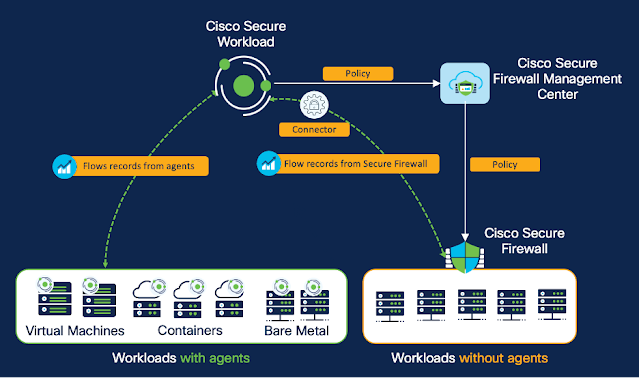

The integration between Secure Workload and Secure Firewall enables the ingestion of NSEL flow records to provide network flow visibility, as shown in Figure 1. You can further enrich this network flow data by bringing in context in the form of labels and tags from external systems like CMDB, IPAM, identity sources, etc. This contextually enriched data set allows you to quickly identify the communication patterns and any indicators of compromise across your application landscape, enabling you to immediately improve your security posture.

Figure 1: Secure Workload ingests NSEL flow records from Secure Firewall
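
To make the idea of contextual enrichment concrete, here is a minimal Python sketch of attaching labels to flow records. It is an illustration only and not the Secure Workload API: the `Flow` record, the `LABELS` inventory (standing in for data exported from a CMDB or IPAM system), and the `labels_for` and `enrich` helpers are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class Flow:
    """A simplified flow record (hypothetical shape, not the NSEL format)."""
    src: str
    dst: str
    dst_port: int
    protocol: str


# Hypothetical label inventory, e.g. exported from a CMDB or IPAM system.
LABELS = {
    "10.1.0.0/16": {"env": "prod"},
    "10.1.1.0/24": {"env": "prod", "app": "web"},
    "10.1.2.0/24": {"env": "prod", "app": "db"},
}


def labels_for(ip: str, inventory: dict = LABELS) -> dict:
    """Return the labels of the most specific subnet containing ip, or {}."""
    addr = ip_address(ip)
    best_net, best_labels = None, {}
    for cidr, labels in inventory.items():
        net = ip_network(cidr)
        if addr in net and (best_net is None or net.prefixlen > best_net.prefixlen):
            best_net, best_labels = net, labels
    return dict(best_labels)


def enrich(flow: Flow) -> dict:
    """Attach source and destination labels to a flow record."""
    return {
        "flow": flow,
        "src_labels": labels_for(flow.src),
        "dst_labels": labels_for(flow.dst),
    }


record = enrich(Flow("10.1.1.5", "10.1.2.9", 5432, "tcp"))
print(record["src_labels"], "->", record["dst_labels"])
```

With labels attached, a flow reads as "web talks to db on port 5432" rather than as a pair of IP addresses, which is what makes communication patterns easy to spot.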

Use case #2: Microsegmentation using the east-west network firewall

The integration of Secure Firewall and Secure Workload provides two powerful, complementary methods to discover, compile, and enforce zero trust microsegmentation policies. The ability to use a host-based method, a network-based method, or a mix of the two gives you the flexibility to deploy in the manner that best suits your business needs and team roles (Figure 2).

And regardless of the approach or mix, the integration enables you to seamlessly leverage the full capabilities of Secure Workload including:

- Policy discovery and analysis: Automatically discover policies that are tailored to your environment by analyzing flow data ingested from the Secure Firewall protecting east-west workload communications.

- Policy enforcement: Onboard multiple east-west firewalls to automate and enforce microsegmentation policies on a specific firewall or set of firewalls through Secure Workload.

- Policy compliance monitoring: The network flow information, when compared against a baseline policy, provides a deep view into how your applications behave and whether they comply with policies over time.

Figure 2: Host-based and network-based approach with Secure Workload
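
The discovery and compliance steps above can be sketched in a few lines of Python. This is a deliberately simplified model under stated assumptions, not how Secure Workload implements policy discovery: flows are assumed to be already reduced to `(source label, destination label, destination port)` tuples, and `discover_policy`, `violations`, and the `min_count` threshold are hypothetical names introduced for illustration.

```python
from collections import Counter


def discover_policy(flows, min_count=1):
    """Build an allow-list from observed flows.

    A (src_label, dst_label, dst_port) tuple is allowed only if it was
    seen at least min_count times in the baseline observation window.
    """
    counts = Counter(flows)
    return {flow for flow, seen in counts.items() if seen >= min_count}


def violations(flows, policy):
    """Return the flows not covered by the allow-list, in observed order."""
    return [flow for flow in flows if flow not in policy]


# Baseline window: what the applications normally do.
baseline = [("web", "db", 5432)] * 3 + [("web", "cache", 6379)]
policy = discover_policy(baseline, min_count=2)

# Later traffic, compared against the baseline policy.
later = [("web", "db", 5432), ("web", "db", 22)]
print(violations(later, policy))
```

Anything outside the discovered allow-list is treated as a deviation, which matches the zero trust default of denying what has not been explicitly permitted.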

Use case #3: Defense in depth with virtual patching via north-south network firewall

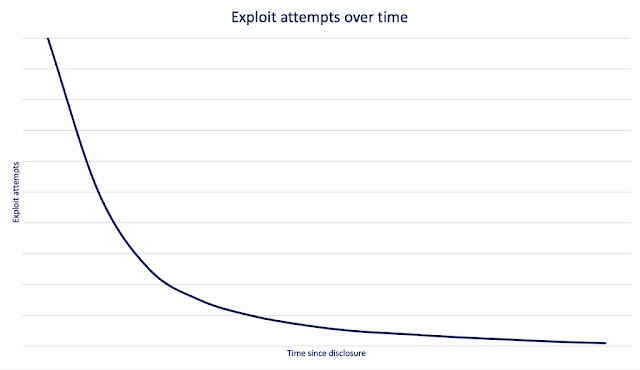

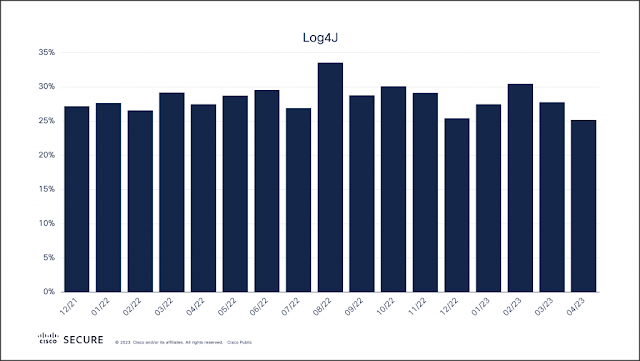

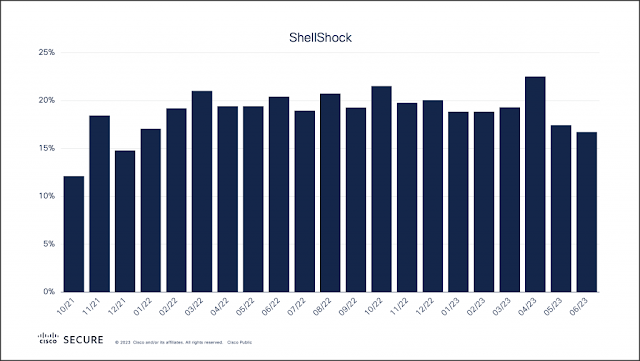

This use case demonstrates how the integration delivers defense in depth and, ultimately, better security outcomes. Applications now play a vital role in nearly every aspect of our lives, and as reliance on software has grown, cyber threats have become more sophisticated and pervasive. Traditional patching, although effective, is not always feasible because of operational constraints and the risk of downtime. When a zero-day vulnerability is discovered, two scenarios commonly play out: 1) a newly discovered CVE poses an immediate risk, but no fix or patch is available yet, and 2) the CVE is not highly critical, so patching it outside the usual patch window is not worth the production or business impact. In both cases, one must accept the interim risk and wait, either for the patch to become available or for the next patch window.

Virtual patching, a form of compensating control, is a security practice that allows you to mitigate this risk by applying an interim protection or a “virtual” fix to known vulnerabilities in the software until it has been patched or updated. Virtual patching is typically done by leveraging the Intrusion Prevention System (IPS) of Cisco Secure Firewall. The key capability, fostered by the seamless integration, is Secure Workload’s ability to share CVE information with Secure Firewall, thereby activating the relevant IPS policies for those CVEs. Let’s take a look at how (Figure 3):

- The Secure Workload agents installed on the application workloads will gather telemetry about the software packages and CVEs present on the application workloads.

- The workload-CVE mapping data is then published to Secure Firewall Management Center. You can choose the exact set of CVEs you want to publish. For example, you can choose to publish only CVEs that are exploitable with the network as the attack vector and that have a CVSS score of 10. This allows you to control any potential performance impact on your IPS.

- Finally, the Secure Firewall Management Center runs the ‘firepower recommendations’ tool to fine-tune and enable the exact set of signatures needed to protect against the CVEs found on your workloads. Once the new signature set is crafted, it can be deployed to the north-south perimeter Secure Firewall.

Figure 3: Virtual patching with Secure Workload and Secure Firewall
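
The CVE selection step described above (publishing only network-exploitable CVEs with a CVSS score of 10) amounts to a filter over the workload-CVE mapping. The Python sketch below shows that logic in isolation. It does not call any Secure Workload or Secure Firewall Management Center API; the `Finding` record, the `select_for_publishing` function, and the CVE identifiers are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """One CVE observed on one workload (hypothetical record shape)."""
    workload: str
    cve_id: str
    cvss: float
    attack_vector: str  # CVSS attack vector: NETWORK, ADJACENT, LOCAL, PHYSICAL


def select_for_publishing(findings, min_cvss=10.0, vector="NETWORK"):
    """Group CVE IDs by workload, keeping only those that match the filter."""
    selected = {}
    for f in findings:
        if f.attack_vector == vector and f.cvss >= min_cvss:
            selected.setdefault(f.workload, set()).add(f.cve_id)
    return selected


# Placeholder CVE IDs, not real vulnerabilities.
findings = [
    Finding("app-01", "CVE-0000-0001", 10.0, "NETWORK"),
    Finding("app-01", "CVE-0000-0002", 7.5, "NETWORK"),
    Finding("app-02", "CVE-0000-0003", 10.0, "LOCAL"),
]
print(select_for_publishing(findings))
```

Lowering `min_cvss` widens the published set and therefore the number of IPS signatures that may be enabled, which is the performance trade-off mentioned above.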

Flexibility and defense in depth are the key to a resilient zero trust microsegmentation strategy

With Secure Workload and Secure Firewall, you can achieve a zero trust security model by combining host-based and network-based enforcement. In addition, virtual patching gives you another layer of defense that lets you maintain the integrity and availability of your applications without sacrificing security. As the cyber threat landscape continues to evolve, harmony between different security solutions is key to delivering more effective protection for valuable digital assets.

Source: cisco.com