Automation has been a focus of interest in the industry for quite some time now. Among the tools available, Ansible and Terraform are popular with automation enthusiasts like me. While Ansible and Terraform differ in their implementation, they are equally supported by products from the Cloud Networking Business Unit at Cisco (Cisco ACI, DCNM/NDFC, NDO, NXOS). Here, we will discuss how Terraform and Ansible work with Nexus Dashboard Fabric Controller (NDFC).

First, I will explain how Ansible and Terraform work, along with their workflows. We will then look at the use cases. Finally, we will discuss implementing Infrastructure as Code (IaC).

Ansible – Playbooks and Modules

For those of you who are new to automation, Ansible has two main parts – the inventory file and playbooks. The inventory file describes the devices we are automating, including any sandbox environments that have been set up. The playbook acts as the instruction manual for performing tasks on the devices declared in the inventory file.

Ansible becomes a system of documentation once the tasks are written in a playbook. The playbook leverages REST API modules to describe the schema of the data that can be manipulated using REST API calls. Once written, the playbook can be executed using the ansible-playbook command.

Ansible Workflow

Terraform – Terraform Init, Plan and Apply

Terraform has one main part – the TF template. The template contains the provider details, the devices to be automated, and the instructions to be executed. The following are the three main points about Terraform:

1. Terraform defines infrastructure as code and manages the full lifecycle: it creates new resources, manages existing ones, and destroys those that are no longer necessary.

2. Terraform offers an elegant user experience for operators to predictably make changes to infrastructure.

3. Terraform makes it easy to re-use configurations for similar infrastructure designs.

While Ansible uses one command to execute a playbook, Terraform uses three to four commands to execute a template. Terraform Init checks the configuration files and downloads the required provider plugins. Terraform Plan creates an execution plan and lets the user check whether it matches the desired intent. Terraform Apply applies the changes, while Terraform Destroy deletes the Terraform-managed infrastructure.
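The command sequence described above can be scripted. The following is a minimal Python sketch, not an official wrapper: it assumes the terraform binary is on the PATH, and the plan file name tfplan and the helper names are hypothetical choices made for illustration. The plan is saved to a file so that the apply step runs exactly what was reviewed.

```python
import subprocess
from typing import Callable, List


def terraform_commands(plan_file: str = "tfplan") -> List[List[str]]:
    """Return the init, plan and apply steps as argument lists."""
    return [
        # Download the required provider plugins and prepare the directory.
        ["terraform", "init", "-input=false"],
        # Build the execution plan and save it for review.
        ["terraform", "plan", "-input=false", f"-out={plan_file}"],
        # Apply exactly the plan that was saved in the previous step.
        ["terraform", "apply", "-input=false", plan_file],
    ]


def run_workflow(workdir: str, runner: Callable = subprocess.run) -> None:
    """Run the steps in order; check=True stops at the first failure."""
    for cmd in terraform_commands():
        runner(cmd, cwd=workdir, check=True)
```

Calling run_workflow(".") from the directory that holds the template would run the three steps in order. Terraform Destroy is deliberately left out of the sketch.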

Once a template is executed for the first time, Terraform creates a file called terraform.tfstate to store the state of the infrastructure after execution. This file is useful when making later changes to the infrastructure, because Terraform compares it with the template to work out what needs to change. The execution of the tasks is also declarative. In other words, the order in which resources are written in the template doesn’t matter.
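To make the role of the state file more concrete, here is a toy Python model of a plan step. It is only an illustration under simplifying assumptions: real Terraform also refreshes state from the provider and orders work by resource dependencies, and the resource names and attributes used here are hypothetical.

```python
from typing import Dict, List, Tuple

Resources = Dict[str, dict]


def plan(desired: Resources, recorded: Resources) -> List[Tuple[str, str]]:
    """Compare the desired template with the recorded state.

    Returns (action, resource_name) pairs. Names are sorted so the
    result does not depend on the order the resources were written in.
    """
    actions = []
    for name in sorted(desired.keys() | recorded.keys()):
        if name not in recorded:
            actions.append(("create", name))
        elif name not in desired:
            actions.append(("destroy", name))
        elif desired[name] != recorded[name]:
            actions.append(("update", name))
    return actions


# State recorded after the first run (hypothetical values).
recorded = {"vrf_red": {"vlan_id": 2000}}
# The template now changes the VRF and adds a network.
desired = {"net_web": {"vlan_id": 2301}, "vrf_red": {"vlan_id": 2001}}

print(plan(desired, recorded))
# [('create', 'net_web'), ('update', 'vrf_red')]
```

Running the same comparison with identical desired and recorded data yields an empty list, which is the sense in which a second apply with no template changes has nothing to do.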

Terraform Workflow

Use Cases of Ansible and Terraform for NDFC

Ansible executes tasks in a top-to-bottom approach. When there are a lot of switches in a fabric, managing all the required configuration through the NDFC GUI gets tedious. For example, configuring multiple vPCs or handling network attachments for each of these switches is tiring and takes up a lot of time. The Ansible modules take a parameter called state that selects the activity to perform, such as creation, modification or deletion, which simplifies making these changes. The playbook uses whichever modules suit the task at hand to make the required configuration changes.
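The effect of the different state values can be sketched with a small Python model. This is a simplified illustration, not the modules' implementation: the state names merged, replaced, overridden and deleted follow the usual Ansible network module convention as I understand it, the network names and attributes are hypothetical, and the module documentation remains the reference for exact behaviour.

```python
from typing import Dict

Objects = Dict[str, dict]


def apply_state(current: Objects, config: Objects, state: str) -> Objects:
    """Return what the controller would hold after a task runs.

    Simplified model: objects are keyed by name, attributes are flat dicts.
    """
    result = {name: dict(attrs) for name, attrs in current.items()}
    if state == "merged":
        # Create missing objects and update the listed attributes.
        for name, attrs in config.items():
            result.setdefault(name, {}).update(attrs)
    elif state == "replaced":
        # Listed objects take exactly the supplied attributes.
        for name, attrs in config.items():
            result[name] = dict(attrs)
    elif state == "overridden":
        # The supplied list becomes the complete set of objects.
        result = {name: dict(attrs) for name, attrs in config.items()}
    elif state == "deleted":
        # Remove the listed objects only (in this model).
        for name in config:
            result.pop(name, None)
    else:
        raise ValueError(f"unsupported state: {state}")
    return result


current = {"net_web": {"vlan_id": 2301}, "net_app": {"vlan_id": 2302}}
print(sorted(apply_state(current, {"net_db": {"vlan_id": 2303}}, "merged")))
# ['net_app', 'net_db', 'net_web']
```

The same task body can therefore create, change or remove objects depending only on the state value, which is what keeps playbooks short when many switches are involved.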

Terraform follows an infrastructure as code approach for executing tasks. We have one main.tf file which contains all the tasks, which are executed with the terraform plan and terraform apply commands. We use terraform plan to verify the tasks and check for errors, and terraform apply to execute the automation. In order to interact with application-specific APIs, Terraform uses providers. All Terraform configurations must declare the providers they require, which are then installed and used to execute the tasks. Providers power all of Terraform’s resource types, and modules can be used to quickly deploy common infrastructure configurations. The provider block has a field where we specify whether the resources are provided by DCNM or NDFC.

Ansible Code Example

Terraform Code Example

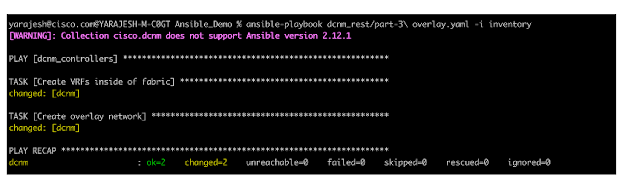

Below are a few examples of how Ansible and Terraform work with NDFC. Using the ansible-playbook command, we can execute our playbook to create a VRF and network.

Below is a sample of how a Terraform code execution looks:

Infrastructure as Code (IaC) Workflow

Infrastructure as Code – CI/CD Workflow

One popular way to use Ansible and Terraform is to run them from a continuous integration (CI) process and then from a continuous delivery (CD) system upon a successful application build:

◉ The CI asks Ansible or Terraform to run a script that deploys a staging environment with the application.

◉ When the staging tests pass, CD then proceeds to run a production deployment.

◉ Ansible/Terraform can then check out the history from version control on each machine or pull resources from the CI server.

An important benefit of IaC is the simplification of testing and verification. CI rules out a lot of common issues, provided we have enough test cases to run after deploying on the staging network. CD then deploys these changes onto production with a simple click of a button.

While Ansible and Terraform have their differences, NDFC supports automation through both tools equally, and customers can choose either one or even both.

Terraform and Ansible complement each other in the sense that they both are great at handling IaC and the CI/CD pipeline. The virtualized infrastructure configuration remains in sync with changes as they occur in the automation scripts.

There are multiple DevOps software alternatives out there to handle the runner jobs: GitLab, Jenkins, AWS and GCP, to name a few.

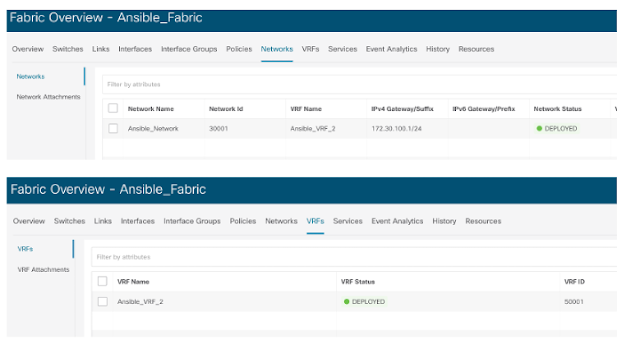

In the example below, we will see how GitLab and Ansible work together to create a CI/CD pipeline. For each change in code that is pushed, CI triggers an automated build and verify sequence on the staging environment for the given project, which provides feedback to the project developers. With CD, infrastructure provisioning and production deployment proceed once the CI verify sequence has passed.

As we have seen above, Ansible works in a similar way to a command-line interpreter: we define a set of commands to run against our hosts in a simple and declarative way. We also have a reset YAML file which we can use to revert all changes we make to the configuration.

NDFC works along with Ansible and the GitLab Runner to accomplish a CI/CD pipeline.

GitLab Runner is an application that works with GitLab CI/CD to run jobs in a pipeline. Our CI/CD job pipeline runs in a Docker container. We install GitLab Runner onto a Linux server and register a runner that uses the Docker executor. We can also limit who has access to the runner, so that pull requests (PRs) can be raised and their merges approved only by a select group of people.

Step 1: Create a repository for the staging and production environments and an Ansible file to keep credentials safe. Here, I have used the ansible-vault command to store the credentials file for NDFC.

Step 2: Create an Ansible file for resource creation. In our case, we have a separate main file each for staging and production, plus a group_vars folder that holds all the information about the resources. The main file pulls the details from the group_vars folder when executed.

Step 3: Create a workflow file and check the output.

As above, our hosts.prod.yml and hosts.stage.yml inventory files act as the main files for implementing resource allocation to production and staging respectively. Our group_vars folder contains all the resource information, including fabric details, switch information and overlay network details.

For the above example, we will show how adding a network to the overlay.yml file and then committing this change invokes the CI/CD pipeline for the above architecture.

Optional Step 4: Create a password file. Create a new file called password.txt containing the Ansible Vault password used to encrypt and decrypt the Ansible Vault file.
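As a rough sketch of how the password file might be used, the Python helper below builds the ansible-playbook command line for either environment. The inventory and password file names come from this walkthrough; the playbook name main.yml and the helper itself are assumptions made for illustration.

```python
from typing import List


def playbook_command(
    inventory: str,
    playbook: str,
    vault_password_file: str = "password.txt",
) -> List[str]:
    """Build an ansible-playbook invocation that can decrypt the vault."""
    return [
        "ansible-playbook",
        "-i", inventory,                               # which environment to target
        "--vault-password-file", vault_password_file,  # non-interactive decryption
        playbook,
    ]


# main.yml is a hypothetical playbook name.
print(" ".join(playbook_command("hosts.stage.yml", "main.yml")))
# ansible-playbook -i hosts.stage.yml --vault-password-file password.txt main.yml
```

Reading the password from a file avoids an interactive prompt, which matters when the command runs inside a pipeline job. The file itself should be kept out of version control.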

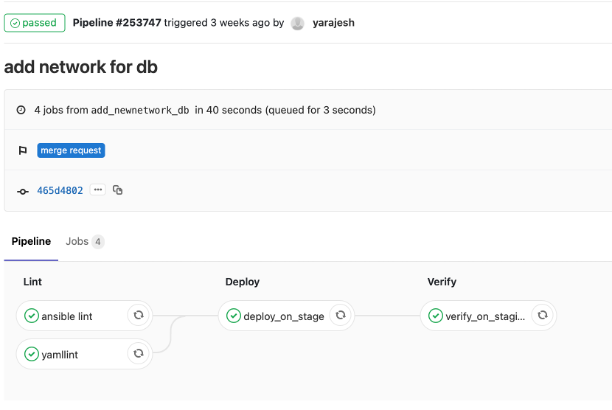

Our overlay.yml file currently has 2 networks. Our staging and production environments have been reset to this state. We will now add our new network network_db to the YAML file as below:

First, we make this change to staging by raising a PR. Once it has been verified, the admin of the repo can approve the PR merge, which makes the changes to production.

Once we make these changes to the Ansible file, we create a branch under this repo to which we commit the changes.

After this branch has been created, we raise a PR. This will automatically start the CI pipeline.

Once the staging verification has passed, the admin/manager of the repo can go ahead and approve the merge, which kicks off the CD pipeline for the production environment.
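The gating logic of this flow can be summarized in a short Python sketch. It is a conceptual model only, not GitLab configuration: the deploy, verify and approved callables are hypothetical stand-ins for the pipeline jobs and the merge approval.

```python
from typing import Callable, List


def run_pipeline(
    deploy: Callable[[str], None],
    verify: Callable[[str], bool],
    approved: Callable[[], bool],
) -> List[str]:
    """Return the environments that were deployed, in order."""
    deployed: List[str] = []

    # CI stage: every pushed change is deployed and verified on staging first.
    deploy("staging")
    deployed.append("staging")
    if not verify("staging"):
        # Feedback goes back to the developers; production is untouched.
        return deployed

    # CD stage: production changes only after verification and approval.
    if approved():
        deploy("production")
        deployed.append("production")
    return deployed


# Stand-in callables: deployment is a no-op, checks pass, merge is approved.
print(run_pipeline(lambda env: None, lambda env: True, lambda: True))
# ['staging', 'production']
```

If verification fails or the merge is not approved, the function returns before the production deployment, which mirrors the protection the PR approval gives the production fabric.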

If we check the NDFC GUI, we can find both staging and production contain the new network network_db.

Source: cisco.com