Peruse any book or paper on computer networking and you will undoubtedly find at least a cursory mention of the OSI or TCP/IP networking stack. This seven-layer (or five-layer) model defines the protocols used in a communication network, described in a hierarchy with abstract interfaces and standard behaviors. In this “Networking Demystified” blog post, we shed light on the modern networking stack from a different vantage point: the focus is on the technologies and areas associated with the various layers of the stack. The goal is to offer a glimpse of what engineers and technologists are working on in this exciting and continuously evolving space that impacts businesses, education, healthcare, and people worldwide.

But first, how did we get to where we are today?

A Brief History of Time (well, … networking mostly)

The early years of networking were all about plumbing: building the pipes to interconnect endpoints and enable them to communicate. The first challenges to conquer were distance and reach—the connection of many devices—which gave rise to local area networks, wide area networks, and the global Internet. The second wave of challenges involved scaling those pipes with technologies that offered faster speeds and feeds and better reliability.

The evolution in Physical and Link Layer technologies continued at a rapid cadence, with several technologies getting their 15 minutes of fame (X.25, Frame Relay, ISDN, and ATM, among others) over the years and others ending up as roadkill (which shall remain unnamed to protect the innocent). The Internet Protocol (IP) quickly emerged as the narrow waist of the hourglass, normalizing many applications over several link technologies. This normalization created an explosion in Internet usage that led to the exhaustion of the IPv4 address space, thereby bringing complexities like Network Address Translation (NAT) to the network as a workaround.

The years that followed in the evolution of networking focused on enabling services and applications that run over the plumbing. Voice, video, and numerous data applications (email, web, file transfer, instant messaging, etc.) converged over packet networks and contended for bandwidth and priority over shared pipes. The challenges to overcome were guaranteeing application quality of service, user quality of experience, and client/provider service level agreements. Technologies for traffic marking (setting bits in packet headers to indicate the quality of service level), shaping (delaying/buffering packets above a rate), and policing (dropping packets above a guaranteed rate), as well as resource reservation and performance management, were developed. As networks grew more extensive, and with the emergence of public (provider-managed) network services, scalability and availability challenges led to the development of predominantly Service Provider oriented technologies such as MPLS and VPNs.
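To make the difference between policing and shaping concrete, here is a minimal sketch built on a token bucket, one common way to meter traffic against a rate. The class name, method names, and parameters are ours, chosen for illustration; real devices implement this in hardware with many more knobs.

```python
class TokenBucket:
    """Hypothetical token bucket: `rate` in bytes/second, `burst` in bytes."""

    def __init__(self, rate: float, burst: float) -> None:
        self.rate = rate
        self.burst = burst
        self.tokens = burst  # start with a full bucket
        self.last = 0.0      # timestamp of the last refill, in seconds

    def _refill(self, now: float) -> None:
        # Add tokens for the elapsed time, capped at the bucket depth.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def police(self, now: float, size: int) -> bool:
        """Policing: forward the packet if tokens allow, otherwise drop it."""
        self._refill(now)
        if self.tokens >= size:
            self.tokens -= size
            return True
        return False

    def shape_delay(self, now: float, size: int) -> float:
        """Shaping: return how long to buffer the packet before sending it."""
        self._refill(now)
        self.tokens -= size  # may go negative: the packet waits instead of dropping
        return max(0.0, -self.tokens / self.rate)


policer = TokenBucket(rate=1000, burst=1500)
print(policer.police(0.0, 1500))  # True: the burst allowance covers it
print(policer.police(0.0, 1500))  # False: above the rate, so it is dropped

shaper = TokenBucket(rate=1000, burst=1500)
shaper.shape_delay(0.0, 1500)
print(shaper.shape_delay(0.0, 500))  # 0.5: held for half a second, not dropped
```

The same excess packet is discarded by the policer but merely delayed by the shaper, which is the essential trade-off between the two.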

Then came the things… the Internet of Things, that is. The success of networks in connecting people gave rise to the idea of connecting machines to machines (M2M) to enable many new use cases in home automation, healthcare, smart utilities, and manufacturing, to name a few. This, in turn, presented a new set of challenges pertaining to networking for constrained devices (i.e., those with limited CPU, memory, and power), ad hoc wireless, time-sensitive communication, edge computing, securing IoT endpoints, scaling M2M networks, and many others. While the industry has solved some of these challenges, many remain on the plates of current and future networking technologists and engineers.

Throughout this evolution, the complexity of networks continued to grow as IT added more and more mission-critical applications and services. Every emerging innovation in networking created new use cases that drove greater network usage. The high-touch, command-line interface (CLI) oriented approach to network provisioning and troubleshooting could no longer deliver the scalability, agility, and availability demanded of networks. A paradigm shift in the approach to network operations and management was needed.

Cue the Controllers

Network management systems are not a new development in the history of networking. They have existed in some form or fashion since the early days. However, those management controls operated at the level of individual protocols, mechanisms, and configuration interfaces. This mode of operation was slowing innovation, increasing complexity, and inflating the operational costs of running networks. The demand for networks to meet business needs with agility led to the requirement for networks to be software-driven and thus programmable.

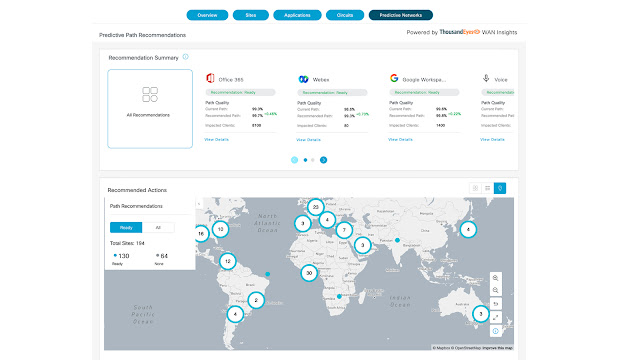

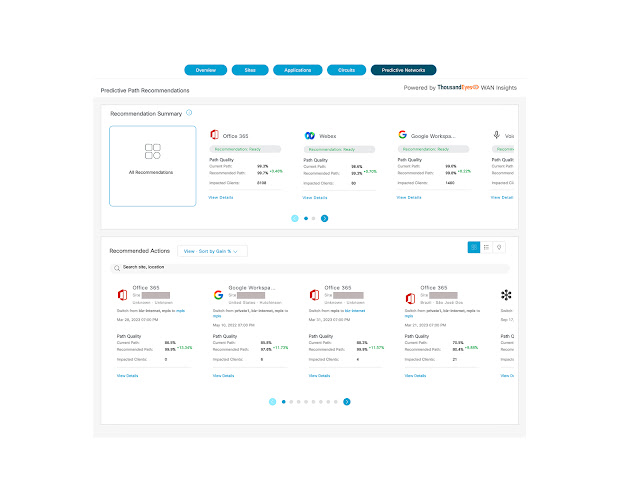

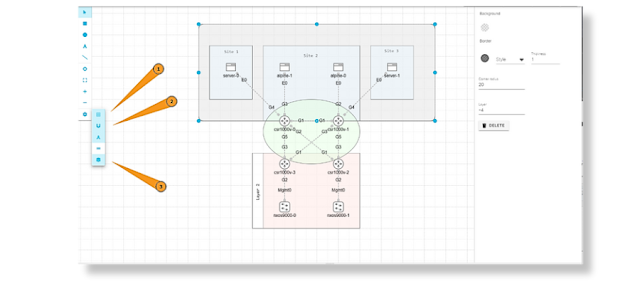

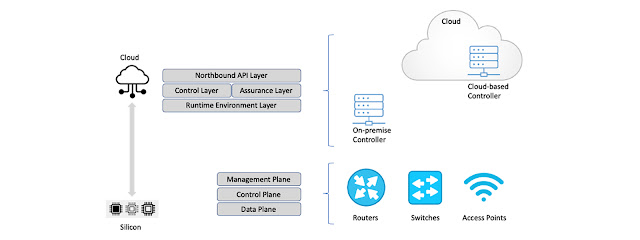

This change led to the notion of Software-Defined Networks (SDN). A core component of a Software-Defined Network is the controller platform: the management system that has a global view of the network and is responsible for automating network configuration, assurance, troubleshooting, and optimization functions. In a sense, the controller replaces the human operator as the brain managing the network. It enables centralized management and control, automation, and policy enforcement across network environments. Controllers have southbound APIs that relay information between the controller and individual network devices (such as switches, access points, routers, and firewalls) and northbound APIs that relay information between the controller and the applications and policy engines.
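As a rough illustration of how the two API directions relate, the sketch below models a controller that accepts a network-wide policy from an application (northbound) and pushes it to each registered device (southbound). Every name here (`SouthboundDriver`, `FakeSwitch`, `Controller`, `apply_policy`, `network_view`) is hypothetical and does not correspond to any particular product's API.

```python
from typing import Protocol


class SouthboundDriver(Protocol):
    """Hypothetical interface the controller uses to talk to one device."""

    def push_config(self, config: dict) -> None: ...
    def read_state(self) -> dict: ...


class FakeSwitch:
    """In-memory stand-in for a real device, for illustration only."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.config: dict = {}

    def push_config(self, config: dict) -> None:
        self.config.update(config)

    def read_state(self) -> dict:
        return {"name": self.name, **self.config}


class Controller:
    def __init__(self) -> None:
        self.devices: dict[str, SouthboundDriver] = {}

    def register(self, name: str, driver: SouthboundDriver) -> None:
        self.devices[name] = driver

    def apply_policy(self, policy: dict) -> None:
        """Northbound entry point: an application states the policy once."""
        for driver in self.devices.values():
            driver.push_config(policy)  # southbound: one push per device

    def network_view(self) -> list[dict]:
        """Northbound entry point: the global view assembled from all devices."""
        return [driver.read_state() for driver in self.devices.values()]


controller = Controller()
controller.register("sw1", FakeSwitch("sw1"))
controller.register("sw2", FakeSwitch("sw2"))
controller.apply_policy({"vlan": 10})
print(controller.network_view())
```

The application never addresses an individual switch; it only sees the controller's abstraction, which is the point of the split.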

Controllers were originally physical appliances deployed on-premises alongside the rest of the network devices. More recently, it has become possible to implement the controller functions in the cloud, in which case the network is referred to as a cloud-managed network. The choice of cloud-managed versus on-premises depends on several factors, including customer requirements and deployment constraints.

Figure 1: Modern Networking Stack

So now that we have a historical view of how networking has evolved over the years, let’s turn to the modern networking stack.

From Silicon to the Cloud

The OSI and TCP/IP reference models only paint a partial picture of the modern networking stack. These models specify the logical functions of network devices but not the controllers. With networks becoming software-defined, the networking stack spans from silicon hardware to the cloud. So, building modern networking gear and solutions has become as much about low-level embedded systems engineering as it is about cloud-native application development.

First, let’s examine the layers of the stack that run on network devices. The functions of these layers can be broadly categorized into three planes: data plane, control plane, and management plane. The data plane is concerned with packet forwarding functions, flow control, quality of service (QoS), and access-control features. The control plane is responsible for discovering topology and capabilities, establishing forwarding paths, and reacting to failures. In comparison, the management plane focuses on functions that deal with device configuration, troubleshooting, reporting, fault management, and performance management.
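One way to picture the three planes is as different methods on the same device. The toy router below is a sketch under our own assumptions (the class and method names are hypothetical): the control plane installs and withdraws routes, the data plane performs a longest-prefix-match lookup for each packet, and the management plane reports what is configured.

```python
import ipaddress


class ToyRouter:
    def __init__(self) -> None:
        # Forwarding table: prefix -> next hop.
        self.fib: dict = {}

    # Control plane: install a path learned, for example, from a routing protocol.
    def install_route(self, prefix: str, next_hop: str) -> None:
        self.fib[ipaddress.ip_network(prefix)] = next_hop

    # Control plane: react to a failure by withdrawing routes through a next hop.
    def withdraw_next_hop(self, next_hop: str) -> None:
        self.fib = {net: hop for net, hop in self.fib.items() if hop != next_hop}

    # Data plane: per-packet lookup, most specific matching prefix wins.
    def forward(self, dst: str):
        addr = ipaddress.ip_address(dst)
        matches = [net for net in self.fib if addr in net]
        if not matches:
            return None  # no route: the packet would be dropped
        return self.fib[max(matches, key=lambda net: net.prefixlen)]

    # Management plane: expose state for reporting and troubleshooting.
    def show_routes(self) -> list:
        return sorted(f"{net} via {hop}" for net, hop in self.fib.items())


router = ToyRouter()
router.install_route("10.0.0.0/8", "A")
router.install_route("10.1.0.0/16", "B")
print(router.forward("10.1.2.3"))   # B (the /16 is more specific)
router.withdraw_next_hop("B")
print(router.forward("10.1.2.3"))   # A (falls back to the /8)
```

A linear scan over the table is fine for a sketch; production data planes use specialized structures and hardware for the same lookup.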

Data Plane

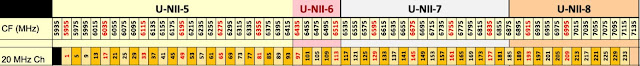

Engineers focusing on the data plane work on or close to the hardware (e.g., ASIC or FPGA design, device drivers, or packet processing engine programming). One of the perennial focus areas in this layer of the stack is performance, in the quest for faster wired link speeds, higher wireless bandwidth, and wider channels. Another focus area is power optimization to achieve usage-proportional energy consumption for better sustainability. A third focus area is determinism in latency/jitter to handle time-sensitive and immersive (AR/VR/XR) applications.

Control Plane

Engineers working on the control plane are involved with designing and implementing networking protocols that handle topology and routing, multicast, OAM, control, endpoint mobility, and policy management, among other functions. Modern network operating systems involve embedded software application development on top of the Linux operating system. Key focus areas in this layer include scaling of algorithms; privacy and identity management; security features; network time distribution and synchronization; distributed mobility management; and lightweight protocols for IoT.

Management Plane

Engineers working on the management plane work with protocols for management information transfer, embedded database technologies, and API design. A key focus area in this layer is scaling the transfer of telemetry information that needs to be pushed from network devices to the controllers to enable better network assurance and closed-loop automation.

Understanding the Controller Software Stack

Next, we will look at the layers of the stack that run on network controllers. Those can be broadly categorized into four layers: the runtime environment layer, the control layer, the assurance layer, and the northbound API layer.

◉ The runtime environment layer is responsible for the lifecycle management of all the software services that run on the controller, including infrastructure services (such as persistent storage and container/VM networking) and application services that are logically part of the other three layers.

◉ The control layer handles the translation and validation of user intent and automatic implementation in the network to create the desired configuration state and enforce policies.

◉ The assurance layer constantly monitors the network state to ensure that the desired state is maintained and performs remedial action when necessary.

◉ The northbound API layer enables the extension of the controller and integration with applications such as trouble-ticketing systems and orchestration platforms.
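The interplay between the control layer and the assurance layer can be sketched as a desired-state loop: render the intent into configuration, compare it with what the network reports, and correct any drift. The intent format and the function names below are assumptions made for illustration only.

```python
def render_intent(intent: dict) -> dict:
    """Control layer: translate a (hypothetical) intent into per-device config."""
    return {
        device: {"vlan": intent["vlan"], "qos": intent.get("qos", "best-effort")}
        for device in intent["devices"]
    }


def find_drift(desired: dict, observed: dict) -> dict:
    """Assurance layer: list settings that differ from the desired state."""
    drift = {}
    for device, want in desired.items():
        have = observed.get(device, {})
        delta = {key: value for key, value in want.items() if have.get(key) != value}
        if delta:
            drift[device] = delta
    return drift


def remediate(observed: dict, drift: dict) -> None:
    """Remedial action: re-apply only the settings that drifted."""
    for device, delta in drift.items():
        observed.setdefault(device, {}).update(delta)


desired = render_intent({"devices": ["sw1", "sw2"], "vlan": 20})
observed = {
    "sw1": {"vlan": 20, "qos": "best-effort"},
    "sw2": {"vlan": 10, "qos": "best-effort"},  # someone changed this by hand
}
drift = find_drift(desired, observed)
print(drift)  # {'sw2': {'vlan': 20}}
remediate(observed, drift)
print(find_drift(desired, observed))  # {}
```

In a real controller, `observed` would come from device telemetry and `remediate` would go through the southbound APIs rather than mutate a dictionary.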

State-of-the-art controllers are not implemented as monolithic applications. To provide the required flexibility to scale out with the size of the network, controllers are designed as cloud-native applications based on micro-services. As such, engineers who work on the runtime environment layer work on cloud runtime and orchestration solutions. Key focus areas here include all the tools needed for applications to run in a cloud-native environment, including:

◉ Storage that gives applications easy and fast access to data needed to run reliably,

◉ Container runtime, which executes application code,

◉ Networks over which containerized applications communicate,

◉ Orchestrators that manage the lifecycle of the micro-services.

Engineers working on the control layer are involved with high-level cloud-native application development that leverages open-source software and tools. Key focus areas at this layer include Artificial Intelligence (AI) and Natural Language Processing (NLP) to handle intent translation. Other critical focus areas include data modeling, policy rendering, plug-and-play discovery, software image management, inventory management, and automation. User interface design and data visualization (including 3D, AR, and VR) are also crucial.

Engineers developing capabilities for the assurance layer are also involved with high-level cloud-native application development. However, the focus here is more on AI capabilities, including Machine Learning (ML) and Machine Reasoning (MR), to automate the detection of issues and provide remediation. Another center of attention is data ingestion and processing pipelines, including complex event processing systems, to handle the large volumes of network telemetry.
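To give a flavor of automated issue detection, the sketch below flags telemetry samples that deviate sharply from their recent history. It is a deliberately simple statistical stand-in, not the ML or MR techniques mentioned above, and the function name, window size, and threshold are our own assumptions.

```python
from statistics import mean, stdev


def find_anomalies(samples: list, window: int = 5, threshold: float = 3.0) -> list:
    """Return indexes of samples far outside the trailing window's normal range."""
    anomalies = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all is unusual.
            if samples[i] != mu:
                anomalies.append(i)
            continue
        if abs(samples[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical latency readings in milliseconds, with one obvious spike.
latency_ms = [10, 11, 10, 12, 11, 10, 95, 11, 10]
print(find_anomalies(latency_ms))  # [6]
```

Note that the spike inflates the window's spread for the samples that follow it, which is one reason production systems use more robust models than a plain trailing average.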

Engineers working on the northbound API layer focus on designing scalable REST APIs that enable network controllers to be integrated with the ecosystem of IT systems and applications that use the network. This layer focuses on API security and scalability and on providing high-level abstractions that hide the complexities and inner workings of networking from applications.

It’s an Exciting Time to be in Network Engineering

As networking evolved over the years, so did the networking stack technologies. What started as a domain focused primarily on low-level embedded systems development has expanded over the years to encompass everything from low-level hardware design to high-level cloud-native application development and everything in between. It is an exciting time to be in the networking industry, connecting industries, enabling new applications, and helping people work together wherever they may be!

Source: cisco.com