Thursday, 14 December 2023

The Technology That’s Remaking OU Health into a Top-Tier Medical Center

Tuesday, 12 December 2023

Bringing Simplicity to Security: The Journey of the Cisco Security Cloud

- We delivered Cisco Secure Access, a cloud-delivered security service edge (SSE) solution grounded in zero trust that gives our customers an exceptional user experience and protected access from any device to anywhere.

- We improved zero-trust functionality with an integrated client experience (Secure Client), industry-first partnerships with Apple and Samsung that use modern protocols to deliver user-friendly, zero-trust access to private applications, and improved network traffic visibility.

- We delivered our Extended Detection and Response (XDR) solution with first-of-its-kind capabilities for automatically recovering from ransomware attacks, which cost businesses billions of dollars annually.

- We have made significant investments in advanced technologies and top talent in strategic areas like multicloud defense, artificial intelligence, and identity with the acquisitions of Valtix, ArmorBlox, and Oort.

- We simplified how customers can procure tightly integrated solutions from us with our first set of Security Suites (User, Cloud, and Breach Protection) that are powered by AI, built on zero trust principles, and delivered by our Security Cloud platform.

- We have taken a major step in making artificial intelligence pervasive in the Security Cloud with the new Cisco AI Assistant for Security and the introduction of our AI Assistant for Firewall Policy. Managing, updating, and deploying policies is one of the most complex and time-consuming security tasks, and one fraught with human error. Our AI Assistant addresses the complexity of setting and maintaining these policies and firewall rules.

Our goal continues to be lifting the complexity tax for customers

- Customers value anything that minimizes disruption when migrating to a new solution or platform. They need our help to simplify this process through features like the Cisco Secure Firewall Migration Tool and the Cisco AI Assistant for Security.

- We must be mindful of the associated operational and business costs. Vendor or software consolidation may not always be as easy as technology migration; for example, customers must factor in the cost of existing software licenses for decommissioned products.

- Hybrid cloud is the de facto operating model for companies today, and security is no exception. We must continue to deliver the benefits of the cloud operating model and SaaS-like functionality to on-premises security environments.

The Road Ahead

- A major priority is to optimize the user experience and simplify management across our portfolio for the features and products we have shipped. We will continue to deliver innovation with a customer-centric approach, shifting focus from deliverables to outcomes: the business value we can provide and the problems we can solve.

- Working closely with our customers to prioritize customer-found defects or security vulnerabilities as we develop new features. In general, security efficacy continues to be one of our top objectives for Cisco Security engineering.

- Harnessing the incredible power and potential of generative AI technology to revolutionize threat response and simplify security policy management. Solving these problems is one of the first “killer applications” for AI, and we’re only scratching the surface of what AI-driven innovation can do.

- With Oort’s identity-centric technology, we will enhance user context telemetry and incorporate its capabilities across our portfolio, including our Duo Identity and Access Management (IAM) technology and our Extended Detection and Response (XDR) portfolio.

- Leveraging our cloud-native expertise and decades of on-premises experience to reimagine and redefine how security appliances are deployed and used.

Saturday, 9 December 2023

How Cisco Black Belt Academy Learns from Our Learners

Learning from our learners

Constantly refining based on learners’ input

Your voice, our actions

An experience based on learners’ needs

Wednesday, 6 December 2023

Why You Should Pass the Cisco 350-701 SCOR Exam

What Is the Cisco 350-701 SCOR Exam?

The 350-701 SCOR exam by Cisco assesses a wide range of competencies, encompassing network, cloud, and content security, as well as endpoint protection and detection. It also evaluates skills in ensuring secure network access, visibility, and enforcement.

The SCOR 350-701 exam, titled "Implementing and Operating Cisco Security Core Technologies v1.0," lasts 120 minutes and includes 90-110 questions. It is associated with the CCNP Security, Cisco Certified Specialist - Security Core, and CCIE Security certifications. Its objectives span the security domains described above.

Tips and Tricks to Pass the Cisco 350-701 SCOR Exam

When dealing with Cisco exams, it's essential to be smart and strategic. Here are some tips and techniques you can use to excel in your Cisco 350-701 exam:

1. Have a Good Grasp of the Cisco 350-701 SCOR Exam Content

First, make sure you clearly understand the exam format. Knowing what is expected of you lets you answer confidently instead of hesitating between seemingly similar choices.

2. Familiarize Yourself With the Exam Topics

Understanding the objectives of the Cisco SCOR 350-701 exam can be highly advantageous. It lets you identify the key concepts in the syllabus so you can concentrate your effort on building expertise in those areas.

3. Develop a Study Schedule

A study schedule keeps you organized and ensures comprehensive coverage. It gives you a clear view of the time available before the exam, so you can decide how much time to devote to study and practice.

4. Take Cisco 350-701 SCOR Practice Exams

Practice exams help you identify gaps in your knowledge, areas for improvement, and whether you need to work faster. You can access dependable Cisco 350-701 SCOR practice exams on the nwexam website. Repeat the practice sessions, pinpoint your weaker areas, monitor your results, and ultimately build confidence in your knowledge and skills.

5. Engage in Online Forums

Numerous online communities focus specifically on Cisco certifications and exams. By joining them, you can connect with people who have relevant experience or work as professionals in the field. Their insights and recommendations can help you avoid mistakes and study more efficiently.

6. Brush Up on Your Knowledge Right Before the Exam

Having a concise set of notes to review just before the exam is beneficial. It refreshes your memory and brings essential knowledge to the front of your mind, saving time you might otherwise spend trying to recall information.

7. Strategies for Multiple-Choice Questions

Multiple-choice strategies are useful when you're unsure of the correct answer. For instance, eliminating clearly incorrect options improves your odds. Keep in mind that Cisco exams generally do not let you return to earlier questions, so rather than spending excessive time on a challenging question, make your best choice and move on to the remaining Cisco 350-701 questions.

Why Should You Pass the Cisco 350-701 SCOR Exam?

Exams are commonly taken to acquire the knowledge and skills needed to solve real-world problems. Passing the Cisco 350-701 SCOR exam offers more than that: as the core exam for the CCNP Security certification, it brings additional advantages, including:

1. Set Yourself Apart From the Crowd

The job market for IT professionals is intensely competitive. Hiring managers, meanwhile, look for highly skilled candidates and often favor those who have demonstrated dedication and commitment to their careers through exams and certifications. Taking the Cisco 350-701 SCOR exam also signals your enthusiasm and practical expertise in your professional domain.

2. Official Validation

Consider the hiring manager's perspective: claiming proficiency in network security technologies through words alone may not be very persuasive. When your resume includes an industry-standard certification from a reputable vendor, however, it speaks for itself. Cisco's strong reputation in the networking field carries considerable weight with employers.

3. Showcase Your Professional Relevance

Many employers prefer to hire versatile professionals who can take on diverse responsibilities within a company. Passing the Cisco 350-701 SCOR exam demonstrates a sound understanding of workplace technologies and your ability to contribute to the organization. In essence, certification assures your employer that your skill set matches the requirements of the position.

4. Boost Your Earnings

Earning the CCNP Security certification can open doors to higher earnings. You also become eligible for positions that offer higher salaries than those available to non-certified professionals.

5. Propel Your Career Advancement Swiftly

If you've been aiming for a promotion within your company, earning the CCNP Security certification can help you get there, even into managerial positions. Organizations widely rely on networking technologies for secure communication and data sharing, and this certification validates your expertise in securing them.

6. Reinforce Your Confidence

Networking positions are typically hands-on and demand consistent performance. Passing the Cisco 350-701 SCOR exam builds confidence in executing tasks, because the skills you acquire give you a solid understanding of network security. Certifications also matter to employers during the hiring process, serving as proof that they have selected a qualified and capable professional.

Conclusion

Advancing in the IT field and achieving your goals can be difficult without additional certifications that validate your capabilities. Many organizations offer certifications, so it's important to choose the one that best aligns with your objectives; otherwise, your investment of time and money could be wasted. Act promptly and earn your Cisco 350-701 SCOR certification using the best resources available to you.

Tuesday, 5 December 2023

Integrated Industrial Edge Compute

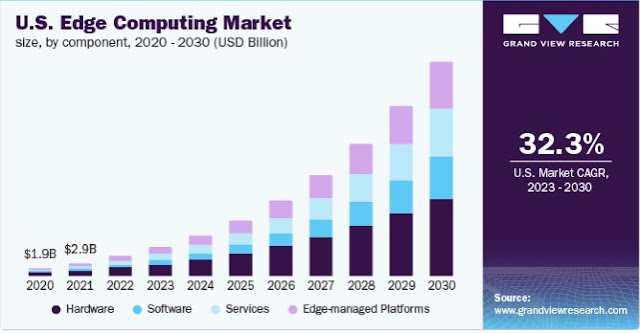

Industry Trends

Container Apps at the Cisco Edge

And More

Saturday, 2 December 2023

Effortless API Management: Exploring Meraki’s New Dashboard Page for Developers

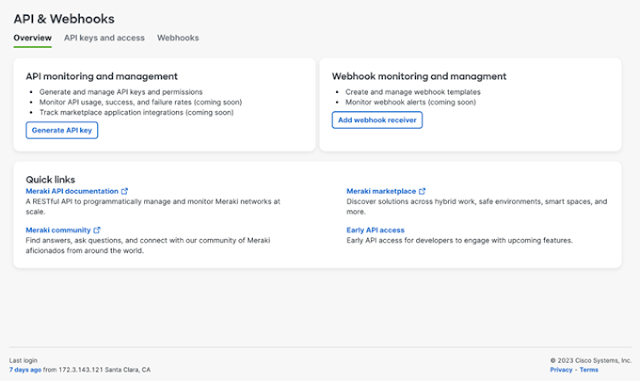

A Dashboard Designed for Developers

Simplifying API Key Management

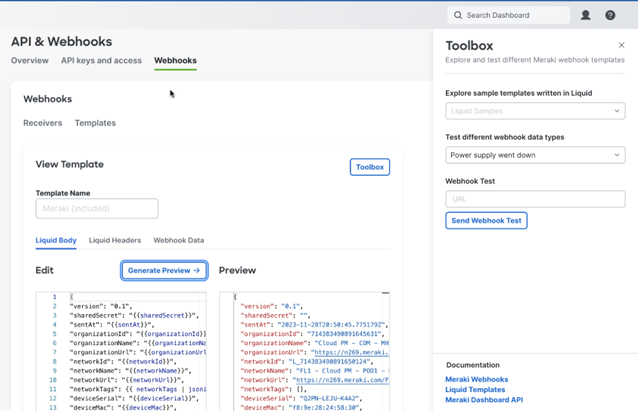

Streamlining Webhook Setup

- Create webhook receivers across your various networks

- Assign payload templates to integrate with your webhook receiver

- Create a custom template in the payload template editor and test your configured webhooks

- Establish custom headers to enable flexible security options

- Experiment with different data types for testing, such as scenarios involving an access point going offline or a camera detecting motion
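The webhook setup described above can be illustrated from the receiver's side. The Python below is a minimal sketch, assuming a payload template that adds a custom shared-secret header; the header name `X-Webhook-Secret`, the function `handle_webhook`, and the `alertType` field are hypothetical choices for illustration, not Meraki-defined names. It checks the header with a constant-time comparison, then parses the JSON body.

```python
import hmac
import json

# Hypothetical custom header name, assumed to be configured in the
# payload template; not a Meraki-defined header.
SECRET_HEADER = "X-Webhook-Secret"


def handle_webhook(headers: dict, body: bytes, expected_secret: str):
    """Check a shared-secret header, then parse a JSON webhook body.

    Returns (HTTP status code, parsed payload dict or None).
    """
    # HTTP header names are case-insensitive, so normalize them first.
    normalized = {name.lower(): value for name, value in headers.items()}
    supplied = normalized.get(SECRET_HEADER.lower(), "")

    # Constant-time comparison avoids leaking the secret through timing.
    if not hmac.compare_digest(supplied.encode(), expected_secret.encode()):
        return 401, None

    try:
        payload = json.loads(body)
    except ValueError:  # covers json.JSONDecodeError and bad UTF-8 bytes
        return 400, None

    # This sketch expects a JSON object at the top level.
    if not isinstance(payload, dict):
        return 400, None
    return 200, payload


if __name__ == "__main__":
    # Simulated delivery; "alertType" is an assumed field name.
    body = json.dumps({"alertType": "motion"}).encode()
    status, payload = handle_webhook({"X-Webhook-Secret": "s3cret"}, body, "s3cret")
    print(status, payload["alertType"])  # prints: 200 motion
```

In a real deployment this function would sit behind an HTTPS endpoint; serving that endpoint is outside the scope of this sketch.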