Making hot desking secure and accessible on a global scale

The first rule of interviewing a CISO at the Australian division of Laing O’Rourke is this: You can’t dig deep into use cases or clients.

And this makes perfect sense. When you’re responsible for securing critical infrastructure for an AUD 6 billion global construction and engineering firm, with projects ranging from transport to defense, even scant details could help an attacker.

Crafting security for joint ventures and a very distributed network

Despite the high stakes, Laing O’Rourke’s security challenges are largely universal, especially since 2020, when the world saw a sharp rise in both the sophistication and the number of DDoS, VPN, and other web-related attacks. Like its peers, the company needed a firm foundation for blocking internet-based attacks on distributed infrastructure.

But here’s where things are different. Because of its business requirements, Laing O’Rourke’s network environment is complex. The company often works on what James Fields, Group Deputy CISO for Laing O’Rourke, calls “mega projects”: joint ventures (JVs) with other companies that are, to put it plainly, competitors.

“Being a construction business, physical security is a real challenge out on project sites. Often, for some of our larger-scale projects, we find ourselves in collaborative partnerships with our rivals,” Fields commented. “At one moment, they’re our partners in a project, and in the next, they could be our competitors for fresh contracts. By engaging in these joint ventures, we’re effectively inviting our competition into our network.”

So it is imperative that Laing O’Rourke deliver secure network access to staff, clients, and JV partners in a hot-desking environment while satisfying clients who demand adherence to different frameworks and certifications. The company must also prevent threat actors, as well as anyone who could benefit competitively, financially, or in any other way, from accessing or exfiltrating information from the network.

It did this by adding two Cisco solutions to the stack: Cisco Secure Firewall and Cisco Identity Services Engine (ISE).

Streamlining security in the face of unnecessary, time-consuming tasks

Getting backing from leadership to invest in the best traffic and threat management tools can seem impossible for many teams. Thankfully, Fields has enthusiastic backing from the board.

“My team and I are truly passionate about cybersecurity, and we have the board’s support not just for compliance’s sake (not just performing a tick box exercise), but also for establishing the best practices and instilling a cyber-centric mindset throughout the business.”

But that doesn’t mean it’s been easy building that framework.

As a snapshot, before Cisco ISE, Fields says, “Our joint venture partners and clients had a potential risk of unintentionally (or deliberately) accessing our corporate network due to shared office space. This prevented business agility, necessitating fixed desks. Consequently, IT had to frequently reconfigure ports on project sites as staff assignments changed based on project phases or collaboration needs.”

Designing those predefined workspaces based on whether the user was from Laing O’Rourke or a JV partner took precious time and energy that could have been spent elsewhere. The Laing O’Rourke team needed intelligent automation to streamline the process.

Laing O’Rourke already had multiple firewalls in place, but it needed a Cisco Secure Firewall to help the company control network access, prevent intrusions and exfiltration, filter URLs, and conduct deep packet inspection. Meanwhile, Cisco ISE would help wrangle all those joint venture devices.

Because the Laing O’Rourke team was already using Cisco switches and knew how Cisco solutions work, the choice to add more Cisco to the stack was that much easier.

“We, like most enterprises, use Cisco switches at our core and at the edge. So it made sense to talk to Cisco about how they could help us protect our network.”

Using Cisco Secure Firewall to streamline access and safeguard the network

Laing O’Rourke needed security that could accommodate hybrid staff members and contractors who hot desk (multiple workers sharing a single physical workstation), and seamless connectivity and network management were crucial.

To address this, Laing O’Rourke turned to Cisco Secure Firewall, which helps the company achieve and maintain the confidentiality, integrity, and availability of its data (the coveted CIA triad). By controlling network access and preventing unauthorized data changes, Cisco Secure Firewall has played a pivotal role in safeguarding Laing O’Rourke’s network infrastructure.

Key stakeholders, including Fields, emphasized the importance of Cisco’s wide-ranging threat intelligence. Its regular updates keep the firewalls current with the latest threat and vulnerability signatures, reinforcing the strength and effectiveness of Laing O’Rourke’s security measures.

Partnering with Cisco has enhanced Laing O’Rourke’s ability to identify and mitigate a wide range of threats through advanced Cisco Secure Firewall features, including intrusion prevention, URL filtering, and deep packet inspection.

The team also used Firewall Management Center (FMC) dashboards to manage its firewalls from a single pane of glass, which proved ultra-convenient whenever it needed insight into intrusion events, potential threats, and geolocation. Thanks to the proactive security measures implemented through Cisco Secure Firewall, Laing O’Rourke has seen a considerable decrease in web-related vulnerability attacks.

Once Cisco Secure Firewall was in place at Laing O’Rourke, it was ready to do what it’s known for: helping prevent DDoS, malware, VPN, and many other attacks.

“When it comes to firewalling, we take a dual vendor approach. Around five years ago we went out to market to replace our [competitor] firewalls. Given our positive experience with Cisco’s networking equipment, Cisco FTDs were on our shopping list,” Fields said. “We still take a dual vendor approach and Cisco is still helping secure our edge.”

Adding a zero-trust framework with ISE for identity

Cisco Secure Firewall has proven itself a formidable force for managing traffic and blocking threats, with automatic updates and frequent attack intel as a sweetener. But ISE has been a revelation for Laing O’Rourke, giving the team a firm, confident hand in managing the IP phones, tablets, and laptops used to conduct business.

“ISE was a real game changer for us. It has transformed the way we operate on project sites, negating the need for predefined workspaces based on if the user was a Laing O’Rourke staff member, JV partner, client, or guest, while simultaneously increasing protection of our corporate network.”

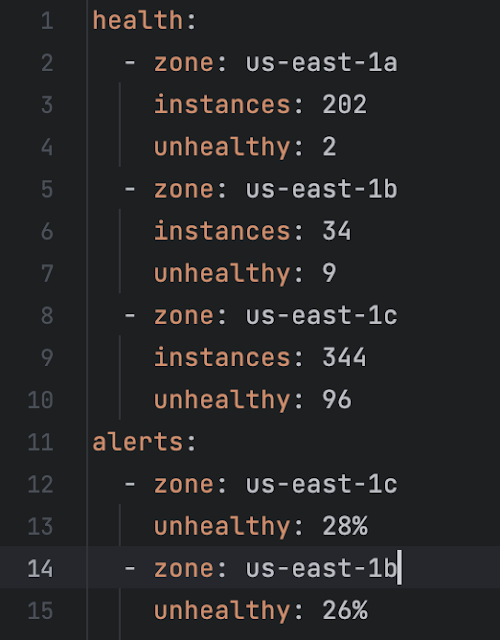

With ISE, a port can be reconfigured dynamically based on the connecting device’s security posture and ownership, permitting access to the right network segments at the right time. This covers the company’s corporate wireless and wired networks, guest Wi-Fi, BYOD, and operational technology (OT) networks.
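To make dynamic port assignment concrete, here is a minimal Python sketch of the kind of decision involved: given who owns a device and whether it passes a posture check, choose the segment its port should land in. It is not ISE’s policy engine or API. The ownership groups mirror the staff, JV partner, client, and guest categories Fields mentions, but the segment names, the single compliant/non-compliant posture flag, and the rule that non-compliant devices go to a quarantine segment are hypothetical assumptions for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Ownership(Enum):
    """Who a connecting device belongs to (categories taken from the article)."""
    STAFF = "staff"
    JV_PARTNER = "jv_partner"
    CLIENT = "client"
    GUEST = "guest"


@dataclass(frozen=True)
class Endpoint:
    mac: str
    ownership: Ownership
    # Assumed: a single pass/fail result, e.g. from an MDM compliance check.
    posture_compliant: bool


# Hypothetical segment names; in practice these would map to something the
# switch can enforce, such as a VLAN or an access list.
SEGMENT_BY_OWNERSHIP = {
    Ownership.STAFF: "corporate",
    Ownership.JV_PARTNER: "jv-partner",
    Ownership.CLIENT: "client",
    Ownership.GUEST: "guest-wifi",
}


def authorize(endpoint: Endpoint) -> str:
    """Return the segment the endpoint's port should be placed in."""
    if not endpoint.posture_compliant:
        # Assumed rule: failing posture overrides ownership entirely.
        return "quarantine"
    return SEGMENT_BY_OWNERSHIP[endpoint.ownership]


if __name__ == "__main__":
    # The same desk port, used by different people on different days.
    sessions = [
        Endpoint("aa:bb:cc:00:00:01", Ownership.STAFF, True),
        Endpoint("aa:bb:cc:00:00:02", Ownership.JV_PARTNER, True),
        Endpoint("aa:bb:cc:00:00:03", Ownership.STAFF, False),
    ]
    for ep in sessions:
        print(ep.mac, "->", authorize(ep))
```

Because the decision depends on the device rather than the desk, any port can serve any user, which is what removes the need for predefined workspaces.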

“While ISE takes a bit of effort to set up right, once it’s up and running, it’s a very stable platform, easy to configure and integrates well with other security platforms like Firewall Threat Defense (FTD) and mobile device management (MDM) solutions,” Fields said.

Asked to name three things that make Cisco ISE a solid solution for Laing O’Rourke, Fields pointed to dynamic profiling, which detects the device type and applies the right policy; MDM integration and compliance checks, which make sure devices are up to date; and anomalous behaviour detection.

According to Fields, a penetration tester discovered a technical gap many years ago that absolutely needed to be closed. Now, if an IP phone starts generating Windows traffic, for instance, ISE’s behavioural detection catches it.

“The lack of physical security on our project sites, along with actively inviting our competitors onto our network, seems like a disaster waiting to happen,” he said. “Cisco ISE has proven to be an invaluable solution for segregating access between our employees and our clients and partners, protecting us from threat actors and rogue network devices.”
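The behavioural check Fields describes, where a device profiled as an IP phone starts producing Windows traffic, can be sketched as a comparison between an endpoint’s stored profile and what its current traffic suggests. This is a hypothetical illustration only: the fingerprint strings, the lookup table, and the function names are assumptions, and ISE’s real profiling draws on far richer data than a single string.

```python
from dataclasses import dataclass

# Hypothetical fingerprint hints. A real profiler combines many signals
# (for example DHCP options, CDP/LLDP announcements, HTTP user agents).
FINGERPRINT_TO_TYPE = {
    "cisco-ip-phone": "ip-phone",
    "windows": "windows-workstation",
}


@dataclass
class EndpointProfile:
    mac: str
    # Device type assigned when the endpoint was first profiled.
    profiled_type: str


def classify(fingerprint: str) -> str:
    """Map an observed fingerprint to a device type, or 'unknown'."""
    return FINGERPRINT_TO_TYPE.get(fingerprint, "unknown")


def detect_anomaly(profile: EndpointProfile, observed_fingerprint: str) -> bool:
    """Flag an endpoint whose observed behaviour contradicts its stored profile.

    Unrecognised fingerprints are not flagged here; only a confident
    classification that differs from the profile counts as an anomaly.
    """
    observed_type = classify(observed_fingerprint)
    return observed_type != "unknown" and observed_type != profile.profiled_type


if __name__ == "__main__":
    phone = EndpointProfile("aa:bb:cc:00:00:10", "ip-phone")
    print(detect_anomaly(phone, "cisco-ip-phone"))  # behaves like a phone
    print(detect_anomaly(phone, "windows"))         # phone now looks like a PC
```

Ignoring unknown fingerprints is a deliberate choice in this sketch to avoid flagging every unfamiliar packet; a stricter policy could treat "unknown" as suspicious instead.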

Cisco Secure Firewall and ISE save money and time

Many network and security pros understand how painful it can be to secure a network, especially one that’s distributed. But with Cisco Secure Firewall in play and ISE to manage BYOD devices, Laing O’Rourke’s networking team has already seen a difference.

To start, those Monday morning calls about desk moves and disrupted network access are no more. Laing O’Rourke is saving minutes, hours, and days, while simultaneously bolstering network security: something that notoriously…takes time.

The user experience has improved, and the team has more time to focus on threats. Though Laing O’Rourke takes a dual vendor approach, Cisco is the go-to for this critical, global company, with ROI evident as soon as the company’s previous firewalls were replaced with Cisco firewalls.

“The [competitor] firewalls were significantly more expensive and offered no additional functionality. The replacement [Cisco] actually saved us money,” Fields said. “What I can say is one of the few things that doesn’t keep me up at night is our network uptime or network-based security — thanks to Cisco Firewall Threat Defense (FTD) and Cisco ISE.”

Source: cisco.com