What a year 2020 was, and what a success it was for the Cisco Embedded Wireless Controller (EWC)!

Despite COVID-19 transforming our lives, despite the challenges of working in a virtual environment for many of us, the C9100 EWC had an excellent year.

We had many thousands of EWC software downloads, and the C9100 EWC Product Booking increased quarter after quarter. We had more than 200 customers controlling 13K+ Access Points!

Let’s try to summarize some learnings from our customers’ 2020 experience with EWC:

Why are customers so interested in EWC, and how does EWC address their needs?

The short story: The EWC gives them the full Catalyst 9800 experience while running in a Container on the Access Point itself.

The long story: For small and medium businesses, EWC is the sweet spot for managing wireless networks. It is simple to use, secure by design, and, thanks to its flexible architecture, ready to grow as the business grows. Once your network grows beyond 100 APs, it can be easily migrated to an appliance Controller or a cloud-based Controller. It therefore offers investment protection.

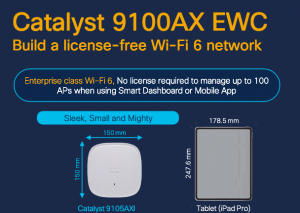

The EWC is supported on all 11ax APs, and the scale varies from 50 APs/1000 clients (C9105AXI, C9115AX, C9117AX) to 100 APs/2000 clients (C9120AX, C9130AX). At that scale, a medium site or a branch deployment gets the advantage of an integrated Wireless Controller, so no other physical hardware is needed.
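The scale figures above lend themselves to a quick sizing check. The following is a minimal Python sketch, not a Cisco tool: the `EWC_SCALE` table only restates the limits quoted in this article, and the function name `fits_ewc` is hypothetical.

```python
# Scale limits as quoted in this article:
# controller AP model -> (max APs, max clients).
EWC_SCALE = {
    "C9105AXI": (50, 1000),
    "C9115AX": (50, 1000),
    "C9117AX": (50, 1000),
    "C9120AX": (100, 2000),
    "C9130AX": (100, 2000),
}


def fits_ewc(model: str, ap_count: int, client_count: int) -> bool:
    """Return True if the site fits within the quoted EWC scale for this model."""
    if model not in EWC_SCALE:
        raise ValueError(f"no EWC scale figure listed for model: {model}")
    max_aps, max_clients = EWC_SCALE[model]
    return ap_count <= max_aps and client_count <= max_clients


if __name__ == "__main__":
    # A 40-AP branch on a C9115AX, then an 80-AP site on two different models.
    print(fits_ewc("C9115AX", 40, 800))
    print(fits_ewc("C9115AX", 80, 1500))
    print(fits_ewc("C9130AX", 80, 1500))
```

A site that exceeds the limits of every model is the case where migrating to an appliance or cloud-based Controller, as described earlier, becomes relevant.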

What EWC features/capabilities are most sought by the customers?

The short story: The EWC is an all-in-one Controller, combining the best-in-class Cisco RF innovations of an 11ax Access Point with the advanced enterprise features of a Cisco Controller.

The long story: First, the Cisco RF innovations in 11ax APs that appeal most to customers:

◉ RF Signature Capture provides superior security for mission-critical deployments.

◉ 11ax APs offer Zero-Wait, Dual Filter DFS (Dynamic Frequency Selection). 9120/9130 APs use both the client radio and the Cisco RF ASIC to detect radar and to virtually eliminate DFS false positives.

◉ Cisco APs implement the aWIPS feature (Advanced Wireless Intrusion Prevention System). This is a threat detection and mitigation mechanism using signature-based techniques, traffic analysis, and device/topology information. It is a fully infrastructure-integrated solution.

In addition, a list of EWC enterprise-ready features that customers are looking for:

◉ AAA Override on WLANs (SSIDs) – the administrator can configure the wireless network for RADIUS authentication and apply VLAN/QoS/ACLs to individual clients based on AAA attributes from the server.

◉ Full support for the latest WPA3 Security Standard and for Advanced Wireless Intrusion Prevention (aWIPS).

◉ AVC (Application Visibility and Control) – the administrator can rate limit/drop/mark traffic based on client application.

◉ Controller Redundancy – any 11ax AP can play the Active/Standby role. EWC has the flexibility to designate the preferred Standby Controller AP.

◉ Identify Apple iOS devices and apply prioritization of business applications for such clients.

◉ mDNS Gateway – forwards Bonjour traffic by re-transmitting it between reflection-enabled VLANs.

◉ Integration with Cisco Umbrella for blocking malicious URLs, malware, and phishing exploits.

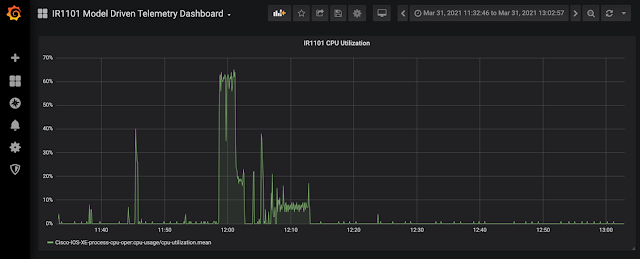

◉ Programmable interfaces with NETCONF/YANG for automation, configuration, and monitoring.

◉ Software Maintenance Upgrades (SMUs) can be applied to either Controller software or AP software.
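To make the NETCONF/YANG item in this list more concrete, here is a minimal sketch of the kind of request an automation script might build. It uses only the Python standard library to construct a NETCONF `<get>` RPC with a subtree filter (RFC 6241). The YANG namespace and container name below are assumptions chosen for illustration; check them against the models your controller actually advertises. The helper name `build_get_rpc` is hypothetical.

```python
import xml.etree.ElementTree as ET

# Base NETCONF namespace defined by RFC 6241.
NETCONF_BASE_NS = "urn:ietf:params:xml:ns:netconf:base:1.0"

# ASSUMPTION: illustrative YANG namespace and top-level container for AP
# operational data. Verify both against the device's advertised capabilities.
AP_OPER_NS = "http://cisco.com/ns/yang/Cisco-IOS-XE-wireless-access-point-oper"
AP_OPER_CONTAINER = "access-point-oper-data"


def build_get_rpc(message_id: str, namespace: str, container: str) -> str:
    """Build a NETCONF <get> RPC that asks for one top-level container."""
    rpc = ET.Element(f"{{{NETCONF_BASE_NS}}}rpc", {"message-id": message_id})
    get = ET.SubElement(rpc, f"{{{NETCONF_BASE_NS}}}get")
    # A subtree filter limits the reply to the requested container.
    flt = ET.SubElement(get, f"{{{NETCONF_BASE_NS}}}filter", {"type": "subtree"})
    ET.SubElement(flt, f"{{{namespace}}}{container}")
    return ET.tostring(rpc, encoding="unicode")


if __name__ == "__main__":
    print(build_get_rpc("101", AP_OPER_NS, AP_OPER_CONTAINER))
```

In practice the payload would be sent over a NETCONF session (SSH, port 830 by default), typically through a client library such as ncclient that handles session setup and message framing. The sketch only shows the shape of the request.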

OK, that is a long list of interesting features, and with so many features a certain degree of complexity might be expected. The next question that comes to mind is:

How about the ease of use of the EWC?

According to reports from the field, the Day-0 configuration of the device can be completed in eight minutes using the WebUI (Smart Dashboard) at the mywifi.cisco.com URL.

The WebUI has been reported as being ‘very straightforward’.

There is no need to reboot the AP after Day-0 configuration is applied.

A quote from a third-party assessment (Miercom) says it all: “The Cisco EWC solution is one of the easiest wireless products to deploy that we’ve encountered to date.”

The user configures a short list of items in Day-0 (either in the WebUI or in the CLI): username/password, AP Profile, WLAN, wireless profile policy, and the default policy tag.
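The Day-0 items listed above can be treated as a simple checklist before provisioning. The sketch below is purely illustrative: the `Day0Config` class, its field names, and `missing_items` are hypothetical and do not correspond to any Cisco API or CLI syntax.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass
class Day0Config:
    """Hypothetical container for the Day-0 items named in this article."""
    username: Optional[str] = None
    password: Optional[str] = None
    ap_profile: Optional[str] = None
    wlan: Optional[str] = None
    wireless_profile_policy: Optional[str] = None
    default_policy_tag: Optional[str] = None


def missing_items(cfg: Day0Config) -> List[str]:
    """Return the names of Day-0 items that are still unset or empty."""
    return [f.name for f in fields(cfg) if not getattr(cfg, f.name)]


if __name__ == "__main__":
    cfg = Day0Config(username="admin", password="example-only", wlan="corp-wifi")
    print(missing_items(cfg))
```

A pre-check like this could run in a provisioning script before the values are pushed through whichever interface is used.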

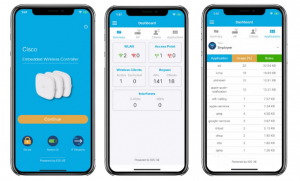

An alternative to the WebUI is the mobile app, available from either Google Play or the Apple App Store. The app allows the user to bring up the device in Day-0, or to view the fleet of APs, the list of top clients, or other wireless statistics.

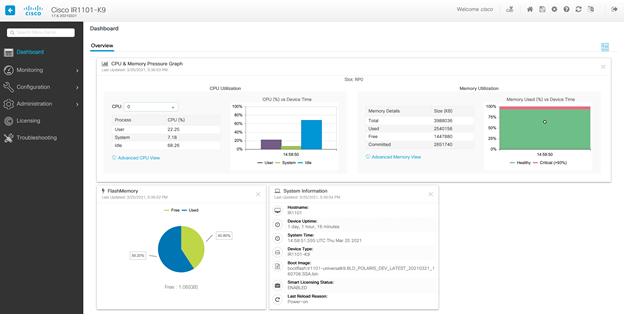

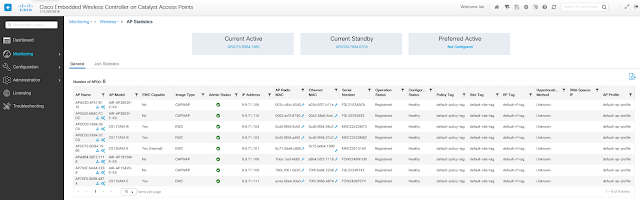

The EWC WebUI is very similar to the 9800 WebUI, so a potential transition to an appliance-based Controller is seamless. Please see the snapshot below:

Trying the EWC WebUI yourself is the most convincing demonstration of its ease of use.

What else did customers like in 2020 regarding EWC?

A couple of EWC deliverables in release 17.4 were welcomed by customers:

◉ DNA License-free availability for EWC reduces the total cost of ownership while still giving customers the advantage of having the Network Essentials stack by default.

◉ New Access Point 9105 models (9105AXI, 9105AXW) give customers value options for their network deployment, with EWC available on the 9105AXI.

Regarding the new 9105 Access Points, the 11ax feature-set is rich: 2×2 MU-MIMO with two spatial streams, uplink/downlink OFDMA, TWT, BSS coloring, 802.11ax beamforming, 20/40/80 MHz channels.

9105AXI has a 1×1.0 mGig uplink interface, while the wall-mountable version (9105AXW) has 3×1.0 mGig interfaces, a USB port, and a Passthru port.

The next IOS-XE releases, coming out in 2021, already have new and interesting EWC features planned. Please stay tuned!

Bottom line

Last year, EWC proved to be a simple, flexible, and secure platform of choice for small/medium business customers, and customer adoption grew continuously throughout 2020.

Source: cisco.com