Businesses today are digitally led, delivering experiences to their customers and consumers through applications. The environments these applications are built on are complex and evolve rapidly, so IT teams, security teams, and business leaders must be able to observe every aspect of application performance and tie that performance to clear business outcomes. This calls for a new type of platform that scales as the business scales and extends easily across an organization’s entire infrastructure and application lifecycle. Leaders need complete visibility, context, and control of their applications so that stakeholders, from employees to business partners to customers, get the best experiences possible.

What is the Cisco Full-Stack Observability (FSO) Platform?

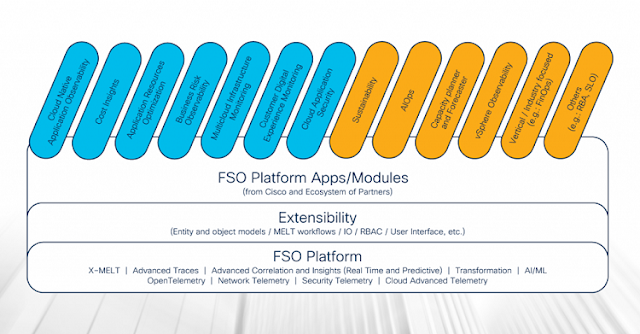

The Cisco FSO Platform is an open, extensible, API-driven full-stack observability (FSO) platform focused on OpenTelemetry and anchored on metrics, events, logs, and traces (MELT). It provides AI/ML-driven analytics as well as a new observability ecosystem that delivers relevant and impactful business insights through new use cases and extensions.

Benefits of the Cisco FSO Platform

Cisco’s FSO Platform is future-proof and vendor-agnostic, bringing together data from multiple domains (including application, networking, infrastructure, security, cloud, and sustainability) and from business sources. It is a unified observability platform whose extensibility spans queries, data ingestion pipelines, and entity models all the way to APIs and a composable UI framework.

This gives Cisco customers in-context, correlated, and predictive insights that enable them to reduce the time to resolve issues, optimize their own users’ experiences, and minimize business risk, all with the flexibility to extend the Cisco FSO Platform’s capabilities by creating new or customized business use cases. This extensibility opens the platform to a diverse ecosystem of developers who can create new solutions or build on existing ones to rapidly add value with observability, telemetry, and actionable insights.

Cisco FSO Platform Diagram

First Application on the Cisco FSO Platform – Cloud Native Application Observability

Cloud Native Application Observability is a premier solution delivered on the Cisco FSO Platform. It is Cisco’s extensible application performance management (APM) solution for cloud native architectures. With business context, and now on the Cisco FSO Platform, it helps customers achieve business outcomes, make the right digital experience decisions, ensure that performance aligns with end-user expectations, and prioritize and reduce risk while securing workloads.

The following are some of the modules built on the Cisco FSO Platform that work with Cloud Native Application Observability.

Modules built by Cisco

Cost Insights: This module provides visibility and insights into application-level costs alongside performance metrics, helping businesses understand the fiscal impact of their cloud applications. It leverages advanced analytics and automation to identify and eliminate unnecessary costs, while also supporting sustainability efforts.

Application Resource Optimizer: This module provides deeper insights into a Kubernetes workload and visibility into the workload’s resource utilization. It helps identify the best candidates for optimization and reduce resource utilization. By running continuous AI/ML experiments on workloads, the Application Resource Optimizer creates a utilization baseline and offers specific recommendations for improvement. It analyzes and optimizes application workloads to make the most of provisioned resources and reduce excessive cloud spending.
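To make the idea of a utilization baseline concrete, here is a minimal sketch in Python. It is not Cisco's algorithm: the function names, the 95th-percentile baseline, and the 20% headroom are all assumptions chosen for illustration. It takes CPU usage samples (in millicores) for one workload and compares a percentile-based baseline against the currently configured request.

```python
import math
from statistics import mean


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list (pct in 0..100)."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def recommend_cpu_request(usage_millicores, current_request, headroom_pct=120):
    """Hypothetical helper: derive a baseline and a suggested CPU request.

    The baseline is the 95th percentile of observed usage; the suggestion
    adds headroom on top of it (120 means baseline + 20%). Both choices
    are illustrative assumptions, not values taken from the product.
    """
    baseline = percentile(usage_millicores, 95)
    recommended = math.ceil(baseline * headroom_pct / 100)
    return {
        "baseline_p95": baseline,
        "mean_usage": mean(usage_millicores),
        "current_request": current_request,
        "recommended_request": recommended,
        "over_provisioned": current_request > recommended,
    }


# Made-up usage samples for a workload that requests 500 millicores.
samples = [120, 135, 150, 110, 140, 160, 130, 125, 145, 155]
report = recommend_cpu_request(samples, current_request=500)
print(report)
```

With these made-up samples the baseline is 160 millicores and the suggested request is 192, so a 500-millicore request would be flagged as over-provisioned. A real optimizer would also weigh memory, burst behavior, and longer observation windows.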

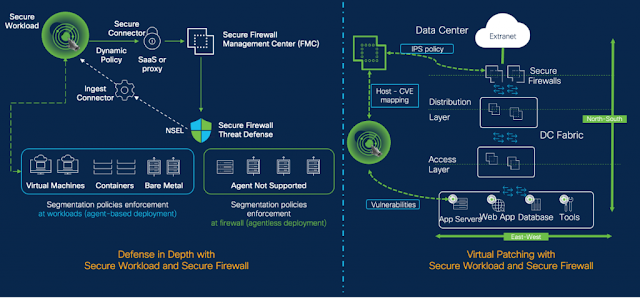

Security Insights: This module provides Business Risk Observability for cloud native applications. It provides cloud native infrastructure insights to locate threats and vulnerabilities, runtime data security to detect and protect against leakage of sensitive data, and business risk prioritization for cloud security. By integrating features from Cisco’s market-leading portfolio of security solutions, it gives security and application teams expanded threat visibility and the intelligent business risk insights they need to respond in real time to revenue-impacting security risks and reduce overall organizational risk profiles.

Cisco AIOps: This module helps visualize contextualized data relevant to the infrastructure, network, incidents, and performance of a business application, all in one place. It simplifies and optimizes IT operations and accelerates time to market for customer-specific AIOps capabilities and requirements.

Modules built by Partners

Evolutio Fintech: This module helps financial customers reduce revenue losses caused by credit card authorization failures. It monitors the impact of infrastructure health on hourly credit card authorizations, aggregated by metadata such as region, schemas, infrastructure components, and merchants.
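The hourly aggregation described here can be sketched as follows. This is an illustrative Python example, not the module's implementation: the event fields (`timestamp`, `region`, `merchant`, `approved`) and the function name are hypothetical. It buckets authorization events by hour and by one metadata dimension, then computes the failure rate per bucket.

```python
from collections import defaultdict
from datetime import datetime


def hourly_failure_rates(events, group_key):
    """Failure rate per (hour, group) for a list of authorization events.

    Each event is a dict with hypothetical fields: an ISO 8601
    'timestamp', a boolean 'approved', and metadata such as 'region'.
    """
    totals = defaultdict(lambda: [0, 0])  # (hour, group) -> [attempts, failures]
    for event in events:
        hour = datetime.fromisoformat(event["timestamp"]).replace(
            minute=0, second=0, microsecond=0
        )
        bucket = totals[(hour.isoformat(), event[group_key])]
        bucket[0] += 1
        if not event["approved"]:
            bucket[1] += 1
    return {
        key: failures / attempts
        for key, (attempts, failures) in totals.items()
    }


# Made-up sample events for illustration only.
events = [
    {"timestamp": "2024-01-01T10:05:00", "region": "us-east", "merchant": "m1", "approved": True},
    {"timestamp": "2024-01-01T10:20:00", "region": "us-east", "merchant": "m2", "approved": False},
    {"timestamp": "2024-01-01T10:40:00", "region": "eu-west", "merchant": "m1", "approved": True},
    {"timestamp": "2024-01-01T11:10:00", "region": "us-east", "merchant": "m1", "approved": True},
]
rates = hourly_failure_rates(events, group_key="region")
print(rates)
```

Passing `group_key="merchant"` instead regroups the same events by merchant. Correlating a spike in one bucket with infrastructure health data for the same hour is the step that ties authorization failures back to their likely cause.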

CloudFabrix vSphere Observability and Data Modernization: This module helps observe vSphere through the FSO Platform and enriches vSphere and vROps data with your environment’s Kubernetes and infrastructure data.

Kanari Capacity Planner and Forecaster: This module provides insights into infrastructure risk factors identified through predictive ML algorithms (ARIMA, SARIMA, LSTM). It uses these insights, together with a baseline capacity forecast, to derive capacity forecasts and plans and to analyze changing capacity needs over time.
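As a rough illustration of what a baseline capacity forecast looks like, the Python sketch below fits a plain least-squares linear trend to past utilization and projects it forward. This is deliberately a much simpler stand-in for the ARIMA, SARIMA, and LSTM models named above, and the function names and sample data are hypothetical.

```python
def linear_trend_forecast(history, steps):
    """Project a least-squares linear trend `steps` periods ahead.

    A simple stand-in for a baseline forecast; it ignores seasonality
    and noise, which models such as SARIMA are designed to handle.
    """
    n = len(history)
    if n < 2:
        raise ValueError("need at least two observations to fit a trend")
    x_mean = (n - 1) / 2
    y_mean = sum(history) / n
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(history))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return [intercept + slope * (n + i) for i in range(steps)]


def periods_until_exhausted(history, capacity, max_steps=120):
    """Number of future periods until the trend reaches `capacity`, or None."""
    forecast = linear_trend_forecast(history, max_steps)
    for i, value in enumerate(forecast, start=1):
        if value >= capacity:
            return i
    return None


# Made-up utilization history, in percent of total capacity per period.
history = [50, 52, 54, 56, 58, 60]
print(linear_trend_forecast(history, 3))
print(periods_until_exhausted(history, capacity=70))
```

For this made-up history the trend grows by 2 points per period, so the next three forecast values are 62, 64, and 66, and the 70% threshold is reached in the fifth future period. Swapping in a seasonal model changes the forecast function but not the planning question it answers.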

Source: cisco.com