Security Operations Centers (SOCs) continue to face new and emerging threats that test the limits of their tooling and staff. Attackers have simple, affordable access to a plethora of cloud-based computing resources and can move more quickly than ever. Keeping up with threats is no longer about adding more people to the SOC to watch logs and queues. It’s about leveraging automation to match the speed of your attackers.

This past April, at the RSA Conference in San Francisco, Cisco announced our new eXtended Detection and Response (XDR) product: Cisco XDR. Cisco XDR combines telemetry and enrichment from a wide variety of products, both Cisco and third party, to give you a single place to correlate events, investigate, and respond to automatically enriched incidents. No modern XDR product is complete without automation, and Cisco XDR has multiple automation features built in to accelerate how your SOC battles its enemies.

Response Playbooks

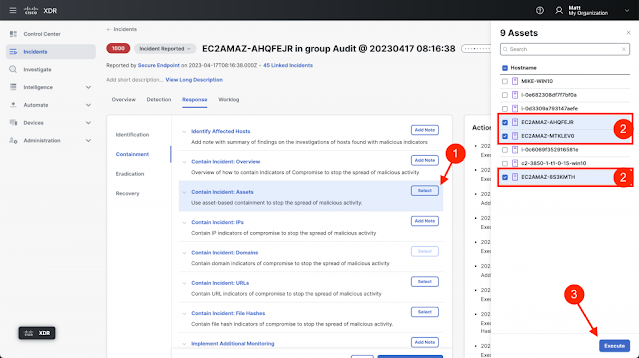

Gaining visibility into an incident is step one, but being able to quickly take meaningful response actions is vital. In Cisco XDR, the new incident manager has what we’re calling the response playbook. The response playbook is a series of suggested tasks and actions broken down into four phases (based on SANS PICERL):

- Identification – Review the incident details and confirm that a breach of policy has occurred.

- Containment – Prevent malicious resources from continuing to impact the environment.

- Eradication – Remove the malicious artifacts from the environment.

- Recovery – Validate eradication and recover or restore impacted systems.

Each of these four phases has its own tasks that guide the analyst through completing relevant steps, but the one to focus on from an automation perspective is containment. Let’s say you have a few endpoints you want to isolate, but they’re managed by different endpoint detection and response (EDR) products. Two are managed by Cisco Secure Endpoint and another is managed by CrowdStrike. With both of these products integrated into Cisco XDR, all you need to do is click “Select” on the “Contain Incident: Assets” task, select the endpoints to contain, and click “Execute.” We’ll handle the rest from there using an automated workflow in Cisco XDR Automation (explained in more detail in the next section). The workflow will check which endpoints are in which EDR and take the corresponding actions in each product. Improving the analyst’s ability to identify and execute a response action from within an incident is one of the many ways Cisco XDR helps your SOC accelerate its operations.
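To make the containment logic concrete, here is a minimal Python sketch of the idea described above: look up which EDR manages each selected endpoint, then run the matching isolation action. It is not the actual Cisco XDR workflow. The inventory, hostnames, and function names are all hypothetical, and the isolation functions are placeholders that only return a message.

```python
from collections import defaultdict

# Hypothetical inventory: which EDR product manages each endpoint.
# In the product, the workflow performs this lookup against the integrations.
ENDPOINT_EDR = {
    "laptop-01": "Cisco Secure Endpoint",
    "laptop-02": "Cisco Secure Endpoint",
    "server-07": "CrowdStrike",
}


def isolate_with_secure_endpoint(hostname):
    # Placeholder: a real workflow would invoke the product's isolation action.
    return f"{hostname}: isolation requested via Cisco Secure Endpoint"


def isolate_with_crowdstrike(hostname):
    # Placeholder: a real workflow would invoke the product's isolation action.
    return f"{hostname}: isolation requested via CrowdStrike"


# Map each EDR name to the action that isolates an endpoint in that product.
ISOLATION_ACTIONS = {
    "Cisco Secure Endpoint": isolate_with_secure_endpoint,
    "CrowdStrike": isolate_with_crowdstrike,
}


def contain_assets(hostnames):
    """Group the selected endpoints by EDR and run the matching action for each."""
    by_edr = defaultdict(list)
    for hostname in hostnames:
        by_edr[ENDPOINT_EDR.get(hostname)].append(hostname)

    results = []
    for edr, hosts in by_edr.items():
        action = ISOLATION_ACTIONS.get(edr)
        for host in hosts:
            if action is None:
                results.append(f"{host}: no integrated EDR found, skipped")
            else:
                results.append(action(host))
    return results
```

Calling `contain_assets(["laptop-01", "server-07"])` in this sketch would route the first host to the Secure Endpoint placeholder and the second to the CrowdStrike placeholder, which mirrors the single “Execute” click covering both products.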

Automated Workflows

With automation being a core component of how we achieve XDR outcomes, it should come as no surprise that Cisco XDR has a fully featured automation engine built in. Cisco XDR Automation is a no-to-low-code, drag-and-drop workflow editor that allows your SOC to accelerate how it investigates and responds, among other things. You can do this by importing workflows from Cisco or by writing your own. To take automation to the next level in Cisco XDR, we have a new concept called Automation Rules. These rules allow you to define criteria that determine when a workflow is executed. Here are some example rule types and when you might use them:

- Approval Task – Take response actions after an approval task is approved, or notify the team if a request is denied.

- Email – Investigate suspicious or user-reported emails as they arrive in a spam or phishing investigation mailbox.

- Incident – Enrich incidents with additional context, take automated response actions, assign to an analyst, push data to other systems like ServiceNow, and more.

- Schedule – Automate repetitive tasks like auditing configurations, collecting data, or generating reports.

- Webhook – Integrate with other systems that can call a webhook when something interesting happens, such as a message being sent to a bot in Webex.

Cisco XDR Automation allows you to move data between systems that don’t know how to communicate with each other, use custom or third-party tools to enrich incidents as they’re generated, or tailor how your analysts respond to threats based on your standard operating procedures.
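The relationship between rules and workflows can be sketched in a few lines of Python. This is only a conceptual model of "criteria that determine when a workflow is executed," not how Cisco XDR Automation is implemented. The `AutomationRule` class, the event fields (`id`, `priority`, `sender`), and the priority threshold are assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AutomationRule:
    name: str
    rule_type: str                     # e.g. "incident", "email", "schedule", "webhook"
    condition: Callable[[dict], bool]  # criteria the event must satisfy
    workflow: Callable[[dict], str]    # workflow to run when the rule matches


def enrich_incident(event):
    # Placeholder workflow: a real one would add context to the incident.
    return f"enriched incident {event['id']}"


def investigate_email(event):
    # Placeholder workflow: a real one would analyze the reported message.
    return f"investigated email from {event['sender']}"


# Hypothetical rules; the priority field and threshold are illustrative only.
RULES = [
    AutomationRule("Enrich high-priority incidents", "incident",
                   lambda e: e.get("priority", 0) >= 800, enrich_incident),
    AutomationRule("Investigate reported emails", "email",
                   lambda e: True, investigate_email),
]


def handle_event(event_type, event, rules=RULES):
    """Run every workflow whose rule type and criteria match the event."""
    return [rule.workflow(event) for rule in rules
            if rule.rule_type == event_type and rule.condition(event)]
```

In this model, an incident event with a priority below the threshold triggers nothing, while one above it runs the enrichment workflow. Keeping the criteria separate from the workflow lets the same workflow be reused by several rules.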

APIs

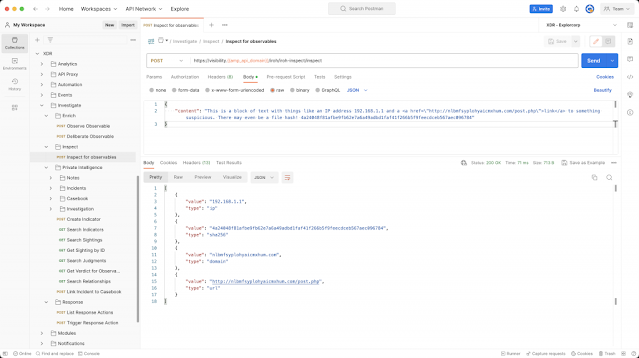

Finally, the core of what powers much of Cisco XDR is its APIs. Cisco XDR has a robust set of APIs that allow you to extend most of the functionality you see in the product out to other systems. You can use Cisco XDR APIs to scrape observables from a block of text (shown in Postman in the accompanying screenshot), gather intelligence from integrated products, conduct an investigation, take response actions using integrated products, and more. The flexibility to use Cisco XDR via APIs allows your SOC to customize its processes at a granular level. Want to enrich tickets in your ticketing platform with intelligence from your security products? We have APIs for that. Want to allow analysts to approve remediation actions by messaging a bot in Webex? We can do that too. Cisco XDR has a full suite of APIs that can help you take your security operations to the next level.
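For readers unfamiliar with the idea of scraping observables from a block of text, the following Python sketch shows the concept locally with a few simplified regular expressions. It does not call any Cisco XDR API and is not how the product performs extraction. The patterns, the `extract_observables` function, and the `type`/`value` output shape are assumptions chosen for illustration.

```python
import re

# Simplified patterns for illustration only. Real extraction handles many more
# observable types and edge cases (defanged indicators, IPv6, URLs, and so on).
PATTERNS = {
    "sha256": re.compile(r"\b[a-fA-F0-9]{64}\b"),
    "ip": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
    "domain": re.compile(r"\b(?:[a-z0-9-]+\.)+[a-z]{2,}\b", re.IGNORECASE),
}


def extract_observables(text):
    """Return a de-duplicated list of {"type", "value"} entries found in text."""
    found = []
    for obs_type, pattern in PATTERNS.items():
        for value in pattern.findall(text):
            entry = {"type": obs_type, "value": value}
            if entry not in found:
                found.append(entry)
    return found
```

A ticketing integration could pass the free-text body of a ticket through a function like this and then hand the resulting observables to an enrichment step. With the product itself, that extraction would be done by the API rather than by local patterns.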

Conclusion

The crucial takeaway from this blog is that automation is a key component of modern security operations. The threats we face evolve constantly and move quickly, and many security teams lack enough skilled staff to monitor all of their tools. We need to use automation to keep up and get ahead of bad actors. From an industry perspective, we also recognize that many teams are trying to do more work with fewer people. Automation can help with that too. We want to enable your SOC to automate the things it doesn’t want to do and accelerate the tasks that truly matter. All of this and more can be done with Cisco XDR.

Source: cisco.com