Here we are, almost four whole months into 2019, and machine learning and artificial intelligence are still hot topics in the security world. Or at least that was the impression I had. Our 2019 CISO Benchmark Report, however, found that between 2018 and 2019, CISO interest in machine learning dropped from 77% to 67%. Similarly, interest in artificial intelligence dropped from 74% to 66%.

Now there are a number of reasons why these values could have dropped over a year. Maybe there’s a greater lack of certainty or confidence when it comes to implementing ML. Or perhaps widespread adoption and integration into more organizations has made it less of a standout issue for CISOs. Or maybe the market for ML has finally matured to the point where we can start talking about the outcomes from ML and AI and not the tools themselves.

No matter where you stand on ML and AI, there’s still plenty to talk about when it comes to how we as an industry are currently making use of them. With that in mind, I’d like to share some thoughts on ways we need to view machine learning and artificial intelligence as well as how we need to shift the conversation around them.

More effective = less obvious

I’m amazed that machine learning is still a hot topic. That’s not to say it does not deserve to be an area of interest. I am saying, however, that what we should be talking about are the outcomes and capabilities it delivers. Some of you may remember when XML was such a big deal that everyone could not stop talking about it. Fast forward to today and no one advertises that they use XML, since that would just be obvious and users care more about the functionality it enables. Machine learning will follow the same path. In time, it will become an essential aspect of the way we approach security and become simply another background process. Once that happens, we can focus on talking about the analytical outcomes it enables.

An ensemble cast featuring machine learning

Anyone who has built an effective security analytics pipeline knows that job one is to ensure that it is resilient to active evasion. Threat actors know as much as or more than you do about the detection methods within the environments they wish to penetrate and persist in. The job of security analytics is to find the most stealthy and evasive threat actor activity in the network, and to do this you cannot rely on a single technique. For that detection to happen, you need a diverse set of techniques, all of which complement one another. While a threat actor may be able to evade one or two of them simultaneously, they don’t stand a chance against hundreds of them! Detection in diversity!

To explain this, I would like to use the analogy of a modern bank vault. Vaults employ a diverse set of detection techniques, such as motion sensors, thermal sensors, and laser arrays, so that on some physical dimension an alarm will be tripped and the appropriate response will ensue. We do the same in the digital world, where machine learning helps us model the timing or volumetric aspects of behavior that are statistically normal so that we can signal on outliers. This can be done all the way down at the protocol level, where models are deterministic, or all the way up at the level of application or user behavior, which can sometimes be less deterministic. We have had years to refine these analytical techniques and have published well over 50 papers on the topic in the past 12 years.
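To make "signal on outliers" a little more concrete, here is a minimal sketch of one volumetric check. It is not how Stealthwatch does it; it simply assumes we have a baseline of bytes-per-minute samples for one host and flags new samples that sit far from that baseline. The function name, the sample numbers, and the threshold of three standard deviations are all illustrative assumptions.

```python
from statistics import mean, stdev


def volumetric_outliers(baseline, observed, threshold=3.0):
    """Return (index, value, z_score) for observed samples far from the baseline.

    baseline and observed are sequences of numbers, for example bytes per
    minute for a single host. The threshold is an assumed cut-off, not a
    recommended value.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any different value is an outlier.
        return [(i, v, float("inf")) for i, v in enumerate(observed) if v != mu]

    flagged = []
    for i, value in enumerate(observed):
        z = (value - mu) / sigma
        if abs(z) > threshold:
            flagged.append((i, value, z))
    return flagged


# Hypothetical bytes-per-minute samples for one host.
baseline = [1200, 1150, 1300, 1250, 1180, 1220, 1270, 1210]
observed = [1190, 1260, 98000, 1230]

flagged = volumetric_outliers(baseline, observed)
for index, value, z in flagged:
    print(f"sample {index}: {value} bytes/min is {z:.1f} standard deviations from normal")
```

The same function could be pointed at a timing dimension, such as the gaps between connections, which is the point of the vault analogy: each dimension is one more sensor an intruder has to slip past.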

The precision and scale of ML

So why then can’t we just keep using lists of bad things and lists of good things? Why do we need machine learning in security analytics and what unique value does it bring us? The first thing I want to say here is that we are not religious about machine learning or AI. To us, it is just another tool in the larger analytics pipeline. In fact, the most helpful analytics comes from using a bit of everything.

If you hand me a list and say, “If you ever see these patterns, let me know about it immediately!” I’m good with that. I can do that all day long and at very high speeds. But what if we are looking for something that cannot be known before the list is made? What if what we are looking for cannot be seen but only inferred? The shadows of the objects but never the objects, if you will. What if we are not really sure what something is or the role it plays in the larger system (i.e., categorization and classification)? These questions are where machine learning has contributed a great deal to security analytics. Let’s point to a few examples.

The essence of Encrypted Traffic Analytics

Encryption has made what was observable in the network impossible to observe. You can argue with me on this, but mathematics is not on your side, so let’s just accept the fact that deep packet inspection is a thing of the past. We need a new strategy, and that strategy is the power of inference. Encrypted Traffic Analytics is an invention at Cisco whereby we leverage the fact that all encrypted sessions begin unencrypted and that the routers and switches can send us an “Observable Derivative.” This metadata coming from the network is a mathematical shadow of the payloads we cannot inspect directly because they are encrypted. Machine learning helps us train on these observable derivatives so that if their shape and size over time match those of some malicious behavior, we can bring this to your attention, all without having to deal with decryption.

Why is this printer browsing Netflix?

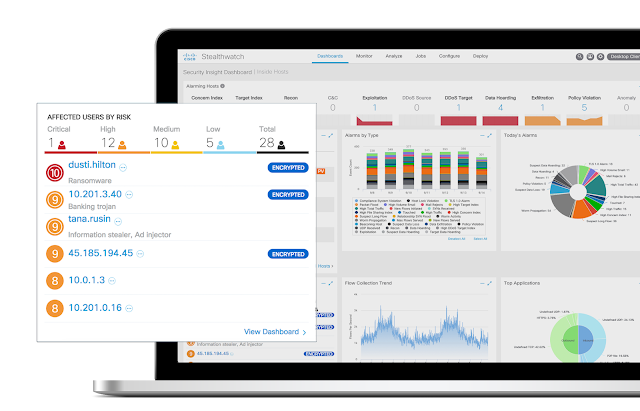

Sometimes we are lucky enough to know the identity and role of a user, application, or device as it interacts with systems across the network. The reality is, most days we are far from 100% on this, so machine learning can help us cluster network activity to make an assertion like, “based on the behavior and interactions of this thing, we can call it a printer!” When you are dealing with thousands upon thousands of computers interacting with one another across your digital business, even if you had a list at some point in time, it is likely not up to date. The value of this labeling is not just that you have objects with the most accurate labels, but that you can infer suspicious behavior based on each object’s trusted role. For example, if a network device is labeled a printer, it is expected to act like a printer, so its future behavior can be predicted. If one day it starts to browse Netflix or checks out some code from a repository, our software Stealthwatch generates an alert to bring it to your attention. With machine learning, you can infer from behavior what something is or, if you already know what something is, you can predict its “normal” behavior and flag any behavior that is “not normal.”
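Here is a rough sketch of the printer idea in two steps: guess a role from past behavior, then flag anything that role is not expected to do. This is a deliberate simplification. Instead of real clustering it matches a device against a few hand-written role profiles using Jaccard similarity, and the profiles, service names, and function names are all hypothetical.

```python
# Hypothetical role profiles: the services each role is expected to use.
ROLE_PROFILES = {
    "printer": {"ipp", "lpd", "snmp", "mdns"},
    "workstation": {"https", "dns", "smb", "ssh"},
    "dns_server": {"dns", "ntp"},
}


def jaccard(a, b):
    """Overlap between two sets, from 0.0 (disjoint) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def infer_role(observed_services):
    """Pick the role whose profile best matches what the device has done."""
    observed = set(observed_services)
    return max(ROLE_PROFILES, key=lambda role: jaccard(observed, ROLE_PROFILES[role]))


def off_role_activity(role, observed_services):
    """Return the services that the given role is not expected to use."""
    return set(observed_services) - ROLE_PROFILES[role]


# Step 1: infer the role from historical behavior.
history = ["ipp", "snmp", "mdns"]
role = infer_role(history)

# Step 2: compare today's behavior against that role.
today = ["ipp", "video-streaming", "git"]
surprises = off_role_activity(role, today)
print(f"device looks like a {role}; unexpected activity: {sorted(surprises)}")
```

A production system would learn the profiles from telemetry rather than hard-code them, but the shape of the reasoning is the same: label first, then hold the device to its label.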

Pattern matching versus behavioral analytics

Lists are great! Hand me a high-fidelity list and I will hand you back high-fidelity alerts generated from that list. Hand me a noisy or low-fidelity list and I will hand you back noise. The definition of machine learning attributed to Arthur Samuel in 1959 is “Field of study that gives computers the ability to learn without being explicitly programmed.” In security analytics, we can use it for just this and have analytical processes that implicitly program a list for you given the activity they observe (the telemetry they are presented with). Machine learning helps us implicitly put together a list that could not have been known a priori. In security, we complement what we know with what we can infer through negation. A simple example would be “if these are my sanctioned DNS servers and activities, then what is this other thing here?!” Logically, instead of saying something is A (or a member of set A), we are saying it is not-A, but that is only practical if we have already closed off the world to {A, B}: not-A is B if the set is closed. If, however, we did not close off the world to a fixed set of members, not-A could be anything in the universe, which is not helpful.
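The DNS example can be sketched in a few lines. The closed world here is "all DNS traffic" (approximated, as an assumption, by destination port 53), set A is the sanctioned servers, and not-A is whatever else is answering DNS queries. The addresses, the flow tuple layout, and the function name are made up for illustration.

```python
# Set A: the DNS servers we have sanctioned (hypothetical addresses).
SANCTIONED_DNS = {"10.0.0.53", "10.0.1.53"}


def unsanctioned_dns(flows, sanctioned=SANCTIONED_DNS):
    """Map each unsanctioned DNS destination to the set of clients using it.

    flows is an iterable of (src_ip, dst_ip, dst_port) tuples. Filtering on
    port 53 is what closes the world: we only reason about DNS traffic, so
    "not sanctioned" means "some other DNS server", not "anything at all".
    """
    hits = {}
    for src, dst, port in flows:
        if port == 53 and dst not in sanctioned:
            hits.setdefault(dst, set()).add(src)
    return hits


# Hypothetical flow records.
flows = [
    ("10.0.2.17", "10.0.0.53", 53),      # sanctioned DNS
    ("10.0.2.17", "203.0.113.9", 53),    # DNS to an unknown server
    ("10.0.2.40", "203.0.113.9", 443),   # not DNS, outside the closed world
    ("10.0.2.21", "10.0.1.53", 53),      # sanctioned DNS
]

suspects = unsanctioned_dns(flows)
for server, clients in suspects.items():
    print(f"what is this other thing here?! {server} used by {sorted(clients)}")
```

Without the port filter, the complement of the sanctioned set would be every destination on the network, which is the "anything in the universe" problem described above.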

Useful info for your day-to-day tasks

I had gone my entire career measuring humans as if they were machines, and now I am measuring humans as humans. We cannot forget that no matter how fancy we get with the data science, if a human will in the end need to act on this information, they first need to understand it. I had gone my entire career thinking that the data science could explain the results, and while this is academically accurate, it is not helpful to the person who needs to understand the analytical outcome. The sense-making of the data is square in the domain of human understanding, and this is why the only question we want to ask is “Was this alert helpful?” Yes or no. And that’s exactly what we do with Stealthwatch. At the end of the day, we want to make sure that the person behind the console understands why an alert was triggered and whether it helped them. If the “yeses” we’ve received, scoring in the mid-90% range quarter after quarter, are any indication, then we’ve been able to help a lot of users make sense of the alerts they’re receiving and use their time more efficiently.