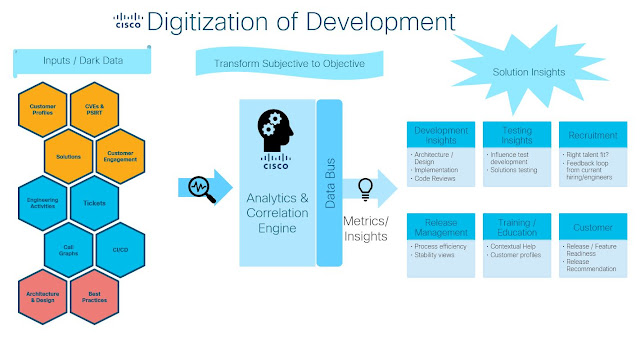

Business leaders often question software development processes to gauge their effectiveness, to verify that release quality is maintained across all products and features, and to ensure smooth customer deployments. Although teams provide data from multiple perspectives, I often see them struggle to respond in a meaningful way. In particular, it is hard for them to be succinct without getting lost in nitty-gritty details and dependencies. Is there a way to measure release quality objectively and ensure an expected level of quality? Absolutely!

With more than two decades in the industry, I have a thorough understanding of the software development life cycle, including its processes, metrics, and measurement. As a programmer, I have designed and developed numerous complex systems, led the software development strategy for CI/CD pipelines, modernized processes, and automated execution. I have also delivered customer journey analytics for a wide range of software releases, helping our business grow to scale. Drawing on that background, I would like to share my thoughts on our software development process, on product and feature release quality, and on strategies for preparing successful product deployments. I look forward to working with you to build customer confidence through high-quality software deployments.

Before I begin, here is how I define a few key terms:

◉ Product Software Release: A mix of new and enhanced features and fixes for defects found internally or by customers. A release may also contain operational elements related to installation or upgrades.

◉ Software Release Quality: Elements such as content classification, development and test milestones, code and test-suite quality, and regressions or collateral, used to track release readiness before deployment.

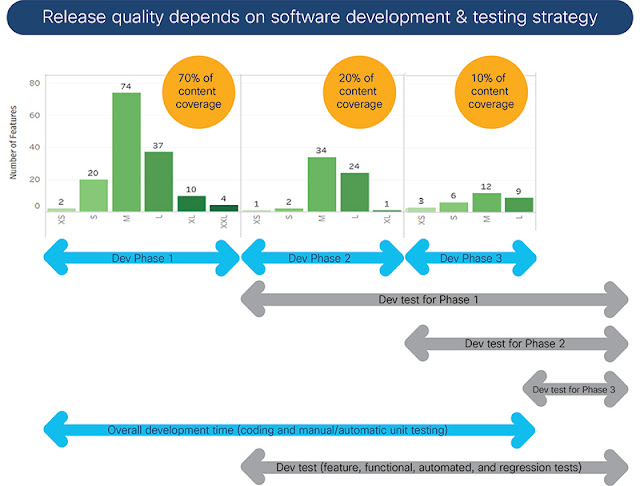

◉ Release Content: A classified list of features and enhancements with development effort estimates. For example, we may use T-shirt sizes to classify development effort (including coding, unit testing, fixing bugs found in unit testing, and unit test automation): Small (less than 4 weeks), Medium (4-8 weeks), Large (8-12 weeks), and Extra-Large (12-16 weeks). Classify feature-testing effort the same way.

◉ Release Quality and Health: Criteria for quality before customer deployment, with emphasis on code and feature development processes, the corresponding tests, and overall release readiness.
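The T-shirt classification in the Release Content definition can be expressed as a small lookup. This is a minimal sketch, not a prescribed tool: because the example ranges share endpoints (4-8, 8-12), it assumes each upper bound is exclusive, and returning `None` for estimates above 16 weeks is also an assumption. The function name and the `backlog` entries are hypothetical.

```python
from typing import Optional


def tshirt_size(effort_weeks: float) -> Optional[str]:
    """Map a development-effort estimate (in weeks) to a T-shirt size.

    Assumes each range's upper bound is exclusive (4 weeks is Medium,
    8 is Large, 12 is Extra-Large) and that 16 weeks is the inclusive ceiling.
    """
    if effort_weeks < 0:
        raise ValueError("effort estimate must be non-negative")
    if effort_weeks < 4:
        return "Small"
    if effort_weeks < 8:
        return "Medium"
    if effort_weeks < 12:
        return "Large"
    if effort_weeks <= 16:
        return "Extra-Large"
    # Beyond 16 weeks the scheme defines no size; by assumption such a
    # feature should be split before it is planned into a release.
    return None


backlog = {"feature-a": 6, "feature-b": 2, "feature-c": 14}  # hypothetical estimates in weeks
sizes = {name: tshirt_size(weeks) for name, weeks in backlog.items()}
print(sizes)  # {'feature-a': 'Medium', 'feature-b': 'Small', 'feature-c': 'Extra-Large'}
```

The same function can classify feature-testing effort, since the text suggests sizing testing the same way.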

Through this lens, let’s view the journey of our Polaris release. Before we do, let me emphasize that quality can never be an afterthought; it has to be integral to the entire process from the very beginning. Every aspect of software development and release logistics requires a quality-conscious culture. I believe there are four distinct phases, or checkpoints, for achieving this goal:

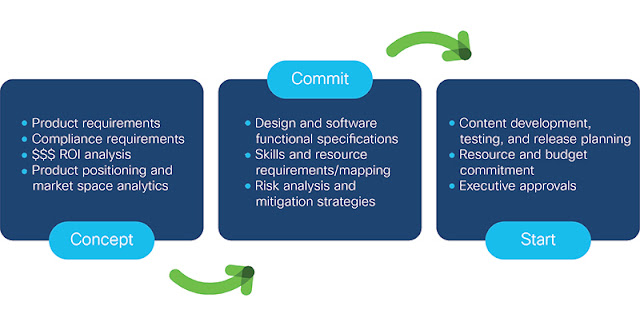

◉ Release content, execution planning, and approvals: In this phase we must get our act together. Good planning yields great results, and anticipating issues and executing on a mitigation plan is critical. Focus sharply on planning the features that will be developed and tested. For complex and large features, allocate work in a 70:20:10 ratio: seventy percent of these challenging features should be developed first and tested early in the release cycle, twenty percent in the next cycle, and the remaining ten percent toward the end of the cycle. Distribute small, medium, and test-only features throughout the development cycle according to resource availability. This way, most of the completed code can be tested early and in parallel! This helps us drive shift-left best practices and make them integral to our organization's culture.
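The 70:20:10 allocation can be sketched as a function that splits a priority-ordered list of complex features into three delivery windows. This is an illustrative sketch under stated assumptions: the input is assumed to be sorted hardest-first, the window names `early`, `next`, and `late` are hypothetical labels, and rounding uses the largest-remainder method (our choice, not something the text specifies) so the counts always add up to the number of features.

```python
from typing import Dict, List, Sequence

# The 70:20:10 split from the text, as integer percentages to avoid
# floating-point rounding. Window names are hypothetical labels.
PHASE_SHARES = (("early", 70), ("next", 20), ("late", 10))


def allocate_70_20_10(features: Sequence[str]) -> Dict[str, List[str]]:
    """Split complex/large features into early, next, and late windows.

    Assumes `features` is already sorted hardest-first, so the most
    challenging work lands in the early window.
    """
    n = len(features)
    counts = [pct * n // 100 for _, pct in PHASE_SHARES]
    remainders = [pct * n % 100 for _, pct in PHASE_SHARES]
    # Largest-remainder rounding: hand leftover slots to the windows with
    # the largest remainders; ties go to the earlier window (sorted is stable).
    leftover = n - sum(counts)
    by_remainder = sorted(range(len(counts)), key=lambda i: remainders[i], reverse=True)
    for i in by_remainder[:leftover]:
        counts[i] += 1

    plan: Dict[str, List[str]] = {}
    start = 0
    for (name, _), count in zip(PHASE_SHARES, counts):
        plan[name] = list(features[start:start + count])
        start += count
    return plan


large_features = [f"feature-{i}" for i in range(1, 11)]  # hypothetical, hardest first
plan = allocate_70_20_10(large_features)
print({window: len(items) for window, items in plan.items()})
```

With ten features this yields 7, 2, and 1 per window; small, medium, and test-only features are left out on purpose, since the text distributes them by resource availability instead.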

◉ Phase containment, schedules, and quality tracking: This phase is the core of execution. We need to build a framework for success that ensures quality. The key is to develop fast, tackle the complex work early, and allow ample time for soak testing. Phase containment is essential for success. During this phase, focus on development and design issues, automation, code coverage, code review, static analysis, code complexity, and code-churn analytics to help build quality. Build metrics and measurement around these elements, and adhere to development principles. If any features miss their schedule or quality checkpoints, we must be prepared to defer them and remove them from the release train. Quality metrics should include the number of features that have met their development schedule, completed feature/functional testing with 100% execution, and can claim a 95% pass rate! If we follow an agile development model, each development and validation task must be tracked per sprint. We must document defects found in unit testing at the end of each sprint, especially if they carry over from one sprint to the next. Daily defect tracking and weekly reviews with executives will also bring the required attention and visibility.

The following image illustrates one such scenario:

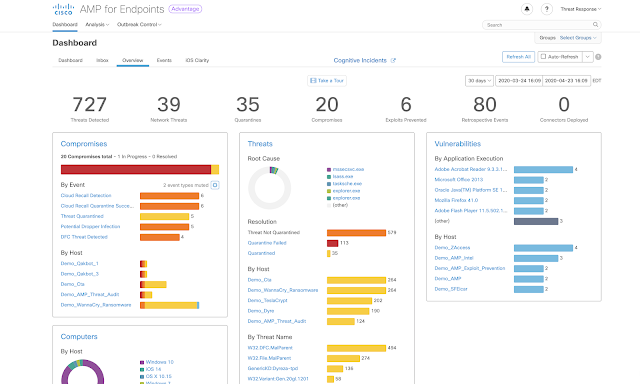
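The phase-containment checkpoint described above (schedule met, 100% test execution, 95% pass rate, otherwise defer) can be sketched as a simple triage. This is a hypothetical model rather than the output of any particular tracking tool: the `FeatureStatus` fields are illustrative, and treating 95% as an inclusive minimum is an assumption.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class FeatureStatus:
    """Hypothetical per-feature record; field names are illustrative only."""
    name: str
    met_schedule: bool
    tests_planned: int
    tests_executed: int
    tests_passed: int


def meets_quality_checkpoint(f: FeatureStatus, min_pass_pct: int = 95) -> bool:
    """Schedule met, 100% of planned tests executed, pass rate >= min_pass_pct."""
    fully_executed = f.tests_planned > 0 and f.tests_executed == f.tests_planned
    # Integer comparison avoids floating-point edge cases at exactly 95%.
    pass_ok = f.tests_passed * 100 >= min_pass_pct * f.tests_executed
    return f.met_schedule and fully_executed and pass_ok


def triage(features: List[FeatureStatus]) -> Tuple[List[str], List[str]]:
    """Split features into those that stay on the release train and those to defer."""
    keep = [f.name for f in features if meets_quality_checkpoint(f)]
    defer = [f.name for f in features if not meets_quality_checkpoint(f)]
    return keep, defer


features = [
    FeatureStatus("feature-a", True, 100, 100, 97),
    FeatureStatus("feature-b", True, 100, 92, 92),  # execution incomplete
    FeatureStatus("feature-c", False, 40, 40, 40),  # missed schedule
    FeatureStatus("feature-d", True, 20, 20, 19),   # exactly 95% pass rate
]
keep, defer = triage(features)
print(f"{len(keep)} of {len(features)} features meet the checkpoint; defer: {defer}")
```

Running this per sprint gives the "number of features that met the checkpoint" metric directly, and the `defer` list is the candidate set for removal from the release train.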

◉ Testing and defect management convergence: This phase can make or break a release. Development is complete and a certain level of quality has been achieved (though the release is not yet ready), so this phase entails more rigorous testing. System integration testing, solution and use-case-driven testing, and performance and scale testing provide greater insight into the quality of the release. Use this phase to track test completion percentages, pass-rate percentages, and your defect management metrics. Defect escape analysis highlights development gaps and is a good learning opportunity. Another invaluable metric is the trend of incoming defects. If things are working as they should, you will see a steady decline each week, and the incoming defect rate must keep falling toward the end of the testing cycle!

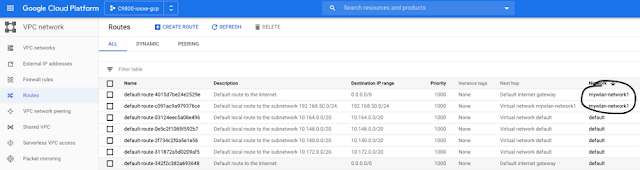
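The convergence metrics in this phase (test completion percentage, pass-rate percentage, and the weekly trend of incoming defects) can be computed with a few helpers. This is a minimal sketch; the function names and sample counts are hypothetical. It offers two views of the trend: a strict week-over-week check that matches "a steady decline each week", and a least-squares slope, our addition, which is more forgiving of a single noisy week.

```python
from typing import Sequence


def pct(part: int, whole: int) -> float:
    """Percentage helper; returns 0.0 when the denominator is zero."""
    return 100.0 * part / whole if whole else 0.0


def weekly_slope(counts: Sequence[int]) -> float:
    """Least-squares slope of incoming defects per week (negative means declining)."""
    n = len(counts)
    if n < 2:
        raise ValueError("need at least two weeks of data")
    mean_x = (n - 1) / 2
    mean_y = sum(counts) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(counts))
    den = sum((x - mean_x) ** 2 for x in range(n))
    return num / den


def steadily_declining(counts: Sequence[int]) -> bool:
    """True only if every week has fewer incoming defects than the week before."""
    return all(later < earlier for earlier, later in zip(counts, counts[1:]))


# Hypothetical figures: 470 of 500 planned tests run, 441 of those passed.
print(f"completion {pct(470, 500):.1f}%, pass rate {pct(441, 470):.1f}%")
incoming = [42, 35, 30, 22, 15]  # hypothetical weekly incoming-defect counts
print(f"slope {weekly_slope(incoming):.1f}/week, steady decline: {steadily_declining(incoming)}")
```

Reporting both views lets a review distinguish a genuine reversal from a one-week blip.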

◉ Ready, set, go: In this phase, conduct a stringent review of readiness indicators, carry out checks and balances, address operational issues, finalize documentation content, and prepare for final testing. Final testing may include alpha or canary releases, early field trials in a real customer environment, or tests in a production environment to uncover residual issues. This phase provides accurate insight into the quality of the release.
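A readiness review like this one can be reduced to a go/no-go checklist that also reports which items are still open. This sketch is an assumption about how one might record the indicators; the checklist entries below are hypothetical examples based on the checks listed above.

```python
from typing import Dict, List, Tuple


def go_no_go(indicators: Dict[str, bool]) -> Tuple[bool, List[str]]:
    """Return (go, open_items); go is True only if every indicator is satisfied."""
    if not indicators:
        # An empty checklist should never produce a vacuous "go".
        raise ValueError("no readiness indicators supplied")
    open_items = [name for name, ok in indicators.items() if not ok]
    return (not open_items, open_items)


# Hypothetical indicators drawn from the checks listed above.
readiness = {
    "operational issues addressed": True,
    "documentation content finalized": True,
    "canary or early field trial completed": False,
}
go, blockers = go_no_go(readiness)
print("GO" if go else "NO-GO, open items: " + ", ".join(blockers))
```

Returning the open items, not just a boolean, keeps the answer to executives concise while still naming what blocks the release.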

As you can see, there are many ways to measure the quality and health of a release. Building a process around developing quality code and maintaining discipline in phase containment are the key ingredients. It is important to build a culture that tracks the progress of shift-left initiatives and focuses on code quality and schedule discipline. Best of all, data-driven analytics and metrics will empower us to answer executives' questions with confidence!